torvuswalds


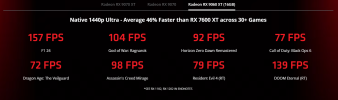

Exactly. This was not a review; it was an opinion piece that shows no real quantifiable data, and neither does his YouTube video. A proper review would be a comparison with the 5060 at 1080p and 1440p, including 1440p with FSR/DLSS (if I'm not mistaken, the 9060 XT has access to FSR4, correct?). What I like from Steve is his numbers; his opinions are literally as bad as any tech influencer's out there. I am an engineer, so I form my own opinion after seeing the numbers and the methodology.

That's the only thing I like from Hardware Unboxed. All the rest, especially Tim's bias, is absolutely unbearable. The guy literally gives Nvidia a pass on anything, but if AMD does something similar, he goes on a crusade against them.

That 8GB drama is the perfect example. Where is all the backlash against Nvidia for releasing the 5060 Ti and the 5060 in 8GB variants? Ah yeah, they didn't bother to go to the same lengths, even though those GPUs are more expensive for the same performance... and their drivers are still broken... and they didn't provide review drivers for the 5060 launch.

I would even argue that the 5060 non-Ti has the same MSRP as the 9060 XT 8GB...

I prefer the numbers as well, but he deliberately takes a card, pushes it past limits that HE KNOWS it has, and then is upset that it doesn't perform the same as the 16GB version.