Oh man, I see a bright future for my next laptop with the 6800 junior. I'm looking at you, Asus, MSI, Acer, and Alienware. If they get the TDP down to maybe 225 watts, it will just be an ***-kicking laptop. If they could get a Vega 56 into laptops, given the way it sucked down power, and the RX 5700M (180 watt TDP) too, they can do it with the baby 6800.

AMD Radeon RX 6800 Review: Excellent Performance, High-end Value

- Thread starter Steve

Irata

Posts: 2,290 +4,004

All these new cards are *overkill* for the average gamer and are priced accordingly. If there are enough people who are convinced/hyped into buying, well then Nvidia and AMD have done their marketing job well.

And of course to cover development costs, you release the biggest margin and profit cards first. Again, that's AMD and Nvidia doing their business jobs well.

Last gen, rational people could buy the 1660 Super or 5600XT and get an actual decent deal for price/performance. Hell, even the 1650 Super was a good performing card for the money.

But you gotta wait for those actually good deal cards to trickle out 6-12 months later and consider that maybe you don't *need* 144 fps at 1440p to have a good gameplay experience.

Personally, I want to move from 1080p 60 fps (limited by the 60hz monitor) to 1440p > 60 fps.

Of course, that does not have to be at the max possible quality settings so as always I will adjust settings on a per-game level (including RT if offered) to get the best quality vs. fps combination.

These settings do depend on the type of game. For fps, it's speed over quality, for others I may be willing to sacrifice some fps for better quality.

Either way, both the 3070 and 6800 and up are too expensive (in terms of value for money) for me, so I'll be looking at the 3060(ti) or 6700(XT) when they are released.

I've been saying for a while that the 5700XT has been overpriced ever since it launched. AMD sold the RX480/580 for £250 in 2016/2017, when 16nm was a fairly new node. Now that we're on the mature side of 7nm, I would expect the 5700XT to cost no more than £250, not the £350-400 it currently costs.

It is interesting that 11 years ago, a graphics card sporting two 330 mm2 GPUs, eight 256MB GDDR5 modules, and a PCIe switch cost only $599, whereas a card sporting one 520 mm2 GPU, eight 2GB GDDR6 modules, and no PCIe switch costs $400 more.

But let's take inflation into account - one could pick any random inflation calculator, and you'd get something like $730 in 2020 dollars for that $599. If correct, that would make the RX 6900 XT $269 more expensive, an increase of 37%. Seems ridiculous, yes? Well, at the time, Tom's Hardware was reporting that some brands of HD 5970 were going for as high as $679 soon after launch, and the price of the RX 6900 XT now was matched by the GTX 690 in 2012 (it was $999 too) and, in the case of the RTX 3090, by the RX 390 X2 in 2015 (at a mere $1399). And let's not even think about the absurd Titan Z...
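For anyone who wants to check that arithmetic, here is a rough sketch. It takes the $730 inflation-adjusted figure and the $999 RX 6900 XT price straight from the paragraph above; the function name `premium` is just made up for illustration.

```python
def premium(actual_price, adjusted_price):
    """Return (dollar difference, percentage increase) of actual over adjusted."""
    diff = actual_price - adjusted_price
    return diff, 100 * diff / adjusted_price


# $999 RX 6900 XT versus the HD 5970's $599 expressed as roughly $730 in 2020 dollars
diff, pct = premium(999, 730)
print(f"${diff} more expensive, an increase of {pct:.0f}%")
```

The inflation-adjusted figure itself depends on which calculator you use, so treat the percentage as approximate.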

Modern high end graphics don't have an easy escape route, when it comes to keeping the price down. The processors themselves are enormous now, and even if the wafer yields were really good, there's no way a 520mm2 chip could be sold for the same price as a 250mm2 one (I.e. the very first Navi offering), when manufactured on the same process.

By contrast, a Zen 2 chiplet (also manufactured on N7) is just 74 mm2, with the I/O chip being something like 120 mm2. Even Intel's hulking i9-10900K is only around 205 mm2. And CPUs don't require a complex PCB nor onboard memory.

Only Samsung and Micron manufacture 2 GB, 16 Gbps GDDR6 modules, and the only slower alternative from either of them is 14 Gbps. So the need for ever more bandwidth and local memory means that only the very fastest and biggest graphics DRAM is going to be used, and naturally, that's not cheap.

Of course, there's always a hefty mark-up on the top end models, but this is true of any 'best of the best' product. But given the size of the processors and memory requirements, it would take some exceptional circumstances for a card like the 6900 XT to be sold at $730.

Edit: As the inflation thing was piquing my curiosity, I got to wonder what cards in 2009 cost the same as the RX 6800 does now (accounting for the dollar inflation) - $580 now is roughly $474 in 2009 dollars; let's make it a simple $470.

Well, Anandtech was stating $430 to $450 (bar a cent each) for the dual-GPU Radeon HD 4870 X2 in March 2009. Tom's Hardware gave prices of around $465 for the (also dual-GPU) GeForce GTX 295 in November 2009. So the RX 6800 in 2009 prices is 2% to 10% extra - closer than I'd thought it would be.
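The 2% to 10% range can be reproduced with a few lines. This is only a sketch: it uses the unrounded $474 figure and the 2009 prices quoted above, and the dictionary labels and the `percent_extra` helper are made-up names.

```python
RX_6800_IN_2009_DOLLARS = 474  # $580 today, as estimated above

# 2009 street prices quoted above (USD)
prices_2009 = {
    "HD 4870 X2 (low)": 430,
    "HD 4870 X2 (high)": 450,
    "GTX 295": 465,
}


def percent_extra(price, reference):
    """How much more `price` is than `reference`, in percent."""
    return 100 * (price - reference) / reference


extras = {
    name: percent_extra(RX_6800_IN_2009_DOLLARS, price)
    for name, price in prices_2009.items()
}
for name, extra in extras.items():
    print(f"RX 6800 vs {name}: {extra:.1f}% more")
```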

> Ray tracing just seems like a huge waste of computational power at this moment. The results are really not worth the added computations.

If every advancement in visual quality had been dismissed as not worth the computational power, we'd still be playing Mario at 10000 fps. Saying you don't SEE much improvement, or, as Techspot put it, "slightly better shadows", is purely subjective. Ray tracing provides real-world lighting by simulating the physical behavior of light. That means reflection, refraction, dispersion, diffraction, polarization and... well, I don't know all the properties of light, but it's more than "better shadows". Improve on that, add sharper textures, and you'll soon reach complete photorealism in games, which I would say is the ultimate goal of graphics cards. Yes, it should be noted that at this time it still needs a lot of improvement, and there is a noticeable difference in quality between Nvidia's RT and AMD's. So, is it worth it at this moment? If you care to check DXR benchmarks, it is very much playable even without DLSS. For the 3070: 72 to 132 fps at 1080p and 47 to 97 fps at 1440p. For the 3080: 68 to 150 fps at 1440p and 32 to 72 fps at 4K. Obviously, DLSS adds a few more frames, so of course it is worth adding the visual quality, especially if you're buying a high-end card and expect that all the big upcoming games will have to support it.

Avro Arrow

Posts: 3,721 +4,822

I'm sorry but that inflation argument doesn't hold water and I'll show you why. Inflation is a constant. It never stops and it has existed since the dawn of capitalism. It is one of capitalism's greatest weaknesses. So, let's see just how inflation has affected video cards over time:

GTX 295 (January 2009): $500

GTX 480 (March 2010): $500

GTX 580 (November 2010): $500

GTX 680 (March 2012): $500

GTX 780 (May 2013): $500

GTX 980 (September 2014): $550 <- Huh?

GTX 1080 (May 2016): $600 <- Huh?

RTX 2080 (September 2018): $700 <- WTH????

RTX 3080 (November 2020): $700

Advancements in efficiency with regard to the manufacturing processes across the board cause manufacturing costs to DROP which more than makes up for inflation when it comes to tech. Note how nVidia's price didn't budge for five years, remaining at $500USD. Then over the next four, it increased by 40%.

This is not inflation because if it were, nVidia wouldn't have held the cost of their cards for 5 years. This is not inflation because the US Dollar hasn't lost 40% of its value over the last 6 years.

Whether you like it or not, this is corporate greed. US-based corporations are so obsessed with their stock value and short-term profits that most of them don't live for very long. Their approach to business means that you'll never see an American corporation as old as Lloyd's of London or the Hudson's Bay Company. They will keep squeezing the lemon until the lemon explodes. This is not a new thing.

Avro Arrow

Posts: 3,721 +4,822

I agree with you completely, there's just one problem. If you use the word "overpriced" to describe the RX 5700 XT, what word do you use to describe everything else?

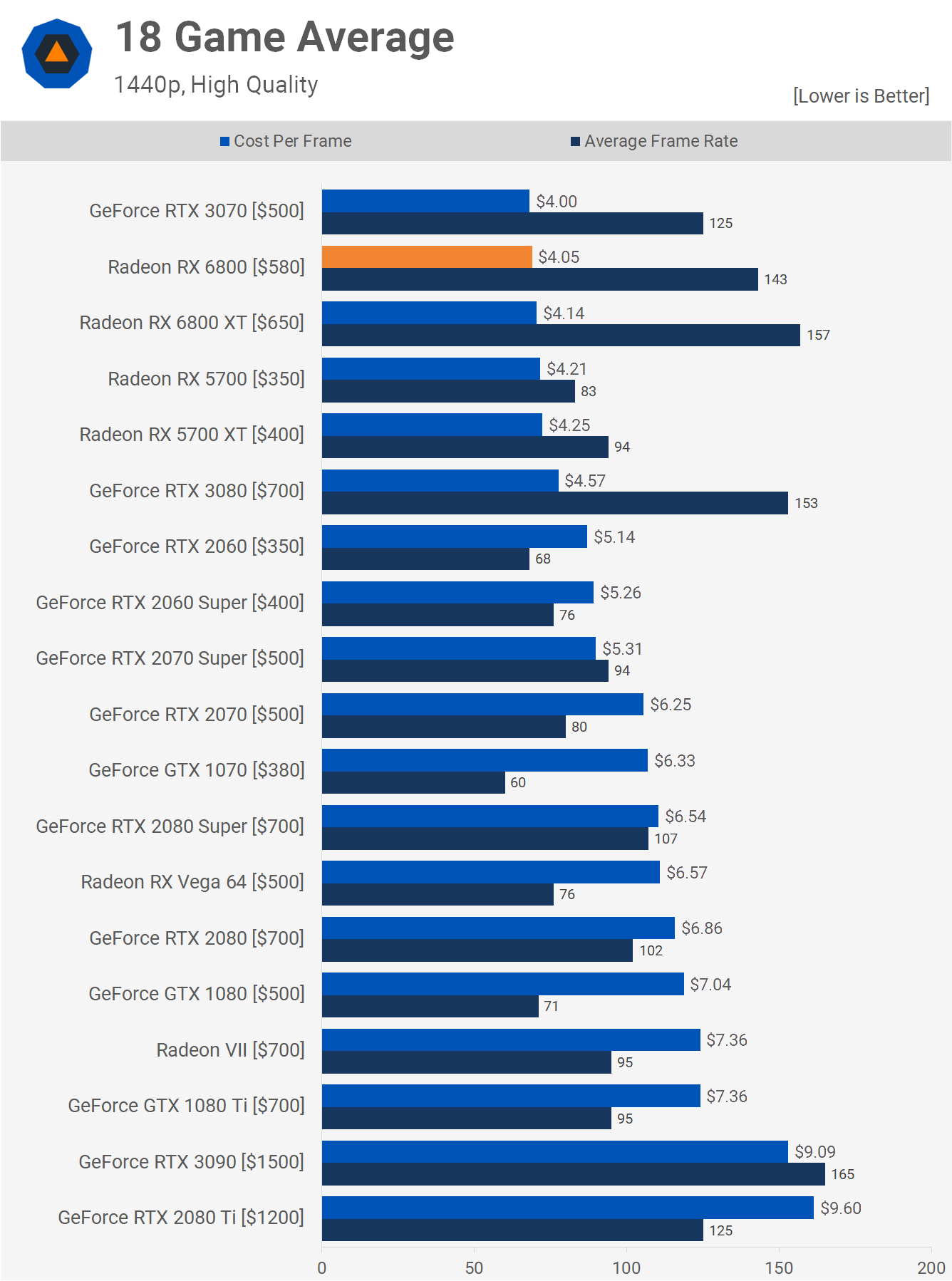

At 1080p, you can see that the RX 5700 XT is the second-LEAST overpriced card released in the last two years.

At 1440p, it is the fifth-least overpriced card released in the last two years.

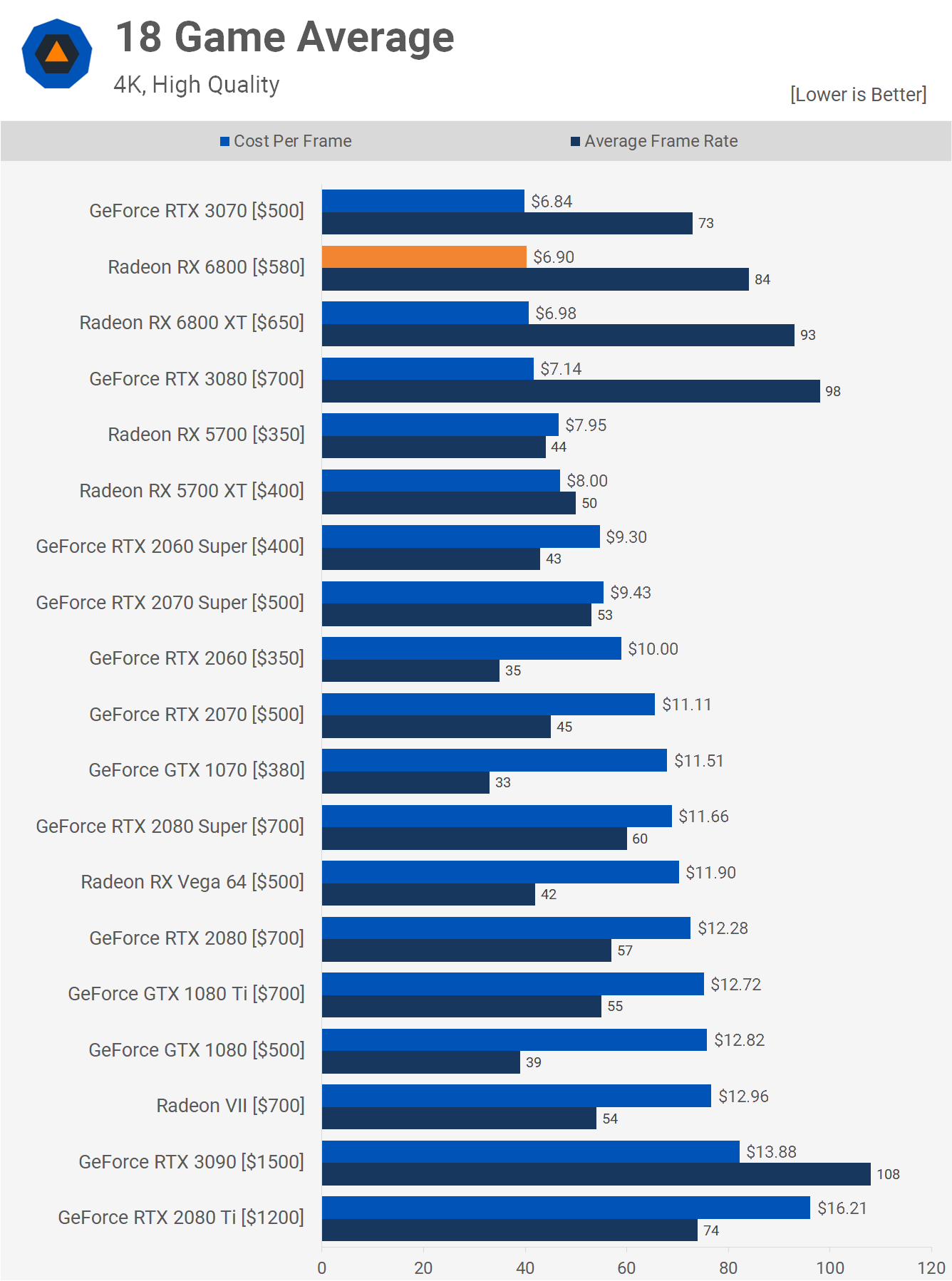

At 2160p, it is the sixth-least overpriced.

So the problem is that yes, it's overpriced but what word do we use to describe the vast majority of the video card market that is an even worse deal than the RX 5700 XT?

> Advancements in efficiency with regard to the manufacturing processes across the board cause manufacturing costs to DROP which more than makes up for inflation when it comes to tech.

That's only true for manufacturing on the same process and, even then, only when economies of scale apply. There is nothing inherent in VLSI circuit production, compared to other forms of manufacturing, that automatically reduces costs over time. But even if there were, R&D, marketing, and shipping costs do increase, partly because of inflation and partly because designing multilayer, multi-billion-transistor chips is becoming increasingly challenging and requires more resources.

But do note that I never said that inflation was the sole reason behind the prices we see today - I even pointed out that when accounting for that, the likes of the RX 6900 XT was 37% higher than an inflated HD 5970.

The GeForce GTX 780 was released with an MSRP of $649, and don't forget that the Founders Edition of the GTX 1080 was $699. The same applies to the 2080, where the FE model was $799. So Nvidia has been charging high prices for longer than the list suggests.

It could be argued that the 480 and 580 were the same price, because they ostensibly used the same chip: the GF110 in the 580 is a minor layout alteration of the GF100 in the 480, to reduce power consumption. Both were manufactured on the same process, too.

The GK104 in the 680 wasn't a large chip, even by the standards of the time, at just 294 mm2 with 3.54b transistors - the GK110 in the 780, made on the same process as the GK104, is far larger: 561 mm2 with 7.08b transistors.

So the price hike over the 680 should be expected. The GM204 in the 980 contained substantially fewer transistors than the GK110, at 5.2b - so even though it used the same process, the chip was a lot smaller at 398 mm2. The GP104 in the 1080 was smaller again, at 314 mm2, but the complexity had increased once more, jumping back to just over 7b transistors.

By then, Nvidia were using a semi-custom process node with TSMC, and I doubt they would offer any price reduction for Nvidia to use it. A fully custom node was used for the TU104, in the 2080, and that was another big step up in complexity: 13.6b in a 545 mm2 die.

So when you add that information into the list, the changes in prices become a little more understandable:

GTX 295 - $500 - 470 mm2, 1.4b trans, TSMC '55nm'

GTX 480 - $500 - 529 mm2, 3.1b trans, TSMC '40nm'

GTX 580 - $500 - 520 mm2, 3.0b trans, TSMC '40nm'

GTX 680 - $500 - 294 mm2, 3.5b trans, TSMC '28HP'

GTX 780 - $649 - 561 mm2, 7.1b trans, TSMC '28HP'

GTX 980 - $550 - 398 mm2, 5.2b trans, TSMC '28HP'

GTX 1080 - $599/$699 - 314 mm2, 7.2b trans, TSMC '16FF'

RTX 2080 - $699/$799 - 545 mm2, 13.6b trans, TSMC '12FFN'

RTX 3080 - $699 - 628 mm2, 28.3b trans, Samsung '8N'
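One crude way to compare these is card price per mm2 of die, sketched below. The prices and die areas come from the list above; the GTX 295 is left out because it carries two GPUs, only the non-FE price is used for the 1080 and 2080, and the `dollars_per_mm2` helper is a made-up name. The card price covers far more than the die (memory, PCB, cooling, margins), so this is a rough indicator only.

```python
# (card, launch MSRP in USD, die area in mm2), taken from the list above
cards = [
    ("GTX 480", 500, 529),
    ("GTX 580", 500, 520),
    ("GTX 680", 500, 294),
    ("GTX 780", 649, 561),
    ("GTX 980", 550, 398),
    ("GTX 1080", 599, 314),
    ("RTX 2080", 699, 545),
    ("RTX 3080", 699, 628),
]


def dollars_per_mm2(price, area):
    """Card price divided by die area; not adjusted for inflation."""
    return price / area


per_area = {name: dollars_per_mm2(price, area) for name, price, area in cards}
for name, value in per_area.items():
    print(f"{name}: ${value:.2f} per mm2")
```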

So for the period of a constant $500, 2 chips were virtually the same, and one was a notable reduction in size. Nvidia would have had to absorb increases in their own costs, but they could be offset by the points just made. The real oddities, though, are the 980 and the 2080 - the former can be explained by the fact that it was on a very mature node, and also a lot smaller than its predecessor. The latter, specifically the FE version, was just ridiculously priced.

Now, understanding such prices isn't the same as accepting or condoning them, of course, because ultimately Nvidia were able to keep the price the same between the 295 and 480, over a 4 year period, despite moving to a notably larger and far denser chip, and on a new process node. But that was 11 years ago, a time when Nvidia was heavily focused on improving its gross margins, and it's interesting to note that in their 2010 financial report, they admit that average selling prices and competition play just as much of a factor in their gross margins as manufacturing costs and yields do.

I get the feeling that Nvidia first tested the waters with the price of the GTX 780, and found that the market wasn't ready for a $650+ not-quite-the-very-best graphics card. Cue the 980's drop, to sweeten the market ready for Pascal - which, as we know, sold extremely well. Corporates can only be as greedy as the market allows, and with the die cast by Pascal, it was no surprise to see the 2080 and 3080 hitting $700.

That said, the GA102 is absolutely massive - only the GV100 and GA100 are larger (at over 800 mm2 apiece). The wafer yields can't be all that great, so although it's $200 more, I'd argue that the 3080 is back to the $500 days, in terms of what you're getting for your money. Or put another way, I find the price of that far more acceptable than the price of the FE 1080 and 2080.

Freedom Now

Posts: 13 +11

It's a fine article but what's with the word "tad" more expensive when comparing to a 3070. It is 30% more and it's not 30% faster. For titles that support DLSS like COD you get a +85% with visuals on par with native rendering. 3070 is by far the best !/$ card in the market.

Check your math, the 6800 is 16% more than the 3070, not 30%. And it's 14.4% faster at 1440p, which is pretty good scaling with price for higher end cards.
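As a quick sanity check on those percentages, here is a small sketch. It assumes rounded prices of $580 for the 6800 and $500 for the 3070 (the $500 is an assumption, chosen to match the 16% figure), and takes the 14.4% performance lead at 1440p from the post above; `percent_more` is a made-up helper.

```python
def percent_more(a, b):
    """How much larger a is than b, in percent."""
    return 100 * (a - b) / b


price_6800 = 580  # USD, rounded
price_3070 = 500  # USD, rounded (assumption)

price_gap = percent_more(price_6800, price_3070)
perf_gap = 14.4  # % faster at 1440p, as quoted above

# Performance per dollar of the 6800 relative to the 3070 (1.0 means equal value)
relative_value = (1 + perf_gap / 100) / (1 + price_gap / 100)
print(f"Price gap: {price_gap:.0f}%, relative perf per dollar: {relative_value:.3f}")
```

A result just under 1.0 means the two cards are close to parity on performance per dollar at 1440p under these assumptions.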

If you play one of the few games which support DLSS 2.0, then the 3070 will be faster for those games, but for the vast majority of games out there, the 6800 will be faster.

Freedom Now

Posts: 13 +11

If Nvidia had priced Turing the same as Pascal and then lowered the price of Pascal, AMD would not be here today. If you go back 4 years, AMD was almost dead with a stock price of $2. AMD would have had to sell all their cards at a loss. Nvidia did AMD a favor, a favor Qualcomm and Intel would never do. People are not stupid. Spending $700 on something that brings you around 15 days' worth of entertainment a year is less than you would spend going on a vacation for 15 days.

Dimitrios

Posts: 1,388 +1,191

AMD's stock recovery has nothing to do with Nvidia and everything to do with their CPU division... namely Zen...

I guess some of you weren't around between the 90s and 2000s, when the flagship graphics card had a 1 square cm die and cost 500 bucks.

Now you can get the same die size GPU for less than 100.

I'm not trying to justify these ridiculous prices, I'm just saying that back then the price per die area was even higher.

NightAntilli

Posts: 929 +1,196

Having 8GB of VRAM will cripple the card in the very near future though. It's much safer to have 16GB of VRAM. That alone is worth the additional $80.

There's zero evidence to suggest that... perhaps in the DISTANT future... not the near future.

I've just bought the card, and after testing it with Metro Exodus, I'm nowhere near the numbers above at 4K. I can achieve those with MEDIUM settings, but not with ULTRA. Are those in-game numbers? Benchmark? What other settings might be involved? My numbers are closer to the ones KitGuru posted.

RIG:

3900x/ D15

Asrock B550m Phantom itx

Gigabyte NVME Gen4 2tb

ASRock Radeon 6800 Reference

Corsair SF750

Anyone with the same card with results far from the ones posted here?
