In brief: AMD has been stealing Intel's performance thunder for years, and now it wants to hold the energy-efficiency crown. To that end, the company will have to innovate fast enough to outperform the entire industry by 150 percent over the next three years.

For years, Intel sat on its CPU throne, gradually losing its drive to innovate in process technology. Instead, the company chose to take the "abnormally bad" Skylake platform and tweak it for several generations, right up until this year's Rocket Lake lineup. This has prompted Apple to transition its Mac products from x86 CPUs to its Arm-based custom silicon and left a lot of room for underdog AMD to make a comeback.

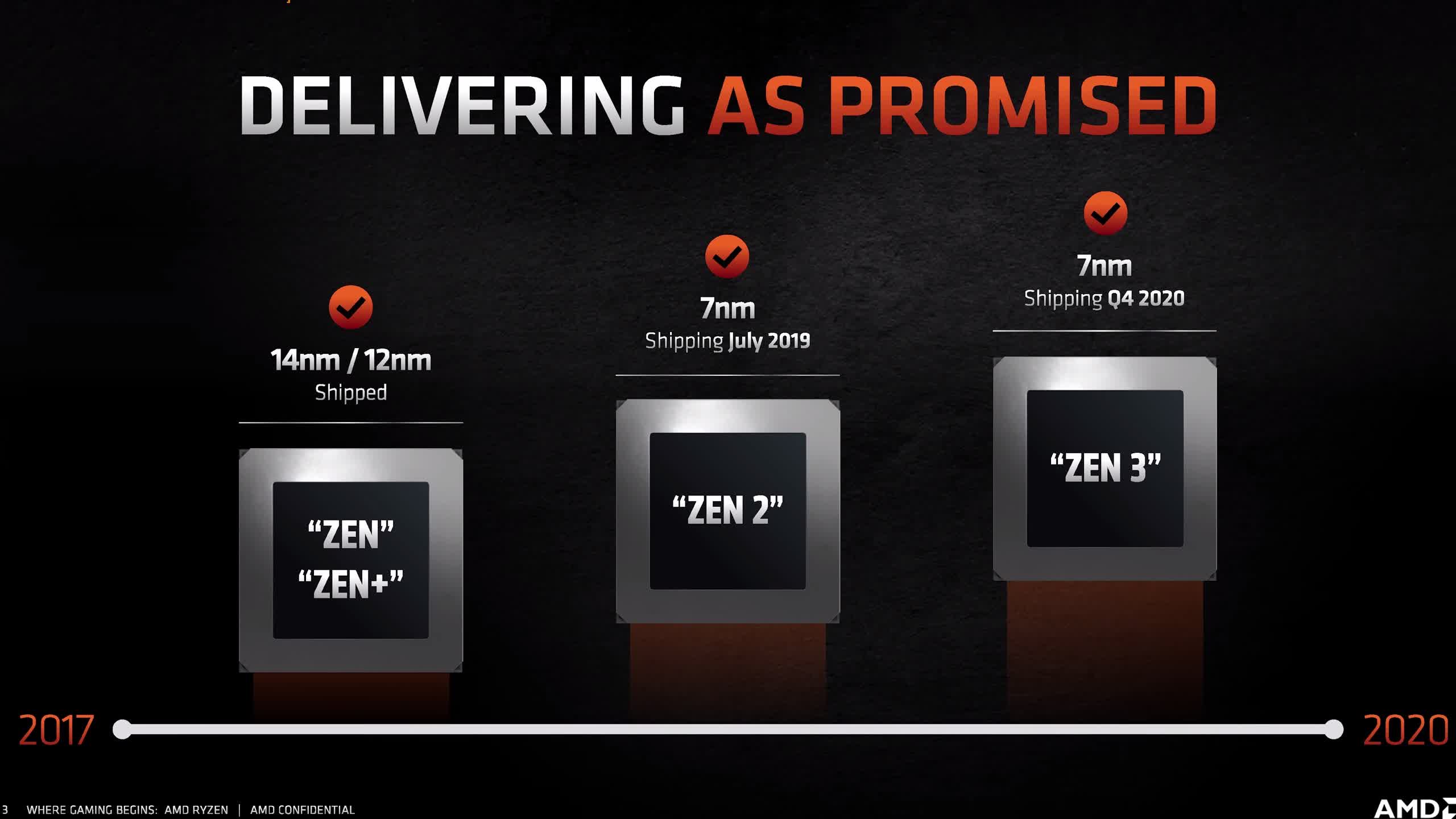

At this point, everyone who's been running a Ryzen-powered PC and following the progress of the Zen microarchitecture knows that AMD has so far delivered on its promise of significant performance improvements with each new generation of Ryzen CPUs.

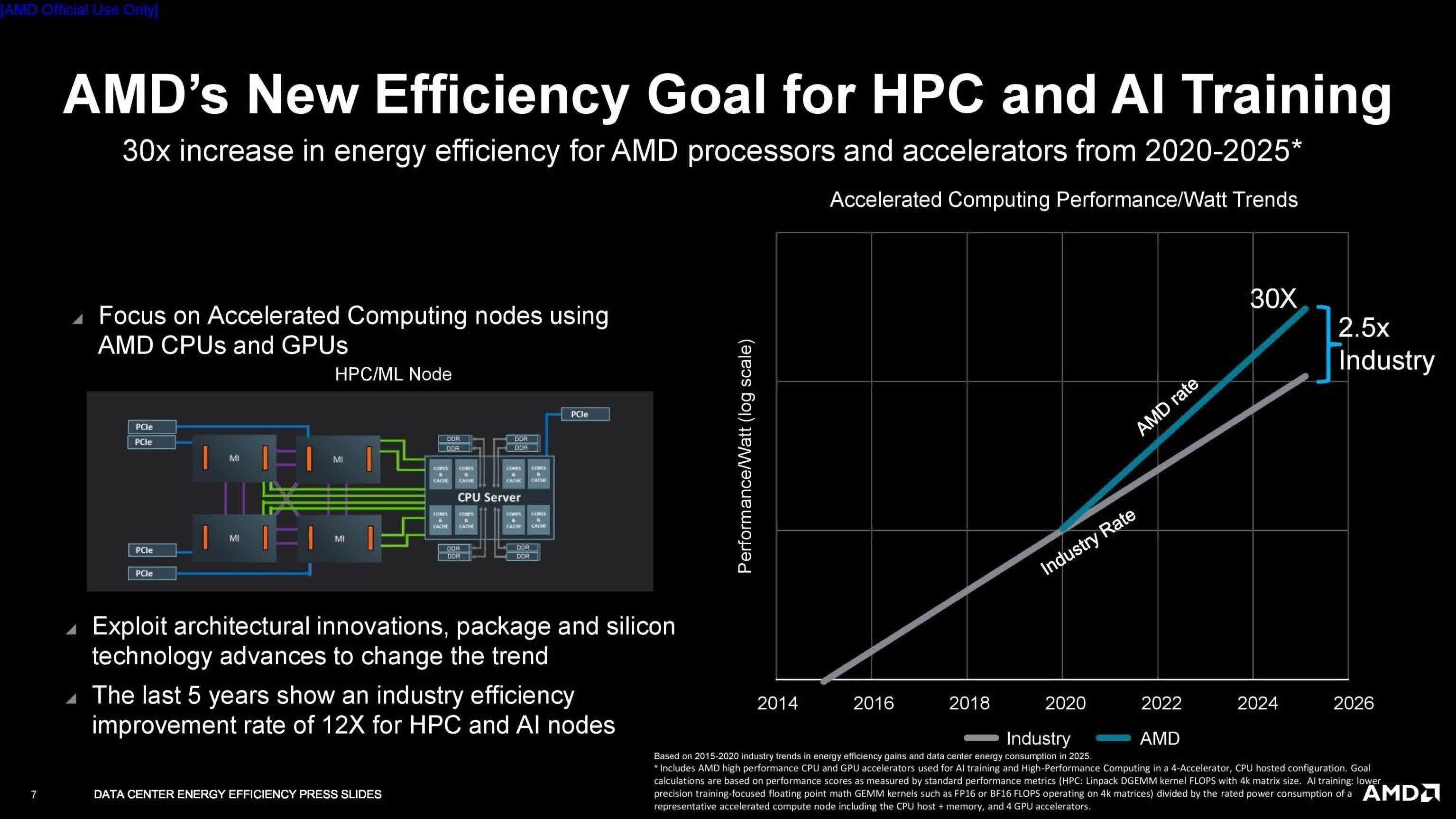

However, the Lisa Su-powered Team Red isn't stopping here. Today, the company announced its most ambitious goal yet: a 30-fold increase in the energy efficiency of its Epyc CPUs and Instinct AI accelerators by 2025. This would help data centers and supercomputers achieve high performance with significant power savings over current solutions.

If AMD achieves this goal, the energy required to perform a single calculation in high-performance computing tasks will have decreased by 97 percent, which would add up to billions of kilowatt-hours of electricity saved in 2025 alone.
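For readers wondering where the 97 percent figure comes from, here is a quick back-of-the-envelope sketch. It assumes "energy efficiency" means calculations per unit of energy, so a 30-fold gain leaves each calculation needing one-thirtieth of the energy it did before. The function name is made up for illustration and is not from AMD's announcement.

```python
def energy_reduction_percent(efficiency_gain: float) -> float:
    """Percent drop in energy per calculation when efficiency
    (calculations per unit of energy) rises by `efficiency_gain` times.

    Hypothetical helper for illustration only.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # Energy per calculation scales as 1 / efficiency.
    return (1.0 - 1.0 / efficiency_gain) * 100.0


reduction = energy_reduction_percent(30)
print(f"{reduction:.1f}% less energy per calculation")  # prints 96.7%
```

The result, 96.7 percent, rounds to the 97 percent quoted above.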

Increasing energy efficiency this much will involve a lot of engineering wizardry, including AMD's stacked 3D V-Cache chiplet technology. The company acknowledges the difficult task ahead of it, now that "energy-efficiency gains from process node advances are smaller and less frequent."

To put things in perspective, AMD is talking about outpacing the rest of the computing industry's energy-efficiency improvements by 150 percent, or improving 2.5 times as fast. The company has been eating Intel's lunch in servers as of late, and it currently holds the performance crown in the desktop and notebook departments. For now, Team Red has earned the benefit of the doubt, as it has been pumping innovation into CPUs and GPUs for almost five years with no signs of slowing down.

https://www.techspot.com/news/91485-amd-wants-make-chips-30-times-more-energy.html