In brief: AMD's philosophy with RDNA 3 was to develop better-value products that draw gamers away from Team Green's RTX 40 series offerings instead of going for the performance crown. However, removing the power limit on the RX 7900 XTX reveals that the underlying architecture is technically capable of reaching a higher performance tier.

Nvidia's GeForce RTX 4090 graphics card is the undisputed performance king and it probably will be for a while. Our own Steven Walton called it "brutally fast," which is an accurate description for a card that can be bottlenecked by relatively capable processors like the Ryzen 7 5800X3D even when playing games at 1440p.

AMD has chosen not to launch a competitor for the RTX 4090 and instead opted to offer an alternative to Nvidia's RTX 4080. During a Q&A session last year, the company explained the main reason behind this decision was its philosophy of building great value products for gamers, which is why it uses a chiplet architecture for its RDNA 3 GPUs. At the same time, we were told it didn't want to create a higher-end product with extreme power and cooling requirements just to prove that it can.

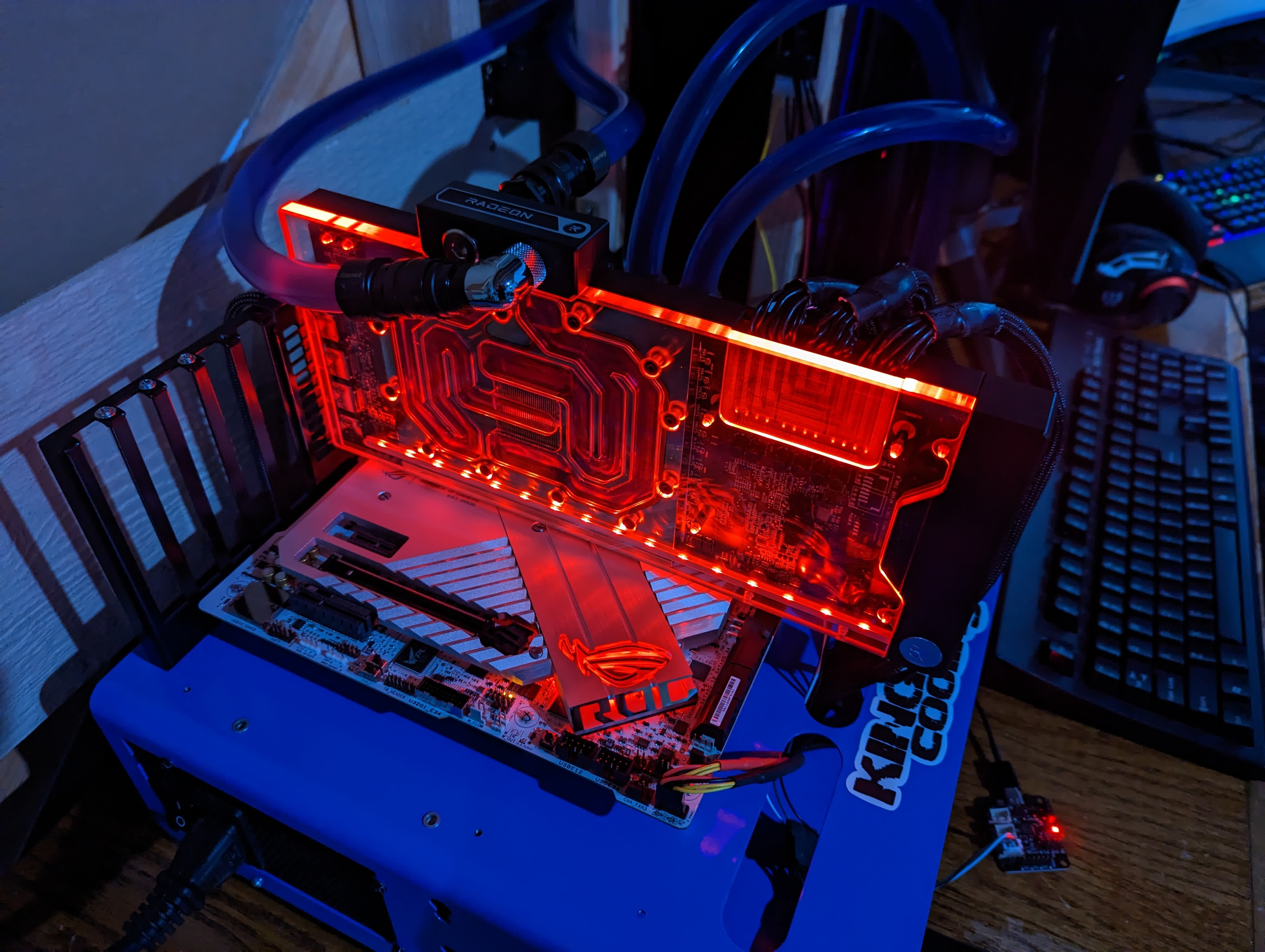

Image credit: jedi95

That said, an enthusiast who goes by "jedi95" on the AMD subreddit sought to test the limits of the Radeon RX 7900 XTX, and their experiment led to some interesting findings. For one, removing the power limits allowed the GPU clock speed to climb as high as 3,467 MHz. The average clock speed was around 3,333 MHz during benchmarks, which is also an impressive figure.

More importantly, the card was able to achieve a graphics score of 19,137 points in 3DMark's Time Spy Extreme test. For reference, the RTX 4090 in all its incarnations achieves an average of 19,508 points in the same benchmark, with the world record standing at 24,486 points at the time of writing. As you'd expect, the modded Asus TUF RX 7900 XTX used in this experiment was a massive power hog, with a peak power draw of 696 watts.
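To put those scores in perspective, here is a minimal Python sketch that expresses the modded card's result as a percentage of the two RTX 4090 figures quoted above. The constant and function names are ours, chosen for illustration; only the three scores come from the article.

```python
# 3DMark Time Spy Extreme graphics scores as quoted in the article.
MODDED_7900_XTX = 19_137   # jedi95's power-unlocked RX 7900 XTX
RTX_4090_AVERAGE = 19_508  # average across RTX 4090 submissions
RTX_4090_RECORD = 24_486   # world record at the time of writing


def percent_of(score: float, reference: float) -> float:
    """Return `score` expressed as a percentage of `reference`."""
    return 100.0 * score / reference


vs_average = percent_of(MODDED_7900_XTX, RTX_4090_AVERAGE)
vs_record = percent_of(MODDED_7900_XTX, RTX_4090_RECORD)

print(f"vs. RTX 4090 average: {vs_average:.1f}%")
print(f"vs. RTX 4090 record:  {vs_record:.1f}%")
```

In other words, the unlocked card lands at about 98 percent of the average RTX 4090 score, but only about 78 percent of the record run.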

The enthusiast used a high-end custom water-cooling loop to tame the GPU during testing, though they believe the stock cooler on the TUF RX 7900 XTX should also be able to handle thermals when power consumption is pushed beyond the 430-watt power limit.

Still, it's clear AMD optimized the RX 7900 XTX for better performance-per-watt, as many users aren't going to run this card with an exotic cooling solution. As for the potential performance improvements in actual gaming scenarios, jedi95 says the roughly 700-watt RX 7900 XTX was around 15 percent faster in Cyberpunk 2077 at 1440p using the built-in benchmark with graphics set to the Ultra preset. Warzone 2 gains compared to stock were a touch over 17 percent at the same resolution.
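The performance-per-watt trade-off can be roughed out with a quick calculation. The sketch below is a back-of-the-envelope estimate, not a measurement: it assumes the stock card draws its full 430-watt limit and the modded card draws its 696-watt peak while gaming, neither of which jedi95 reported per game, and it rounds the Warzone 2 gain down to 17 percent. All names are ours.

```python
# Figures from the article; how they are combined here is an assumption.
STOCK_POWER_LIMIT_W = 430  # assumed stock draw while gaming
PEAK_POWER_W = 696         # assumed modded draw while gaming (reported peak)

# Reported gains over stock at 1440p ("a touch over 17%" rounded to 0.17).
GAINS = {"Cyberpunk 2077": 0.15, "Warzone 2": 0.17}


def relative_efficiency(perf_gain: float, power_ratio: float) -> float:
    """Performance-per-watt of the modded card relative to stock (1.0 = equal)."""
    return (1.0 + perf_gain) / power_ratio


power_ratio = PEAK_POWER_W / STOCK_POWER_LIMIT_W
print(f"Power increase: {100 * (power_ratio - 1):.0f}%")

for game, gain in GAINS.items():
    eff = relative_efficiency(gain, power_ratio)
    print(f"{game}: +{gain:.0%} performance, {eff:.2f}x stock perf-per-watt")
```

Under those assumptions, roughly 60 percent more power buys 15 to 17 percent more performance, which works out to a little over 70 percent of the stock card's efficiency. Since peak draw overstates sustained draw, the real efficiency loss is likely smaller.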

It's unlikely that AMD will release a higher-end RDNA 3 SKU, but a recent leak suggests the company may have been toying with an RX 7950 XTX. After all, Team Red did previously say the RDNA 3 architecture was designed to achieve clock speeds of 3 GHz and beyond. We'll have to wait and see, but then again it would be an expensive, power-hungry product in a GPU market that desperately needs better mainstream offerings.

https://www.techspot.com/news/98716-enthusiasts-proves-amd-rx-7900-xtx-can-reach.html