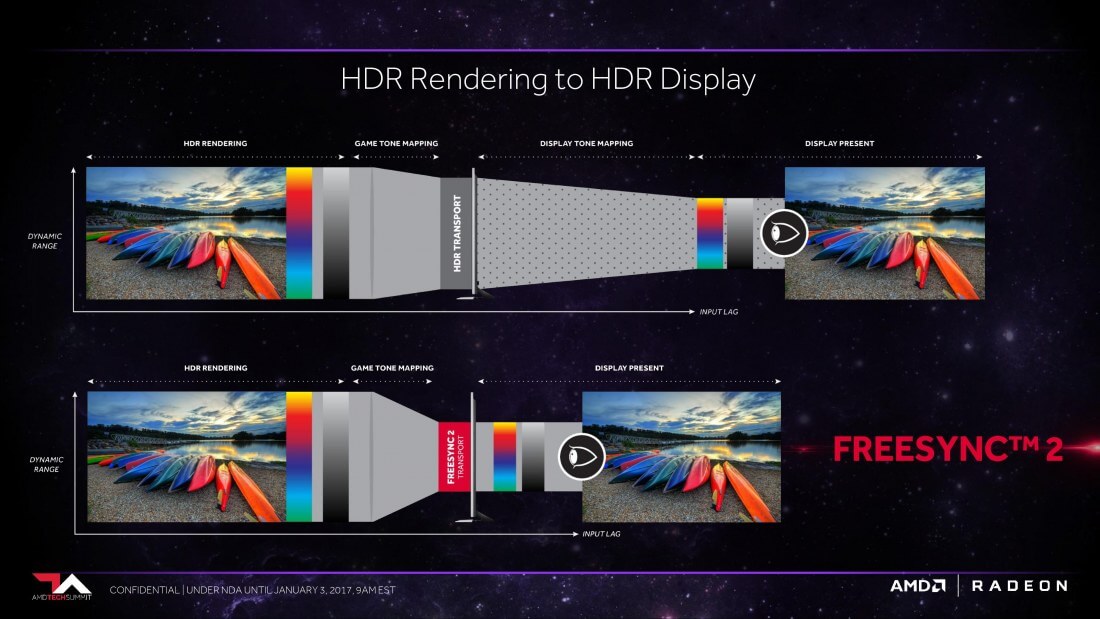

FreeSync 2 was announced over a year ago, but only recently have we started to see its ecosystem expand with new display options. As HDR and wide-gamut monitors become more of a reality over the next year, there's no better time to discuss FreeSync 2 than now, when you can actually buy it.

FreeSync 2 Explained: How has it evolved & what's available now?

- Thread starter Scorpus

phillai

Posts: 57 +16

Why are all these FreeSync 2 monitors curved?

That absolutely turns me off them!!

JaredTheDragon

Posts: 685 +443

Why are all these FreeSync 2 monitors curved?

That absolutely turns me off them!!

Curved monitors are usually awesome for gaming. For content-creation or any kind of actual work, of course they're terrible! But I don't think that's their market.

Fearghast

Posts: 621 +572

Evernessince

Posts: 5,469 +6,158

Why are all these FreeSync 2 monitors curved?

That absolutely turns me off them!!

Curved monitors are usually awesome for gaming. For content-creation or any kind of actual work, of course they're terrible! But I don't think that's their market.

I used to want a curved gaming monitor until I bought VR. I don't know why but VR kind of scratched that itch.

Footlong

Posts: 153 +82

Why are all these FreeSync 2 monitors curved?

That absolutely turns me off them!!

Curved monitors are usually awesome for gaming. For content-creation or any kind of actual work, of course they're terrible! But I don't think that's their market.

Gamers value curved monitors. I don't like them either, but that is where the market is.

Really good article! Thanks for all the insights on this technology. I just bought the Samsung 27" Quantum Dot monitor with an RX 580 and I didn't know much about FreeSync 2.

Requires an AMD GPU? Nope, that makes this tech just as **** as nVidia's.

Hardware manufacturers need to be forced to move to open standards. I wish people would stop buying this proprietary garbage.

mbrowne5061

Posts: 2,157 +1,362

Meh, VRR is getting mainlined in the HDMI 2.1 specification, so I really don't see either Freesync2 or Gsync really needing to remain around for too much longer.

As long as you want higher bandwidth, you're going to need DisplayPort, and thus VRR "add-ons" like FreeSync and G-Sync. HDMI is alright for gaming, but it's a bit of a walled garden. DisplayPort is slightly more open: it doesn't care what travels the pipe, just that the pipe is big enough.

Requires an AMD GPU? Nope, that makes this tech just as **** as nVidia's.

Hardware manufacturers need to be forced to move to open standards. I wish people would stop buying this proprietary garbage.

nVidia can use it if it wants: FreeSync is an open tech, but nVidia chooses not to. While G-Sync is good, it requires licensed tech and nonstandard hardware, which add big bucks to a monitor's price.

Stingy McDuck

Posts: 300 +268

If FreeSync 2 provides additional features on top of the original FreeSync feature set, how come it isn't a replacement for FreeSync "1"? Is it cumulative, or could I have a FreeSync 2 display without FreeSync "1" features?

ShagnWagn

Posts: 1,297 +1,085

Requires an AMD GPU? Nope, that makes this tech just as **** as nVidia's.

Hardware manufacturers need to be forced to move to open standards. I wish people would stop buying this proprietary garbage.

It's most likely their money scheme for you to buy into their supported monitor so your future video cards will also have to be from them to "match" your monitor. At least in this case, AMD has gone with open source instead of proprietary. Unfortunately I've been burned on just about everything I've bought from them - for the last 15 years anyway. I still only see Intel/nVidia in my future. :/

Vrmithrax

Posts: 1,609 +679

Requires an AMD GPU? Nope, that makes this tech just as **** as nVidia's.

Hardware manufacturers need to be forced to move to open standards. I wish people would stop buying this proprietary garbage.

It's most likely their money scheme for you to buy into their supported monitor so your future video cards will also have to be from them to "match" your monitor. At least in this case, AMD has gone with open source instead of proprietary. Unfortunately I've been burned on just about everything I've bought from them - for the last 15 years anyway. I still only see Intel/nVidia in my future. :/

Sorry, but there is much wrong with these statements... FreeSync 2 is a royalty-free protocol that any card could use, if its maker chose to. In fact, parts of the original FreeSync were rolled into the VESA standards for displays. You only NEED to have an AMD card to use FreeSync 2 because Nvidia has chosen NOT to adopt or support the relatively open protocol in favor of their own proprietary and more expensive option.

I can't see how that is a "money scheme" on the part of AMD, when it's just an open standard meant to provide useful graphics features to everyone. The real "money scheme" is, and always has been, Nvidia pushing their G-Sync technology to lock out everyone but themselves. That's business, and smart business to boot, considering how effective G-Sync hardware can be. They can get people to pay more money for their compatible monitors, which encourages them to stay in the Nvidia ecosystem to continue to enjoy the benefits they paid extra for. Shrewd and effective business tactic, for sure!

Evernessince

Posts: 5,469 +6,158

Requires an AMD GPU? Nope, that makes this tech just as **** as nVidia's.

Hardware manufacturers need to be forced to move to open standards. I wish people would stop buying this proprietary garbage.

It doesn't require an AMD GPU. Anyone can implement the optional DisplayPort standard to use FreeSync monitors. Nvidia chooses not to so it can charge customers $200 for a G-Sync adapter.

I would not be surprised if Intel's upcoming dGPUs have FreeSync support.

Evernessince

Posts: 5,469 +6,158

If FreeSync 2 provides additional features on top of the original FreeSync feature set, how come it isn't a replacement for FreeSync "1"? Is it cumulative, or could I have a FreeSync 2 display without FreeSync "1" features?

FreeSync and FreeSync 2 are not software-only implementations; they require certain hardware specifications in order to work properly. A monitor can be updated to gain FreeSync 2 support only if it meets the HDR and low-latency requirements. FreeSync 2 cannot magically improve the contrast ratio and latency by itself, though. The hardware needs to support it.

tipstir

Posts: 2,873 +206

Another push to sell HDR monitors, and I am not buying Samsung products. Acer all the way for me for computer monitors and computers in general, and Sony on the HDTV/UHDTV side. I am not a fan of curved HDTVs/UHDTVs. I see my AMD APU/GPU is supported for FreeSync 2, so I'd just need a new monitor, but that's not on my current list. It looks promising, but Windows 10 HDR has to get on the ball, along with ARC/Perfect World games. I already have graphics set to Max/Ultra Max at 16x on Intel HD and AMD HD APU/GPU. Edges are sharp and detailed. How much further can FreeSync 2 go beyond that level of sharpness?

NightAntilli

Posts: 929 +1,195

If FreeSync 2 provides additional features on top of the original FreeSync feature set, how come it isn't a replacement for FreeSync "1"? Is it cumulative, or could I have a FreeSync 2 display without FreeSync "1" features?

No. But you can have a FreeSync 1 monitor with certain FreeSync 2 features. For example, LFC can work on FreeSync 1 monitors, and it does already. But if a monitor does not have ALL the required FreeSync 2 features, it will simply be labeled as FreeSync 1. This is to keep the monitor space liquid. FreeSync 2 monitors will be a lot more costly than FreeSync 1 monitors, but they can easily exist side by side.
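The labeling rule described in the post above can be sketched in a few lines of Python. This is only an illustration: the feature list (LFC, HDR, low latency) is pieced together from what posters in this thread mention, and the `Monitor` class and `freesync_label` function are made-up names, not anything published by AMD.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Monitor:
    """Hypothetical feature checklist for a display (names are illustrative)."""
    name: str
    variable_refresh: bool  # basic adaptive refresh support
    lfc: bool               # low framerate compensation
    hdr: bool               # meets the HDR requirement
    low_latency: bool       # meets the latency requirement


def freesync_label(monitor: Monitor) -> str:
    """Return the badge a monitor would carry under the all-or-nothing rule."""
    if not monitor.variable_refresh:
        return "none"
    # Assumption from the thread: every FreeSync 2 feature must be present.
    if monitor.lfc and monitor.hdr and monitor.low_latency:
        return "FreeSync 2"
    # Some FreeSync 2 features (such as LFC) are not enough on their own.
    return "FreeSync"


if __name__ == "__main__":
    partial = Monitor("LFC only", True, True, False, False)
    full = Monitor("All features", True, True, True, True)
    print(partial.name, "->", freesync_label(partial))
    print(full.name, "->", freesync_label(full))
```

The point of the all-or-nothing check is that a monitor with only some of the extra features still carries the original badge, which is why both tiers can sit side by side.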

Shadowboxer

Posts: 2,074 +1,655

FreeSync 2 sounds great. But it forces you into using AMD GPUs. Fine if you are on 1080p, but if you are gaming at anything greater than that you will have trouble.

Evernessince

Posts: 5,469 +6,158

FreeSync 2 sounds great. But it forces you into using AMD GPUs. Fine if you are on 1080p, but if you are gaming at anything greater than that you will have trouble.

It doesn't require an AMD GPU. Anyone can implement the optional DisplayPort standard to use FreeSync monitors. Nvidia chooses not to so it can charge customers $200 for a G-Sync adapter.

I would not be surprised if Intel's upcoming dGPUs have FreeSync support.

Also, insinuating that AMD can only do 1080p? I think that speaks for itself.

msroadkill612

Posts: 112 +43

msroadkill612

Posts: 112 +43

What did the supercilious Freesync monitor say to the fixed refresh monitor?

"I sync therefore I am."

gamerk2

Posts: 967 +976

As long as you want higher bandwidth, you're going to need DisplayPort, and thus VRR "add-ons" like FreeSync and G-Sync. HDMI is alright for gaming, but it's a bit of a walled garden. DisplayPort is slightly more open: it doesn't care what travels the pipe, just that the pipe is big enough.

You're repeating the argument in favor of FireWire after USB 2.0 came out, while forgetting the lessons. While DP is more open, no company is going to pay to support two ports that do the same thing. Since HDMI is more ubiquitous in the HT scene AND has higher bandwidth (as of 2.1), there really isn't a cost justification to continue supporting DP.

gamerk2

Posts: 967 +976

G-Sync is a superior technical solution, given it works over a much wider refresh range than FreeSync. When paired with a lower-cost GPU that is unable to stay within FreeSync's refresh window, the benefits of FreeSync basically vanish.

Again, both Gsync and Freesync are likely DOA once HDMI 2.1 hits. Hell, Displayport itself is likely DOA once HDMI 2.1 hits.
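The refresh-window argument above is easier to see with numbers. The following is a deliberately simplified model, not how any real driver works: it assumes LFC (mentioned earlier in the thread) repeats each frame a whole number of times to lift the panel back into its supported range, and the function name and the example ranges are made up for illustration.

```python
import math
from typing import Optional, Tuple


def effective_refresh(fps: float, vrr_min: float, vrr_max: float,
                      lfc: bool = True) -> Optional[Tuple[float, int]]:
    """Return (panel_refresh_hz, frame_multiplier), or None if the panel
    cannot track this frame rate inside its variable refresh window."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > vrr_max:
        return None  # above the window: back to v-sync or tearing
    if fps >= vrr_min:
        return (fps, 1)  # inside the window: refresh follows the GPU
    if not lfc:
        return None  # below the window with no compensation
    # Simplified LFC: show each frame enough times to reach vrr_min.
    multiplier = math.ceil(vrr_min / fps)
    refresh = fps * multiplier
    if refresh > vrr_max:
        return None  # window too narrow for frame repetition to fit
    return (refresh, multiplier)


if __name__ == "__main__":
    # Hypothetical wide window (48-144 Hz) versus narrow window (48-75 Hz).
    for window in ((48, 144), (48, 75)):
        for fps in (60, 40, 30):
            print(window, fps, effective_refresh(fps, *window))
```

In this model, a wide window can absorb a dip to 40 fps by doubling frames, while a narrow 48-75 Hz window cannot (doubling would need 80 Hz), which is the situation a lower-cost GPU is described as running into.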
