Gears of War 4 arrived a few days ago as one of the year's biggest blockbuster games, and its release is especially exciting for a number of reasons: Windows/Xbox cross-platform multiplayer, the exclusion of older DirectX APIs, the use of Unreal Engine 4 instead of UE3, and the simple fact that this is the first GoW title developed outside of Epic Games.

Gears of War 4 is DX12-only, meaning the game has been built from the ground up to leverage this low-level API on both the PC and Xbox versions. Considering that developer The Coalition is a subsidiary of Microsoft, it should come as no surprise that the two worked together on incorporating the technology, nor that Epic Games helped Gears of War 4 take full advantage of UE4's DX12 support.

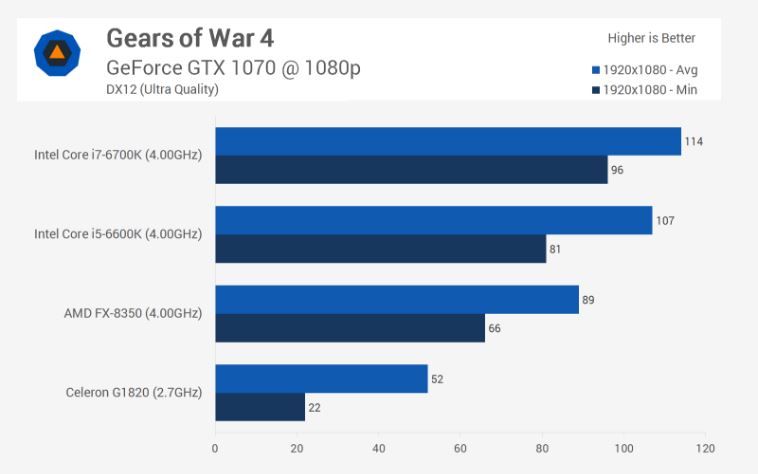

To see how the game performs, we've thrown not 20 or even 30 graphics cards at this title, but 40, or 41 to be exact. As the cherry on top, we've also tested a number of Intel and AMD chips to see the impact processors have on performance.