Gears of War 4 arrived a few days ago as one of the year's biggest blockbuster games, and its release is especially exciting for a number of reasons: Windows/Xbox cross-platform multiplayer, the exclusion of older DirectX APIs, the use of Unreal Engine 4 rather than UE3, and the simple fact that this is the first GoW title developed outside of Epic Games.

Gears of War 4 is DX12-only, meaning the game has been built from the ground up to leverage this low-level API on both the PC and Xbox versions. Considering developer 'The Coalition' is a subsidiary of Microsoft, it should come as no surprise that the two worked together on incorporating the technology, nor that Epic Games assisted in allowing Gears of War 4 to take full advantage of UE4's DX12 support.

DX12 is said to increase performance by giving developers more direct control over the hardware, simplifying the driver layer, and allowing them to make fully informed decisions on how to optimally manage graphics resources.

Because Gears of War 4 only supports DX12, it's exclusive to Windows 10 and sadly at this point can only be purchased through the clunky Windows Store, a rubbish alternative to popular services such as Steam (it had to be said...).

In fact, gamers need the latest version of Windows 10 with the Anniversary Update installed, and it must be the 64-bit version. On top of that, your system's hardware needs to be pretty decent as well. The developer suggests at least a Core i5 (Haswell) or AMD FX-6300 (in other words, a processor with at least four cores), 8GB of system memory, and a GeForce GTX 750 Ti or Radeon R7 260X with 2GB of VRAM. Oh, and you need 80GB of drive space!

Again, those are only the minimum requirements to play the game, so what if you intend to enjoy it in all of its glory?

In an effort to figure that out, we've thrown not 20 or even 30 graphics cards at this title, but 40 – 41 to be exact. As the cherry on top, we've also tested a number of Intel and AMD chips to see the impact processors have on performance.

As usual, we've published a separate piece for gameplay commentary, but with the game having been well received thus far, you might say this marks a new beginning of sorts for the franchise.

Testing Methodology

Our job has been made quite a bit easier thanks to Gears of War 4's built-in benchmark. The test runs for roughly 90 seconds and we found it to be an accurate representation of actual gameplay performance.

Since the game appears to be well optimized, we didn't test a heap of quality presets for this title and instead focused on testing every graphics card we could get. The 'Ultra' preset was used, and async compute along with tiled resources were enabled. The Ultra preset was re-applied after every resolution change, as some of the options seem to revert to the recommended settings when the resolution is switched. Speaking of resolution, we tested at 1080p, 1440p and 4K.

The latest AMD and Nvidia graphics drivers were used for testing and, for those wondering, multi-GPU technology isn't supported at this time.
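To give a sense of the scale of that procedure, here is a minimal sketch of the test matrix in Python. It is only an illustration built from the figures above (41 cards, three resolutions, the Ultra preset re-applied after each resolution change, a benchmark of roughly 90 seconds). The function names and the placeholder card names are hypothetical, and the time estimate ignores card swaps, driver installs and repeat passes.

```python
from itertools import product

# Values taken from the methodology described above.
RESOLUTIONS = ["1080p", "1440p", "4K"]
PRESET = "Ultra"
BENCHMARK_SECONDS = 90  # approximate length of the built-in benchmark


def build_test_plan(cards):
    """Return one planned run per (card, resolution) pair.

    Every run is flagged to re-apply the preset, since some options appear
    to revert to recommended settings after a resolution change.
    """
    return [
        {
            "card": card,
            "resolution": resolution,
            "preset": PRESET,
            "reapply_preset": True,
            "async_compute": True,
            "tiled_resources": True,
        }
        for card, resolution in product(cards, RESOLUTIONS)
    ]


def estimated_minutes(plan, passes=1):
    """Rough lower bound on benchmark time alone, in minutes."""
    return len(plan) * passes * BENCHMARK_SECONDS / 60


if __name__ == "__main__":
    # Hypothetical placeholder names standing in for the 41 cards tested.
    cards = [f"card_{i}" for i in range(1, 42)]
    plan = build_test_plan(cards)
    print(len(plan), "runs,", estimated_minutes(plan), "minutes")
```

With 41 cards and three resolutions this comes to 123 runs, or a little over three hours of benchmark time for a single pass before any setup work is counted.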

Test System Specs

- Intel Core i7-6700K (4.50GHz)

- 8GBx4 Kingston Predator DDR4-3000

- Asrock Z170 Extreme7+ (Intel Z170)

- Silverstone Strider 700w PSU

- Crucial MX200 1TB

- Microsoft Windows 10 Pro 64-bit

- Nvidia GeForce 373.06 WHQL

- AMD Crimson Edition 16.10.1 Hotfix

- Radeon RX 480 (8192MB)

- Radeon RX 470 (4096MB)

- Radeon RX 460 (4096MB)

- Radeon R9 Fury X (4096MB)

- Radeon R9 Fury (4096MB)

- Radeon R9 Nano (4096MB)

- Radeon R9 390X (8192MB)

- Radeon R9 390 (8192MB)

- Radeon R9 380X (4096MB)

- Radeon R9 380 (2048MB)

- Radeon R7 360 (2048MB)

- Radeon R9 290X (4096MB)

- Radeon R9 290 (4096MB)

- Radeon R9 285 (2048MB)

- Radeon R9 280X (3072MB)

- Radeon R9 280 (3072MB)

- Radeon R9 270X (2048MB)

- Radeon R9 270 (2048MB)

- Radeon HD 7970 GHz (3072MB)

- Radeon HD 7970 (3072MB)

- Radeon HD 7950 Boost (3072MB)

- Radeon HD 7950 (3072MB)

- Radeon HD 7870 (2048MB)

- Nvidia Titan X (12288MB)

- GeForce GTX 1080 (8192MB)

- GeForce GTX 1070 (8192MB)

- GeForce GTX 1060 (6144MB)

- GeForce GTX 1060 (3072MB)

- GeForce GTX Titan (6144MB)

- GeForce GTX 980 Ti (6144MB)

- GeForce GTX 980 (4096MB)

- GeForce GTX 970 (4096MB)

- GeForce GTX 960 (2048MB)

- GeForce GTX 950 (2048MB)

- GeForce GTX 780 Ti (3072MB)

- GeForce GTX 780 (3072MB)

- GeForce GTX 770 (2048MB)

- GeForce GTX 760 (2048MB)

- GeForce GTX 750 Ti (2048MB)

- GeForce GTX 680 (2048MB)

- GeForce GTX 660 Ti (2048MB)

Ultra Doesn't Always Mean Ultra

Something I noticed when testing many GPUs was the difference in image quality, despite the settings remaining the same. Forcing the settings to ultra, there were still distinct differences between the 3GB and 6GB versions of Nvidia's GeForce GTX 1060 for example.

The character resolution seemed lower at times on the 3GB model. It was almost as if a different anti-aliasing method was being used. The characters would appear blurrier and feature lower-resolution textures. The environments would often feature lower-quality textures as well, along with less lighting detail and lower-quality shadows.

Here are a few examples between the 3GB and 6GB 1060 models:

I admit the close-up of this screenshot doesn't make the difference super obvious, but the GTX 1060 6GB is showing a slight shadow over the green support while there is no shadow at all on the 3GB card. This is easier to spot when looking at the screenshot in its entirety.

Here you can see that the character is clearly softer/blurrier on the 3GB 1060.

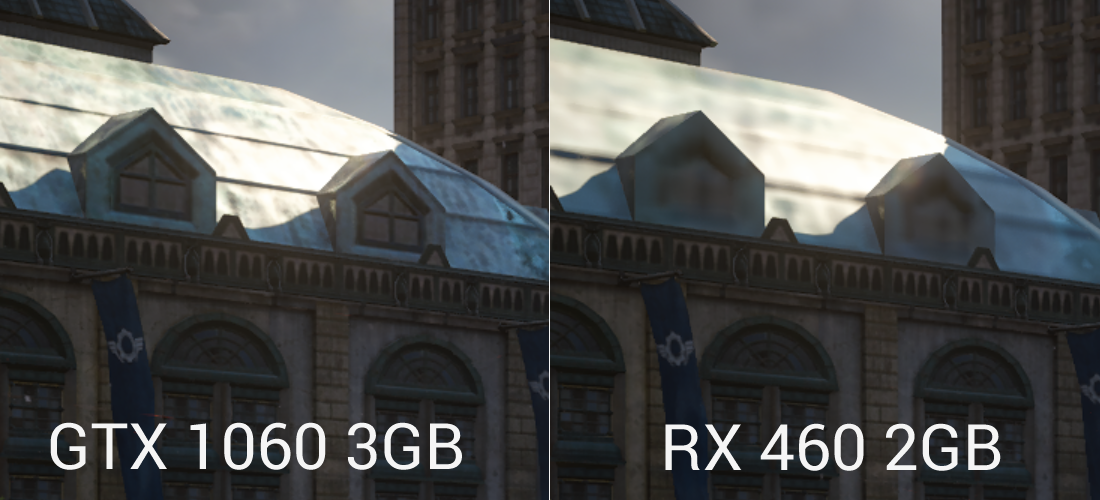
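For readers who want to put a rough number on "softer/blurrier", the following is a minimal sketch of one possible approach: averaging the brightness difference between neighboring pixels in a grayscale crop. This is not how the comparison in this article was made (that was done by eye), the function name is hypothetical, and the two small grids are synthetic stand-ins rather than real captures. It also assumes both crops show exactly the same frame, which texture pop-in makes hard to guarantee in practice.

```python
def mean_gradient(gray):
    """Mean absolute difference between neighboring pixels (right and down).

    `gray` is a non-empty list of equal-length rows of grayscale values.
    Higher values suggest more fine detail; lower values suggest a softer image.
    """
    rows, cols = len(gray), len(gray[0])
    total = 0
    count = 0
    for y in range(rows):
        for x in range(cols):
            if x + 1 < cols:  # horizontal neighbor
                total += abs(gray[y][x + 1] - gray[y][x])
                count += 1
            if y + 1 < rows:  # vertical neighbor
                total += abs(gray[y + 1][x] - gray[y][x])
                count += 1
    return total / count if count else 0.0


# Synthetic stand-ins for two crops of the same scene (not real captures).
detailed = [[0, 255, 0, 255],
            [255, 0, 255, 0],
            [0, 255, 0, 255],
            [255, 0, 255, 0]]
soft = [[120, 135, 120, 135],
        [135, 120, 135, 120],
        [120, 135, 120, 135],
        [135, 120, 135, 120]]

print(mean_gradient(detailed) > mean_gradient(soft))  # prints True
```

A metric like this only captures overall sharpness. It says nothing about missing shadows or lighting, so it would complement a side-by-side look at the screenshots rather than replace it.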

Now let's look at a 2GB vs 4GB comparison with the RX 460.

The first example isn't exactly night and day, but the texturing on the 4GB model is better than what we see on the 2GB model.

This shot shows a distinct difference between the two RX 460 models. The 4GB version is clearly providing a superior image with much greater texture and shadow detail.

Now, we're well aware that the GTX 1060 and RX 460 are in no way competitors, so please calm yourselves, fans of the red team. The purpose here, since we have already looked at these two cards, was to see what kind of image quality difference there is between a 3GB GeForce and a 2GB Radeon graphics card. Again, they are not competitors and don't occupy the same price brackets.

Here we see that the 3GB 1060 provides much more detail than the 2GB RX 460, just as the 4GB RX 460 did. However, it is interesting to note that there is significantly more shadow detail on the Nvidia graphics card. This is also true when it is compared to the 4GB RX 460.

With limited VRAM and a dynamic environment, it's difficult to accurately compare the two, and texture pop-ins make this even harder. With the character staring at the same scene for a minute, we compared screenshots, and you can see that the 3GB card can hold more texture information.

This makes GPU performance in Gears of War 4 hard to test, as two GPUs can deliver similar frame rates while one offers better image quality. Keeping that in mind, let's check out the performance numbers...