Here's an extensive benchmark session comparing the new GeForce RTX 4070 with its more formal tie-wearing sibling, the RTX 4070 Ti, to determine whether the Ti is worth the extra $200.


GeForce RTX 4070 vs. 4070 Ti: $600 or $800 GPU Upgrade

- Thread starter Steve


Yeah the Ti is worth it, unless you buy for a small build where temps/airflow can be a problem. Plus 4070s are generally much smaller and easier to fit - for example there are many 2-slot versions with a short PCB as well.

4070 is 3080 perf at ~200 watts instead of 320-350 watts + 2GB more VRAM + DLSS 3 support + lower price and higher availability.

4070 Ti is more like 3090 Ti performance at ~275 watts instead of ~525 watts.

Yeah the 3090 Ti runs at 537 watts average in gaming according to Techpowerup... 4070 is at 201 watts and 4070 Ti is at 277 watts, crazy difference.

3090 Ti should never have been released. It came way too late and has very high power usage, pushed to the absolute limit. Just like some maxed-out 6950XTs which essentially did not bring anything over a custom 6900XT + OC, same chip with a higher clock.

According to TPU, 6800XT spikes at 579 watts, 6900XT spikes at 636 watts, 3090 Ti spikes at 570 watts...

Power spikes are what make a PSU pop or the system reboot.

I would never buy a GPU that uses more than 325-350 watts, and even these often need undervolting to not heat up the entire room. My 3080 uses 275 watts post UV and perf loss is around 2% compared to stock - at stock it can hit 350 watts at times.


ScottSoapbox

Posts: 1,044 +1,818

4070 should be $500

4070 Ti should be $700 with 16 GB

(To actually sell, cheaper would be nice)


Neatfeatguy

Posts: 1,616 +3,045

"Yeah the Ti is worth it, unless you buy for a small build where temps/airflow can be a problem. Plus 4070s are generally much smaller and easier to fit - for example there are many 2-slot versions with a short PCB as well."

"4070 is 3080 perf at ~200 watts instead of 320-350 watts + 2GB more VRAM + DLSS 3 support + lower price and higher availability."

Correction:

FE 3080 10GB gaming power is 303W (average)

20ms spike is 360W

"4070 Ti is more like 3090 Ti performance at ~275 watts instead of ~525 watts. Yeah the 3090 Ti runs at 537 watts average in gaming according to Techpowerup... 4070 is at 201 watts and 4070 Ti is at 277 watts, crazy difference."

Correction:

FE 3090Ti, TPU shows gaming for it is 445W (average)

20ms spikes can hit 528W

I'm not disagreeing with your post, just providing more accurate numbers from TPU. AIB cards can certainly have higher power usage, but not all of them are that much of a difference over the FE card unless they're pushing RGB and overclocks, trying to entice folks to buy them instead of the FE.
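For reference, the power gap implied by the figures in this thread can be worked out quickly. This is only a rough sketch: it uses the TPU gaming averages quoted above (201 W, 277 W, 303 W, 445 W) and assumes each pair of cards performs roughly the same, as claimed earlier in the thread. The dictionary and function names are made up for illustration.

```python
# Average gaming power in watts, as quoted from TPU by posters in this thread.
GAMING_POWER_W = {
    "RTX 4070": 201,
    "RTX 4070 Ti": 277,
    "RTX 3080 FE": 303,
    "RTX 3090 Ti FE": 445,
}


def power_saving_pct(new_card: str, old_card: str) -> float:
    """Percent reduction in average gaming power going from old_card to new_card."""
    old = GAMING_POWER_W[old_card]
    new = GAMING_POWER_W[new_card]
    return (old - new) / old * 100


# Pairs that the thread treats as roughly equal in performance (an assumption).
for new, old in [("RTX 4070", "RTX 3080 FE"), ("RTX 4070 Ti", "RTX 3090 Ti FE")]:
    print(f"{new} vs {old}: {power_saving_pct(new, old):.1f}% less power")
```

With the FE numbers the saving is roughly a third in both cases, which is smaller than the gap suggested by the 537 W figure but still substantial.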


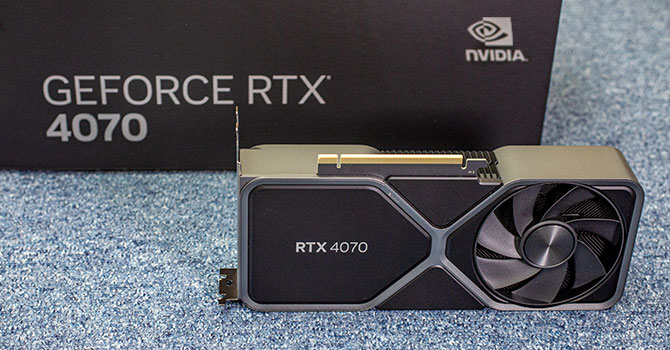

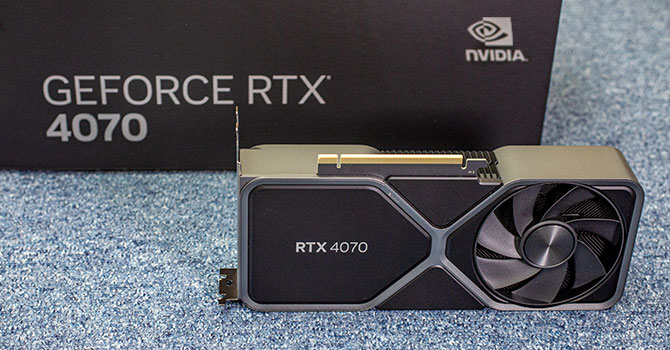

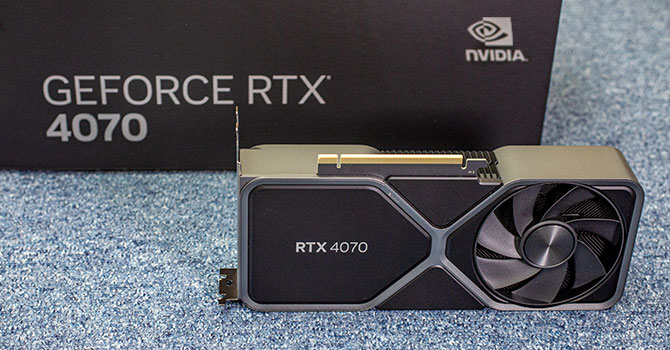

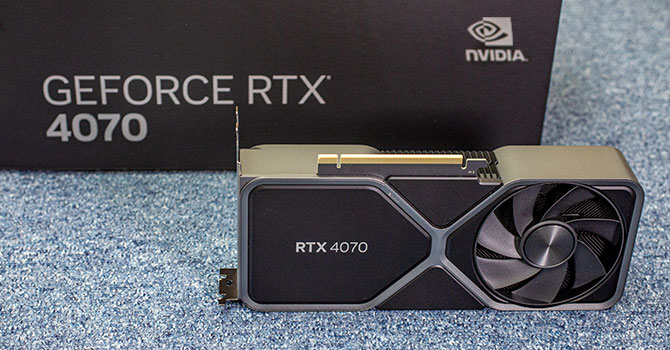

NVIDIA GeForce RTX 4070 Founders Edition Review

NVIDIA's GeForce RTX 4070 launches today. In this review we're taking a look at the Founders Edition, which sells at the baseline MSRP of $600. NVIDIA's new FE is a beauty, and its dual-slot design is compact enough to fit into all cases. In terms of performance, the card can match RTX 3080...

What I said was accurate according to their newest GPU (founders) review of 4070

And these numbers are founders/reference numbers, AIB custom cards will be higher in most cases (average watts, maybe not spikes)

Neatfeatguy

Posts: 1,616 +3,045

Odd. The standalone reviews show different numbers. I wonder where W1zzard got the numbers from then.


DSirius

Posts: 788 +1,613

"According to TPU, 6800XT spikes at 579 watts, 6900XT spikes at 636 watts, 3090 Ti spikes at 570 watts..."

Can you provide a link or proof that TechPowerUp states this in its reviews? Because what I found on TechPowerUp is different from what you say in this post.

"Odd. The standalone reviews show different numbers. I wonder where W1zzard got the numbers from then."

Maybe they re-test every time, not sure tho. The games might change over time, I guess.

"Can you provide a link or proof that TechPowerUp states this in its reviews?"

Link is above.

Achaios

Posts: 482 +1,287

"GeForce RTX 4070 vs. 4070 Ti: $600 or $800 GPU Upgrade?"

Neither.

RTX 4070 should have been around $400, 4070Ti around $500 and the 4080 around $650 and I am being too generous towards NVIDIA.


punctualturn

I sincerely don't understand how, out of the blue, Steven has decided to push DLSS and RT so hard without even warning that the sole reason DLSS exists is to keep you locked into nvidia hardware.

It's a disservice to games, gamers and the industry, and simply pushes the industry toward a horrible monopoly.

And RT is simply not there yet, especially now with PT.

When AMD, nvidia, Intel and whoever else is left can sell a GPU that runs proper PT games at 120 FPS@4K without any gimmicks and lock-in tech like DLSS (FSR is another gimmick but at least it is not locked to one GPU vendor) AND it costs less than US$500, then we should be spending this much energy on pushing this.


DSirius

Posts: 788 +1,613

Thank you for the review Steve. And please, sometimes less is better, instead of talking so much about DLSS. Among all of the Nvidia 1xxx/2xxx/3xxx/4xxx video cards in the Steam survey, 50% are GTX 1xxx cards and do not support Nvidia's own DLSS, due to Nvidia's anti-consumer, market-segmentation dark-pattern business model. Or at least it would be more objective and fair to mention this as a limitation.


DSirius

Posts: 788 +1,613

"Link is above"

My suggestion to you is to check more for consistency before posting, though power spikes vary quite a lot between different implementations of the same video card, depending on the AIB.

About power spikes, this issue is explained better on the Nvidia forum. Check the post by user njuffa.

https://forums.developer.nvidia.com/t/maximum-power-draw-3090/169134/3

So the real issue is when you combine a power-hungry video card with a power-hungry CPU.

Like a 3090 or 4090 with an 11900K, 12900K or 13900K. This is a recipe for power-spike issues.

If you have a processor like those mentioned and a powerful video card, better use at least a 1000W Platinum-rated PSU.
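To put rough numbers on that advice, here is a back-of-the-envelope check of how much margin a PSU has if a GPU spike and a CPU peak land at the same moment. It is a simplified sketch, not a sizing rule. The 528 W figure is the 3090 Ti FE 20 ms spike quoted from TPU earlier in the thread. The 253 W CPU figure is an assumption taken from Intel's rated maximum turbo power for the 13900K, and the 75 W for the rest of the system is a placeholder guess. The function name is hypothetical.

```python
def psu_headroom_w(psu_rated_w: float, gpu_spike_w: float,
                   cpu_peak_w: float, rest_of_system_w: float = 75.0) -> float:
    """Watts left over if the GPU spike and CPU peak coincide (negative = over the rating)."""
    worst_case_w = gpu_spike_w + cpu_peak_w + rest_of_system_w
    return psu_rated_w - worst_case_w


GPU_SPIKE_W = 528.0  # 3090 Ti FE 20 ms spike, as quoted from TPU in this thread
CPU_PEAK_W = 253.0   # assumption: 13900K maximum turbo power, not a measured gaming load

for psu_w in (750.0, 850.0, 1000.0):
    headroom = psu_headroom_w(psu_w, GPU_SPIKE_W, CPU_PEAK_W)
    print(f"{psu_w:.0f} W PSU: {headroom:+.0f} W headroom")
```

Under these assumptions only the 1000 W unit stays under its rating in the worst case. Real behaviour depends on how well a given PSU tolerates short excursions, which this sketch ignores.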


Regardless, your post came right on time, because from my research and the posts in this forum I could get a better and more accurate picture of the importance and issues of power spikes.

I have a Seasonic Prime Titanium 1000W PSU; even so, I'll check how power-spike-proof my current system is. In fact, I'd say that everybody in this forum should check.

P.S. I saw, after I posted my 1st post, that you updated and corrected your post.


DSirius

Posts: 788 +1,613

"Maybe they re-test every time, not sure tho. Game might change over time I guess"

I think I found 2 reasons why TPU shows different power spike results even for video cards of the same class and model.

The first reason is the different AIB implementations of the same video card.

The second reason, and the most important one I think, is that TPU changed the PC test system between their earlier reviews and the later ones.

In their earlier reviews TPU used an Intel 9900K processor in their video card tests.

MSI Radeon RX 6800 XT Gaming X Trio Review

The MSI Radeon RX 6800 XT Gaming X Trio has the best cooler out of all RX 6800 XT cards we've reviewed so far. With just 67°C, this triple-slot, triple-fan design runs extremely cool, yet stays very quiet at the same time. As expected, idle fan stop is included, too.

In the later reviews they use a 13900K, which is a completely different, power-hungry story.

NVIDIA GeForce RTX 4070 Founders Edition Review

NVIDIA's GeForce RTX 4070 launches today. In this review we're taking a look at the Founders Edition, which sells at the baseline MSRP of $600. NVIDIA's new FE is a beauty, and its dual-slot design is compact enough to fit into all cases. In terms of performance, the card can match RTX 3080...

It would be interesting to check with a 7800X3D or 7950X3D system whether power spike levels would be the same, higher or lower.


Do you remember when the 4070Ti was called the 4080 12GB and Nvidia wanted $900 for it?

Pepperidge Farm remembers.


DSirius

Posts: 788 +1,613

Well, Steve got a lot of hate from both Nvidia and AMD users. Though phrases like this from Steve are quite odd and are not helping him in this situation:

"However, the 6950 XT will be inferior in ray tracing performance, it lacks DLSS support".

He "forgot" to mention that the 6950 XT has FSR2 support, which is AMD's competing technology to DLSS2. Having no need for DLSS is the logical conclusion; it is not a "lack", because the card has FSR2.

But as I mentioned in my previous posts, if we want to talk about what Nvidia video cards are lacking, the list is longer.

Also, it is alarming how many reviewers are hit by Nvidia Amnesia and forget to mention the many issues and limitations which Nvidia video cards or "technologies" have, or blatantly choose to disregard them when they are confronted with proof of their own inconsistency.


Thatsdisgusting

Posts: 231 +286

Neither.

That's a 295 mm² die, the size of a 3060-class chip, that they're trying to sell you for up to $1000...


ScottSoapbox

Posts: 1,044 +1,818

As more and more games add RT it becomes increasingly relevant. The fact that PT is on the horizon doesn't change this because there is always something better coming "soon".

If you don't play new games or don't care about RT - why are you upset? Reviewers are always going to focus on the latest games as there are already plenty of reviews on old games.

As for DLSS, there are currently two ways to enable RT with max settings: use DLSS or spend $1200+ on a GPU. And guess what, that isn't changing any time soon. Why? Because the developers will push graphics as fast as GPUs get better, so DLSS or shelling out a grand or more will be the norm for a while. Sure, in 6 years that will probably be PT rather than RT, but the result is the same.

"However, the 6950 XT will be inferior in ray tracing performance, it lacks DLSS support"

FSR exists too... and with a tool like "Lossless Scaling" (it costs 4€ on Steam) or "Magpie" (free on GitHub) you can use FSR in ALL your games... at 4K with FSR "quality" I'm sure almost no gamer would ever know it's on if nobody told them... so much BS and pseudo-elitism... get real


DSirius

Posts: 788 +1,613

Where in the NeoMorpheus post you cited did you find that he is upset? I read his post carefully and did NOT find anything suggesting he may be "upset". And why do you make such a claim when you do not have any proof? On the contrary, I found his post a very good description of the current state of RT and PT in gaming.

A good idea is to check how many gamers, on Steam chart percentage for example, play games with RT enabled or to make a poll here on TechSpot.

Regardless, it is one thing to debate different opinions with valid arguments which is useful and may help others to learn new things and share their experience;

And it is another thing to bring personal and emotional affirmations as "arguments".

Let's be more constructive and open to a civilized debate and sharing of opinions.


b3rdm4n

Posts: 180 +130

I tend to agree with Steve on this one, if you have $600 to spend, and want to buy new today, it's very hard not to give the 4070 the nod. Sure, if you can get a 6950XT for the same price before all that new stock disappears forever, it could be worth it if you really want that extra 4GB, slight perf bump, and can deal with ~200w more heat in the room and a different/less capable feature set overall, but as discussed that window is rapidly closing, AMD desperately need to announce a $550-650 7800XT and get it on shelves.


The 4070 Ti has 2-slot models too. I run mine in a Node 304 mini-ITX case where a 2-slot GPU is needed. I would rather pick a 7900 XT, but all models are almost 3 slots thick, require more power and run hotter, so I would need a different case, preferably a full ATX one.

MaxSmarties

Posts: 559 +326

I'm so fed up with your AMD sponsorship. At the end of every comparison you suggest the 6800XT or 6950XT as a viable option. No, they are not! No DLSS3, and the prices are low because people don't buy them! A 6950XT consumes significantly more power than a 4070Ti.

You keep suggesting that 12 GB of VRAM is "not enough", and your results said something different (at the target resolution of 1440p the 4070s are perfectly fine). And now I'm curious to see if you are going to retest The Last of Us after the 49 GB patch released today, where one of the main points is REDUCED VRAM IMPACT that should allow most players (their words) to raise texture quality. So they admittedly optimized the game poorly, but you used this game as an example of how bad Nvidia is.

I HATE Nvidia, it is the worst company on the planet in this field, but you must stop worshipping AMD. This channel is great, but you are losing credibility.


The Inno3D one? I can find zero reviews on it.

"And the combination between the highest power hungry 13900K and power hungry videocards is causing PSUs to reboot the system."

Good find. However, their wattage numbers say GPU only? "Card Only"

The 13900K can use a lot of watts; in gaming tho, it's probably "only" 100-150 watts tops.

The 7800X3D is the best gaming chip right now - in most cases - and very impressive considering the watts. Sadly, for the many who need good multi-threaded perf outside of games it's not the best option, since it's slower than the 7700X in most - pretty much all - applications.

I'd love to see a 12-16 core chip with 3D cache on all cores, because the 7900X3D tends to be even worse than the 7800X3D in gaming and only has 6 cores with 3D cache compared to 8 on the 7800X3D.

Why did AMD do this? Is 3D cache expensive?
