In brief: Intel has had a rough time launching its first series of dedicated graphics cards, struggling to get them to consumers on time and facing potentially unimpressive gaming benchmark results. However, the cards mark the debut of AV1 hardware encoding, and early tests show big efficiency gains over Nvidia's and AMD's H.264 encoders.

The AV1 hardware encoder in Intel's new Arc A380 destroyed Nvidia and AMD's H.264 encoders in initial real-world tests this week. The results are sorely needed good news for Intel's entry into the GPU space and bode well for AV1's future in content creation.

Late last month, computers featuring Intel's entry-level Arc Alchemist graphics cards hit the international market after weeks of delays. YouTuber EposVox bought one of the first available A380s and measured its AV1 encoding against multiple H.264 encoders.

Also see: TechSpot's Intel Arc A380 Review

Support for the new AV1 video codec has expanded rapidly over the last several months. It promises more efficient compression than competitors like VP9 or H.264 and is royalty-free, unlike H.265.

However, hardware-accelerated AV1 decoding requires relatively recent hardware like Nvidia's RTX 30 series graphics cards, AMD's Radeon RX 6000 GPUs, or 11th generation or later Intel CPUs.

Apple devices don't support it yet, but a Qualcomm Snapdragon chip will next year. Streaming services like YouTube, Twitch, and Netflix at least partially support AV1 at the moment and will likely expand their support in the future. Firefox added AV1 hardware decoding in May after relying solely on software decoding.

While hardware decoding to view AV1 content is gaining broad support, encoding to produce AV1 videos was only possible through software until Intel Arc. EposVox's results indicate content creators may want to switch to the codec as its use widens.

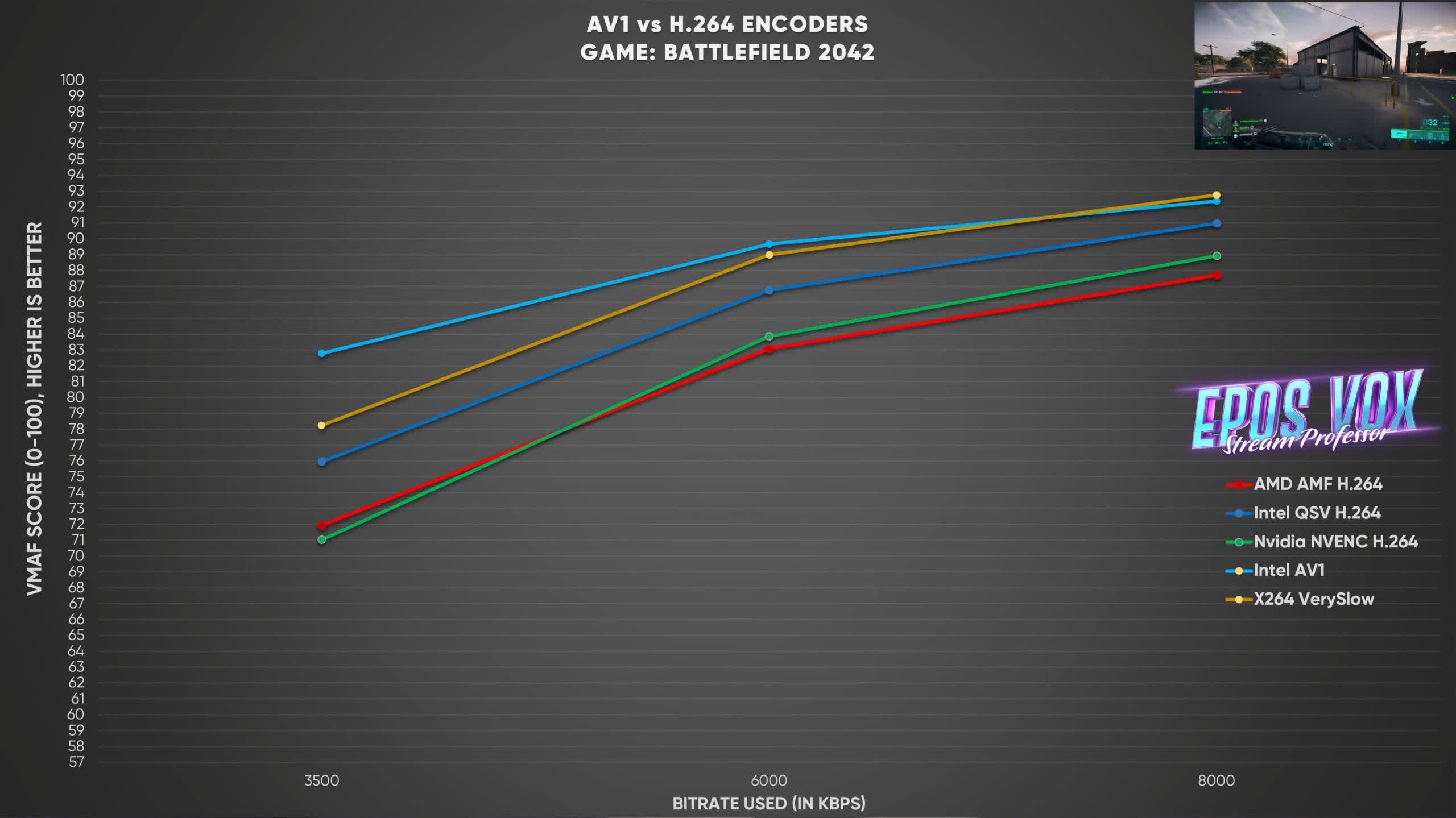

When streaming games like Halo Infinite and Battlefield 2042, Intel's AV1 encoder produced significantly cleaner video than H.264 encoders like Nvidia's NVENC, AMD's AMF, and Intel's own QSV, even at lower bitrates. The Intel AV1 encoder at 3.5Mbps appears to beat AMD's and Nvidia's H.264 encoders at 6Mbps. AV1's lead shrinks at higher bitrates, but at 6Mbps it still outperforms the other GPU vendors' 8Mbps H.264 results.
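To put those bitrate pairs in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes a constant-bitrate stream and treats each AV1 result as at least matching the H.264 result it is compared against, which is a visual judgment from the tests rather than a measured quality score. The function names are made up for illustration.

```python
def bitrate_saving(av1_mbps: float, h264_mbps: float) -> float:
    """Fraction of bitrate saved by streaming at av1_mbps instead of h264_mbps."""
    return 1.0 - av1_mbps / h264_mbps


def gigabytes_per_hour(mbps: float) -> float:
    """Data sent in one hour at a constant bitrate, in decimal gigabytes."""
    seconds_per_hour = 3600
    megabits = mbps * seconds_per_hour
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


# (AV1 bitrate, H.264 bitrate) pairs taken from the comparison above, in Mbps.
comparisons = [(3.5, 6.0), (6.0, 8.0)]

for av1, h264 in comparisons:
    saving = bitrate_saving(av1, h264)
    print(
        f"AV1 at {av1} Mbps vs H.264 at {h264} Mbps: "
        f"{saving:.1%} less data "
        f"({gigabytes_per_hour(av1):.3f} GB/h vs {gigabytes_per_hour(h264):.3f} GB/h)"
    )
```

Under those assumptions, the 3.5Mbps AV1 stream uses roughly 42 percent less data than the 6Mbps H.264 stream, and the 6Mbps AV1 stream uses 25 percent less than the 8Mbps one.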

These tests suggest that as more devices and services add AV1 compatibility, they will deliver better-looking streams using less data. AV1 hardware encoding could be featured in next-gen GPUs from both Nvidia and AMD, set to arrive later this year.

https://www.techspot.com/news/95518-intel-arc-av1-encoder-beats-amd-nvidia-h264.html