Intel quietly announced at IDF this past week that Ivy Bridge will support 4K video resolutions using the integrated GPU. Intel broke the news in one of its side technical sessions at the conference, according to VR Zone.

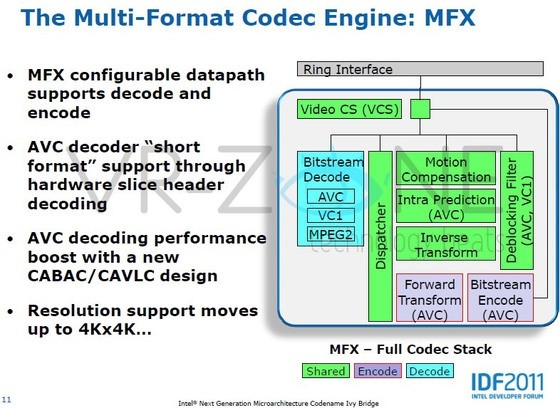

4K resolution here means that the GPU (with a supporting monitor) can run a video stream at up to 4096 x 4096 pixels (known as 4Kx4K), a feat that Intel claims Ivy Bridge can handle with ease thanks to its Multi-Format Codec Engine, called MFX.

We already knew that the GPU in Ivy Bridge would be fast, up to 60 percent faster than Sandy Bridge in some scenarios, but being able to run 4K video is pretty significant. And if that weren’t enough, Chipzilla says that its next-generation platform can run multiple 4K videos simultaneously.

YouTube announced support for 4K video resolution in July 2010, and we have used this sample video, run in “Original” mode, as an informal measuring stick in several of our notebook reviews. The video is extremely taxing on the GPU and CPU of modern computers, and you will need a fast Internet connection unless you want to waste half your day buffering it.

AnandTech points out that current Sandy Bridge GPUs only support resolutions of up to 2560 x 1600, and that 4Kx4K has just over four times as many pixels. Furthermore, displays that support resolutions over 2560 x 1600 are extremely rare and expensive, and the bandwidth needed to push 4Kx4K video at a refresh rate of 60Hz isn’t feasible.
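To put rough numbers on the pixel count and bandwidth comparison, here is a back-of-the-envelope sketch. The two resolutions and the 60Hz refresh rate come from the figures above; the 24 bits per pixel, the absence of blanking overhead, and the DisplayPort 1.2 payload figure used for comparison are our own assumptions, not numbers from Intel or AnandTech.

```python
# Resolutions quoted in the article (width, height in pixels).
SANDY_BRIDGE_MAX = (2560, 1600)
FOUR_K_BY_FOUR_K = (4096, 4096)

REFRESH_HZ = 60  # refresh rate mentioned in the article

# Assumptions (not from the article): 24-bit color, no blanking overhead.
BITS_PER_PIXEL = 24

# Assumption for comparison only: the commonly cited four-lane
# payload rate of DisplayPort 1.2, in Gbit/s.
DISPLAYPORT_1_2_GBPS = 17.28


def pixel_count(resolution):
    """Total pixels in one frame."""
    width, height = resolution
    return width * height


def raw_video_gbps(resolution, refresh_hz=REFRESH_HZ, bits_per_pixel=BITS_PER_PIXEL):
    """Uncompressed video data rate in Gbit/s for the given mode."""
    return pixel_count(resolution) * refresh_hz * bits_per_pixel / 1e9


pixel_ratio = pixel_count(FOUR_K_BY_FOUR_K) / pixel_count(SANDY_BRIDGE_MAX)
sandy_gbps = raw_video_gbps(SANDY_BRIDGE_MAX)
four_k_gbps = raw_video_gbps(FOUR_K_BY_FOUR_K)

print(f"Pixel ratio (4Kx4K vs 2560x1600): {pixel_ratio:.3f}x")
print(f"2560x1600 @ {REFRESH_HZ}Hz: {sandy_gbps:.2f} Gbit/s")
print(f"4096x4096 @ {REFRESH_HZ}Hz: {four_k_gbps:.2f} Gbit/s")
print(f"Exceeds assumed DisplayPort 1.2 payload: {four_k_gbps > DISPLAYPORT_1_2_GBPS}")
```

Under these assumptions, an uncompressed 4Kx4K stream at 60Hz works out to roughly 24 Gbit/s, about 4.1 times the 2560 x 1600 figure. Real display links add blanking and encoding overhead, so treat these as lower bounds rather than exact requirements.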

https://www.techspot.com/news/45539-intel-ivy-bridge-gpu-to-support-4k-resolutions.html