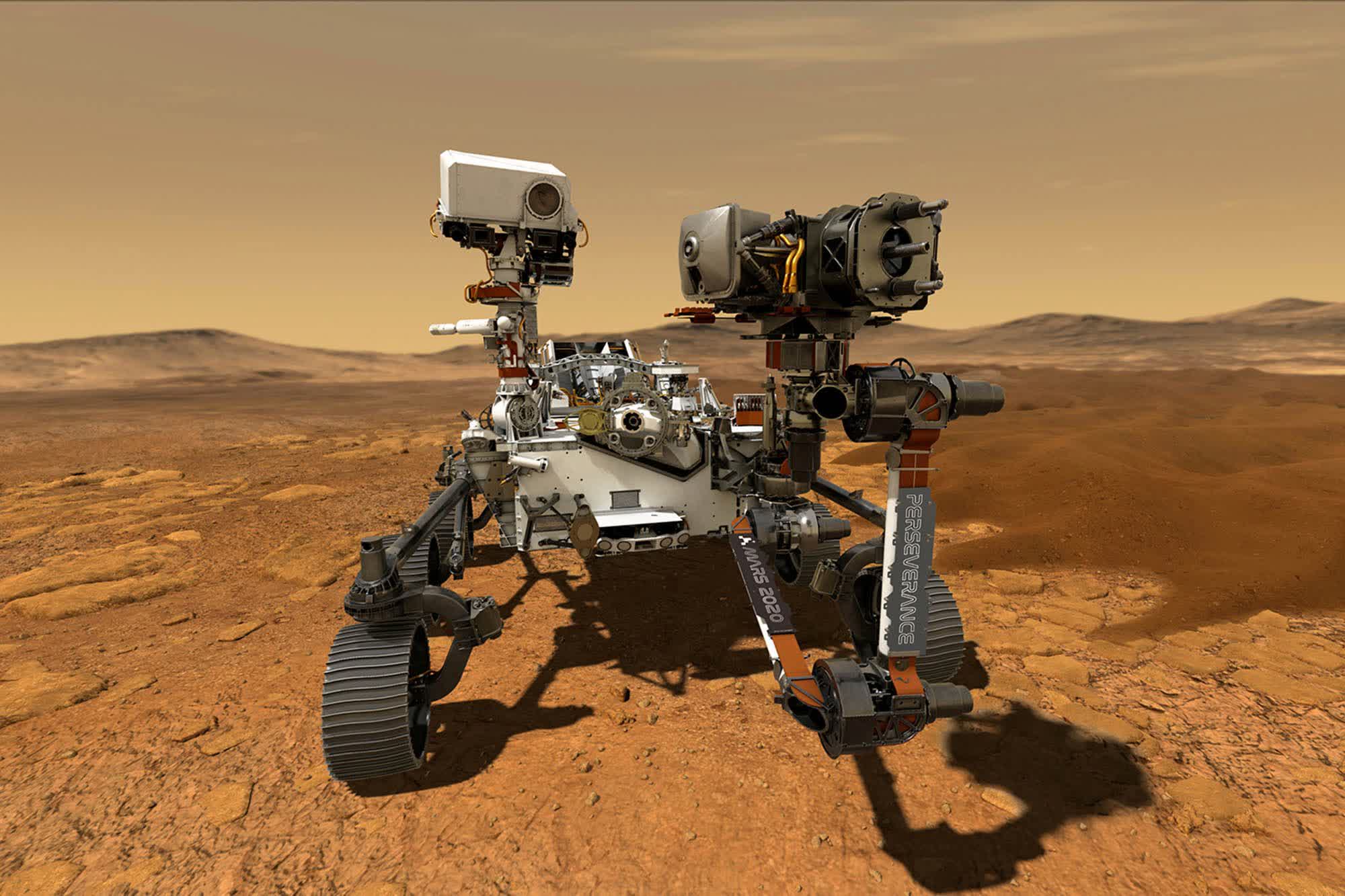

Editor's take: Conventional wisdom would suggest that when sending a robot to explore another planet, you’d want to outfit it with the latest and greatest technology available to maximize its potential. As it turns out, that wasn’t a top priority for NASA.

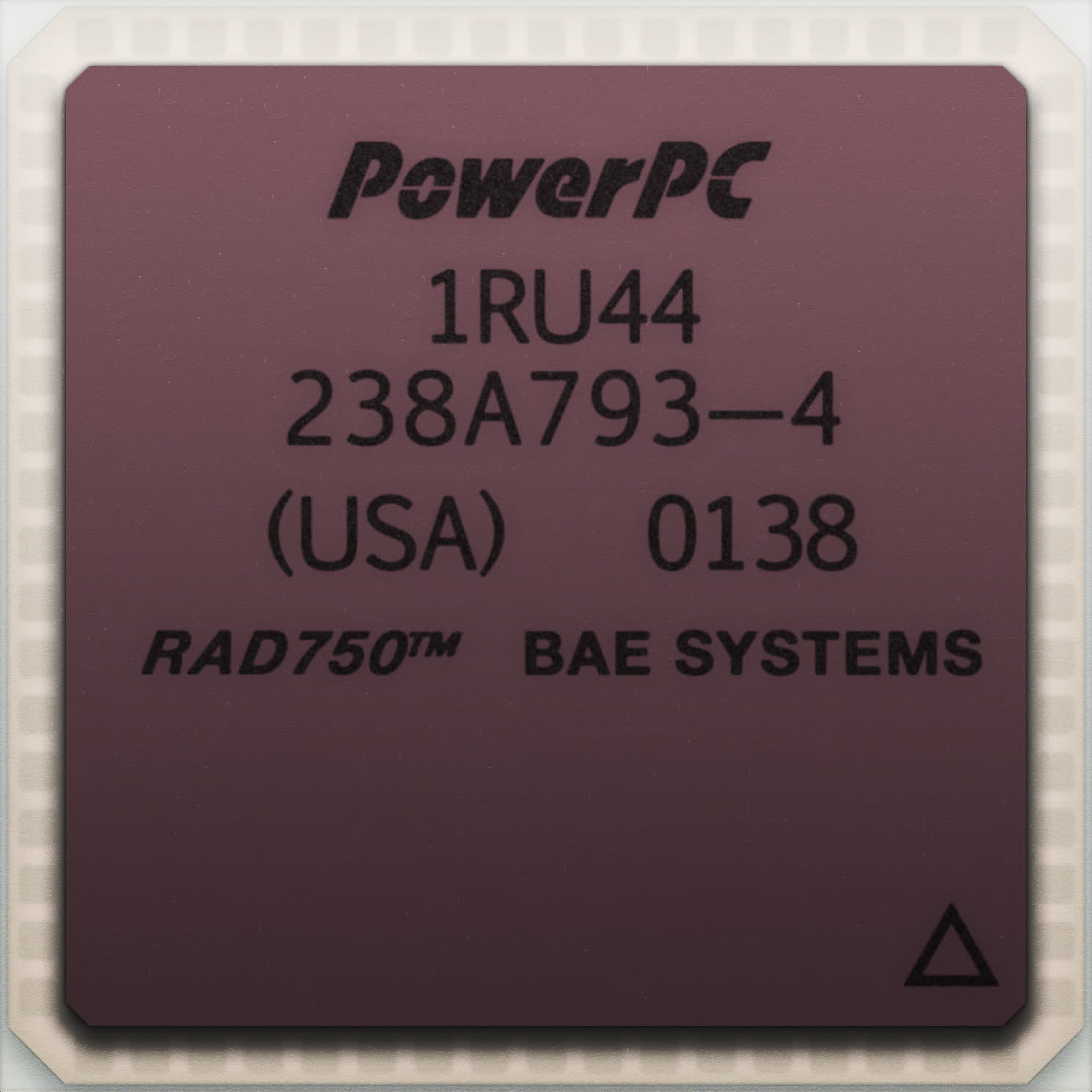

When the space agency’s Mars Perseverance rover touched down on the surface of the Red Planet last month, it did so with a modified PowerPC 750 processor on board. If you recall, that’s the same chip that shipped in Apple’s iconic iMac G3 way back in 1998.

Why outfit the rover with a CPU that is over two decades old? Because it has to work, that’s why.

The processor inside the Perseverance rover is a RAD750, a radiation-hardened variant of the PowerPC 750 made by BAE Systems. It packs just 10.4 million transistors and operates at up to 200 MHz. Critically, it can withstand up to a million rads of radiation and operating temperatures between -55°C and 125°C.

Because Mars’ atmosphere is far thinner than Earth’s, and the planet is farther from the Sun, anything sent there is susceptible to damage from radiation and extreme temperatures.

The RAD750 is also a proven performer, having been used successfully in more than 150 spacecraft, including the Kepler space telescope and the Curiosity rover.

https://www.techspot.com/news/88796-nasa-mars-perseverance-rover-powered-processor-1998.html