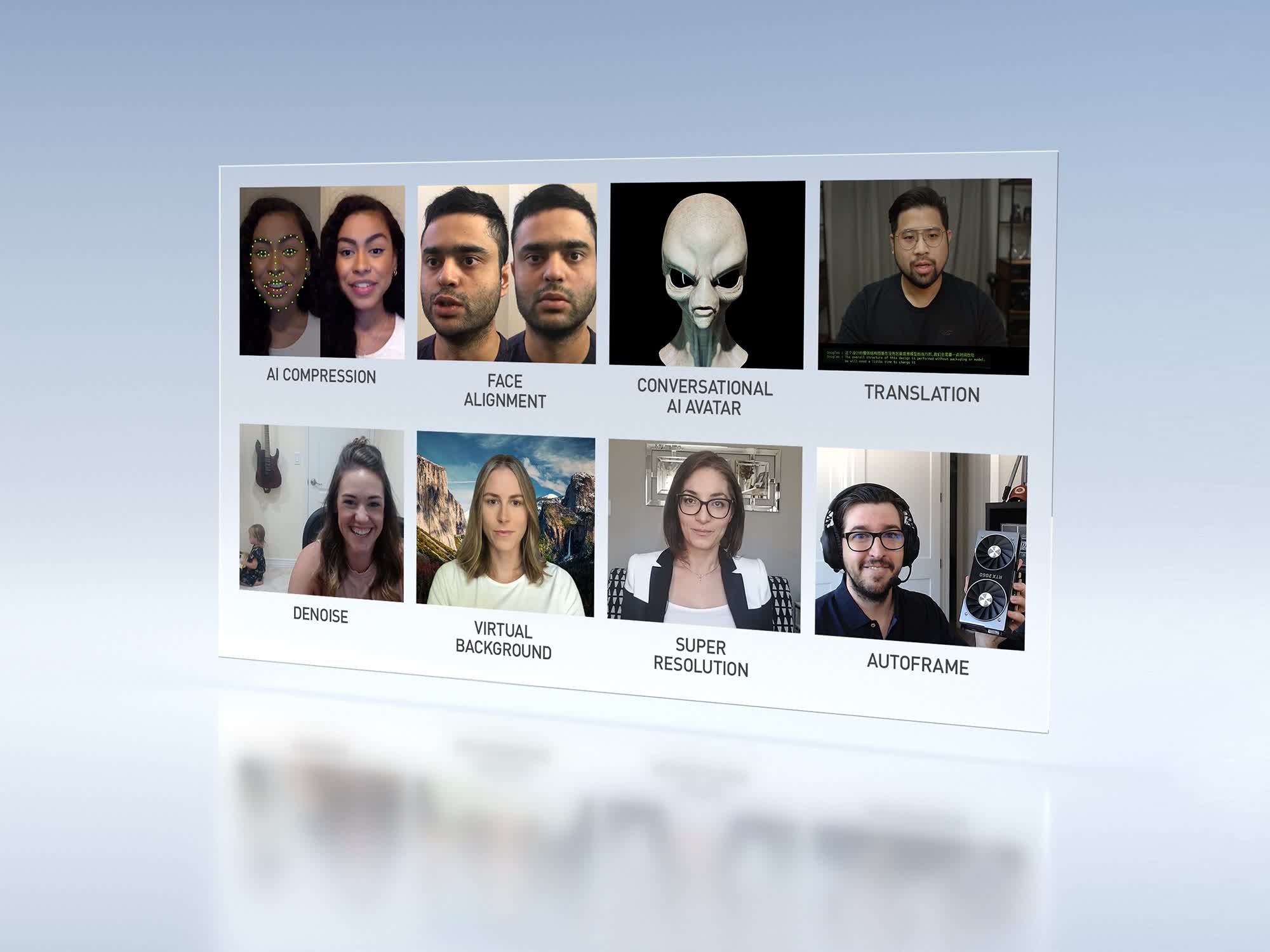

Forward-looking: With video conferencing becoming ever more prevalent, Nvidia thinks it can improve the video and sound quality of those daily conference calls. The company announced Maxine, a cloud-based artificial intelligence platform that developers can use to improve their video conferencing software. Features include resolution scaling, real-time translation, bandwidth reduction, closed captioning, auto-framing, and background noise removal.

To reduce bandwidth, Nvidia says that it uses the AI to analyze "key facial points" and then re-animate the face in the video. This avoids having to stream all of the pixels for your face. The company claims this cuts bandwidth consumption down to one-tenth of what H.264 compression requires. This could be very helpful for people who have limits on how much data they can use.
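To get a feel for why sending facial keypoints instead of pixels could save data, here is a rough back-of-envelope sketch in Python. It is not Nvidia's method or API: the keypoint count, coordinate size, frame rate, baseline bitrate, and data cap are all hypothetical values chosen for illustration, and only the one-tenth ratio comes from the company's claim.

```python
# Back-of-envelope sketch. Every number here is an illustrative assumption,
# not a figure published by Nvidia, except the one-tenth ratio it claims.


def keypoint_stream_kbps(num_keypoints: int, fps: int, bytes_per_coord: int = 4) -> float:
    """Raw bitrate (kbps) of sending an (x, y) pair per keypoint per frame."""
    bits_per_frame = num_keypoints * 2 * bytes_per_coord * 8
    return bits_per_frame * fps / 1000


def hours_within_cap(cap_gb: float, bitrate_kbps: float) -> float:
    """Hours of video that fit in a data cap at a constant bitrate."""
    cap_bits = cap_gb * 8e9  # 1 GB taken as 10^9 bytes
    seconds = cap_bits / (bitrate_kbps * 1000)
    return seconds / 3600


baseline_kbps = 1000.0              # hypothetical H.264 call bitrate
reduced_kbps = baseline_kbps / 10   # applying the claimed one-tenth ratio

# Hypothetical 68 keypoints at 30 frames per second, uncompressed.
print(f"keypoint stream: {keypoint_stream_kbps(68, 30):.2f} kbps")
# Hypothetical 10 GB monthly cap.
print(f"hours at baseline: {hours_within_cap(10, baseline_kbps):.1f}")
print(f"hours at one-tenth: {hours_within_cap(10, reduced_kbps):.1f}")
```

Under these assumptions, the same data cap stretches to ten times as many hours of calls, which is the practical upside for anyone on a metered connection.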

Nvidia's Maxine tools also include the ability to enable gaze correction and face alignment. This lets people appear to face each other in a call and simulate eye contact even if the person's camera isn't aligned with their screen. Developers can add animated avatars that move based on the person's voice and emotional tone. Finally, virtual assistants can be integrated into the conference call to do tasks such as take notes, set action items, and even audibly answer questions in a human-like voice.

“Video conferencing is now a part of everyday life, helping millions of people work, learn and play, and even see the doctor,” said Ian Buck, vice president and general manager of Accelerated Computing at Nvidia. “Maxine integrates our most advanced video, audio and conversational AI capabilities to bring breakthrough efficiency and new capabilities to the platforms that are keeping us all connected.”

Owners of RTX graphics cards recently received Nvidia Broadcast, which is a suite of tools that use specific RTX features to blur camera backgrounds and eliminate noise from incoming and outgoing audio.

Some of the features, such as gaze detection, closed captioning, real-time translation, and even animated faces, have already been implemented by some companies. However, Nvidia is pitching this as a way for any developer to use its cloud-based tools without having to buy expensive GPUs to take advantage of advanced AI tools. For now, only Avaya has signed up to use Maxine.

https://www.techspot.com/news/86989-nvidia-announces-maxine-cloud-based-ai-platform-improve.html