Nvidia on Wednesday launched its new GeForce 800M line of notebook GPUs, a complete overhaul of its mobile graphics lineup that promises more performance while consuming less power. Key to the latter half of that promise is Battery Boost, a driver-level governor of sorts that Nvidia says can deliver up to twice the battery life while gaming.

Nvidia’s new lineup spans several GPU architectures. The GTX 880M and 870M are built on Kepler, the same architecture used in the beastly GeForce GTX Titan Black for desktops. The 860M will be available in either Kepler or Maxwell form depending on OEM needs, while the 850M is Maxwell-only.

Two lower-end GPUs, the 840M and 830M, also use Maxwell, while the 820M is based on the older 28nm Fermi architecture.

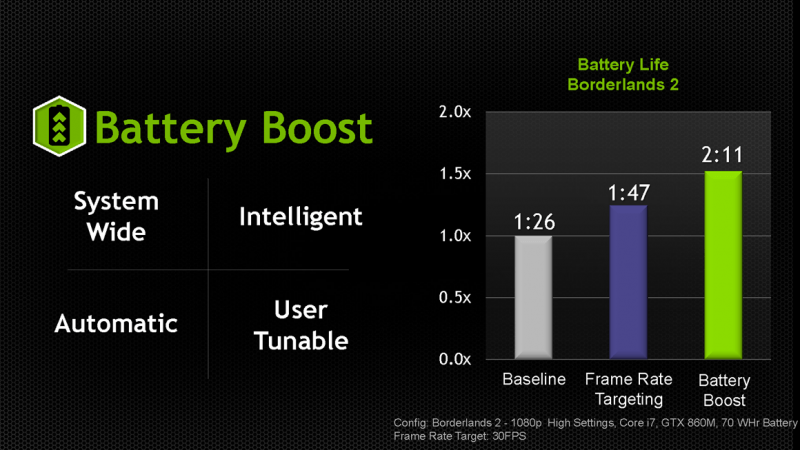

Battery Boost kicks in when a user fires up a game while the system is running on battery power. It lets players lock in a target frame rate, say 30 FPS, and by taking control of the GPU, CPU and memory subsystems, it ensures the frame rate never exceeds that target. This keeps components from drawing more power than needed and, in turn, extends battery life. It sounds pretty clever, but we’ll reserve final judgment until we can get a system in and test it firsthand.
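Why would a frame cap save power? At 30 FPS, each frame has a budget of roughly 33.3 ms. If the hardware can render a frame in, say, 12 ms, it can sit idle for the remaining 21 ms or so instead of immediately starting the next frame. The Python sketch below illustrates only that basic frame-limiting idea. It is not Nvidia's implementation: Battery Boost works at the driver level and also manages GPU, CPU and memory behavior, none of which is modeled here. The function names are hypothetical.

```python
import time

def frame_budget_ms(target_fps: float) -> float:
    """Time available to each frame at the target rate, in milliseconds."""
    return 1000.0 / target_fps

def idle_time_ms(render_ms: float, target_fps: float) -> float:
    """Time the hardware could stay idle after rendering one frame before
    the next frame is due. Zero if rendering already exceeds the budget."""
    return max(0.0, frame_budget_ms(target_fps) - render_ms)

def run_capped(render_frame, target_fps: float, frames: int) -> None:
    """Hypothetical frame limiter: call render_frame() no more than
    target_fps times per second by sleeping through leftover frame time."""
    budget_s = 1.0 / target_fps
    next_deadline = time.perf_counter()
    for _ in range(frames):
        render_frame()
        next_deadline += budget_s
        remaining = next_deadline - time.perf_counter()
        if remaining > 0:
            # Idle instead of rendering extra frames the cap would discard.
            time.sleep(remaining)
        else:
            # Rendering fell behind the target; reset rather than "catch up".
            next_deadline = time.perf_counter()
```

For example, `idle_time_ms(12, 30)` gives about 21.3 ms of slack per frame, while a frame that takes 50 ms leaves no slack at all. In that case a 30 FPS cap saves nothing because the hardware is already running flat out.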

Another feature coming to notebooks with the launch is ShadowPlay. This allows gamers to capture in-game footage to share with others or broadcast their gaming exploits to Twitch with virtually no performance impact.

Elsewhere, GameStream support will allow gamers to stream gameplay from their notebook to a GameStream-compatible device like the Nvidia Shield.

The 800M series is already showing up in a growing number of new notebooks from various manufacturers, such as the refreshed Razer Blade Pro.