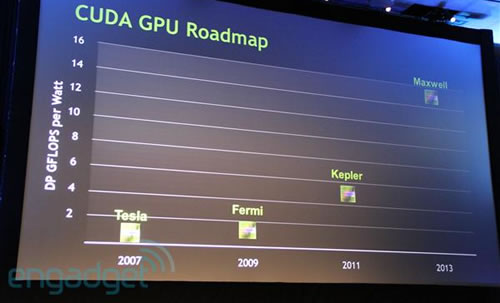

During its GPU Technology Conference today, Nvidia offered a glimpse at its plans for upcoming graphics hardware. Based on the CUDA GPU roadmap it shared, the company appears to intend to launch a new architecture every two years, though CEO Jen-Hsun Huang wouldn't explicitly commit to that cadence.

Codenamed Kepler, the next-gen chip should enter production this year and ship sometime in 2011. It uses 28nm fabrication tech and will boast three to four times the performance per watt of today's Fermi-based GeForce 400 products.

Further down the line, Nvidia expects to ship another architecture sometime in 2013. Known internally as "Maxwell," that GPU will again triple performance per watt, this time over Kepler, and bring a sixteen-fold increase in parallel graphics-based computing.

In addition to sharing its mainstream GPU roadmap, the company said it has partnered with The Portland Group (PGI) to develop a CUDA C compiler for x86 platforms, which will be demonstrated in November at the SC10 supercomputing conference.

https://www.techspot.com/news/40350-nvidia-teases-fermi-successor-plans-for-cuda-x86.html