Something to look forward to: Now that we’re fully clued up on Nvidia’s RTX 2080 Ti, 2080, and 2070, the focus has turned to what will presumably be the next entry in its 2000-series lineup: the RTX 2060. Little is known about the card, but we’ve just been given an idea of its performance, thanks to Final Fantasy XV.

The result, first spotted by Tom’s Hardware, appeared in the game’s benchmark database, which has listed unreleased graphics cards in the past, including AMD’s recent Radeon RX 590.

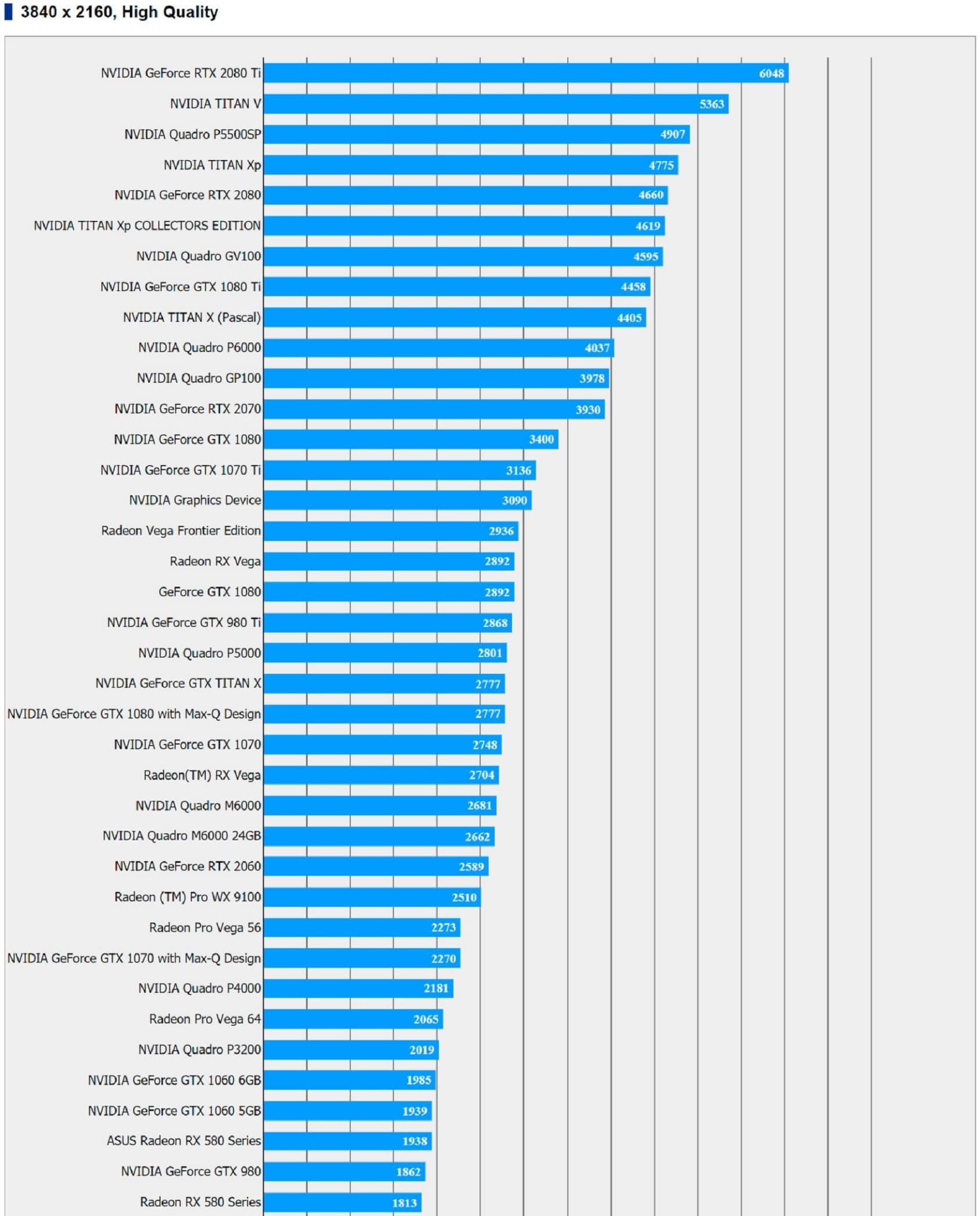

The benchmark was run at 3840 x 2160 with the graphics preset set to ‘High Quality.’ The RTX 2060 scored 2589 points, 30.43 percent more than its Pascal equivalent, the GTX 1060 (6GB), which scored 1985. The upcoming card’s score is only about 6 percent lower than that of the GTX 1070, which is still an excellent product that offers great value for money. The RTX 2060 also outscored the RX 590.

It’s important to note that the 2060 used in these benchmarks was most likely an engineering sample, so the final product could score slightly higher. That might not matter, though, if Nvidia prices it too high, something the company has been accused of doing with the RTX 2070 and the 2080 models.

Because the 2060 is more of a mid-range card, we don’t know for certain whether it will feature the same ray-tracing hardware found in the 2000-series’ high-end offerings. If it doesn’t, it might not even carry the RTX moniker.

The 2060’s release date is also a mystery, and it could be a while before the card arrives. Nvidia has a surplus of GTX 1060 cards following the sharp decline of the cryptomining industry, and it’s unlikely to launch their Turing successor until most of that stock has been sold off, which could take three to six months.

https://www.techspot.com/news/77548-nvidia-rtx-2060-shows-up-gaming-benchmark-almost.html