Forward-looking: Tech demos and trailers for upcoming games have given players tantalizing previews of Unreal Engine 5's capabilities. So far, however, the only two released products using the engine are a Matrix-themed demo and recent Fortnite updates. EA's Immortals of Aveum looks to be one of the earliest signs of what kind of PC you'll need to experience UE5 features like Lumen and Nanite.

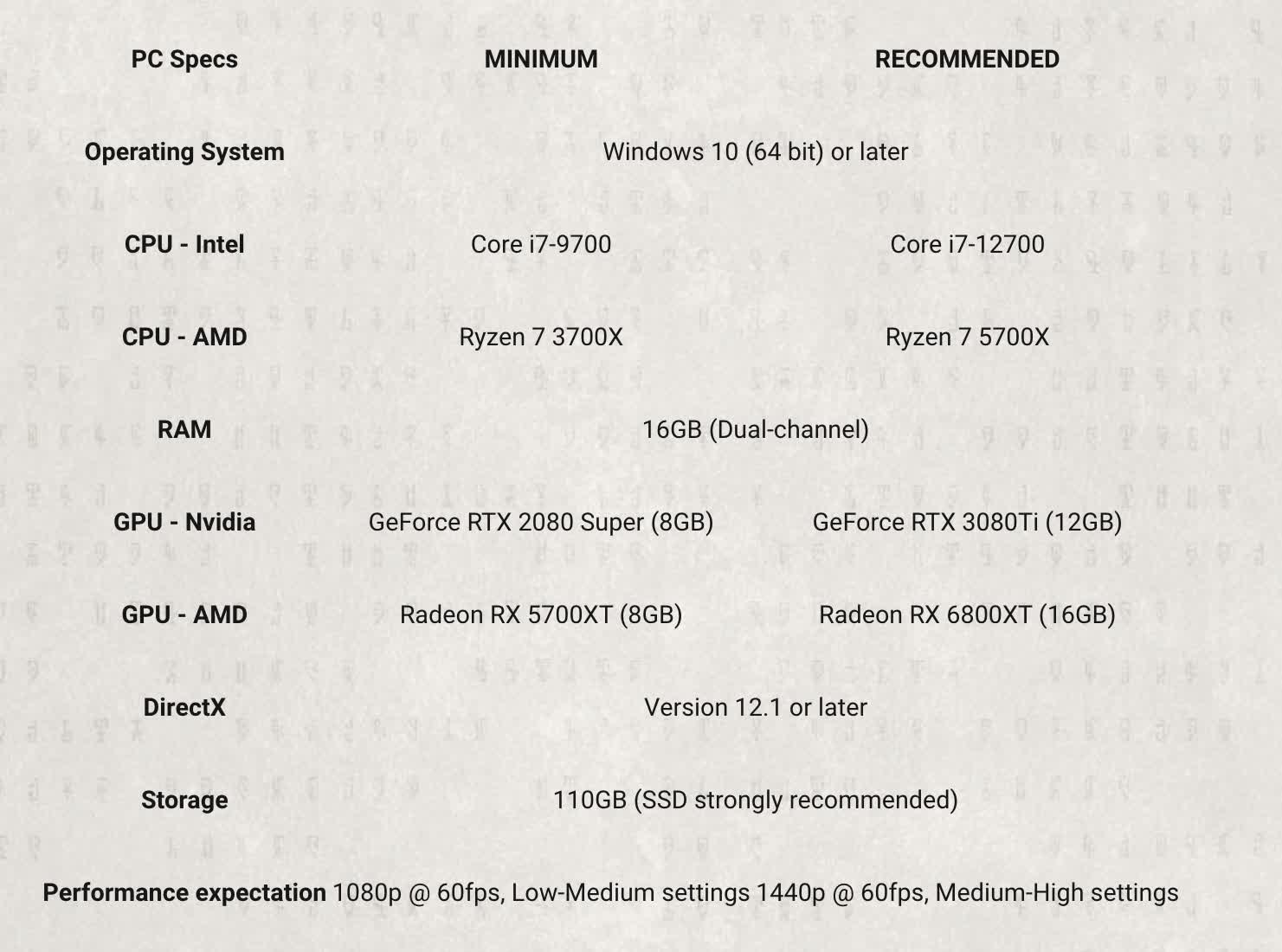

Immortals of Aveum, an upcoming magic-themed FPS from EA Originals, will require a GeForce RTX 2080 Super at minimum for 1080p 60fps gameplay at low or medium settings. It will be one of the first Unreal Engine 5 games to release when it launches this July, so its system specs certainly make a statement about what AAA games may require going forward.

Several recent and upcoming PC games that have left the PlayStation 4 and Xbox One behind list the weaker RTX 2070 Super in their recommended specs. These include Star Wars Jedi: Survivor, The Last of Us Part 1, and Returnal. Unreal Engine 5 may prove more demanding than those already visually impressive games.

What's odd, however, is the noticeable performance gap between the 2080 Super and the GPU Aveum lists as its AMD counterpart, the RX 5700 XT. That pairing implies Nvidia cards like the 2070 Super, 3060 Ti, or GTX 1080 Ti might suffice, but Aveum developer Ascendant Studios specifically told The Verge that players will want at least a 2080 Super, a 3070, or a 5700 XT.

Video memory, which has recently become a controversial topic because of the limited amounts on recent Nvidia GPUs, doesn't explain the discrepancy: the 2080 Super and 5700 XT both have only 8GB. Yet Ascendant said that, for some reason, the 12GB RTX 3060 wouldn't cut it.

The company cited ray tracing as an important element of Aveum and a factor in its system requirements, but the 5700 XT has no RT cores. Because the game uses Lumen, it will likely rely on software-based ray tracing, which doesn't need RT cores.

Lumen, one of the main UE5 features Epic Games has hyped, combines ray tracing with other techniques to simulate how light bounces around environments. Fortnite, the only released UE5 PC game so far, lets players run Lumen in either software or hardware mode. Hardware mode looks more realistic but carries a higher performance cost.

The recommended spec for Aveum, which targets 1440p gameplay at 60fps on medium-to-high settings, lists an RTX 3080 Ti or RX 6800 XT. Interestingly, unlike some recent high-end games, Aveum doesn't recommend 32GB of system RAM; 16GB will do. However, the game does follow the ongoing trend of gargantuan storage requirements, asking for 110GB in this case.

The minimum CPU requirements list an i7-9700 or Ryzen 7 3700X, but Ascendant said an i5-10600, 11500, 12400, or 13400 should also work. The recommended tier lists an i7-12700 or Ryzen 7 5700X, though a Ryzen 7 5700G or 7700 is also fine.

Unreal Engine 5's other headline feature, Nanite, is also in Aveum. It dynamically adjusts the geometric detail of objects and environments based on their distance from the camera and renders only what players can actually see.

World Partition, UE5's technique for streaming massive environments without loading screens, is also included. It makes levels 20 to 30km long possible, though the developer says Aveum isn't an open-world game.

As extreme as Aveum's system requirements seem, they appear very close to Fortnite's "Epic" quality requirements, which likely assume Lumen and Nanite are enabled. Although that makes for a sample size of only two games, it could indicate the system requirement range to expect from future UE5 titles.

Immortals of Aveum releases for PC, PlayStation 5, and Xbox Series consoles on July 20. Ascendant is still optimizing the game, so the system requirements could change before then.

Other UE5 games likely coming out in 2023 include Tekken 8 and the remake of Silent Hill 2, but it's unclear whether they'll use Lumen, Nanite, or World Partition.

https://www.techspot.com/news/98397-one-first-commercial-unreal-engine-5-games-immortals.html