Rumor mill: With the RTX 3080 Ti’s announcement and launch supposedly just a few weeks away, a purported image showing one of MSI’s cards has leaked. The MSI RTX 3080 Ti SUPRIM X in the photo reportedly came from a distribution center and is said to be the final retail product.

Rumors that an RTX 3080 Ti card was in the works have been circulating since November. Alleged details of the card have changed since then; once believed to feature 20GB of GDDR6X, it seems Nvidia has instead opted for 12GB of GDDR6X at 19 Gbps. Virtually every month of 2021 has seen a predicted launch date, but it looks increasingly likely that it will land in May.

A photo of what’s claimed to be pallets of MSI cards, including the GeForce RTX 3080 Ti Ventus 12G OC, was published earlier this month. Now, a VideoCardz source has provided an image of the MSI RTX 3080 Ti SUPRIM X from one of the distribution centers that have received the next Ampere release.

The SUPRIM X is MSI’s flagship model in the series, offering the best performance and materials. The RTX 3090 version, for example, features a nickel-plated copper baseplate and precision-machined heat pipes.

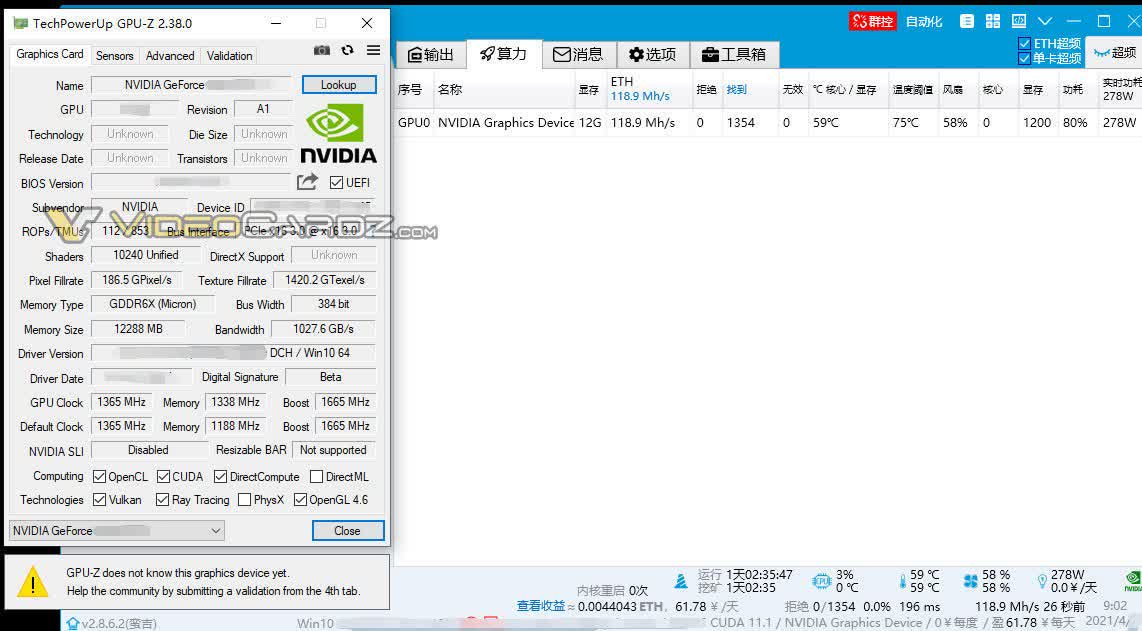

A recent GPU-Z screenshot of the RTX 3080 Ti showed it with 10,240 CUDA cores, a 384-bit memory bus, a 1,365 MHz base clock, and a 1,665 MHz boost clock. The card is expected to come with a mining limiter and is thought to have an MSRP of $1,099. Rumors put the announcement date at May 18, with a release on May 26. Just don’t expect it to be any easier to buy than any other graphics card right now.

https://www.techspot.com/news/89504-alleged-photo-msi-geforce-rtx-3080-ti-suprim.html