The big picture: Details about Intel's upcoming Raptor Lake series have slowly been leaking over the past few months. After today's review of a retail unit of the i9-13900K, the only piece missing from the puzzle is pricing, which will hopefully be competitive against Team Red's Zen 4 lineup.

Hardware leakers ECSM and OneRaichu recently posted an in-depth review of a retail unit of Intel's upcoming Core i9-13900K flagship CPU. They have since removed the article (likely at Intel's request), but the charts and results are luckily still circulating on the web.

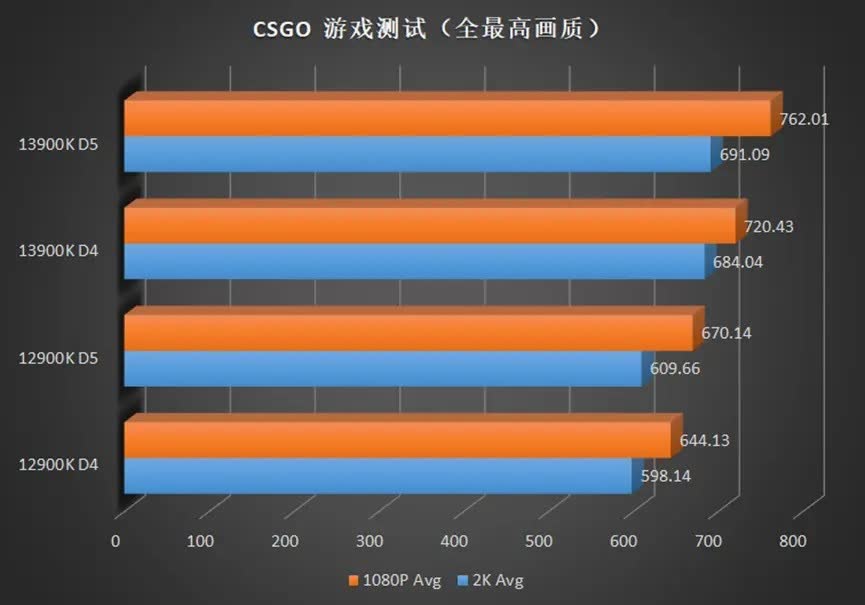

First up, a few notes about the test systems used. The reviewers compared the Raptor Lake processor with its predecessor, the i9-12900KF, and tested them with both DDR5-6000 CL30 and DDR4-3600 CL17 memory. A beefy 360mm AIO cooler kept temperatures (somewhat) under control, while an AMD Radeon RX 6900 XT was used for the gaming benchmarks.

ECSM tested the CPUs with their power limits disabled in the BIOS, which saw the i9-13900K draw up to 343W during AIDA64's FPU test (vs. the i9-12900KF's 236W). Users wanting to manually overclock the i9-13900K will likely require sub-ambient cooling solutions.
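To put those two power figures in perspective, here is a minimal sketch of the arithmetic. It uses only the peak draws quoted above; the function and variable names are ours for illustration, not something from the leaked review.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


# Peak draw during AIDA64's FPU test with power limits disabled,
# as quoted in the article (watts).
power_12900kf_w = 236
power_13900k_w = 343

increase = percent_increase(power_12900kf_w, power_13900k_w)
print(f"i9-13900K peak draw is {increase:.1f}% higher")
```

That works out to roughly 45 percent more power in this one stress test. It says nothing about efficiency in other workloads, since the performance numbers in the review come from different benchmarks.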

Apart from the core count and frequency increases we've talked about before, the reviewers noted the Raptor Lake CPU has a new ring bus design working at a higher frequency. The i9-13900K has more L2 and L3 cache, with bandwidth and latency also seeing improvements.

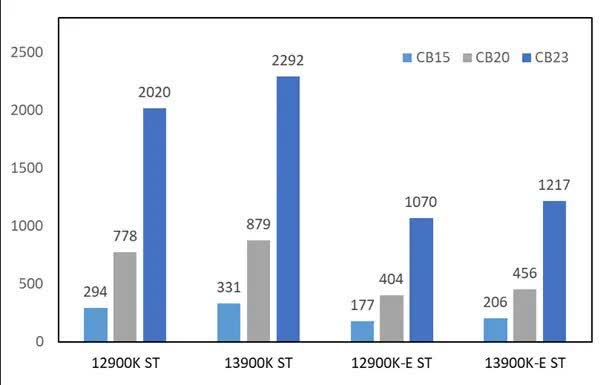

In Cinebench R23's single-threaded test, the i9-13900K's P-core (Raptor Cove) was 13 percent faster than its Golden Cove-based predecessor, with the E-cores seeing a similar uplift. Meanwhile, multithreaded performance was, on average, 42 percent higher on the 13th-gen chip. The new CPU provides decent gains in games as well, with CS:GO seeing the biggest improvement, especially in minimum framerates.

Intel will officially announce the Raptor Lake lineup at its Innovation event next week, with retail availability starting in late October. The new chips will compete with AMD's Zen 4 series, which launches on September 27.

https://www.techspot.com/news/96027-retail-core-i9-13900k-cpu-reviewed-provides-impressive.html