Why it matters: An RTX 3070 with 16 gigabytes of VRAM will likely only ever exist in the hands of a few hardware enthusiasts, whose mods include an RTX 3070 with a physical switch for memory capacity. A Taiwanese company has created a specialized 16GB RTX 3070 model for digital signage companies, but it's not something gamers will be able to buy from a retail store.

VRAM capacity on modern video cards is one of the hottest topics of discussion among PC enthusiasts, and for good reason – Nvidia has made spacious frame buffers a luxury with the RTX 40 series. For instance, an RTX 4070 with 12 gigabytes of GDDR6X memory is priced at around $600, and Team Green only recently bowed to public pressure with the announcement of an RTX 4060 Ti with 16 gigabytes of VRAM for a suggested price of $499.

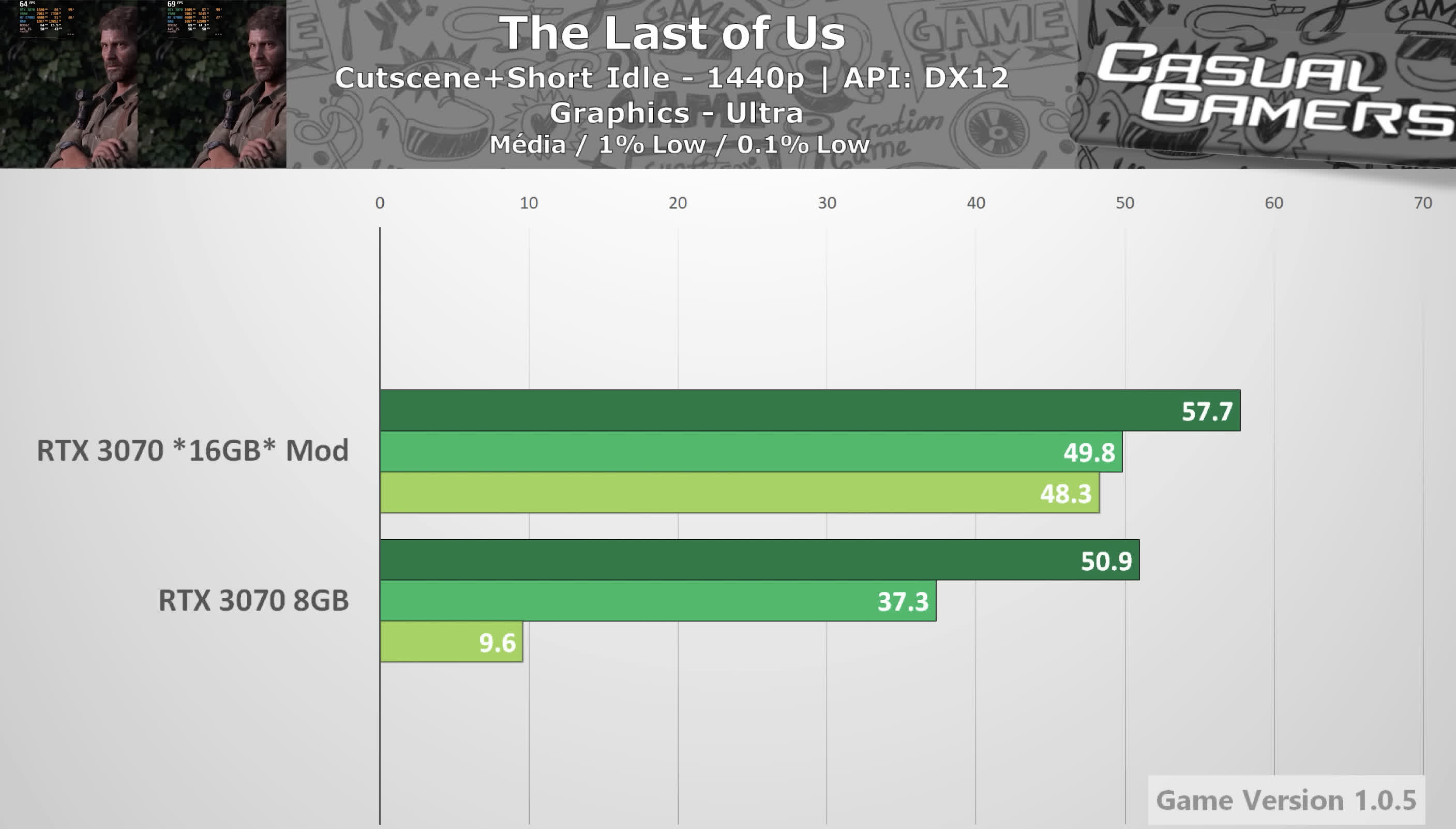

The modding community has recently come up with interesting projects that show Nvidia could have easily equipped some RTX 30 series cards with more memory. In the case of the RTX 3070, which normally comes with 8 gigabytes of VRAM, playing newer AAA titles with Ultra settings and high-resolution textures is an exercise in frustration. That said, an experiment that doubled the VRAM capacity showed the card is certainly capable of respectable frame rates even as it inches towards its third anniversary.

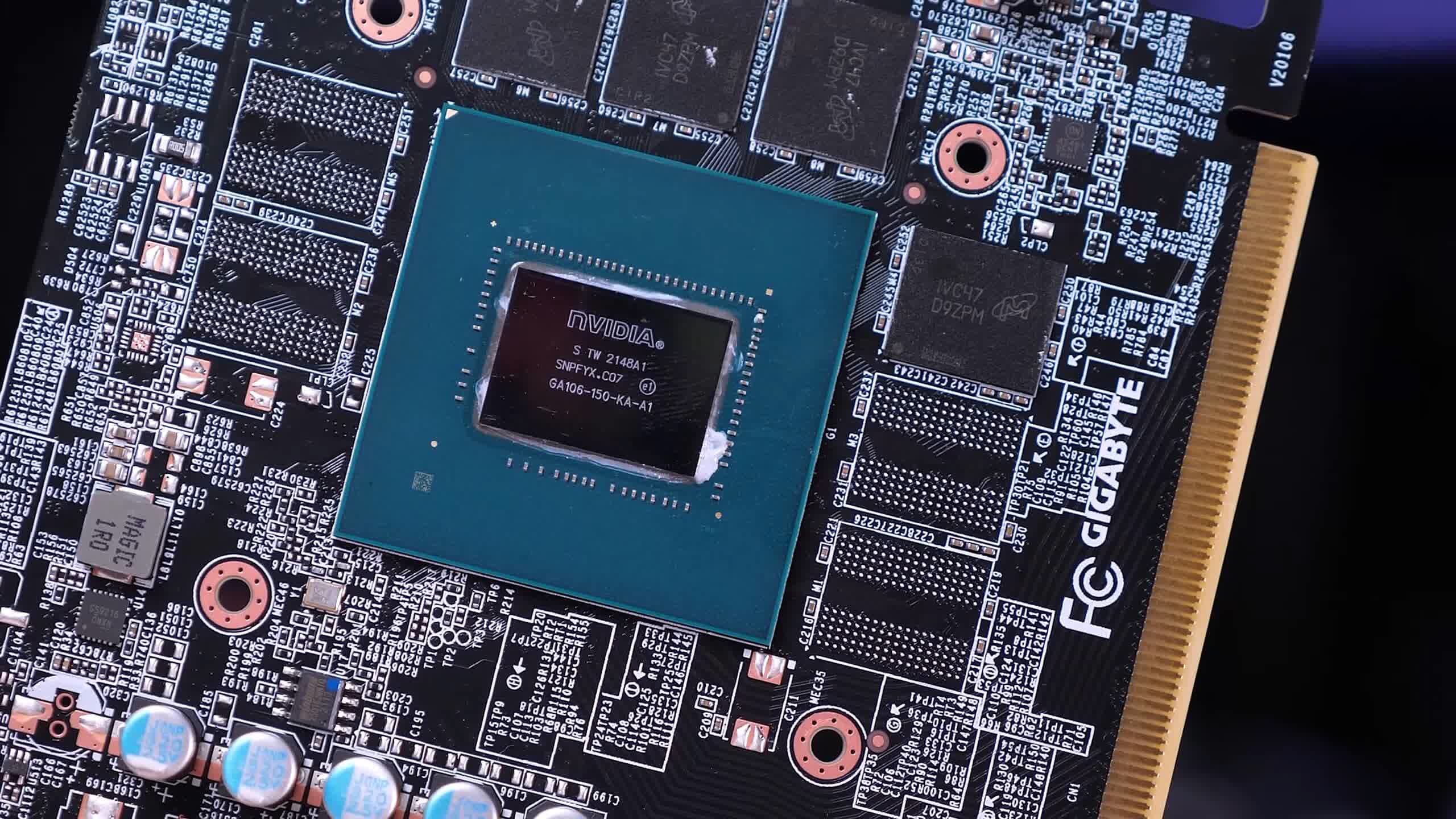

A more recent project reveals a similar alteration for the RTX 3070. YouTuber Paulo Gomes teamed up with Casual Gamers to create what is likely the only Nvidia card with a physical switch for memory capacity. The switch makes no sense for gamers who simply want the higher VRAM capacity, but the mod itself is an idea that could prove useful to reviewers looking to limit-test a graphics card. It would certainly be less expensive than, say, testing the 16GB RTX A4000 against the 8GB RTX 3070 like our own Steven Walton did earlier this year.

Otherwise, the new mod once again confirms that an RTX 3070 with 16 gigabytes of GDDR6 is perfectly capable of running resource-intensive games like The Last of Us Part I at 1440p using the Ultra quality preset. As you can see from the graph above, the card can achieve a slightly higher average frame rate as well as significantly better one percent and 0.1 percent lows.

Nvidia might never officially release a 16-gigabyte RTX 3070, but that won't stop some custom board vendors from trying. An interesting tidbit from Computex this year is that a small Taiwanese company called Gxore seems to have done just that.

That said, Gxore isn't listed as an official Nvidia partner, so this is likely a low-volume product targeting Chinese digital signage providers. A dead giveaway is that this unusual RTX 3070 model has no fewer than eight mini-DisplayPort outputs, which should come in handy for driving video walls.

H/T: Paulo Gomes | Casual Gamers

https://www.techspot.com/news/98912-rtx-3070-mod-adds-physical-switch-vram-capacity.html