In brief: The ability to create fabricated video footage has existed for decades, but doing so convincingly has historically required a great deal of time, money and skill. These days, deepfake systems make it trivially easy to produce stunningly accurate doctored footage, and thanks to Samsung’s latest research, even less effort is now required.

Deepfakes traditionally require a large amount of source imagery – training data – to work their magic. Samsung’s new approach, dubbed few- and one-shot learning, can train a model on as little as a single still image. Accuracy and realism improve as the number of source images increases.

For example, a model trained on 32 photographs will be more convincing than a version trained on a single image – but even the single-image results are stunning.

As exhibited in a video accompanying the researchers’ paper, the technique can even be applied to paintings. Seeing the Mona Lisa, a work that exists solely as a single still image, come to life is quite fascinating and fun.

The downside, as Dartmouth researcher Hany Farid highlights, is that advances in these sorts of techniques are bound to increase the risk of misinformation, fraud and election tampering. “These results are another step in the evolution of techniques ... leading to the creation of multimedia content that will eventually be indistinguishable from the real thing,” Farid said.

That’s great if you’re watching a fictional movie but not so much when tuning in to the evening news.

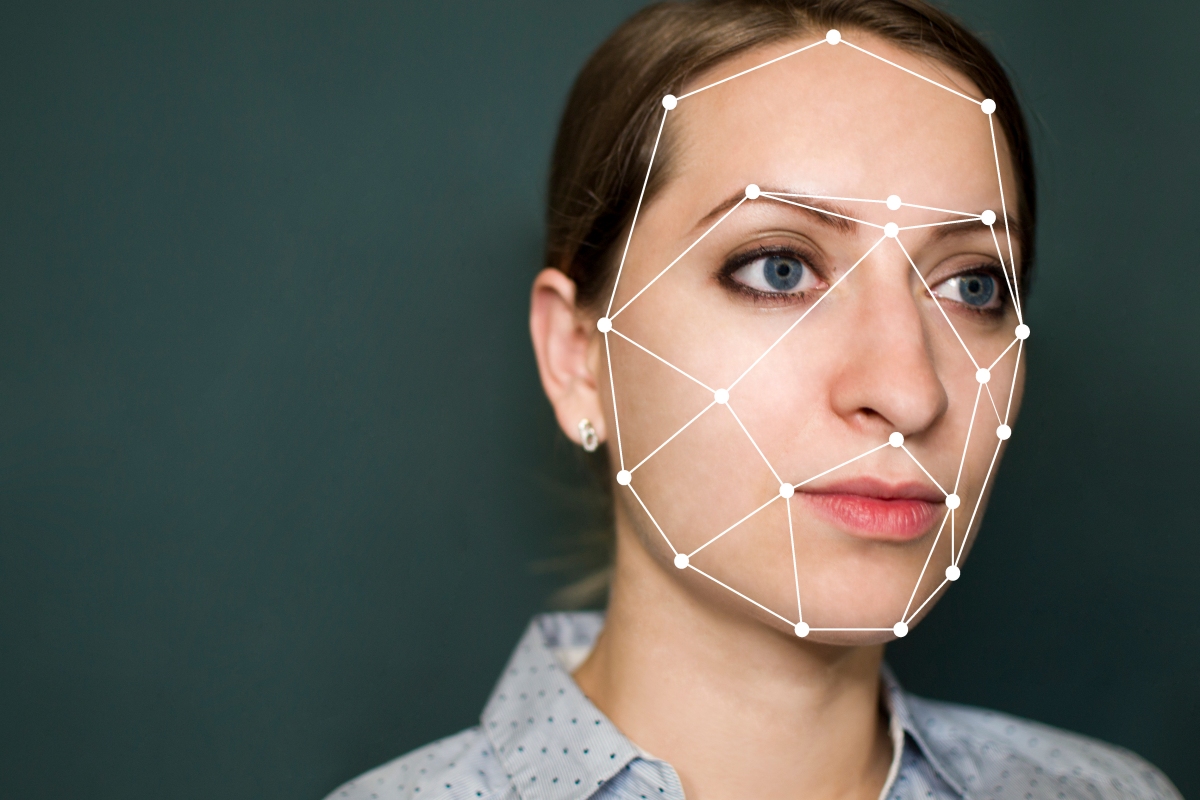

Image credit: Face Recognition of a woman by Mihai Surdu