Perceptual computing seems to be on a lot of people’s minds lately. From a quest to find the Ultimate Coder to the worldwide Intel Perceptual Computing Challenge to a free Perceptual Computing SDK, there are plenty of opportunities for developers to get involved in this cutting-edge exploration of how humans interact with computers.

This is a guest post by Wendy Boswell, technical blogger/writer at Intel. She's also the editor of About Web Search, part of the New York Times Company.

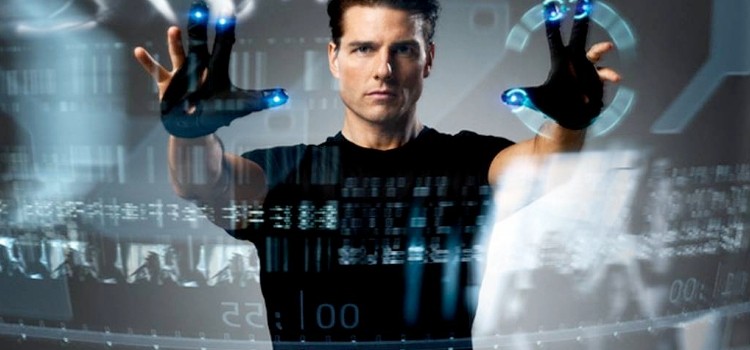

If you can picture controlling your computer with just your voice or a wave of your hand, rather than a mouse, a keyboard, or even a touchscreen, then you can see the beginnings of what perceptual computing is capable of. Perceptual computing focuses on natural human interactions with machines, such as facial recognition, voice commands, and gesture swiping, in addition to the familiar control devices many of us have literally grown up with. Responsive computing tailored to each person’s unique needs is really what perceptual computing is all about.

There’s a lot of exciting work going on in this space, and in this article, we’re going to focus on a few of the more inspiring and innovative explorations that developers are taking on.

My, Oh Myo

One of the more interesting waves of development coming out of the perceptual computing movement is new user interfaces. How about a wearable armband that tracks the muscle movements in your arm and controls your computer through a series of gestures? Watch the video of Thalmic Labs’ Myo device below:

As you can see from the video, the Myo works with devices that you already have in your home or office. Presentations can be controlled with a flick of the wrist, video games reach a whole new level of interaction, and browsing the web and watching videos become completely different experiences. More about this intriguing device, from the Myo FAQ:

“What sort of precision does the MYO have?

The MYO detects gestures and movements in two ways: 1) muscle activity, and 2) motion sensing. When sensing the muscle movements of the user, the MYO can detect changes down to each individual finger. When tracking the position of the arm and hand, the MYO can detect subtle movements and rotations in all directions!

How quickly does it detect gestures?

Movements can be detected very quickly - sometimes, it even looks like the gesture is recognized before your hand starts moving! This is because the muscles are activated slightly before your fingers actually start moving, and we are able to detect the gesture before that happens.” – Myo FAQ

Use the Force, Luke

Ever wanted to control something with your thoughts, Jedi-style? A team of researchers at the University of Minnesota has built a quadcopter, a small four-rotor helicopter, that can be controlled by the electrical activity of the brain.

More about this amazing technology and how it works:

“The team used a noninvasive technique known as electroencephalography (EEG) to record the electrical brain activity of five different subjects. Each subject was fitted with a cap equipped with 64 electrodes, which sent signals to the quadcopter over a WiFi network. The subjects were positioned in front of a screen that relayed images of the quadcopter's flight through an on-board camera, allowing them to see the course the way a pilot would. The plane, which was driven with a pre-set forward moving velocity, was then controlled by the subject's thoughts.” – LiveScience.com, “Tiny Helicopter Piloted by Human Thoughts”

The lead researcher on this project, Bin He, sees many more uses for this new human-computer interface, including helping people with paralysis:

"Our study shows that for the first time, humans are able to control the flight of flying robots using just their thoughts, sensed from noninvasive brain waves," said Bin He, lead scientist behind the study and a professor with the University of Minnesota's College of Science and Engineering. "Our next goal is to control robotic arms using noninvasive brain wave signals….with the eventual goal of developing brain-computer interfaces that aid patients with disabilities or neurodegenerative disorders."

Gesture recognition and Wi-Fi

You’ve probably heard of smart houses, right? Researchers in the University of Washington’s Department of Computer Science and Engineering are taking that concept a few steps further, combining gesture recognition with the wireless technology most people already have in their homes:

"Forget to turn off the lights before leaving the apartment? No problem. Just raise your hand, finger-swipe the air and your lights will power down. Want to change the song playing on your music system in the other room? Move your hand to the right and flip through the songs. University of Washington computer scientists have developed gesture-recognition technology that brings this a step closer to reality. They have shown it's possible to use Wi-Fi signals around us to detect specific movements without needing sensors on the human body or cameras. By using an adapted Wi-Fi router and a few wireless devices in the living room, users could control their electronics and household appliances from any room in the home with a simple gesture." – Washington.edu, “Wi-Fi signals enable gesture recognition throughout entire home”

Watch a video of this technology in action below:

This technology, called WiSee, doesn’t require a complex installation of cameras or sensors in every room. Instead, it uses existing technology (the Wi-Fi signals already present in the home) in a new way, picking up the tiny Doppler shifts that a moving body causes in those signals. All sorts of household tasks could conceivably be simplified using this technology.

What’s next in perceptual computing?

Building on existing technology seems to be a common theme across all three projects highlighted in this article, with new ways for humans and computers to interact at the forefront.

Recently, Valve’s Gabe Newell sat down with the Intel video team to share his impressions of perceptual computing as it relates to game development. Check out the video below for his views on how new kinds of input, such as measured heart rate, perceived emotional state, contextual environmental cues, and hand and eye movements, are shaping the future of gaming experiences.

What do you think is coming next in perceptual computing? Share with us in the comments.

https://www.techspot.com/news/53209-the-next-wave-of-computing-is-perceptual.html