In context: Taiwan Semiconductor Manufacturing Company (TSMC) is on track to begin volume production of chips based on its 3nm manufacturing process in the second half of 2022. If the report is accurate, we could be on the cusp of a major advancement in mobile processing technology.

According to a recent report from DigiTimes, the semiconductor foundry “has been engaged in the installation of 3nm process fabrication lines and related facilities” and is still on schedule to complete the project as originally planned. TSMC will reportedly be ready for risk production next year, ahead of the aforementioned volume production in the second half of 2022.

Should these dates hold, one of TSMC’s biggest clients, Apple, could have its first 3nm-based SoC ready in time for inclusion in 2022 model iPhones.

In the interim, TSMC is said to be volume producing 5nm chips and working on enhanced process variants. Apple’s next-gen iPhone, tentatively dubbed the iPhone 12, is expected to ship with a 5nm-based SoC that could be called the Apple A14 Bionic. Early benchmarks of the alleged A14 look promising, and with 3nm on the horizon, we could soon be entering a new era of previously unseen power and efficiency.

3nm is approaching the limit of what might be possible in terms of die shrinks, at least for the foreseeable future. That said, it was reported last year that TSMC was looking into 2nm production. Intel, meanwhile, has a roadmap that involves 1.4nm production, although not until 2029.

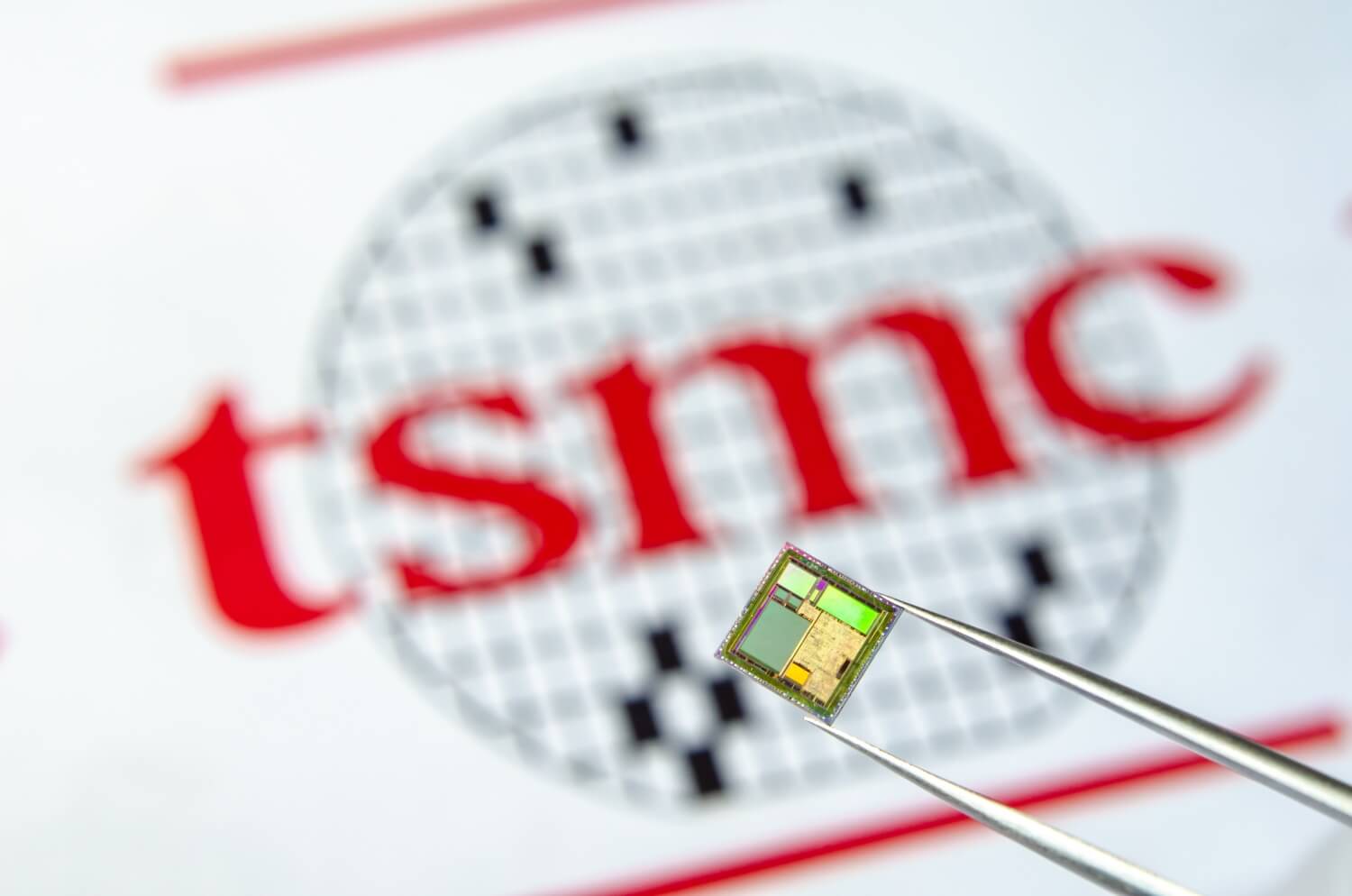

Masthead credit: Ascannio