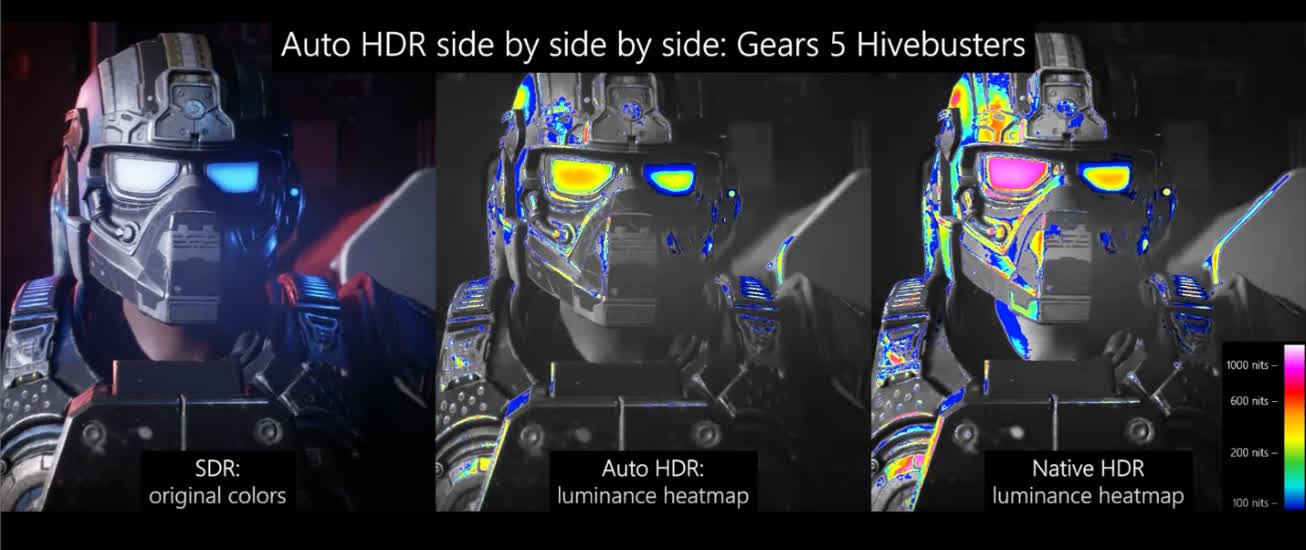

In brief: One of Windows 11's most attractive features is the ability to add HDR to over 1,000 DirectX 11 and DX12 games that don't officially support it. Now, Microsoft gives users more control over Auto HDR and makes it easier to access.

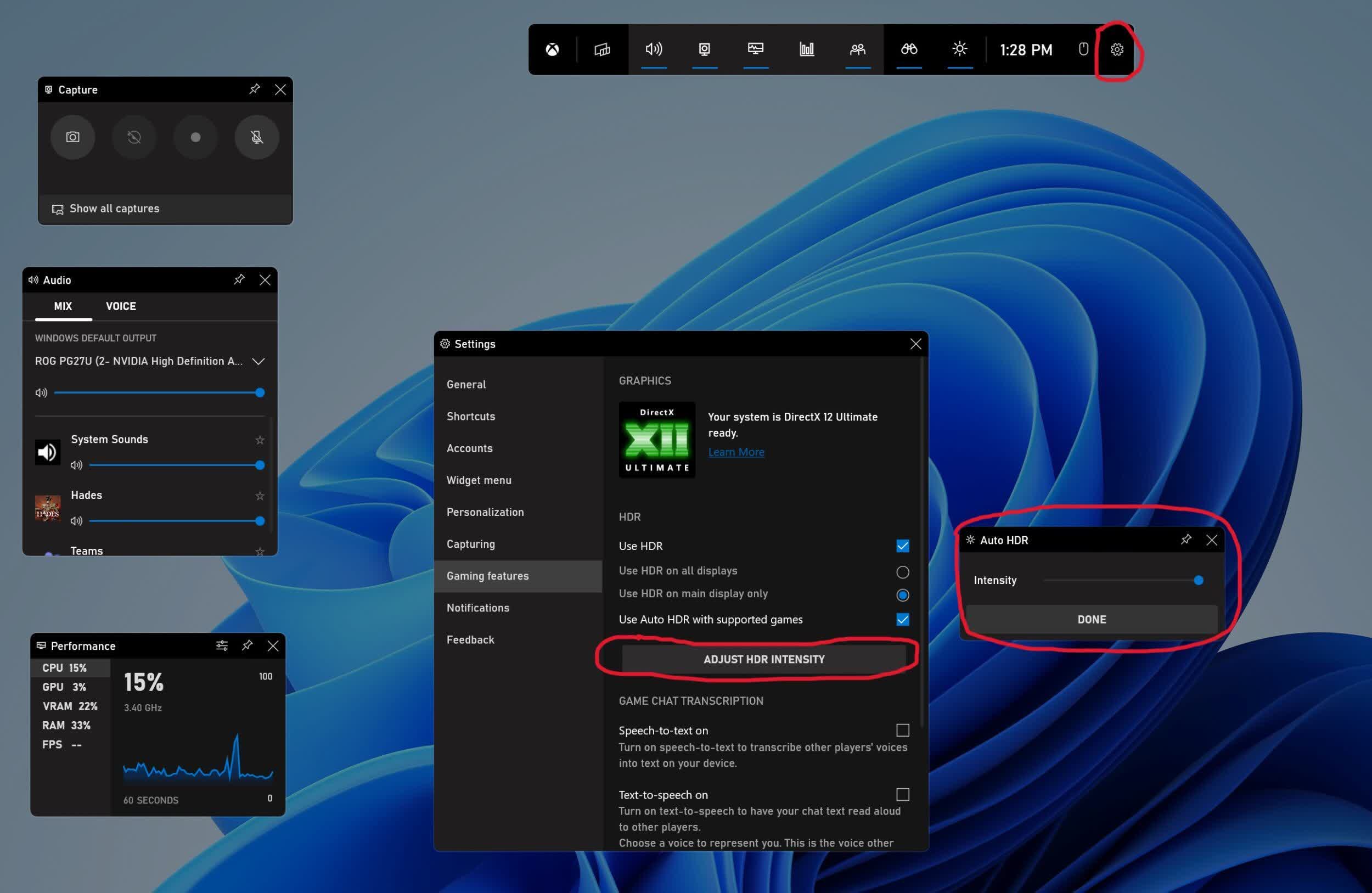

Microsoft recently announced an update to the Windows 11 Game Bar that adds an Auto HDR Intensity slider and remembers the setting for each game. The update also makes the on/off toggle easier to reach. The slider requires Game Bar version 5.721 or later, which users can get by updating through the Microsoft Store app.

Games with their own HDR implementation usually come with adjustment settings. Until now, however, Auto HDR in Windows 11 offered only an on/off switch for every other game. That one-size-fits-all approach isn't equally effective across the more than 1,000 games Auto HDR supports.

Getting to the slider is simple. First, press Win+G to bring up the Game Bar overlay. Once there, the slider is under Settings > Gaming Features > Adjust HDR Intensity. Clicking the adjust button brings up the slider in a separate window, which users can pin to the Game Bar. Auto HDR's on/off toggle is right above the adjust button.

Microsoft also gave Windows Insiders the ability to switch off Auto HDR notifications, acknowledging that some users found them excessive. The switch is under Settings > System > Notifications. Alternatively, users can keep the notifications but stop them from playing sounds or showing banners.

https://www.techspot.com/news/94454-windows-11-users-can-now-adjust-auto-hdr.html