Forward-looking: A recent Instagram post from Meta CEO Mark Zuckerberg announced the company's plans to invest heavily in Nvidia hardware and other AI initiatives by the end of 2024. Zuckerberg outlined Meta's intent to step up work on an open-source artificial general intelligence capable of supporting everything from productivity and development to wearable technologies and AI-powered assistants.

Zuckerberg highlighted Meta's upcoming infrastructure investment, stating that the company will have around 350,000 Nvidia H100 GPUs by the end of the year, or roughly 600,000 H100 equivalents of compute once its other GPUs are counted. In case you were wondering, that's a lot of compute power.

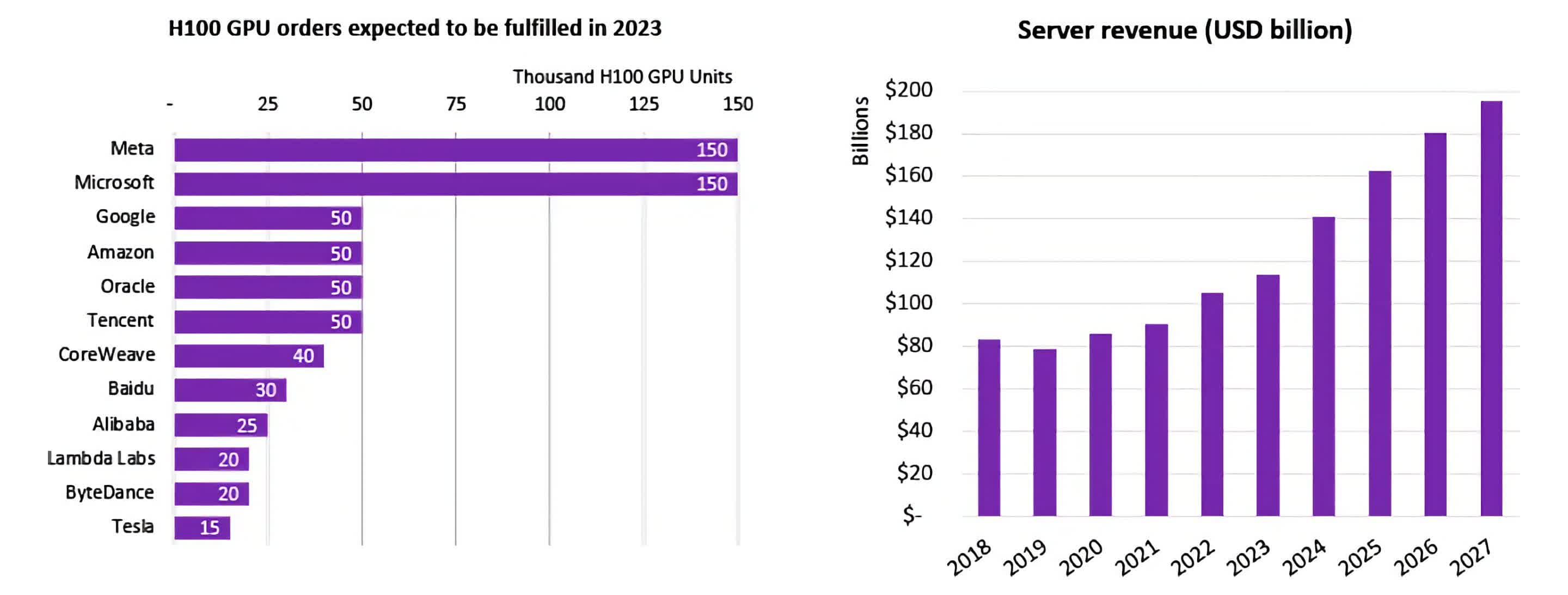

According to market analysis firm Omdia, last year's H100 orders were dominated by Meta and Microsoft, each of which purchased more units than Google, Amazon, and Oracle combined. Growing its fleet to roughly 350,000 units should keep Meta firmly among Nvidia's biggest customers.

Zuckerberg says Meta's AI infrastructure investment is required to build a complete artificial general intelligence (AGI) capable of providing reasoning, planning, coding, and other abilities to researchers, creators, and consumers around the world.

The company intends to "responsibly" develop the new AI model as an open-source product, with the aim of making it widely available to users and organizations of all sizes.

High demand for the H100 has resulted in long lead times that could leave some companies awaiting delivery behind their competitors. Omdia reports that Nvidia H100 order lead times have ranged from 36 to 52 weeks due to the growing demand for cutting-edge AI hardware.

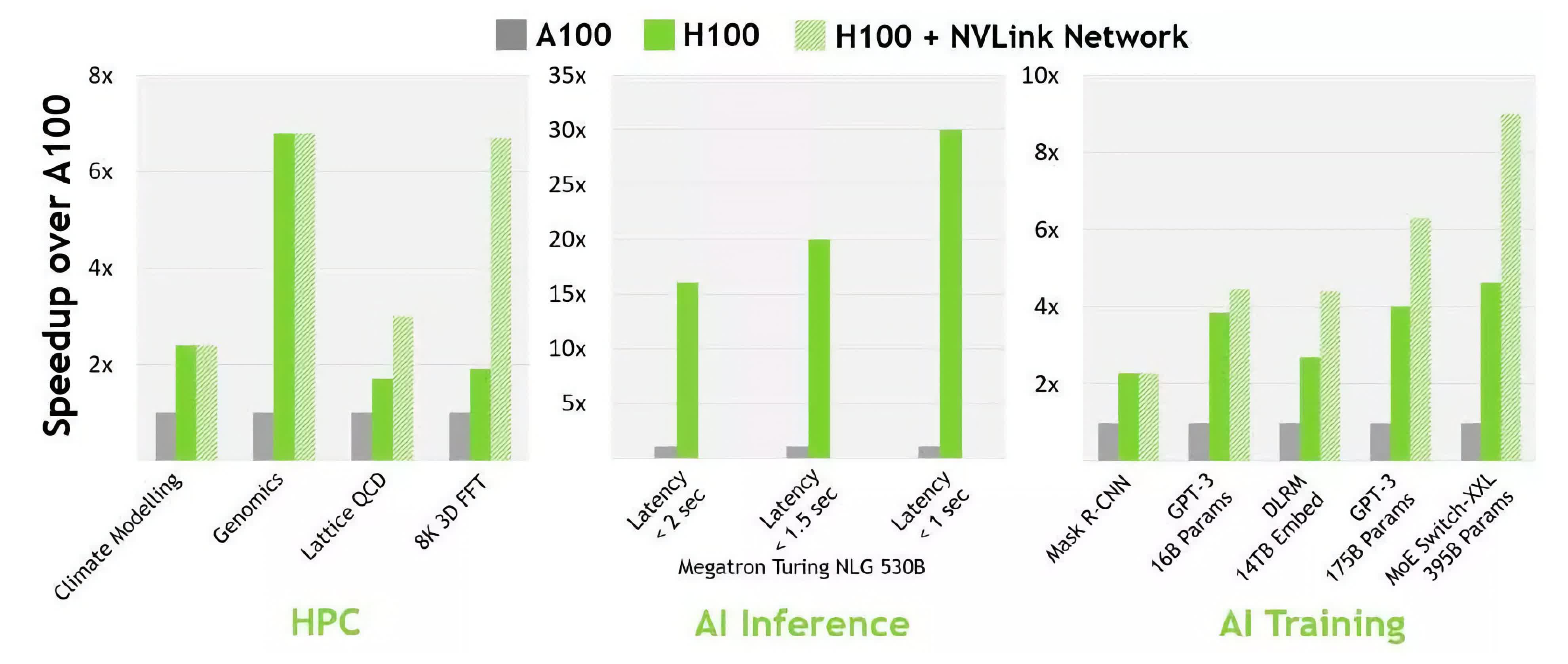

The jump in computing power and processing speed is the biggest draw for Meta and other companies looking to buy the GPUs. According to Nvidia, the H100 with InfiniBand interconnect is up to 30 times faster than the Nvidia A100 in mainstream AI and high-performance computing (HPC) models.

The H100 also delivers three times the IEEE FP64 and FP32 processing rates of the previous-generation A100. Nvidia attributes the increase to better clock-for-clock performance per streaming multiprocessor (SM), a higher SM count, and higher clock speeds.
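Because those three factors multiply together, their rough sizes can be sanity-checked. The Python sketch below relies on figures that do not appear in this article and should be treated as assumptions: 108 SMs on the A100, 132 SMs on the H100 SXM5, and roughly 2x clock-for-clock throughput per SM. Under those assumptions it solves for the clock-speed gain needed to reach the 3x total. It is a back-of-the-envelope illustration, not Nvidia's own breakdown.

```python
# Back-of-the-envelope decomposition of a chip-to-chip speedup:
#   total = per_sm_speedup * sm_count_ratio * clock_ratio
# ASSUMPTIONS (not from the article): A100 = 108 SMs, H100 SXM5 = 132 SMs,
# and ~2x clock-for-clock throughput per SM.
A100_SMS = 108
H100_SXM5_SMS = 132
PER_SM_SPEEDUP = 2.0  # assumed per-SM gain
TOTAL_SPEEDUP = 3.0   # the ~3x FP64/FP32 figure cited above


def implied_clock_ratio(total: float, per_sm: float, sms_new: int, sms_old: int) -> float:
    """Return the clock-speed ratio needed for all factors to multiply to `total`."""
    sm_ratio = sms_new / sms_old
    return total / (per_sm * sm_ratio)


ratio = implied_clock_ratio(TOTAL_SPEEDUP, PER_SM_SPEEDUP, H100_SXM5_SMS, A100_SMS)
print(f"SM count ratio: {H100_SXM5_SMS / A100_SMS:.2f}x")  # SM count ratio: 1.22x
print(f"Implied clock ratio: {ratio:.2f}x")                # Implied clock ratio: 1.23x
```

Under these assumptions, the extra SMs account for about 1.22x, leaving higher clocks to contribute roughly 1.23x.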

Near the end of the post, Zuckerberg discussed the growing need for wearable devices that will allow users to interact with the Metaverse throughout their day. He said that glasses are the "...ideal form factor for letting an AI see what you see, hear what you hear, so it's always available to help out."

Unsurprisingly, the statement led directly into a plug for the Ray-Ban Meta smart glasses.

At $30,000 to $40,000 per GPU, the H100 purchase is no small move, and it makes Meta's intention to advance its AI capabilities abundantly clear. The company doesn't just want to exist in the AI space; it wants to lead the charge for future AI innovation.
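For a sense of scale, multiplying that price range by the 350,000 H100s from Zuckerberg's post gives a rough hardware bill. This Python sketch assumes every unit costs somewhere in the quoted range and ignores servers, networking, power, and any volume discounts Meta may negotiate, so treat the result as a ballpark figure only.

```python
# Rough GPU-only cost range for Meta's H100 build-out.
# ASSUMPTIONS: 350,000 units (Zuckerberg's figure) priced within the
# $30,000-$40,000 range quoted above; excludes servers, networking,
# power, and any volume discounts.
H100_UNITS = 350_000
PRICE_LOW_USD = 30_000
PRICE_HIGH_USD = 40_000


def cost_range(units: int, low: int, high: int) -> tuple[int, int]:
    """Return the (minimum, maximum) total cost in US dollars."""
    return units * low, units * high


low, high = cost_range(H100_UNITS, PRICE_LOW_USD, PRICE_HIGH_USD)
print(f"${low / 1e9:.1f}B to ${high / 1e9:.1f}B")  # $10.5B to $14.0B
```

That puts the GPUs alone somewhere around $10.5 billion to $14 billion.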