A hot potato: For the second time in just over six months, Microsoft has issued a warning about China's use of generative AI to sow disruption in the United States during this election year. State-sponsored groups, working with the backing of North Korea, are also expected to target elections in South Korea and India, having launched a similar campaign during Taiwan's presidential election in January.

Microsoft's report, titled "East Asia threat actors employ unique methods," notes that Chinese campaigns have continued to refine AI-generated or AI-enhanced content, including videos, memes, and audio. While this content has had little measurable impact so far, Microsoft said it could prove increasingly effective as the techniques become more sophisticated.

Back in September, analysts from the Microsoft Threat Analysis Center highlighted a campaign by Chinese operatives that used generative AI to create content (see example below) for social media posts focusing on politically divisive topics, such as gun violence, and denigrating US political figures and symbols.

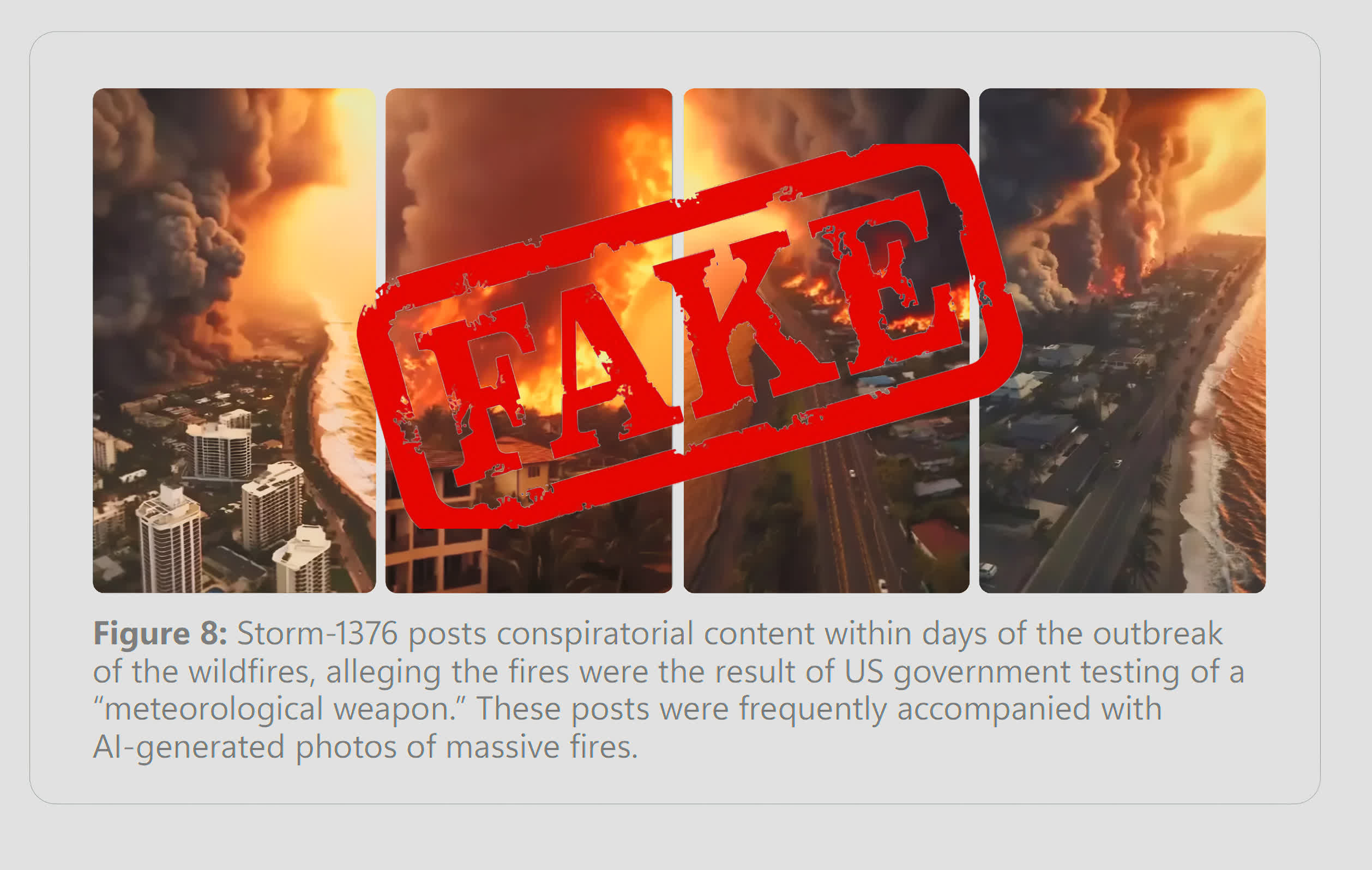

The latest report examines some of the conspiratorial, AI-generated content pushed by Chinese social media accounts. This includes a claim from the Beijing-backed group Storm-1376 in August 2023 that the US government had deliberately started the Hawaii wildfires using a military-grade weather weapon. The same group published conspiracy theories about the 2023 Kentucky train derailment and tried to stoke discord in Japan and South Korea.

Storm-1376 was highly active during Taiwan's elections in January, which Microsoft refers to as a "dry run" for upcoming elections in other countries. The group posted fake audio of Foxconn founder Terry Gou, who had dropped out of the race in November, endorsing another candidate. It also posted several AI-generated memes about the eventual winner, pro-sovereignty candidate William Lai. There was also increased use of AI-generated news anchors, created with CapCut, a tool from TikTok owner ByteDance, in which the fake presenters made unsubstantiated claims about Lai's private life, including that he had fathered illegitimate children.

One of the biggest problems in fighting misinformation spread through realistic generative AI is that many people refuse to believe the content is fake, especially when it conforms to their beliefs and values.