A hot potato: One of the many fears surrounding generative AI is its ability to create disinformation that is then spread online. But while companies and organizations are working against this worsening phenomenon, it seems the biggest challenge they face is the people who refuse to believe something is fake.

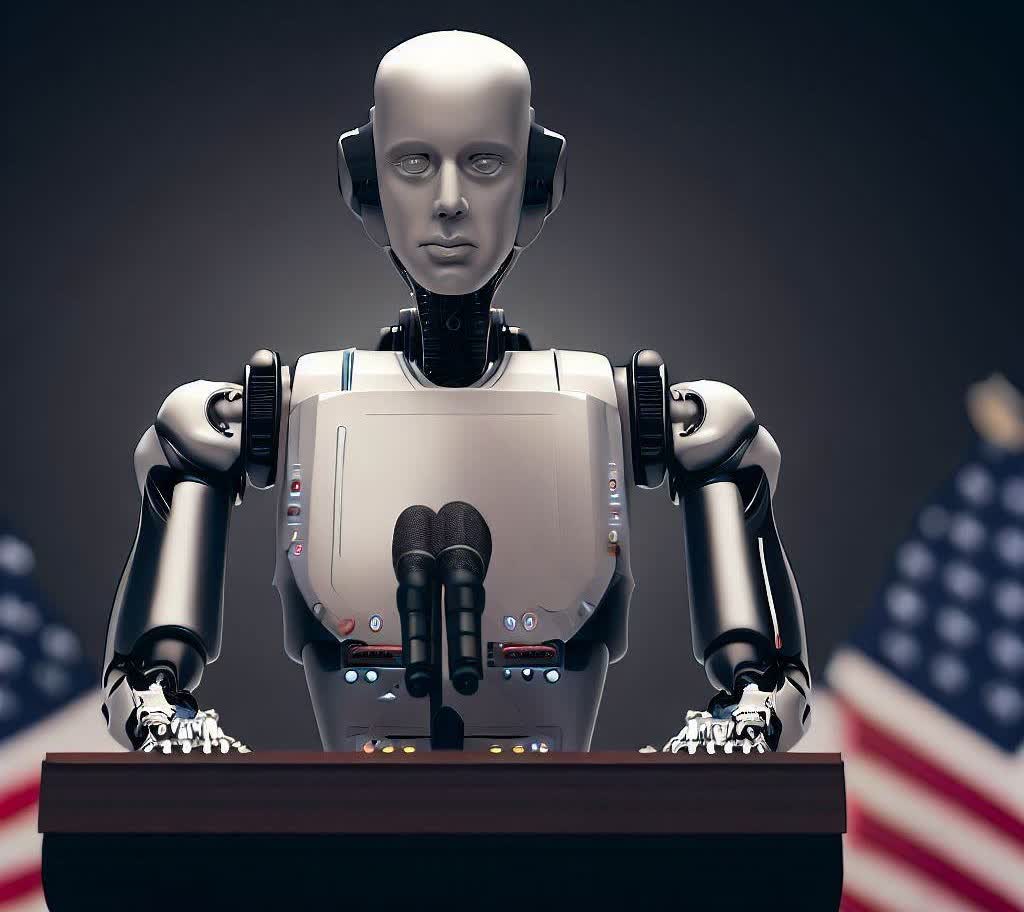

Artificial intelligence has been used to create disinformation for years, but the new wave of generative AI has brought advancements few could have imagined. Convincing images, videos, and audio clips can all be created and used to influence or reinforce the public's opinions.

Part of the problem is that many people distrust the very institutions that can confirm something is fake. One only has to look at social media to see the accusations of nefarious influences at work, often accompanied by the line "Of course they want you to believe it's not real." It doesn't help that many users can't spot these fakes in the first place.

Hany Farid, an expert in deepfake analysis and a professor at the University of California, Berkeley, told Bloomberg, "Social media and human beings have made it so that even when we come in, fact check and say, 'nope, this is fake,' people say, 'I don't care what you say, this conforms to my worldview.'"

"Why are we living in that world where reality seems to be so hard to grip?" he said. "It's because our politicians, our media outlets and the internet have stoked distrust."

One of the biggest concerns about AI-generated misinformation is its use around next year's US election. Microsoft warned last month that Chinese operatives have been using the technology for this purpose, creating images and other content that focus on politically divisive topics such as gun violence, or that denigrate US political figures and symbols.

The spread of this sort of election misinformation in the US has been minimal so far, but expect it to increase. The problem is especially bad on X/Twitter, which the EU says is the worst platform for disinformation, a designation that came just as X disabled a feature that allowed users to report election-related misinformation.

Ultimately, people themselves are often the biggest obstacle in the fight against AI-generated misinformation. Many share this content simply because they don't know it's fake, and with videos and images becoming increasingly realistic, it can be hard to convince them otherwise.