WTF?! One of the many fears about AI use becoming widespread is that people can now alter images – sometimes convincingly – without any technical skills. An example of this surfaced recently when an Airbnb guest said a host manipulated photos in a false £12,000 (about $15,900) damage claim.

The incident took place earlier this year when a London-based woman booked a one-bedroom apartment in New York's Manhattan for two-and-a-half months while she was studying, reports The Guardian. She decided to leave the apartment early because she felt unsafe in the area.

Not long after she left, the host told Airbnb that the woman had caused thousands of dollars in damage to his apartment, including a cracked coffee table, a urine-stained mattress, and damage to a robot vacuum cleaner, sofa, microwave, TV, and air conditioner.

The woman denied the claim and said she had only two guests during the seven weeks she was in the apartment. She argued that the host, who is listed as a "superhost" on the Airbnb platform, was making the claim as payback for her ending the tenancy early.

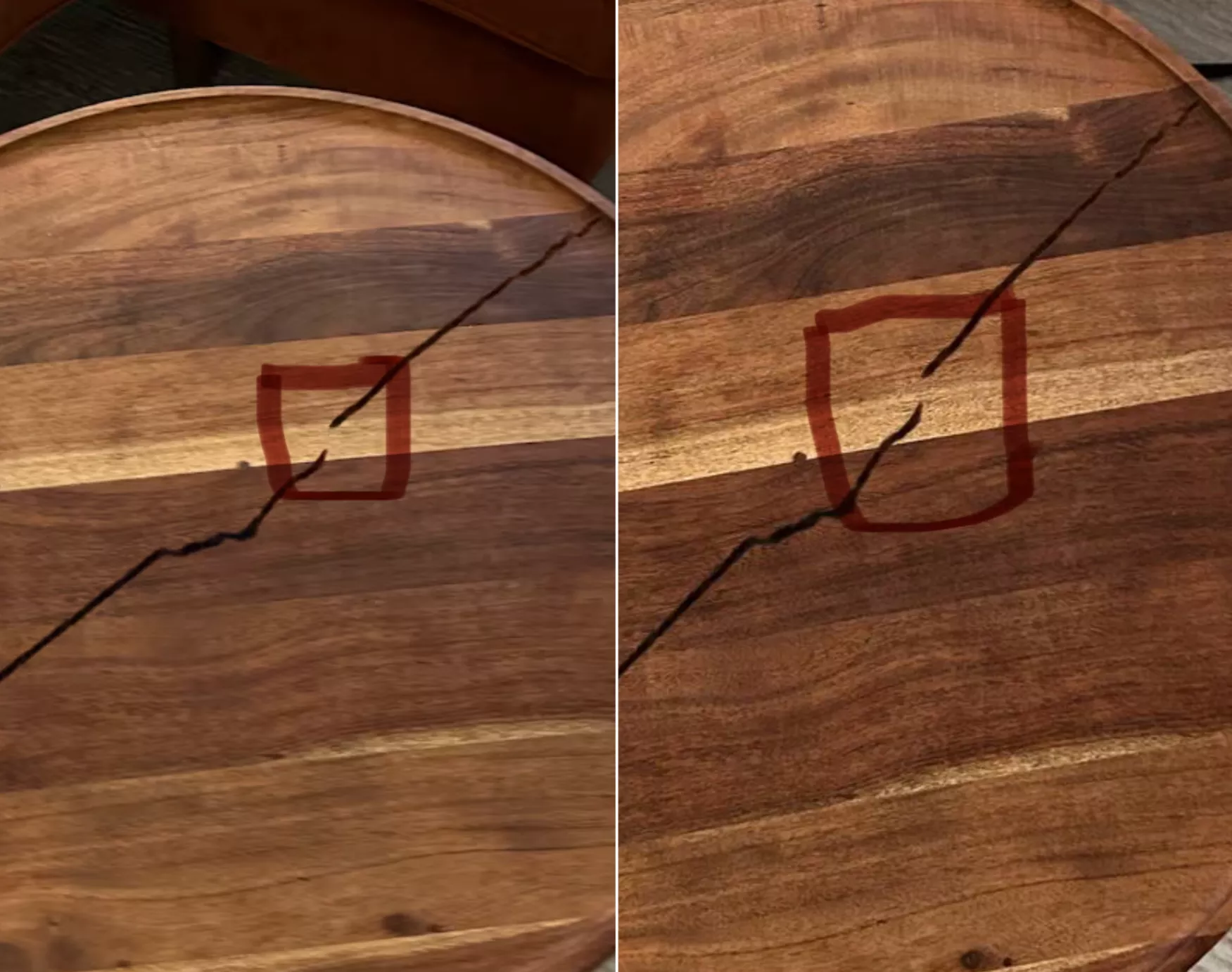

Part of the woman's defence rested on two photos of the allegedly damaged coffee table. The crack appears different in each image, leading the woman to claim they had been digitally manipulated, likely using AI.

Airbnb initially said that, having carefully reviewed the photos, it had decided the woman would have to reimburse the host £5,314 ($7,053). She appealed the decision.

Five days after Guardian Money questioned Airbnb about the case, the company accepted her appeal and credited her account with £500 ($663). After the woman said she would not use its services again, the firm offered to refund a fifth of the cost of her booking (£854, or $1,133). She refused this, too, and Airbnb apologized, refunded her the full £4,269 ($5,665) cost of her stay, and took down the negative review that the host had placed on her profile.

"My concern is for future customers who may become victims of similar fraudulent claims and do not have the means to push back so much or give into paying out of fear of escalation," the woman says.

"Given the ease with which such images can now be AI-generated and apparently accepted by Airbnb despite investigations, it should not be so easy for a host to get away with forging evidence in this way."

Airbnb told the host that it could not verify the images he submitted as part of the complaint. The company said he had been warned for violating its terms and told he would be removed if there was another similar report. It is also carrying out a review into how the case was handled.

AI is being used to manipulate images and videos in a wide range of false claims, including vehicle and home insurance claims. The tools are cheap and easy to use, which has made the practice increasingly common. It also makes it even harder to trust that anything you see online is real.