Forward-looking: Like it or not, robots are becoming more and more prevalent. They already fill many work environments, and before long they will be standard fixtures in homes, or at least that's what Facebook is betting on. The social media giant's AI Research division is building photorealistic virtual homes in which to train the artificial intelligence that will power household robots.

The simulation platform is called Habitat. The researchers published a paper on it back in April on arXiv, the preprint server run by Cornell University, and a more accessible explanation of the work is posted on the Facebook AI blog.

"AI Habitat enables training of embodied AI agents (virtual robots) in a highly photorealistic & efficient 3D simulator, before transferring the learned skills to reality. This empowers a paradigm shift from 'internet AI' based on static datasets (e.g., ImageNet, COCO, VQA) to embodied AI where agents act within realistic environments, bringing to the fore active perception, long-term planning, learning from interaction, and holding a dialog grounded in an environment."

Using machine learning to train a robot to navigate and perform tasks in a physical home could take years. However, as we have seen with AI projects from labs such as OpenAI, hundreds of years' worth of experience can be gained in a matter of days by running simulations at high speed.

Habitat itself is not a simulated world. Instead, it is a platform that can host virtual environments. According to the researchers, Habitat is already compatible with several 3D datasets, including Matterport3D, Gibson, and SUNCG. It is optimized so that researchers can run their simulated worlds hundreds of times faster than real time.

To make the environments more realistic, Facebook created a dataset for Habitat called "Replica." It is a collection of photorealistic rooms reconstructed from photography and depth mapping of actual homes. Everything from the living room to the bathroom has been recreated as 3D modules that can be combined in any number of configurations, each with appropriate furnishings.

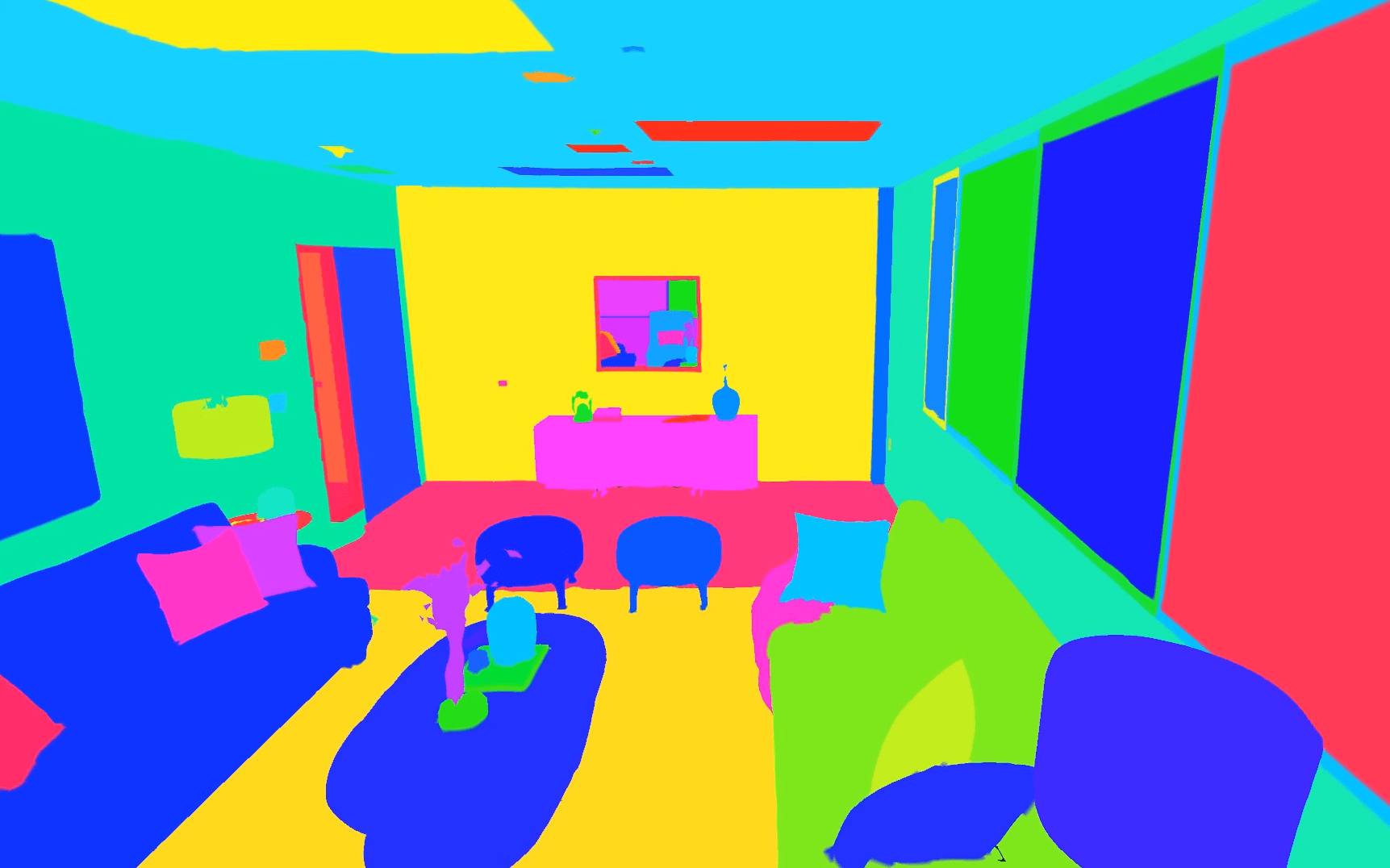

All objects are labeled semantically so the AI can tell whether something is "on top of," "next to," "rough," or "blue." Each semantic class is assigned its own color, and the picture above shows roughly what a scene looks like to the AI. This lets an agent follow complex commands like, "Go to the bedroom and tell me what color the box on the dresser is." The researchers also posted a pretty cool video showing what trained AI agents can do.

It is worth mentioning that these worlds are purely visual. There is no physics, and AI agents cannot interact with objects. In other words, the AI can "see" and learn that there is a magazine on the coffee table, but it cannot pick it up; the magazine is just a piece of scenery. Interaction is the main thing missing, and other simulators already support it, just without the visual realism. The researchers hope to combine the two soon.

Image credit: Facebook AI Research