In brief: Online bullying is a familiar issue that plagues every social network, but the Facebook-owned Instagram is fighting to protect the sanity of the platform with an AI that recognizes and flags toxic interactions such as insulting, shaming and disrespectful comments. The company is also rolling out the ability for users to restrict offending accounts without making the ban obvious to them - similar in a way to shadow bans used by moderators on Reddit.

Instagram has spent years training an AI to deal with bullying on its platform. This is part of a never-ending battle to keep the platform civil and to prevent incidents such as impersonators harassing victims of mass shootings. Company head Adam Mosseri recently announced the next step in that process: a feature designed to stop offenders before they can do harm, while leaving them seemingly unaware of what has happened to their accounts.

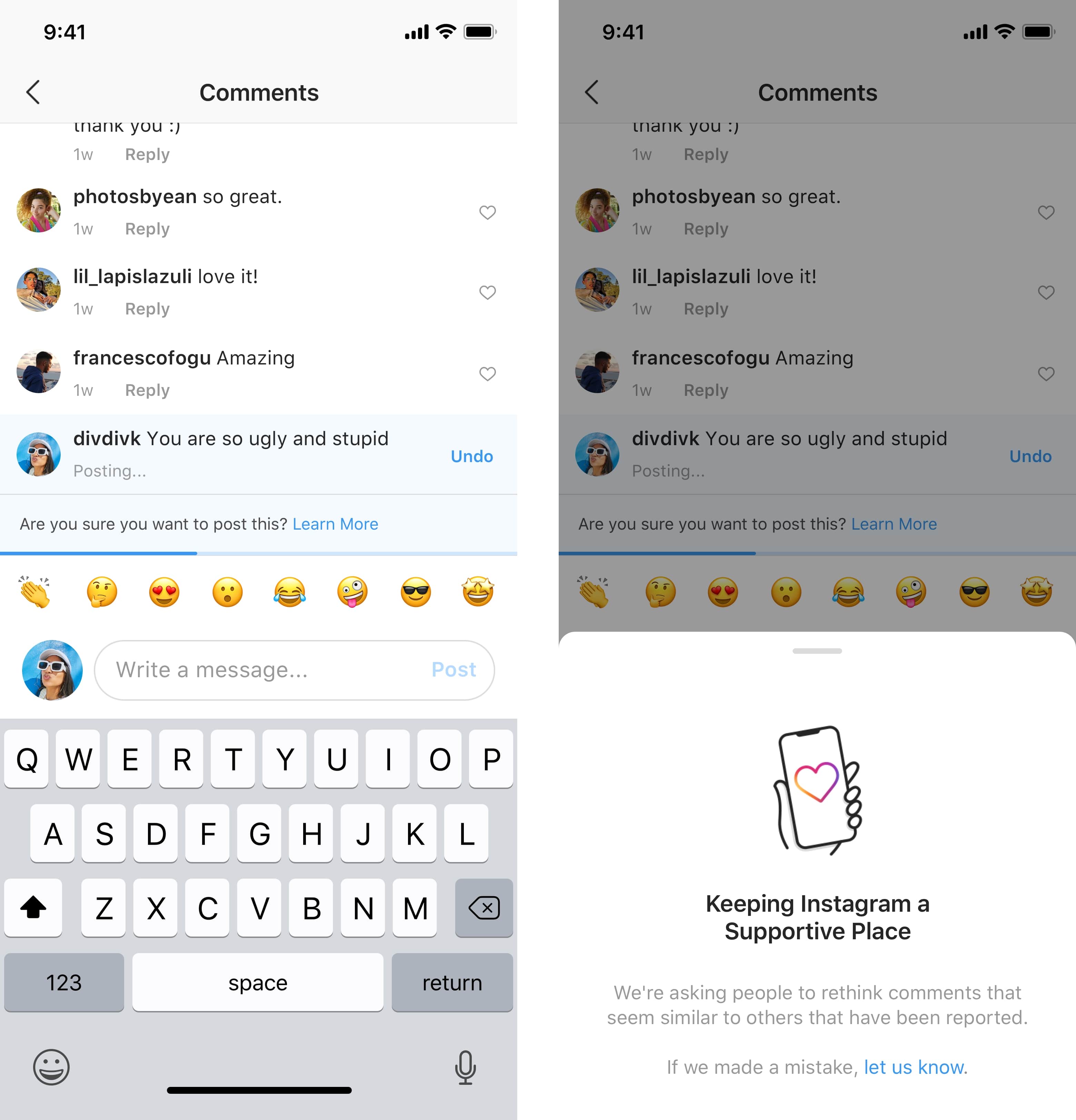

The company is building AI moderation into the platform, which Mosseri says has proven quite effective in early tests. It acts as a first line of defense: previously, you had to manually flag offensive comments, photos and videos after seeing them. Now the AI sends a notification to the offender, and you won't see that piece of content unless they change it to something that doesn't trigger another red flag.

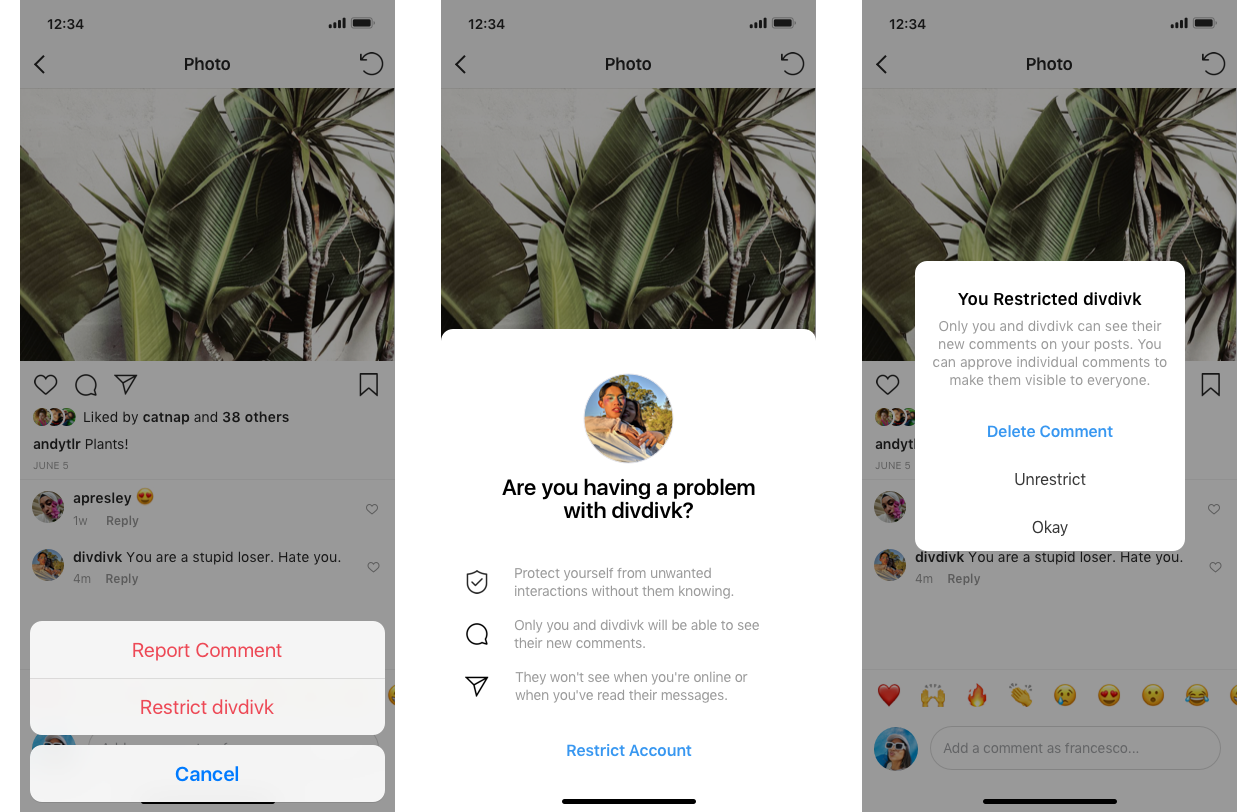

Users can likely still get around the AI by using alternative spellings or symbols, which is why Instagram is also introducing Restrict, a feature that essentially lets you shadow ban someone from your account. Mosseri says feedback has shown that teenagers are reluctant to unfollow or block their bullies' accounts, because those actions are visible to the offenders and can make the problem worse.

The new feature lets users review comments from a restricted account and decide whether they are visible before they're displayed. If you choose to delete a comment, the bully won't have any indication that anything has changed, and they also won't be able to see when you're online or when you've read their direct messages. In an interview with Time, Mosseri explained that users won't have to read the offensive comments, as they'll be covered by what the company calls a "sensitivity screen."

While it may seem like a softer approach, Restrict puts more power into the hands of those who experience harassment on a platform that users are flocking to from the morally bankrupt Facebook. The bigger challenge is making Instagram's one billion users aware that they have several moderation tools at their disposal, some of which have been available since 2017, such as the ability to filter offensive comments and spam using specific keywords.