In brief: One YouTuber achieved remarkable results upscaling a very old, low-resolution, black-and-white film from 1896 into a crystal-clear 4K video at 60 frames per second. It is one of many experiments showing that AI can improve content that would normally seem impossible to "enhance" in any way.

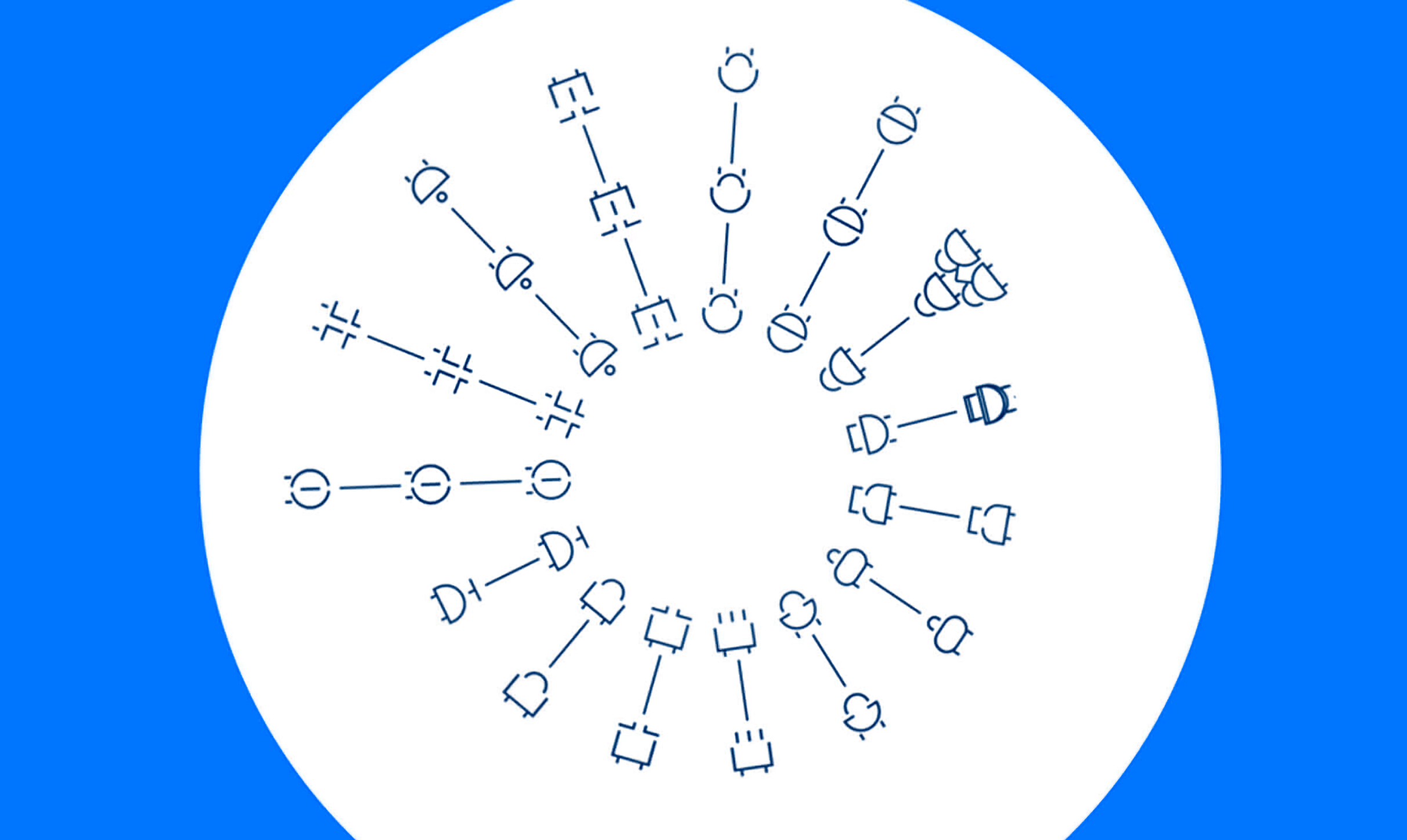

AI has been one of the hottest topics in tech for the last few years, with business leaders throwing around buzzwords such as machine learning, deep learning, reinforcement learning, neural networks, computer vision, and natural language processing.

These are different techniques and disciplines that companies mix together to create intelligent services and breathe additional "smarts" into consumer electronics. Examples abound: you may have heard about the Google AI that can detect breast cancer, or the one Instagram uses to fight abuse on its platform.

AI's complicated history can be traced back to software developed several decades ago to imitate how the human brain learns. Today, many companies use something called neural networks, which are essentially layers of densely interconnected nodes that can be "trained" to recognize patterns by feeding them large amounts of data and fine-tuning their parameters.
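To make "training" less abstract, here is a minimal Python sketch of the smallest possible case: a single artificial neuron (two weights and a bias) that learns the logical AND pattern purely from labeled examples, by repeatedly nudging its parameters with gradient descent. The function names, learning rate, and epoch count are illustrative choices, not taken from any particular product; real networks stack many layers of such nodes and train on vastly more data.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, epochs: int = 5000, lr: float = 0.5, seed: int = 0):
    """Fit one sigmoid neuron (two weights plus a bias) with plain gradient descent."""
    rng = random.Random(seed)
    w1, w2, b = (rng.uniform(-1, 1) for _ in range(3))  # random starting parameters
    for _ in range(epochs):
        for (x1, x2), target in samples:
            pred = sigmoid(w1 * x1 + w2 * x2 + b)
            # For a sigmoid output with cross-entropy loss, the gradient with
            # respect to the pre-activation is simply (prediction - target).
            err = pred - target
            w1 -= lr * err * x1
            w2 -= lr * err * x2
            b -= lr * err
    return w1, w2, b


def predict(params, x1: float, x2: float) -> float:
    w1, w2, b = params
    return sigmoid(w1 * x1 + w2 * x2 + b)


# Logical AND: the "pattern" the neuron has to discover from examples alone
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
params = train_neuron(AND_DATA)
for (x1, x2), target in AND_DATA:
    print(x1, x2, "->", round(predict(params, x1, x2)), "expected", target)
```

Each pass compares the neuron's guess with the right answer and shifts the weights slightly in the direction that reduces the error; after enough passes, rounding its output reproduces the AND truth table.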

If you're wondering what can be done with them other than, say, improving accuracy in autonomous cars, look no further than the above video by YouTuber Denis Shiryaev, who turned the Lumière brothers' famous 1896 short "Arrival of a Train at La Ciotat" into a 4K, 60 fps video that looks far better than standard upscaled content.

The original is a modest 640 by 480 video at just 20 frames per second. The film is the first example of a number of common cinematic techniques, not to mention one of the earliest attempts at 3D film. As you can see, it's not exactly impressive by today's standards, so Denis combined the neural networks in Gigapixel AI with a technique called depth-aware video frame interpolation, both to upscale the video's resolution and to raise its frame rate to something that looks much smoother to the human eye.
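Going from 20 to 60 frames per second means producing two new frames for every gap between original frames (60 / 20 = 3 output frames per original interval). The Python sketch below shows only that bookkeeping, using a plain linear cross-fade between neighboring frames as a deliberately naive stand-in: depth-aware interpolation methods such as DAIN instead estimate motion (optical flow) and depth to synthesize the in-between frames, which avoids the ghosting a simple blend produces. Frames are modeled as nested lists of grayscale values, and the function names are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame: rows of pixel intensities (0-255)


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linear cross-fade between two frames at fraction t in [0, 1]."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def upsample_frame_rate(frames: List[Frame], src_fps: int, dst_fps: int) -> List[Frame]:
    """Insert blended frames so a clip recorded at src_fps plays smoothly at dst_fps.

    Assumes dst_fps is an integer multiple of src_fps (e.g. 20 -> 60).
    This is NOT depth-aware interpolation; it only illustrates where new frames go.
    """
    if dst_fps % src_fps != 0:
        raise ValueError("dst_fps must be a multiple of src_fps")
    factor = dst_fps // src_fps  # 60 // 20 = 3, i.e. two new frames per gap
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        for k in range(factor):
            out.append(blend(a, b, k / factor))  # k = 0 keeps the original frame
    out.append(frames[-1])  # the last original frame has no successor to blend with
    return out
```

A 3-frame clip at 20 fps comes back as 7 frames at 60 fps, so the clip's duration (two intervals of 1/20 s, or six of 1/60 s) is preserved.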

Update: As many readers pointed out, the upscaled video shown above did not use the original film as its source, but an already upscaled, digitized, and curated version of the same film. There's also a "deoldified" version of the video that adds color.

Legend has it that people who had the privilege of watching the film in theaters at the time were startled by the sight of a fast-approaching train that seemed to come out of the screen. Whether or not that story is true, Denis's upscaled version conveys the effect quite well, and it is miles ahead of the original in terms of subjective quality.

Gigapixel AI, the software used for the upscaling, is developed by Topaz Labs and is trained to "fill in" information in an image using patterns and structures learned from a large pool of source images, which are downscaled so that the results can be compared to the originals. Conventional wisdom holds that upscaled content usually loses sharpness and clarity and gains no added detail, since you're limited by the source image, but Gigapixel AI doesn't rely on interpolation to add the missing pixels the way standard techniques do.
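Topaz Labs has not published Gigapixel AI's training pipeline, so the following is only a generic Python sketch of the "downscale, then compare" idea described above. Each full-resolution image is shrunk 2x with a simple box filter, an upscaler tries to restore it, and the mean squared error against the original measures how far off it is. Training a real model would mean adjusting its parameters to drive that error down across many images. The `nearest_neighbor_2x` function is a hypothetical placeholder for the learned model, and all names here are illustrative.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # grayscale image: rows of pixel intensities


def downscale_2x(img: Image) -> Image:
    """Average each 2x2 block into one pixel (box filter). Assumes even dimensions."""
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
            for x in range(0, len(img[0]), 2)
        ]
        for y in range(0, len(img), 2)
    ]


def mse(a: Image, b: Image) -> float:
    """Mean squared error between two images of the same size."""
    diffs = [(pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def make_training_pair(original: Image) -> Tuple[Image, Image]:
    """(input, target): the model sees the small version and must recreate the original."""
    return downscale_2x(original), original


def score_upscaler(upscale: Callable[[Image], Image], originals: List[Image]) -> float:
    """Average error of an upscaler on pairs built from full-resolution originals.

    Training would tune the model's parameters to push this number down.
    """
    total = 0.0
    for original in originals:
        small, target = make_training_pair(original)
        total += mse(upscale(small), target)
    return total / len(originals)


def nearest_neighbor_2x(img: Image) -> Image:
    """Hypothetical stand-in for a learned model: repeat every pixel as a 2x2 block."""
    return [[p for p in row for _ in range(2)] for row in img for _ in range(2)]
```

On a flat gray patch the placeholder scores a perfect 0, but on a 2x2 checkerboard of black and white pixels it returns uniform gray and scores 16256.25, because downscaling destroyed the detail and simple pixel copying cannot bring it back. That gap is what a learned model is trained to close.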

It can be argued that this new method might introduce erroneous details, and according to Topaz Labs it is also much slower than conventional techniques such as bicubic, Lanczos, and spline interpolation. However, the point is that neural networks can be trained to colorize images and add various missing patterns in things like floors, windows, and vegetation.
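For contrast, a conventional resampler such as Lanczos computes each new pixel as a weighted average of nearby existing pixels, which is why it is fast but cannot invent detail the source lacks. The Python sketch below upscales a single row of pixels using the standard Lanczos kernel, sinc(x)·sinc(x/a) with a = 3; image libraries typically apply such a filter along rows and then along columns. The function names, the edge clamping, and the pixel-alignment convention are simplifying assumptions, not how any particular library implements it.

```python
import math
from typing import List


def lanczos_kernel(x: float, a: int = 3) -> float:
    """Lanczos window: sinc(x) * sinc(x / a) inside |x| < a, zero outside."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    # sin(pi x)/(pi x) * sin(pi x / a)/(pi x / a), rearranged into one fraction
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def lanczos_upscale_1d(samples: List[float], factor: int, a: int = 3) -> List[float]:
    """Upscale one row of pixels by an integer factor with Lanczos interpolation.

    Every output value is a weighted sum of nearby input pixels; nothing new is invented.
    """
    n = len(samples)
    out: List[float] = []
    for i in range(n * factor):
        x = i / factor  # position of this output pixel in input-pixel coordinates
        left = math.floor(x) - a + 1
        acc = 0.0
        weight_sum = 0.0
        for j in range(left, left + 2 * a):  # the 2a input pixels nearest to x
            w = lanczos_kernel(x - j, a)
            sample = samples[min(max(j, 0), n - 1)]  # clamp indices at the edges
            acc += w * sample
            weight_sum += w
        out.append(acc / weight_sum)  # normalize so flat regions stay flat
    return out
```

Because the weights are normalized, a flat row stays flat, and every `factor`-th output lands on an original sample (up to floating-point rounding); every value in between is a blend of pixels that were already there.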

There are even experiments in teaching neural networks how to paint, such as one conducted by Libre AI's Ernesto Diaz Aviles, who used the PyTorch and Fast.ai libraries to achieve just that. The idea is to get computers to draw landscapes in much the same way Bob Ross did in his famous program "The Joy of Painting," where he taught viewers simple painting techniques in a fun way.

And we've already seen examples of what can be achieved using neural networks to visually overhaul old games like Doom and give game characters more natural and convincing animations.

AI is so big that companies like Google, Apple, Intel, Microsoft, Amazon, and others are all racing to buy or invest in every new and obscure startup in the field to acquire talent for their various projects. It has even sparked ample discussion about the role of AI in copyright and trademark law moving forward. It will be interesting to see what's in store for the next decade, and, thanks to Nvidia, we might have a hint: AI-rendered, interactive virtual worlds based on massive libraries of real-world footage.