In brief: Though the very idea frightens privacy advocates, smart speaker developers are on a mission to allow their devices to activate even without hearing their wake word. We know Google is already working on this technology, but now, the folks over at Carnegie Mellon University are following suit.

The university's researchers have developed a machine learning model that can determine precisely which direction a person's voice is being projected in.

This may not seem very important at first, but the researchers in question are planning ahead for a future in which IoT devices become "increasingly dense" throughout home and office environments.

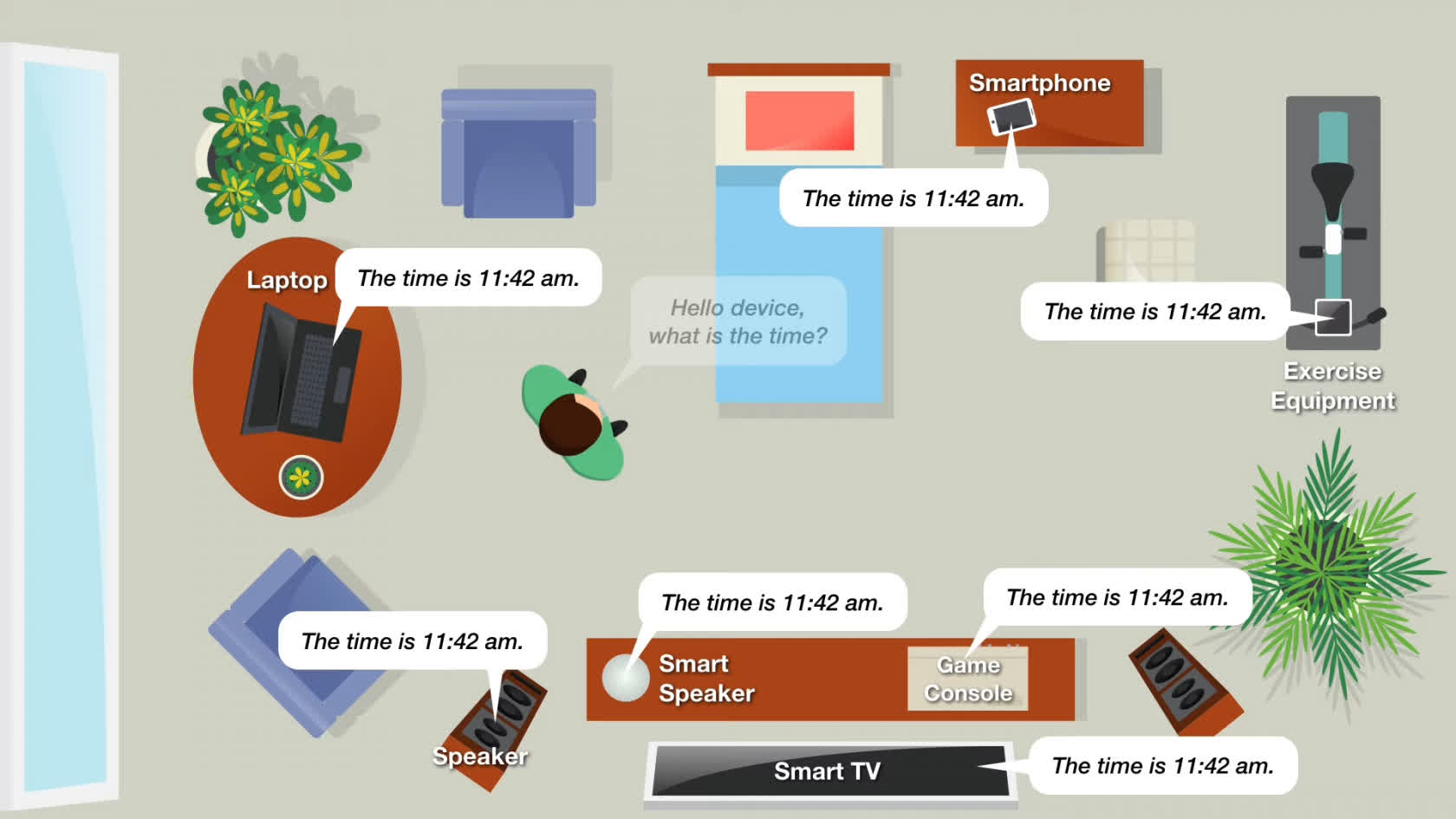

Imagine you had an Alexa-powered smart TV, smart speaker, and smartphone all in the same room. Saying the wake word could very well activate command mode on every one of them, which is hardly ideal.

In that situation, you would probably want to speak to one specific smart device, not an entire room full of them. That's where this research comes into play. This approach to wake-word-free commands also differs from others in that it doesn't require facial recognition tech, which privacy-conscious users may well welcome.

Naturally, how well this system works in practice will depend heavily on how readily the average user adapts to the concept. For example, users would suddenly need to make sure they aren't accidentally speaking toward a smart device when asking a family member or friend a question.

However, that's a problem for another day. This technology won't even be rolling out to the general public any time soon (though the underlying code is open to all), so focusing on