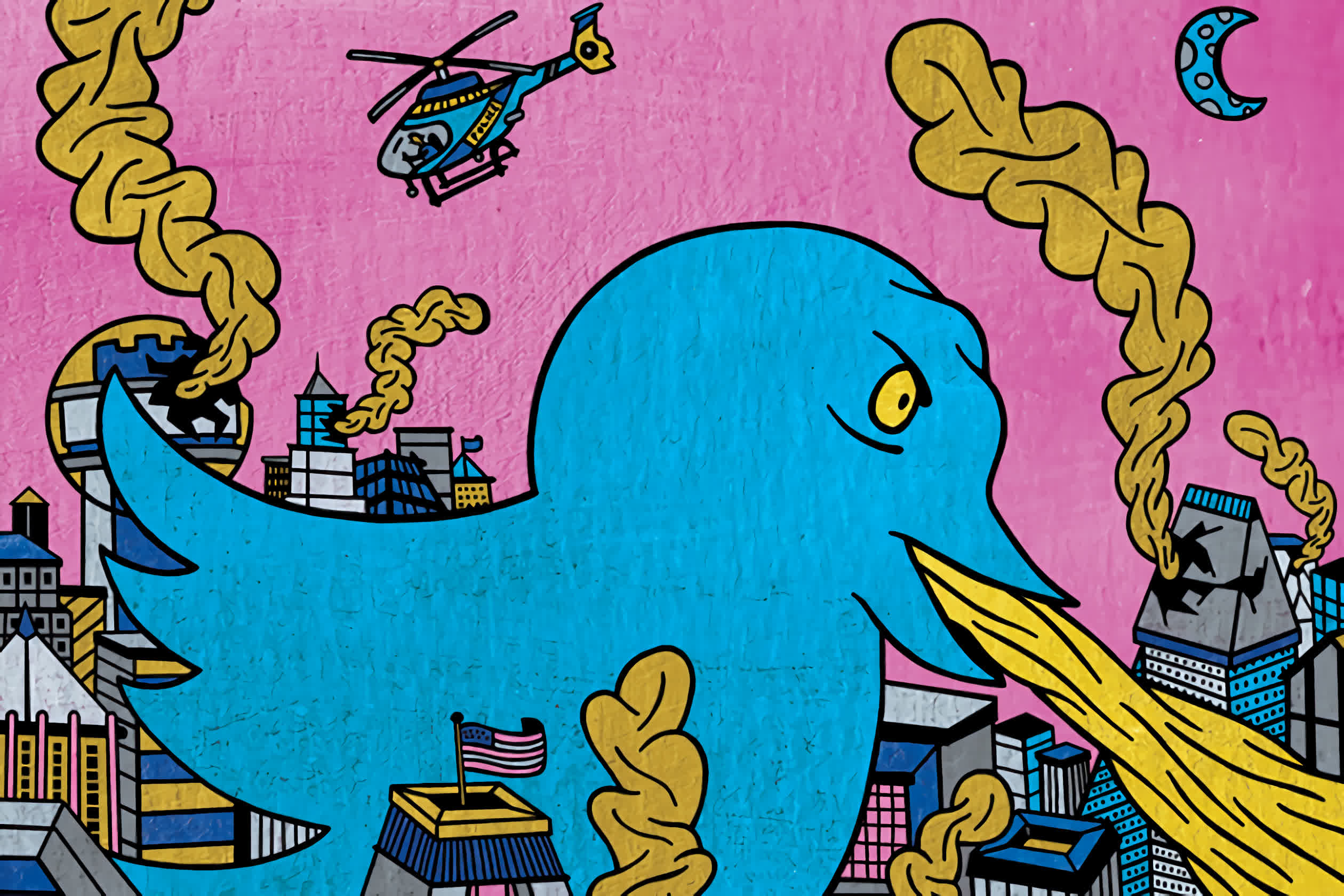

In context: The controversial Section 230 of the Communications Decency Act has been a hotly debated subject as of late, especially in the context of huge misinformation campaigns surrounding the 2020 election and the ongoing pandemic. This is why tech CEOs are about to get grilled again in a new hearing where lawmakers are looking to address the role of social media in promoting extremism and misinformation.

This week, the CEOs of Facebook, Twitter, and Google will testify before Congress through a virtual hearing that can be watched live. The main topics of the hearing are the war on misinformation and Section 230 of the Communications Decency Act. At least two of the executives are preparing to defend the liability protections afforded by Section 230 with testimonies that focus on the role of social media in the January 6 Capitol attack.

Section 230 is a piece of legislation passed in 1996, which says that an "interactive computer service" can't be held liable for third-party content because it isn't treated as the publisher of that material. This was a bipartisan effort that sought to protect website owners from being sued for user-generated content, with a few notable exceptions such as pirated works. It is, however, open to misinterpretation and is often used as an excuse to ignore platform-wide issues.

Facebook CEO Mark Zuckerberg will talk about the company's efforts to combat hateful content and misinformation regarding the 2020 election and Covid-19, such as disrupting economic incentives, applying machine learning to detect fraud and spam, and building partnerships with reputable organizations such as Reuters for manual fact-checking.

However, a recent analysis shows Facebook could have done a much better job, as the prime movers behind most misinformation on the platform would have been relatively easy to identify with a more aggressive algorithm.

President Joe Biden suggested during his campaign that he would revoke or rewrite Section 230. Zuckerberg believes the latter option is a better approach, and his written testimony shows he will try to convince lawmakers to make Section 230 protections available only to platforms that "have systems in place for identifying unlawful content and removing it." He also notes that a third party should determine the criteria for evaluating those systems, except when it comes to issues like privacy and encryption, which "deserve a full debate in their own right."

In Zuckerberg's view, giant platforms shouldn't be held liable for whatever pieces of content fall through the cracks of their algorithms, as there's no practical way to guarantee that nothing will. On the other hand, his proposal throws smaller platforms under the bus, as most wouldn't have the resources to build the same filtering and moderation tools. And even if they did manage to do it, the effort would constrain their user growth in ways that platforms like Facebook never had to contend with until recently.

Google CEO Sundar Pichai and Twitter CEO Jack Dorsey don't have any proposals for how lawmakers should reform Section 230. Pichai says he's concerned that changes to the legislation could have unintended consequences for platforms and their users, and instead would like the focus to be on "ensuring transparent, fair, and effective processes for addressing harmful content and behavior."

Dorsey doesn't mention Section 230 in his written remarks, but will talk about innovations that Twitter is bringing to the table with experiments like Birdwatch and Bluesky. And just like Zuckerberg, he doesn't believe it's possible to moderate every single interaction on Twitter, which is why the company's efforts are focused on increasing trust and transparency.

The last time tech CEOs were grilled by Congress was on antitrust issues, and it quickly devolved into a political spectacle. Chances are this time will be no different: Republican lawmakers are likely to accuse the executives of censoring conservative content on their platforms, while Democratic lawmakers will no doubt criticize them for not doing enough to curb the spread of harmful content.