In brief: In the aftermath of damning testimony from a whistleblower and an extensive leak of internal research about its online platforms, Facebook is announcing plans to introduce new functionality in Instagram that could guide teens away from potentially harmful content and even suggest taking a break from using the platform in some cases.

Instagram is planning changes to its app that it says will "nudge" teenagers away from harmful content. The Facebook-owned company says the new functionality will look at the type of content teenagers are watching and encourage them to take a break if they appear to be watching the same type of content over and over again.

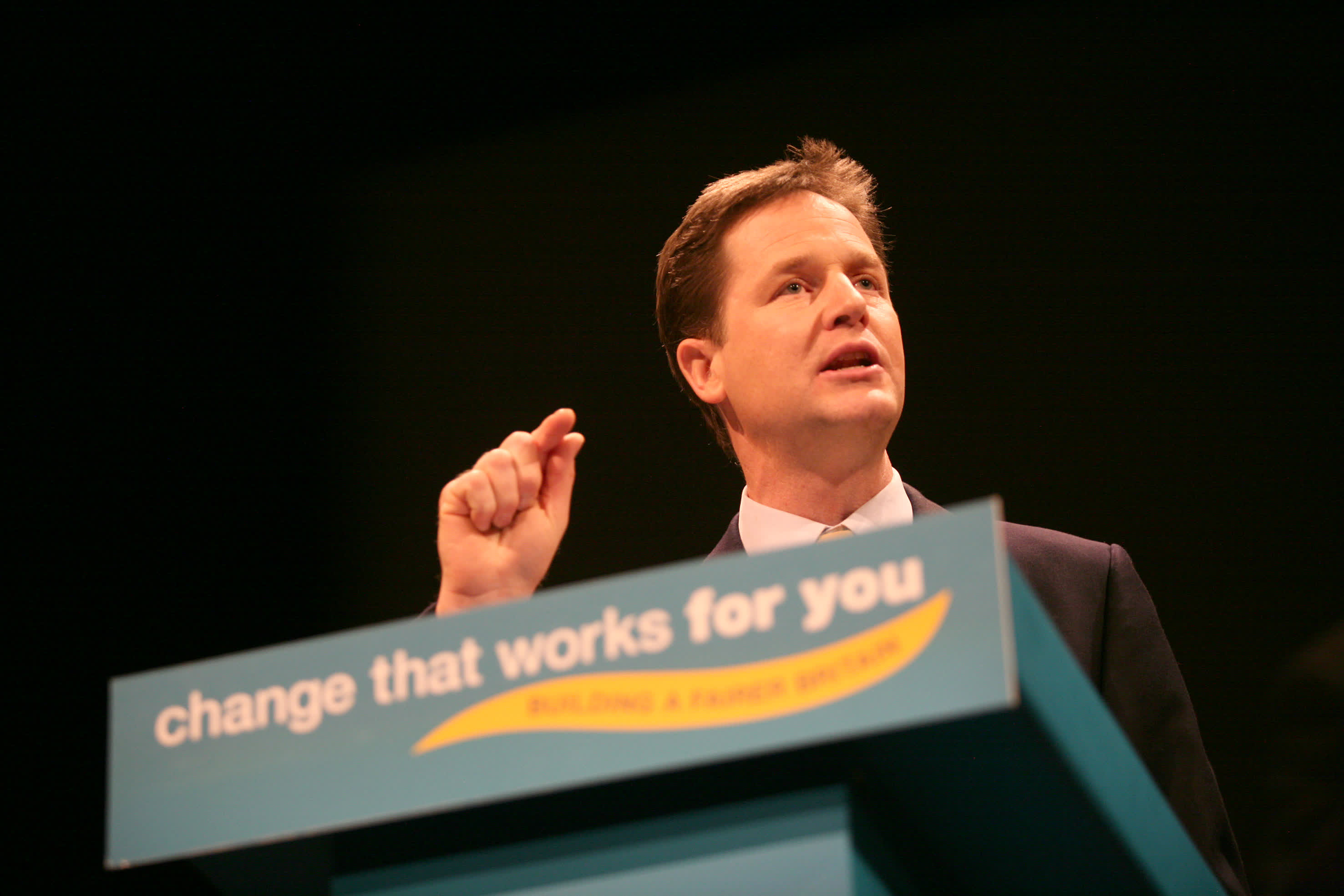

The plans were announced on Sunday by Facebook vice president of global affairs Nick Clegg during CNN's State of the Union with Dana Bash. Clegg explained that the company is developing an automated system that will detect when the content teenagers are watching may not be conducive to their well-being, in which case the algorithm will suggest a different type of content to gently push them away from the potentially harmful material.

Last month, Facebook paused work on its Instagram Kids app, bowing to regulatory pressure from the US Congress, as well as widespread criticism in the media following a series of damning reports about Facebook's internal research and its alleged lack of action on the results. Clegg told CNN the company is also planning to introduce a feature that will prompt teenagers to take a break from using Instagram, but he didn't go into any detail or provide a timeframe for when we can expect to see it roll out to users.

Digging deeper, Instagram chief Adam Mosseri said last month that internal research prompted the company to work on issues like negative body image and negative social comparison. One of the features proposed internally was "Take a Break," which would allow users to suspend their own account if they thought Instagram was a poor use of their time.

When pressed about Facebook's algorithms and their potential to amplify the reach of misinformation or calls to insurgency, Clegg brought up the company's extensive work on safety and security over the past five years. He also noted that Facebook has spent $13 billion (more than three times Twitter's annual revenue) and hired tens of thousands of people specifically to address challenges in these two key areas.

However, after seeing Facebook whistleblower Frances Haugen's testimony before a US Senate subcommittee last week, regulators are scrutinizing the company more than ever. Facebook CEO Mark Zuckerberg disputed Haugen's claims with a long explanation of why it wouldn't make sense for a company that makes most of its money from advertising to knowingly push the types of content that advertisers don't want to be associated with.

In any case, it looks like more regulation on child safety and user privacy is on the way, which, ironically, is what Zuckerberg has been calling for since 2019. More recently, he also denied claims that he had made a deal with former President Donald Trump's administration to avoid fact-checking some content in exchange for less severe regulations.

Masthead credit: Alex Folkes