In context: Games today use highly detailed textures that can quickly fill the video memory on many graphics cards, leading to stuttering and game crashes in recent AAA titles for many gamers. With GPU manufacturers being stingy with VRAM even on the newest mid-range models, the onus is on software engineers to find a way to squeeze more from the hardware available today. Perhaps ironically, the most promising development in this direction so far comes from Nvidia – neural texture compression could reduce system requirements for future AAA titles, at least when it comes to VRAM and storage.

One of the hottest topics at the moment is modern AAA games and their system requirements. Both the minimum and recommended specs have increased, and as we've seen with titles like The Last of Us Part I, Forspoken, The Callisto Protocol, and Hogwarts Legacy, running them even at 1080p using the Ultra preset now poses problems for graphics cards equipped with 8GB of VRAM.

When looking at the latest Steam survey, we see that 8GB is the most common VRAM size for PCs with dedicated graphics solutions. That probably won't change for a while, especially since graphics card upgrades are still expensive and GPU makers don't seem to be interested in offering more than 8GB of graphics memory on most mainstream models.

The good news is that Nvidia has been working on a solution that could reduce VRAM usage. In a research paper published this week, the company details a new texture compression algorithm that it claims outperforms both traditional block compression (BC) methods and other advanced compression techniques such as AVIF and JPEG XL.

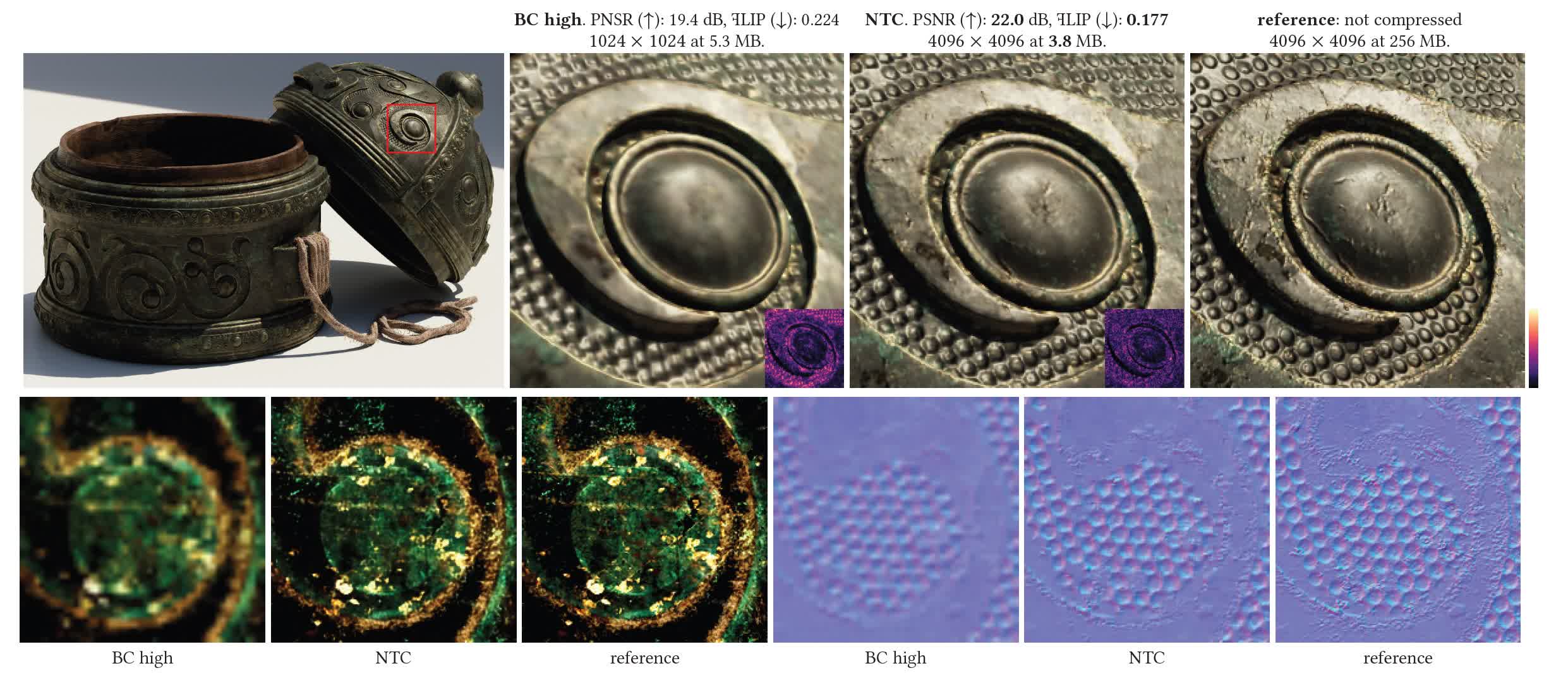

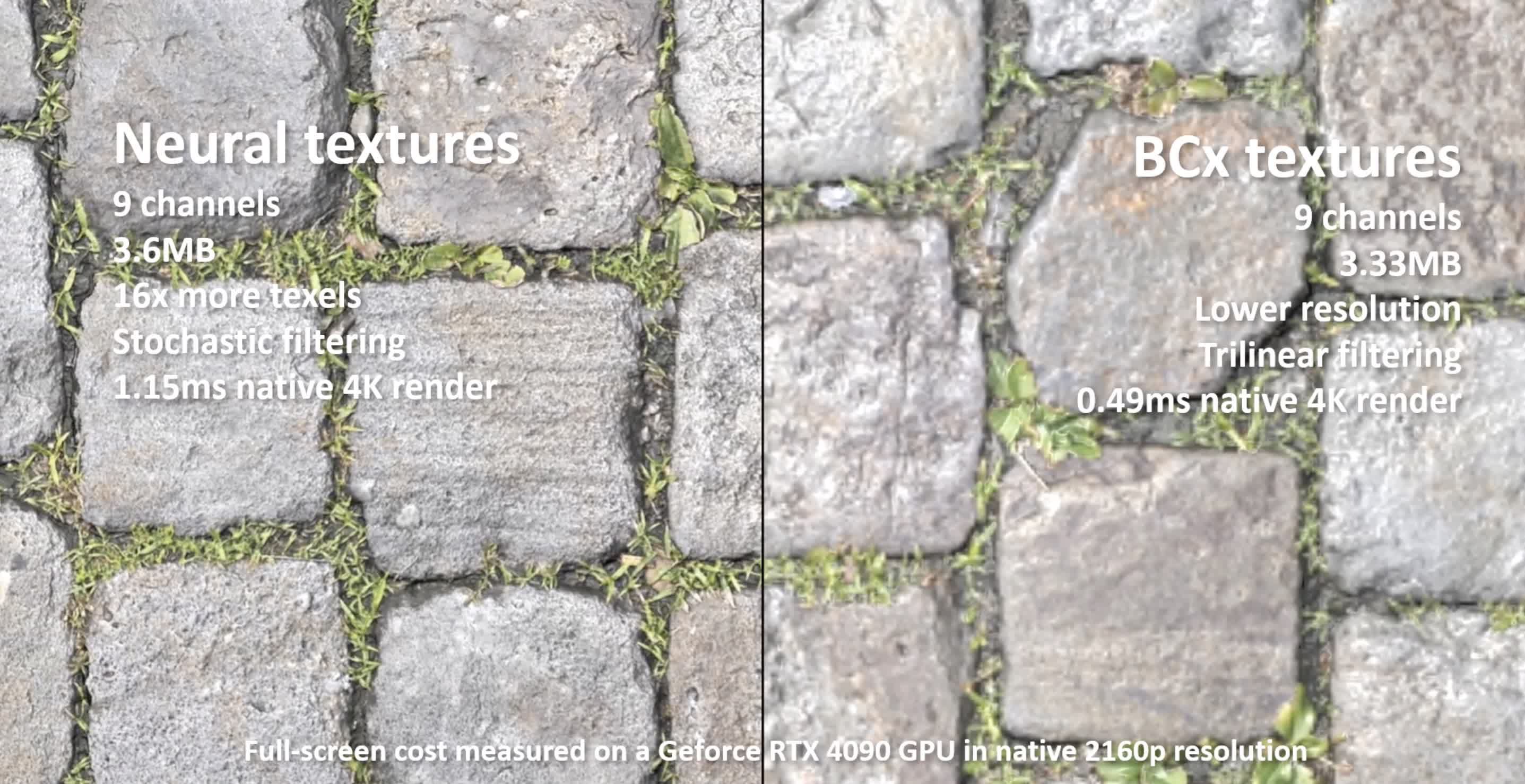

The new algorithm is simply called neural texture compression (NTC), and as the name suggests, it uses a neural network designed specifically for material textures. To make this fast enough for practical use, Nvidia researchers built several small neural networks, each optimized for a specific material. As you can see from the image above, textures compressed with NTC preserve far more detail while also being significantly smaller than the same textures compressed with BC techniques at a quarter of the original resolution.

In modern games, the visual properties of a material are stored in separate maps that describe how it absorbs and reflects light, and the assortment used varies from one game engine to another. Every map usually packs additional, scaled-down versions of the original map into the same file. These so-called "mipmaps" are used to optimize graphics memory usage when the full resolution of the texture isn't needed, such as when a particular object is far away from your point of view.
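
To get a feel for what a mipmap chain costs, here is a minimal Python sketch that lists the mip levels of a texture and totals their uncompressed size. The 4,096 by 4,096 resolution and the 4 bytes per texel (8-bit RGBA) are assumptions chosen for illustration, not figures from Nvidia's paper.

```python
def mip_chain(width, height):
    """Return the (width, height) of every mip level, from full size down to 1x1."""
    levels = [(width, height)]
    while width > 1 or height > 1:
        # Each level halves both dimensions, never dropping below one texel.
        width = max(1, width // 2)
        height = max(1, height // 2)
        levels.append((width, height))
    return levels


def chain_bytes(width, height, bytes_per_texel):
    """Total uncompressed size of a texture including all of its mip levels."""
    return sum(w * h * bytes_per_texel for w, h in mip_chain(width, height))


# Assumed example: one 4096x4096 map stored as 8-bit RGBA (4 bytes per texel).
levels = mip_chain(4096, 4096)
base = 4096 * 4096 * 4
total = chain_bytes(4096, 4096, 4)

print(len(levels))   # 13 levels, from 4096x4096 down to 1x1
print(total / base)  # overhead factor of the full chain over the base level
```

The ratio comes out at roughly 1.33, which is why a full mipmap chain is usually described as adding about a third to a texture's footprint.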

Researchers explain the idea behind their approach is to compress all these maps along with their mipmap chain into a single file, and then have them be decompressed in real time with the same random access as traditional block texture compression.
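
The "random access" property is easier to appreciate with a concrete case. Traditional BC formats encode fixed 4x4 texel blocks at a fixed size (8 bytes per block for BC1, 16 bytes for BC7), so a GPU can compute where any texel's data lives without decoding anything else. The Python sketch below shows that address calculation. It assumes a simple row-major block layout, whereas real GPUs typically rearrange blocks in memory, and `block_offset` is a hypothetical helper name rather than part of any graphics API.

```python
BLOCK_DIM = 4  # BC formats encode fixed 4x4 texel blocks


def block_offset(x, y, texture_width, bytes_per_block):
    """Byte offset of the compressed block that holds texel (x, y).

    Assumes blocks are stored row by row (a simplification for illustration).
    """
    blocks_per_row = (texture_width + BLOCK_DIM - 1) // BLOCK_DIM
    block_x = x // BLOCK_DIM
    block_y = y // BLOCK_DIM
    return (block_y * blocks_per_row + block_x) * bytes_per_block


# Texel (5, 9) in a 1024-texel-wide texture sits in block column 1, block row 2.
print(block_offset(5, 9, 1024, 8))   # BC1, 8 bytes per block  -> 4104
print(block_offset(5, 9, 1024, 16))  # BC7, 16 bytes per block -> 8208
```

Formats such as AVIF and JPEG XL generally can't offer this kind of direct lookup, which is why matching it matters for a scheme meant to be sampled by the GPU in real time.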

Compressing tens of thousands of unique textures for a game also takes time, but Nvidia says the new algorithm is ten times faster than typical PyTorch implementations. For instance, a 9-channel 4K material texture can be compressed in 1 to 15 minutes on an Nvidia RTX 4090, depending on the quality level you set. The researchers note that NTC supports textures with resolutions up to 8K (8,192 by 8,192) but didn't offer performance figures for that scenario.

An obvious advantage is that game developers could use NTC to reduce storage and VRAM requirements for future games or, at the very least, reduce stuttering by fitting more textures into the same amount of graphics memory and thus reducing the need to swap them in and out when moving across a detailed environment. Another advantage is that NTC relies on matrix multiplication, which is fast on modern GPUs and even faster when using Tensor Cores on GeForce RTX GPUs.
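
To show why matrix multiplication is the core operation here, the following Python sketch runs a feature vector through a small fully connected network, where each layer is a matrix-vector product plus a bias. This is a generic illustration, not Nvidia's actual network: the layer sizes (8 inputs, two hidden layers of 16, 9 outputs), the random weights, and the `decode_texel` name are all assumptions made for the example.

```python
import random


def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def relu(vector):
    """Clamp negative values to zero."""
    return [max(0.0, v) for v in vector]


def decode_texel(features, layers):
    """Run a feature vector through a small fully connected network."""
    x = features
    for i, (weights, bias) in enumerate(layers):
        x = [a + b for a, b in zip(matvec(weights, x), bias)]
        if i < len(layers) - 1:  # no activation on the output layer
            x = relu(x)
    return x


def random_layer(rng, n_in, n_out):
    """Build a layer with random weights (stand-ins for trained values)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, bias


rng = random.Random(0)
# Hypothetical sizes: 8 input features -> 16 -> 16 -> 9 material channels.
layers = [random_layer(rng, 8, 16), random_layer(rng, 16, 16), random_layer(rng, 16, 9)]
features = [rng.uniform(-1, 1) for _ in range(8)]

channels = decode_texel(features, layers)
print(len(channels))  # 9 values, one per material channel
```

Because every texel goes through the same handful of small matrix products, the work maps well onto hardware built for that operation.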

However, NTC does have some limitations that may dampen its appeal. First, as with any lossy compression, it can introduce visual degradation at low bitrates. Researchers observed mild blurring, the removal of fine details, color banding, color shifts, and features leaking between texture channels.

Furthermore, game artists won't be able to optimize textures in all the same ways they do today, such as by lowering the resolution of certain texture maps for less important objects or NPCs. Nvidia says all maps need to be the same size before compression, which is bound to complicate workflows. This sounds even worse when you consider that the benefits of NTC don't apply at larger camera distances.

Perhaps the biggest disadvantages of NTC have to do with texture filtering. As we've seen with technologies like DLSS, there is potential for image flickering and other visual artifacts when using textures compressed through NTC. And while games can utilize anisotropic filtering to improve the appearance of textures in the distance at a minimal performance cost, the same isn't possible with Nvidia's NTC at this point.

Graphics geeks and game developers who want a deep dive into NTC can find the paper here – it's well worth a read. Nvidia will also present the new algorithm at SIGGRAPH 2023, which kicks off on August 6.