Simply put, the AMD Radeon RX 6500 XT is one of the worst Radeon products released in the last decade, and its timing couldn't have been worse. Back in January 2022, gamers were nearing the end of a dry spell for affordable gaming GPUs, due to the cryptocurrency mining boom.

If you had a working graphics card, our advice was to wait. However, for those desperate to start gaming, the cheapest usable options, such as a Radeon 5500 XT or RX 580, were priced around $300, a hefty price to pay for low-end, aging technology. The hope was that AMD or Nvidia would release a new current-gen GPU for around $200. But Nvidia being Nvidia, it seemed our only hope was AMD, so when a $200 Radeon 6500 XT was announced, things were looking promising, or so we thought.

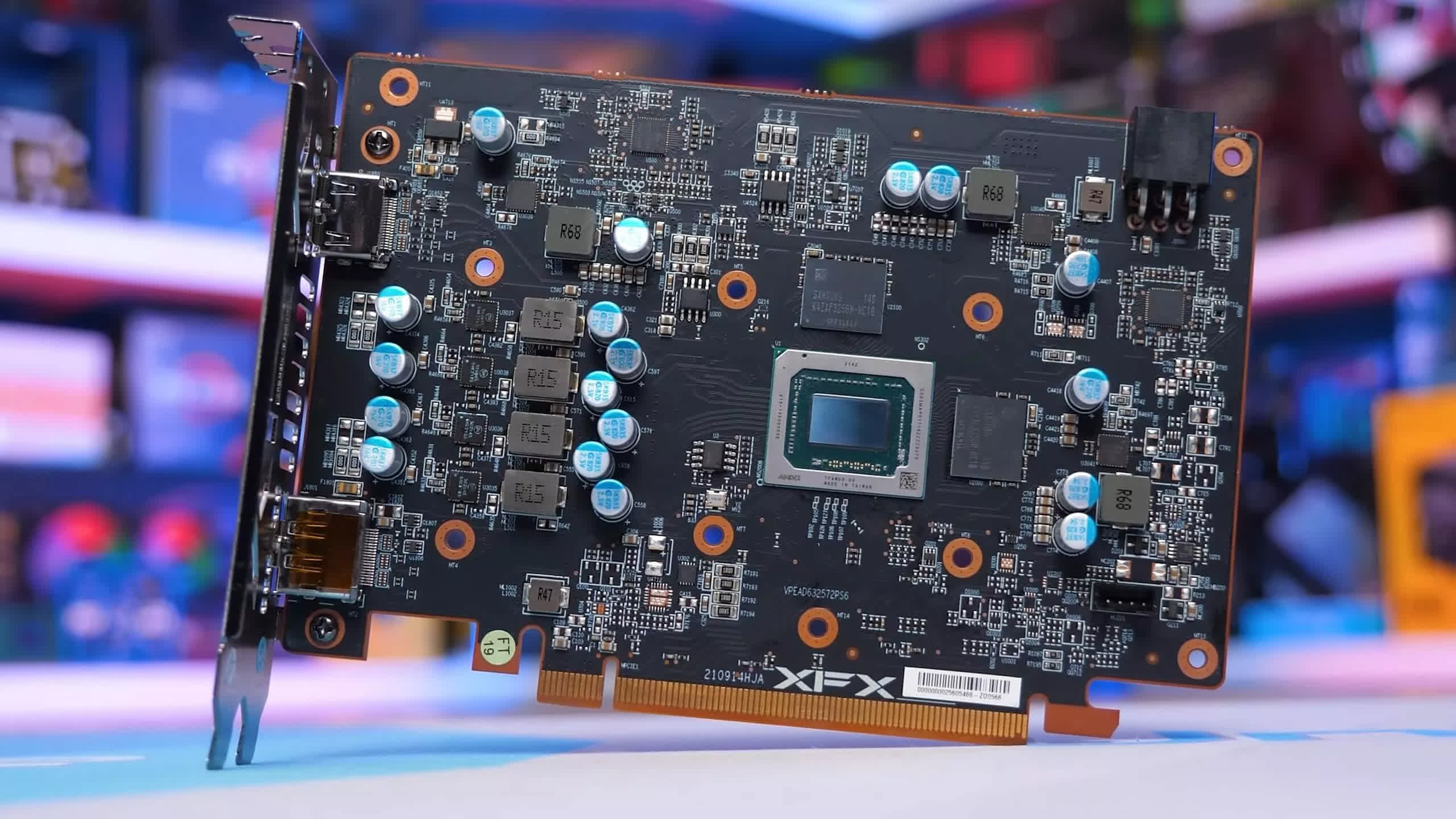

However, once we got hold of the specs, we knew it was going to be bad: compared to the Radeon RX 6600, the GPU core configuration had been cut by over 40%, with half as much Infinity Cache and a 36% reduction in memory bandwidth. Just how bad it would be was still a surprise.

This was compounded by the fact that, along with these core and memory downgrades, the PCI Express bandwidth was also halved, from x8 to x4. This is particularly problematic with just 4GB of memory, again half that of the RX 6600. The list of downgrades continues: there's no hardware encoding of any type, no AV1 decoding, and just two display outputs.
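To put the x4 link in perspective, here is a rough sketch of theoretical one-way PCIe bandwidth. The per-lane transfer rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b encoding come from the PCIe specifications rather than from our testing, the function name is purely illustrative, and real-world throughput will be lower than these figures.

```python
# Theoretical one-way PCIe bandwidth, accounting only for line encoding.
# Transfer rates and encoding are taken from the PCIe 3.0/4.0 specs; they are
# assumptions as far as this article goes, not measurements from our test system.
TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding used by PCIe 3.0 and 4.0


def pcie_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Return the approximate usable bandwidth in GB/s for one direction."""
    gigabits_per_lane = TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY
    return gigabits_per_lane / 8 * lanes  # 8 bits per byte


for gen, lanes, card in [
    ("4.0", 8, "RX 6600"),
    ("4.0", 4, "6500 XT"),
    ("3.0", 8, "RX 6600"),
    ("3.0", 4, "6500 XT"),
]:
    print(f"{card:8} PCIe {gen} x{lanes}: {pcie_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

On a PCIe 3.0 board, the 6500 XT is left with just under 4 GB/s in each direction, half of what the RX 6600 gets in the same slot. That matters most when a 4GB card has to spill data into system memory.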

Given all these issues, the $200 MSRP seemed excessive, and the $300 retail price that the 6500 XT maintained for the first few months of availability was even more discouraging.

Our advice at the time was to ignore the Radeon 6500 XT. At $300 it was a horrifically poor choice. Even at the $200 MSRP, in the then-current crypto mining market, it was such a subpar product that you would be better off buying second-hand or, preferably, simply waiting. Cryptomining was finally slowing down around then, and the boom would end soon, though we didn't know exactly when. It turned out to be just a few months after the release of the 6500 XT, so anyone who paid $300 at launch ended up getting burnt.

Paying a high price for a product is one thing, but paying for a product that is a complete failure is another matter entirely. The GeForce RTX 4090 is priced at $2,000, an incredibly high amount for a graphics card. We don't recommend spending that much, but if you do, you're getting the best gaming experience currently available, and a product that will remain highly capable for years.

The Radeon 6500 XT, however, was a weak product at launch, and we predicted it would become nearly unusable in the near future, even with the lowest quality settings. Now, roughly two years after its release, how is the 6500 XT performing in today's games? Let's find out.

For testing, we're using our Ryzen 7 7800X3D test system with 32GB of DDR5-6000 CL30 memory. Yes, this is a high-end CPU, but it doesn't matter whether we test with a Ryzen 5 5600 or a 7800X3D; the results will be the same. These lower-end GPUs always result in heavily GPU-limited scenarios, so much so that the CPU is almost irrelevant. That said, we have manually changed the PCIe mode to 3.0 for all testing, with the exception of the Radeon 6500 XT, which is tested using both 3.0 and 4.0.

Products such as the GeForce GTX 1650 Super and Radeon RX 6600 deliver basically the same results using either PCIe mode, so testing both was unnecessary and would just clutter the graphs. Finally, the display drivers used were Game Ready 546.33 and Adrenalin Edition 23.12.1.

Benchmarks

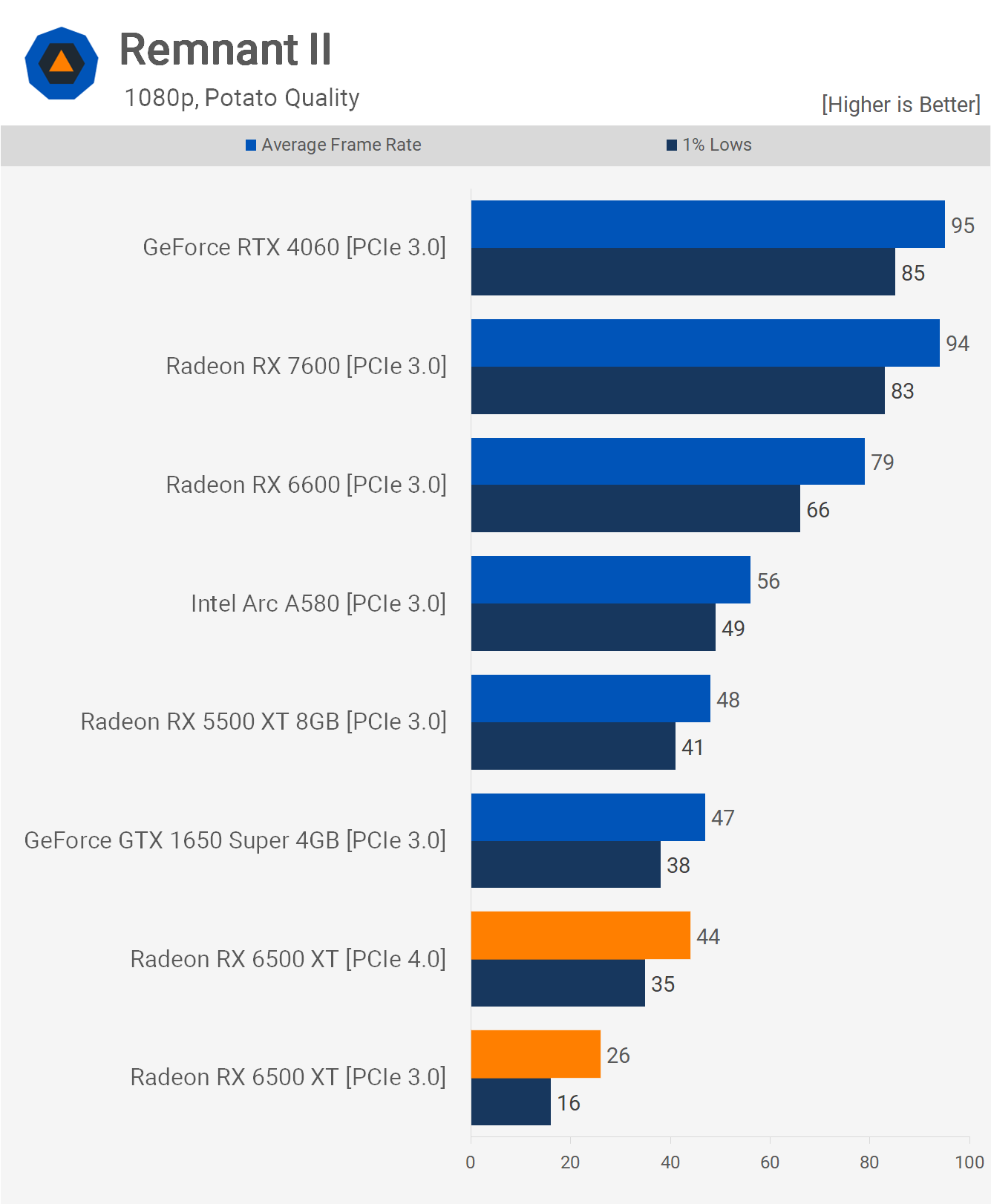

First up, we have Remnant II, and ironically, this game includes a 'Potato' preset, which is lower than low and the lowest quality option the game offers. Hilarious preset naming aside, the 6500 XT is a real disappointment in this one. With a PCIe 4.0 enabled system, the game is playable, though performance is lower than older models such as the 1650 Super and 5500 XT, which were tested using PCIe 3.0.

The big problem arises with PCIe 3.0 for the 6500 XT, where it struggles significantly, failing to average even 30 fps, and anything below that is widely considered unplayable. Even when the 6500 XT uses PCIe 4.0, the RX 6600 is 80% faster, yet at MSRP, it costs just 65% more and includes a host of additional features.
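That value comparison comes down to some quick arithmetic, sketched here. The prices are the $200 and $330 MSRPs mentioned in this article, the 80% figure is the Remnant II result with the 6500 XT on PCIe 4.0, and the helper name is just for illustration.

```python
def percent_more(a: float, b: float) -> float:
    """Return how much larger a is than b, as a percentage."""
    return (a / b - 1) * 100


msrp_6500xt = 200  # USD
msrp_rx6600 = 330  # USD

price_premium = percent_more(msrp_rx6600, msrp_6500xt)
perf_gain = 80.0  # RX 6600 vs 6500 XT (PCIe 4.0) in Remnant II, in percent

# Frames per dollar of the RX 6600 relative to the 6500 XT, both at MSRP.
relative_value = (1 + perf_gain / 100) / (1 + price_premium / 100)

print(f"Price premium:    {price_premium:.0f}%")
print(f"Performance gain: {perf_gain:.0f}%")
print(f"RX 6600 frames per dollar vs 6500 XT: {relative_value:.2f}x")
```

Any result above 1.0x means the more expensive card delivers more frames per dollar, before counting the extra VRAM and features.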

However, the main issue is that we have a $200 previous generation GPU that can't deliver 60 fps at 1080p in Remnant II, even using the lowest quality settings. That's concerning.

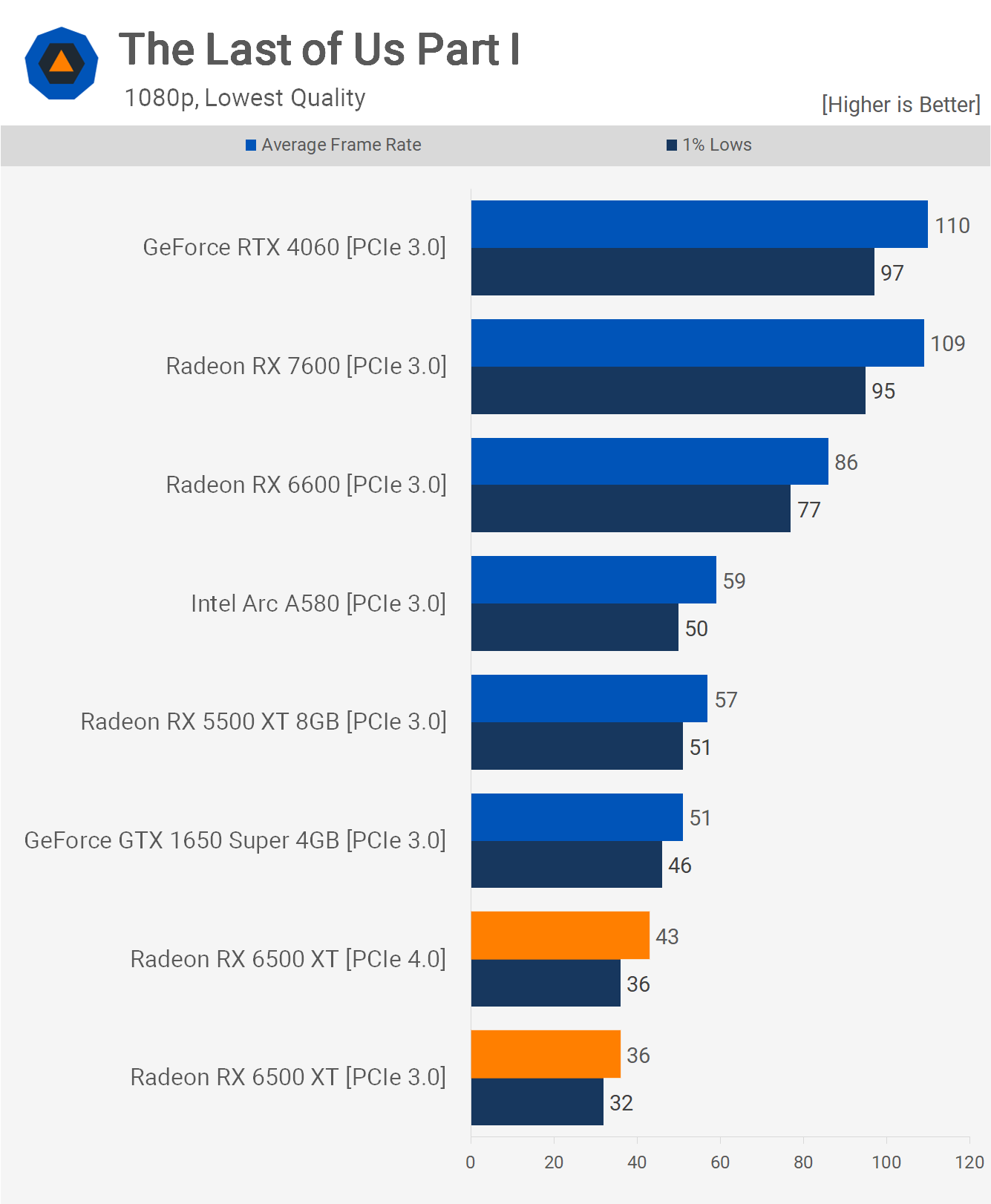

Next, we tested The Last of Us Part I, again at just 1080p using the lowest quality preset. The 6500 XT fails to approach 60 fps and is significantly slower than parts such as the 1650 Super and, embarrassingly, the 5500 XT.

In this case, the RX 6600 was 100% faster, or almost 140% faster when the 6500 XT is limited to PCIe 3.0. It's also noteworthy that the 5500 XT, also using PCIe 3.0, is almost 60% faster than the 6500 XT on the same PCIe spec. So, The Last of Us Part I is another game where the 6500 XT falls short of 60 fps at 1080p on the lowest settings.
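Those percentages imply a few relationships that aren't spelled out, and normalizing everything to a single baseline makes them visible. This is only a sketch: it treats the rounded "almost 140%" and "almost 60%" figures as exact, so the outputs are approximate, and the variable names are our own.

```python
# Normalize to the 6500 XT on PCIe 3.0 (= 1.0) in The Last of Us Part I.
# Inputs are the rounded percentages quoted in the text, so results are approximate.
xt6500_pcie3 = 1.0
rx6600 = xt6500_pcie3 * 2.40    # almost 140% faster than the 6500 XT on PCIe 3.0
rx5500xt = xt6500_pcie3 * 1.60  # almost 60% faster than the 6500 XT on PCIe 3.0
xt6500_pcie4 = rx6600 / 2.00    # the RX 6600 is 100% faster than the 6500 XT on PCIe 4.0


def percent_faster(a: float, b: float) -> float:
    """Return how much faster a is than b, as a percentage."""
    return (a / b - 1) * 100


pcie4_uplift = percent_faster(xt6500_pcie4, xt6500_pcie3)
rx5500xt_vs_pcie4 = percent_faster(rx5500xt, xt6500_pcie4)
rx6600_vs_5500xt = percent_faster(rx6600, rx5500xt)

print(f"6500 XT gain from PCIe 4.0:    {pcie4_uplift:.0f}%")
print(f"5500 XT vs 6500 XT (PCIe 4.0): {rx5500xt_vs_pcie4:.0f}% faster")
print(f"RX 6600 vs 5500 XT:            {rx6600_vs_5500xt:.0f}% faster")
```

Under those assumptions, moving the 6500 XT from PCIe 3.0 to 4.0 is worth roughly a fifth more performance in this title, yet the older 5500 XT on PCIe 3.0 still comes out ahead.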

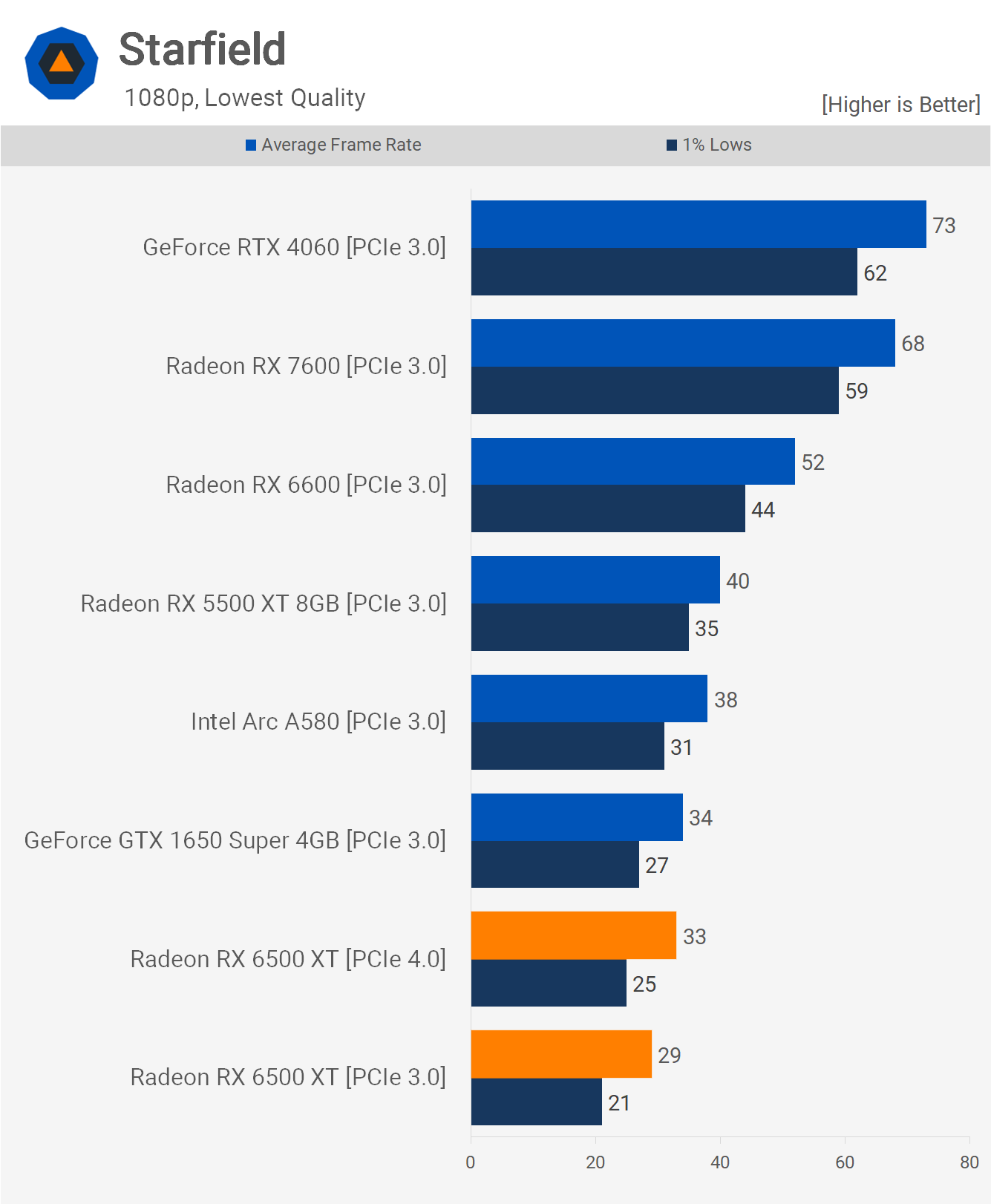

In Starfield, the 6500 XT also delivers disappointing results. When limited to PCIe 3.0, the GTX 1650 Super was 17% faster, while the 5500 XT was a significant 38% faster. Again, the RX 6600 outperforms by 80%. The RX 6600's initial $330 price was underwhelming, but even ignoring competitors, the 6500 XT's performance is lacking, hovering around 30 fps at best at 1080p on the lowest settings.

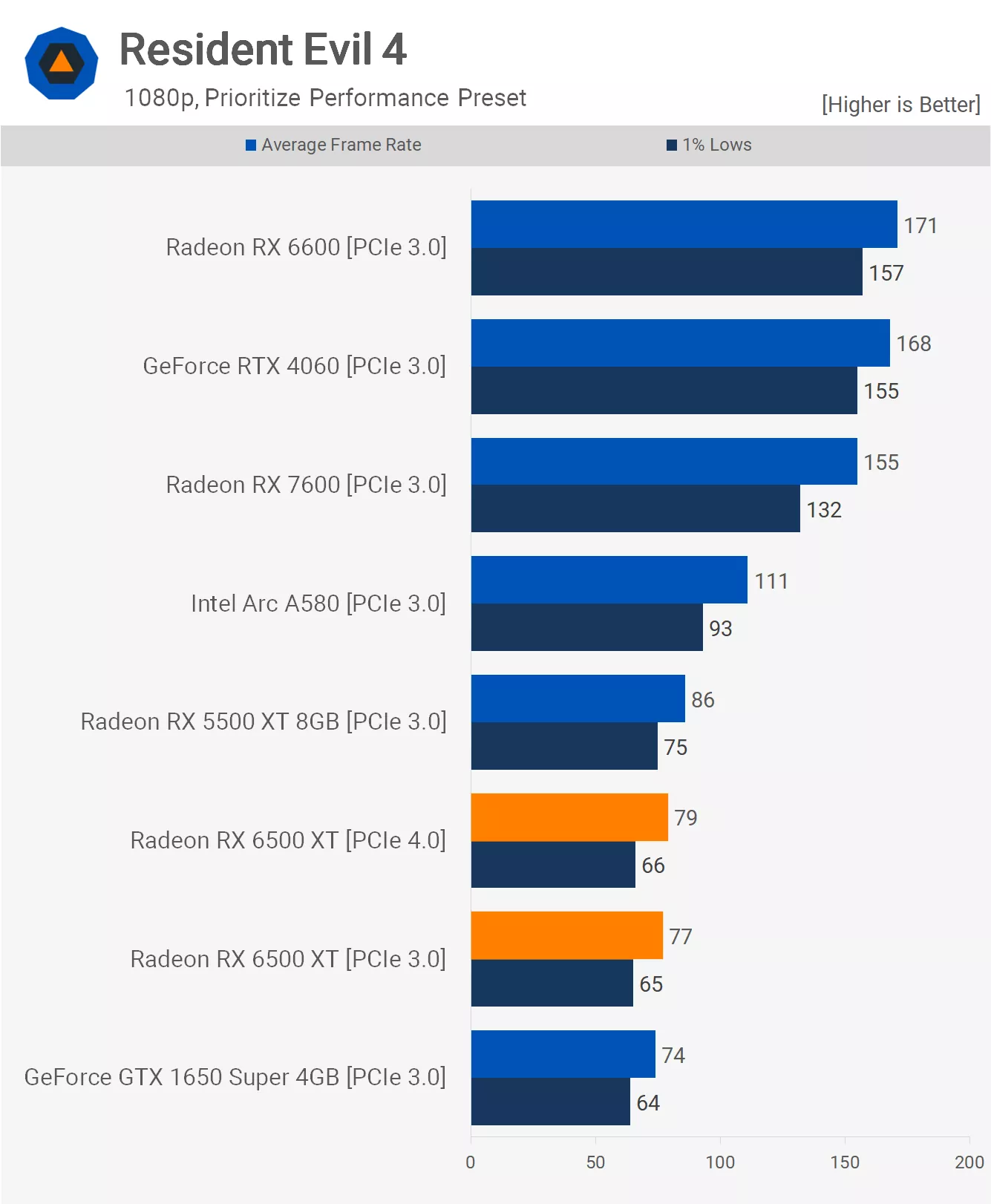

In Resident Evil 4, the 6500 XT's performance aligns more with what's expected from a previous generation entry-level product. At 1080p using the lowest quality preset, the 6500 XT managed over 70 fps, comparable to the GTX 1650 Super but slower than the 5500 XT.

Despite the playable performance, the RX 6600 was still 122% faster, offering the potential for much higher visual quality.

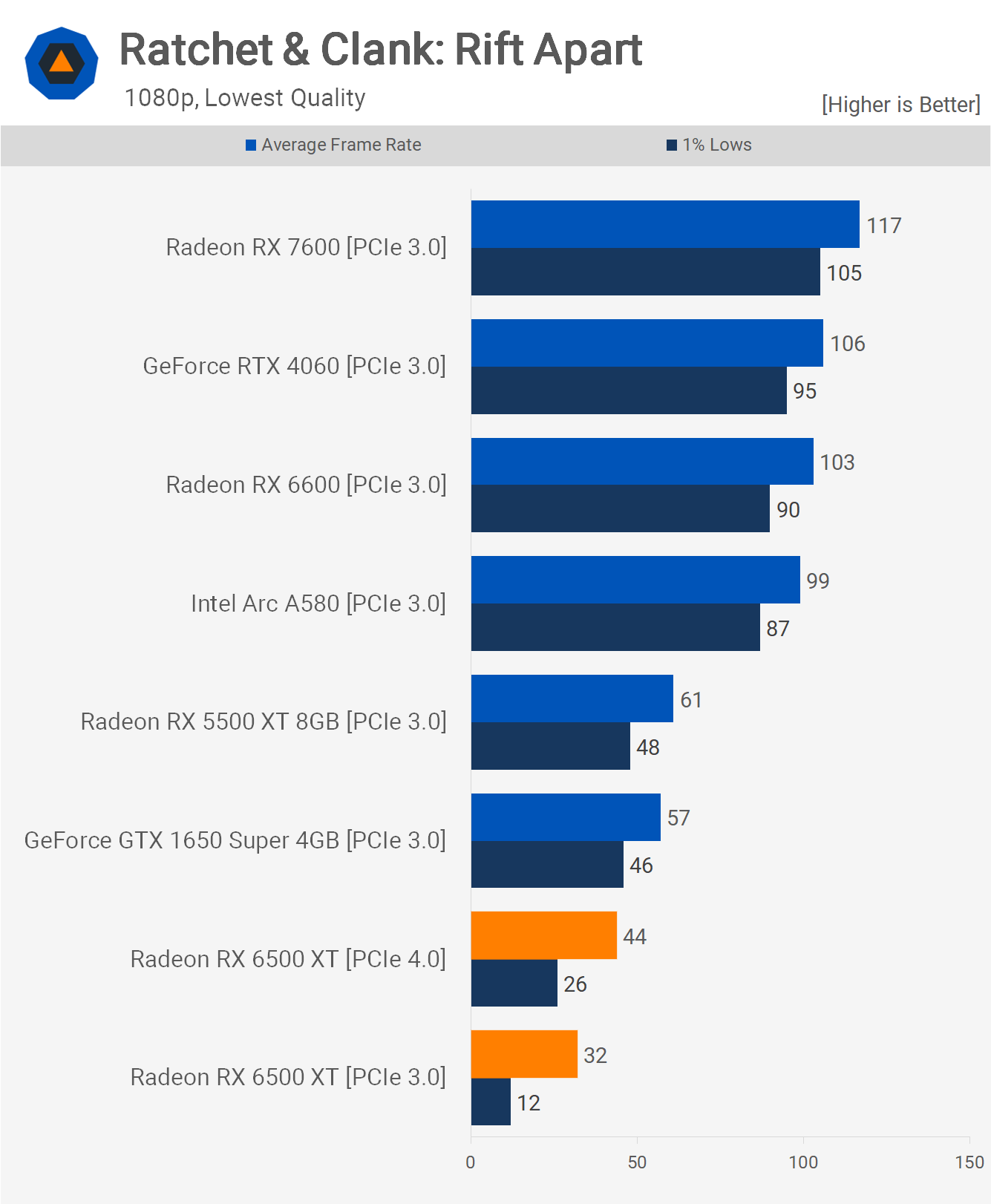

In Ratchet & Clank: Rift Apart, the 6500 XT performed worse than expected. Using PCIe 3.0, performance was hampered by not just a low average frame rate but also constant and very noticeable frame stuttering, likely due to low VRAM and limited PCIe x4 bandwidth.

Switching to PCIe 4.0 improved performance to 44 fps, but frame stuttering remained an issue. This seems to be related to PCIe bandwidth, as the 1650 Super, another 4GB card, didn't experience the same problem. The 5500 XT was also better, benefiting from more PCIe bandwidth and crucially, 8GB of VRAM.

In this case, the RX 6600 was 134% faster and provided smooth gameplay, whereas the 6500 XT was unable to offer smooth gameplay and fell well short of 60 fps at 1080p on the lowest settings.

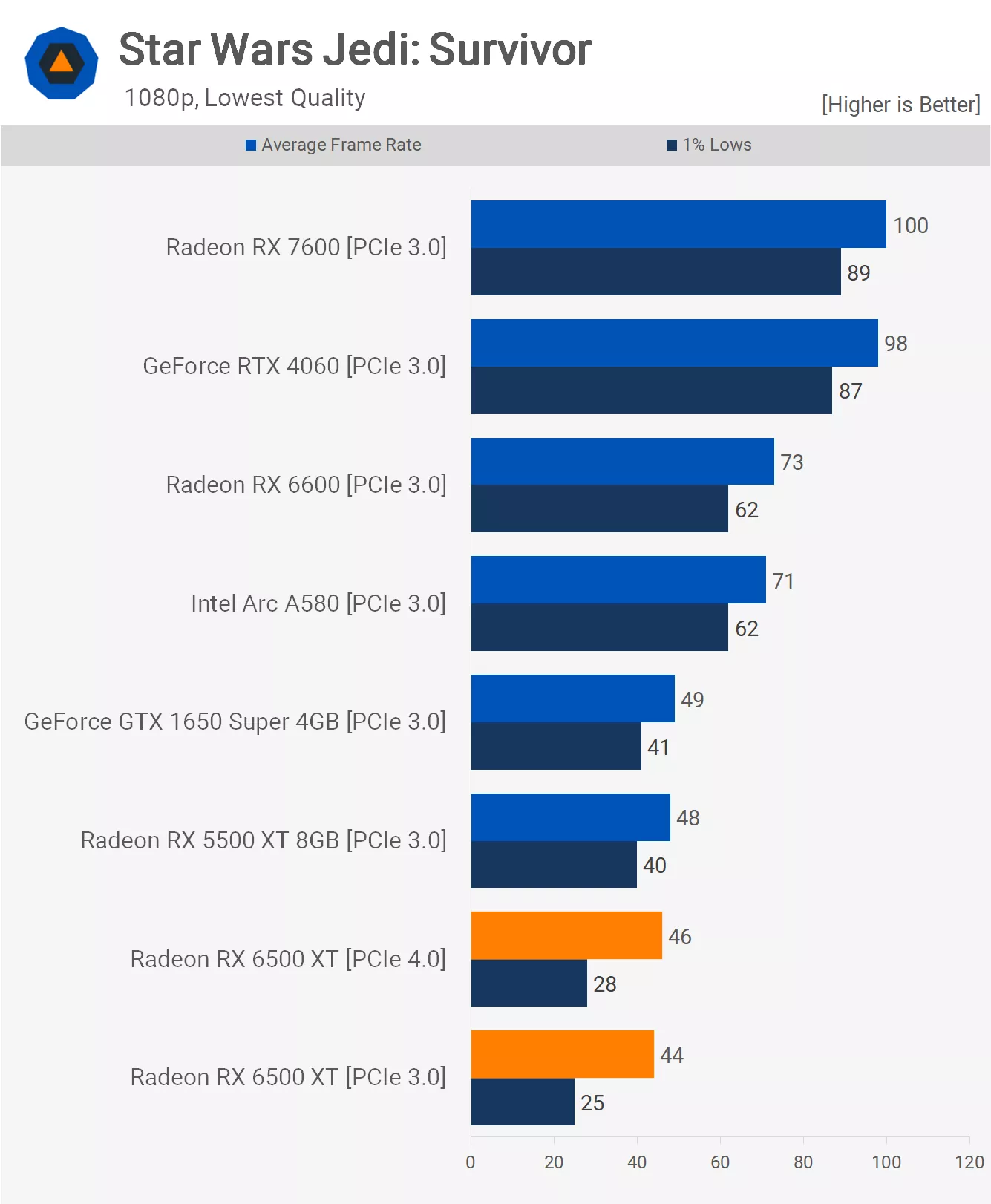

The performance in Star Wars Jedi: Survivor was very poor. Despite delivering over 40 fps on average, the gameplay was plagued with constant stuttering. In contrast, the 5500 XT and 1650 Super performed flawlessly and even avoided traversal stuttering in this title, despite the sub-60 fps frame rate.

We have yet another example where the 6500 XT fails to achieve 60 fps at 1080p using the lowest quality settings.

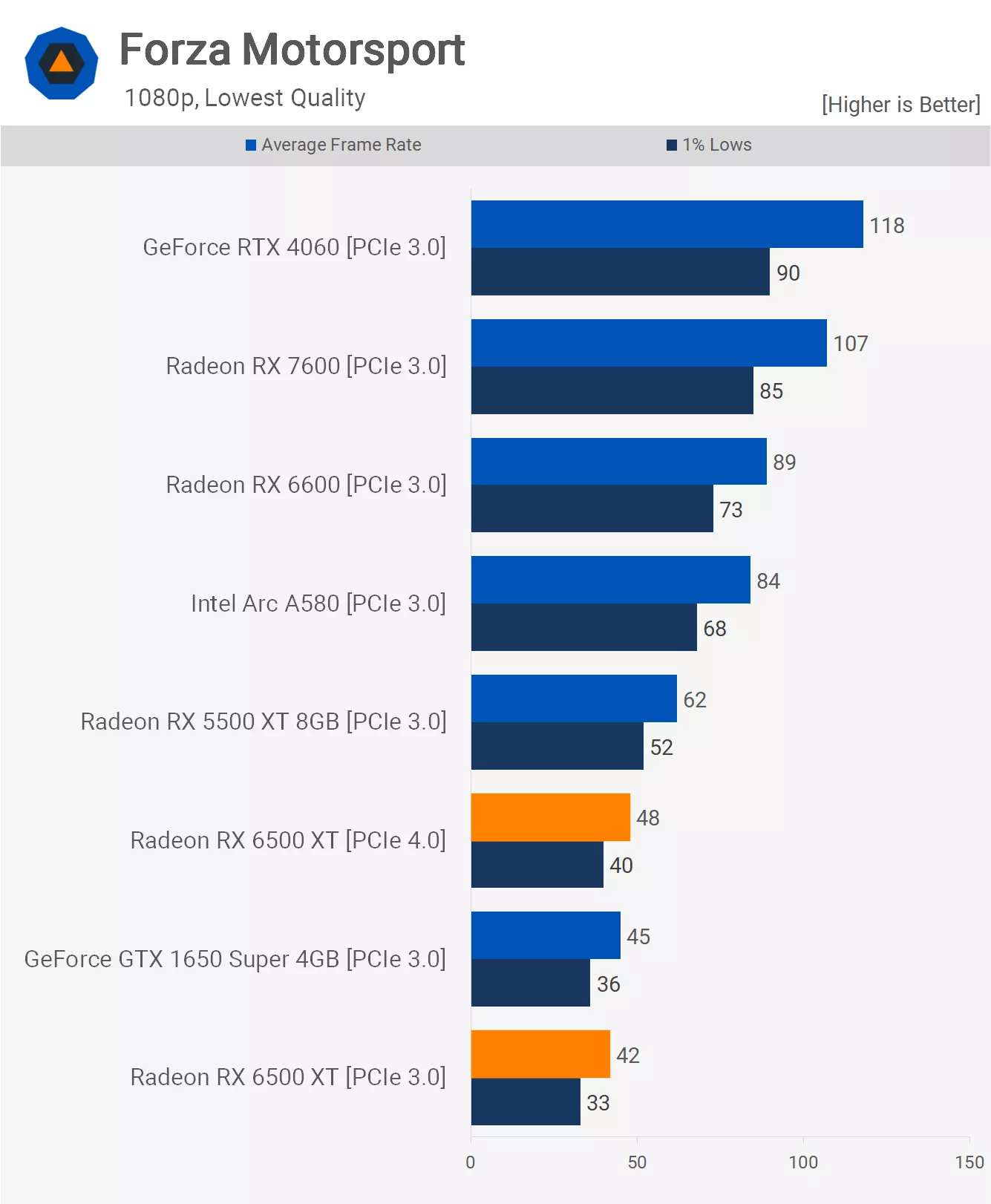

Next, we have Forza Motorsport, where the 6500 XT performs comparably to the GTX 1650 Super, achieving 48 fps using PCIe 4.0 and 42 fps with PCIe 3.0. This results in playable performance, though it's far from ideal and significantly below 60 fps, which is concerning since we're testing at 1080p using the lowest possible preset.

More worrisome is that, comparing the PCIe 3.0 data, the 5500 XT was 48% faster, and the RX 6600 was 112% faster.

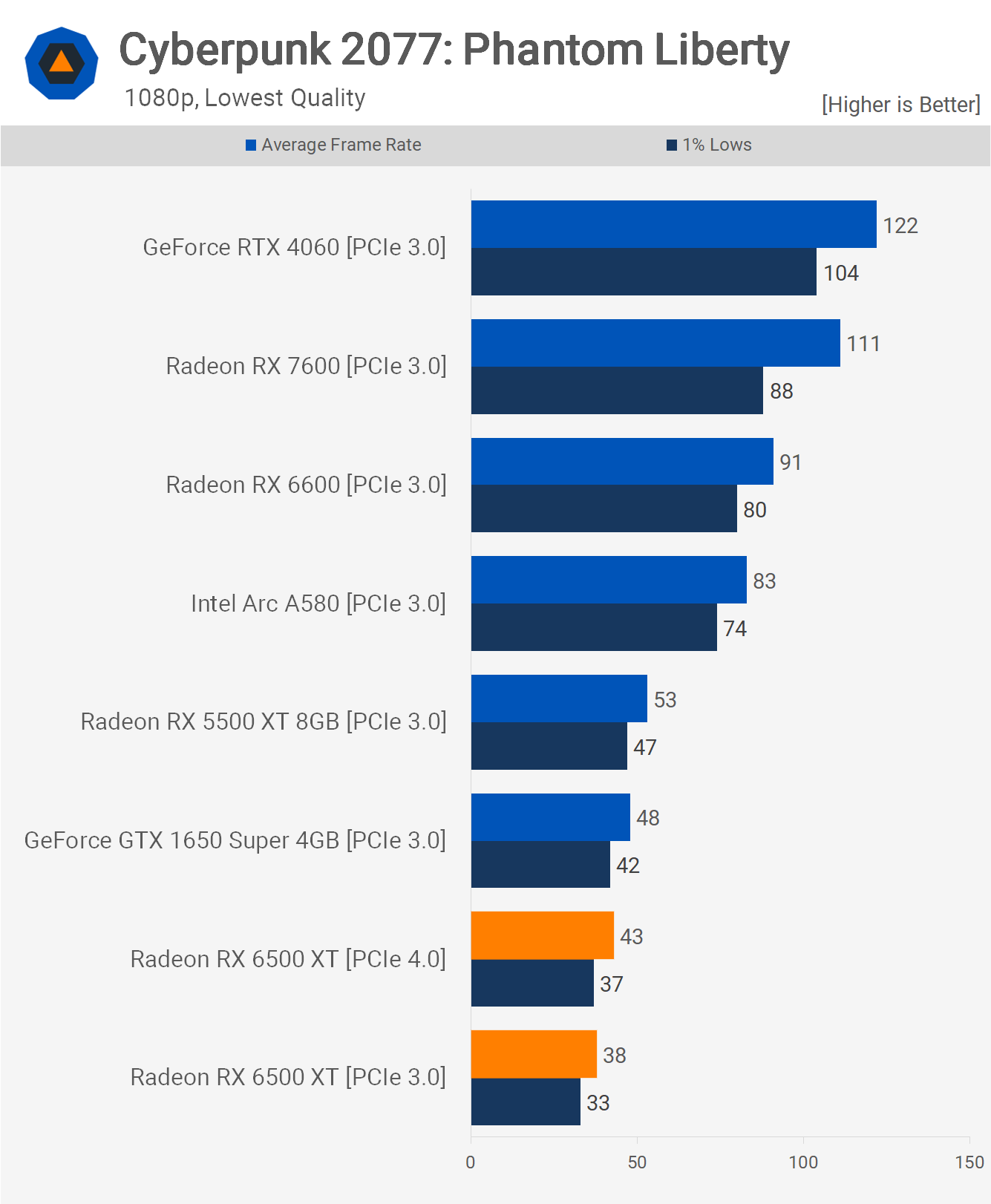

In Cyberpunk 2077: Phantom Liberty, the 6500 XT lags behind the 1650 Super and significantly behind the 5500 XT. Most importantly, it falls well short of 60 fps at 1080p using the lowest quality preset. This also means that, when comparing PCIe 3.0 data, the RX 6600 is 139% faster.

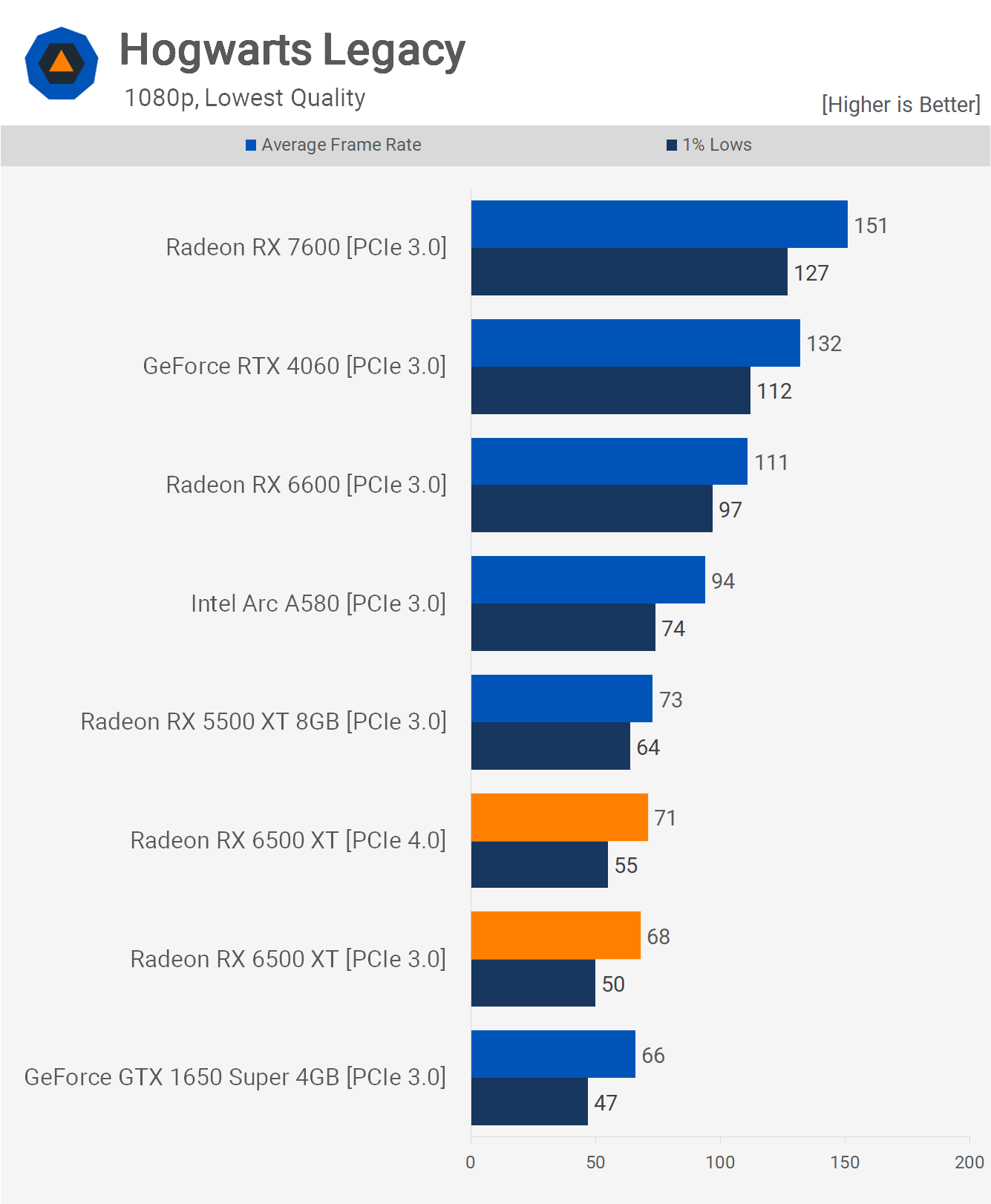

The performance in Hogwarts Legacy is surprisingly good, achieving over 60 fps, again at 1080p using the lowest quality preset. However, this still places the 6500 XT on par with the 1650 Super and slightly behind the 5500 XT, and frame time performance was noticeably better with the 8GB 5500 XT.

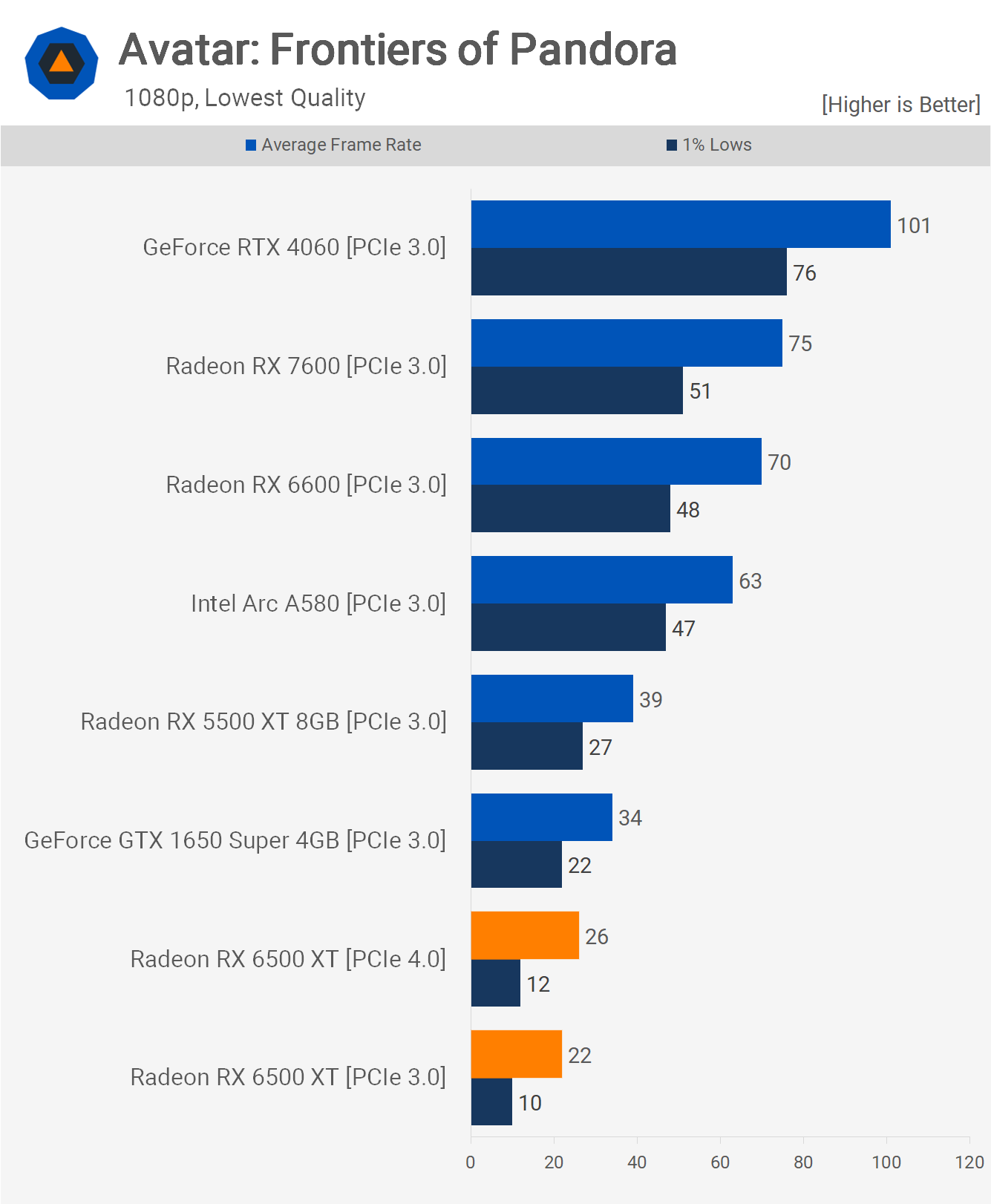

Moving to Avatar: Frontiers of Pandora, we can somewhat excuse the 6500 XT, as no low-end GPUs from previous generations deliver playable performance in this title. However, it falls short of 30 fps, while the 1650 Super manages 34 fps and the 5500 XT 39 fps, making the 6500 XT's performance comparatively poor. Additionally, choosing the RX 6600 would have resulted in around 170% better performance in this title.

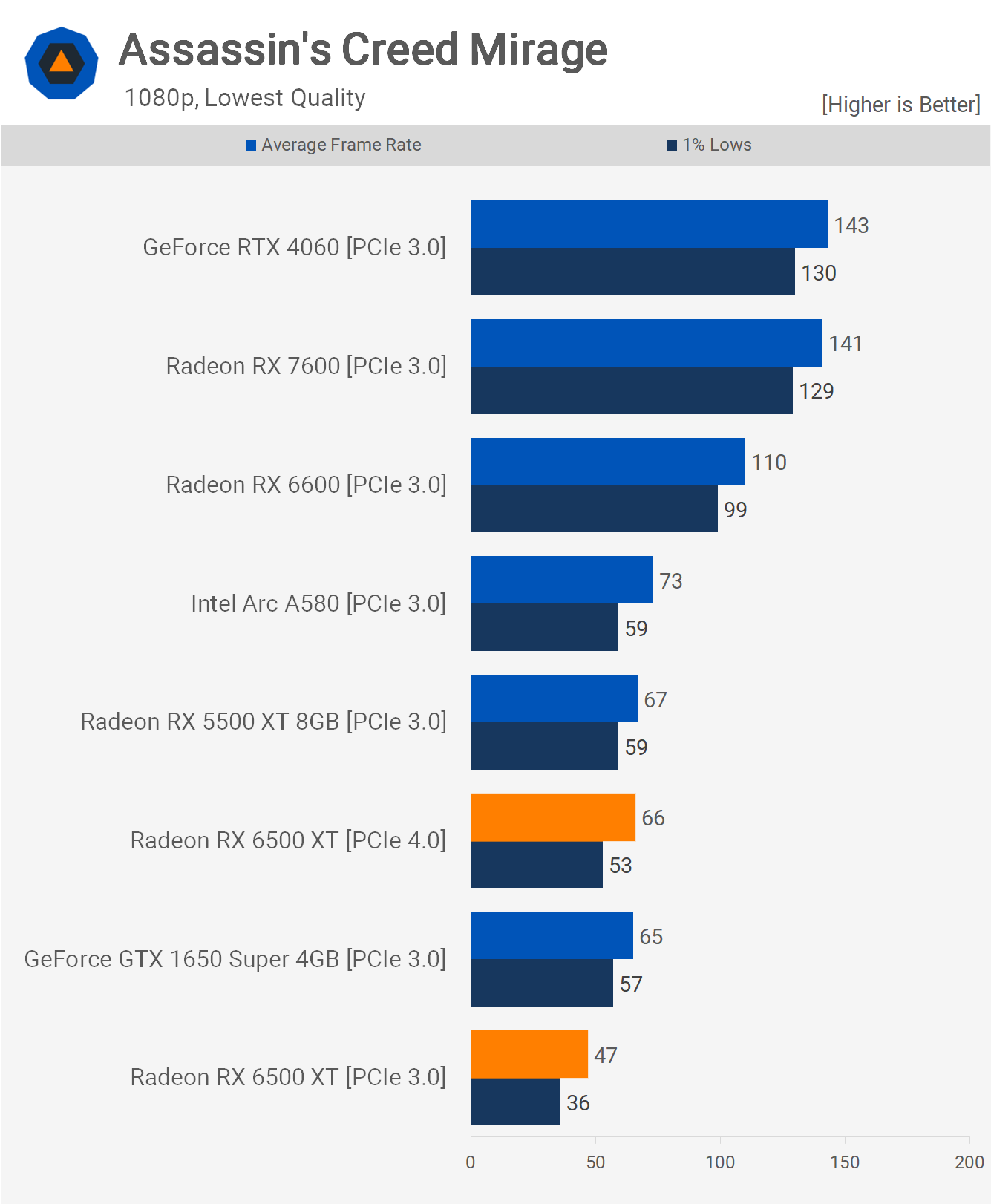

Assassin's Creed Mirage plays quite well on the 6500 XT, provided you have a PCIe 4.0 enabled system, as this allows for an average of 66 fps at 1080p using the lowest quality settings. This makes the 6500 XT comparable to the 1650 Super and 5500 XT, though both of these models were using PCIe 3.0. When comparing PCIe 3.0 data, these models are about 40% faster.

This also means that, with PCIe 3.0, the RX 6600 is 134% faster than the 6500 XT. Furthermore, the 6500 XT falls short of 60 fps when installed in a PCIe 3.0 system.

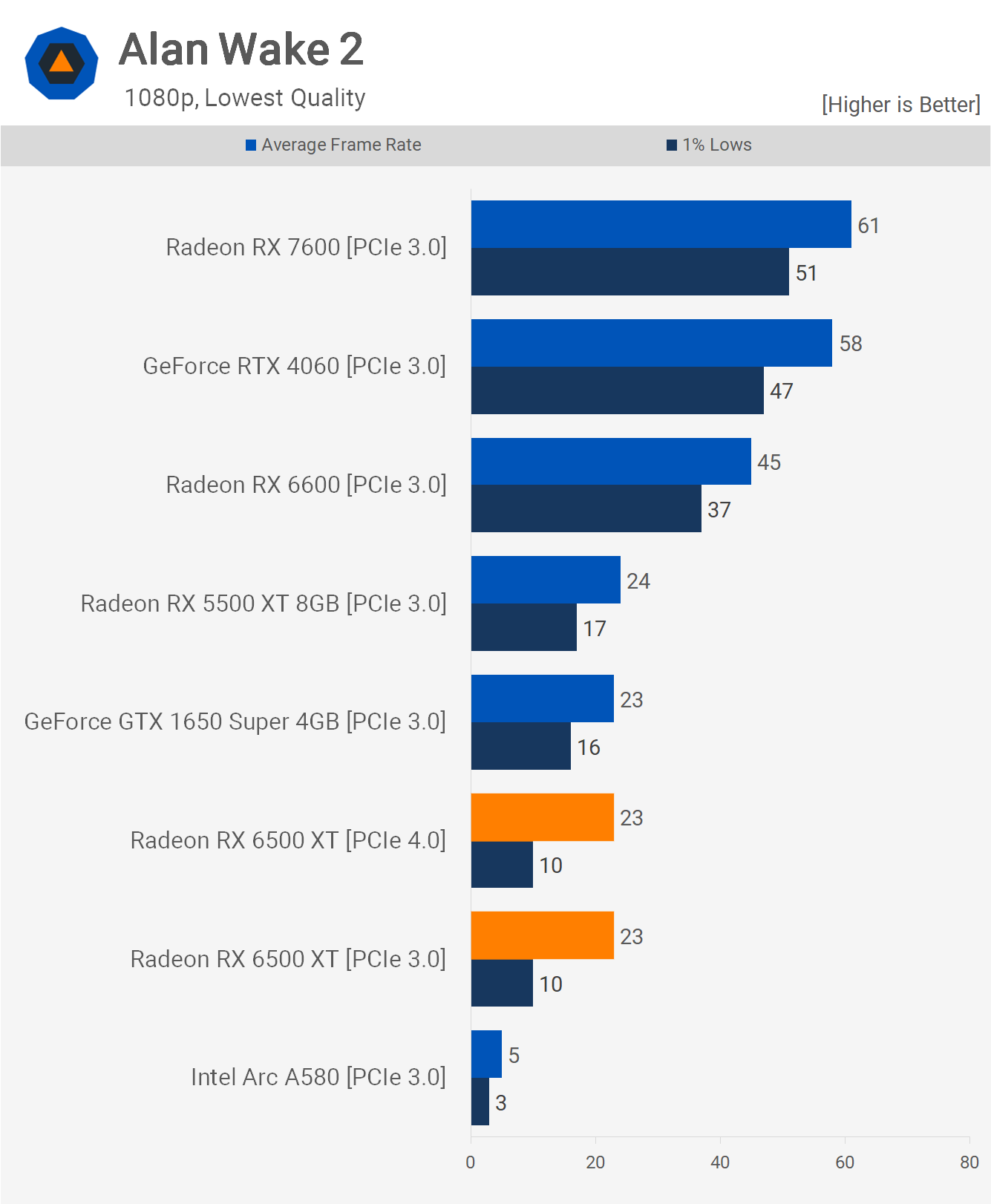

Like Avatar, Alan Wake 2 is another game that overwhelms low-end GPUs, as well as any Arc GPU. Nevertheless, the RX 6600 was almost twice as fast as the 6500 XT, delivering a playable 45 fps.

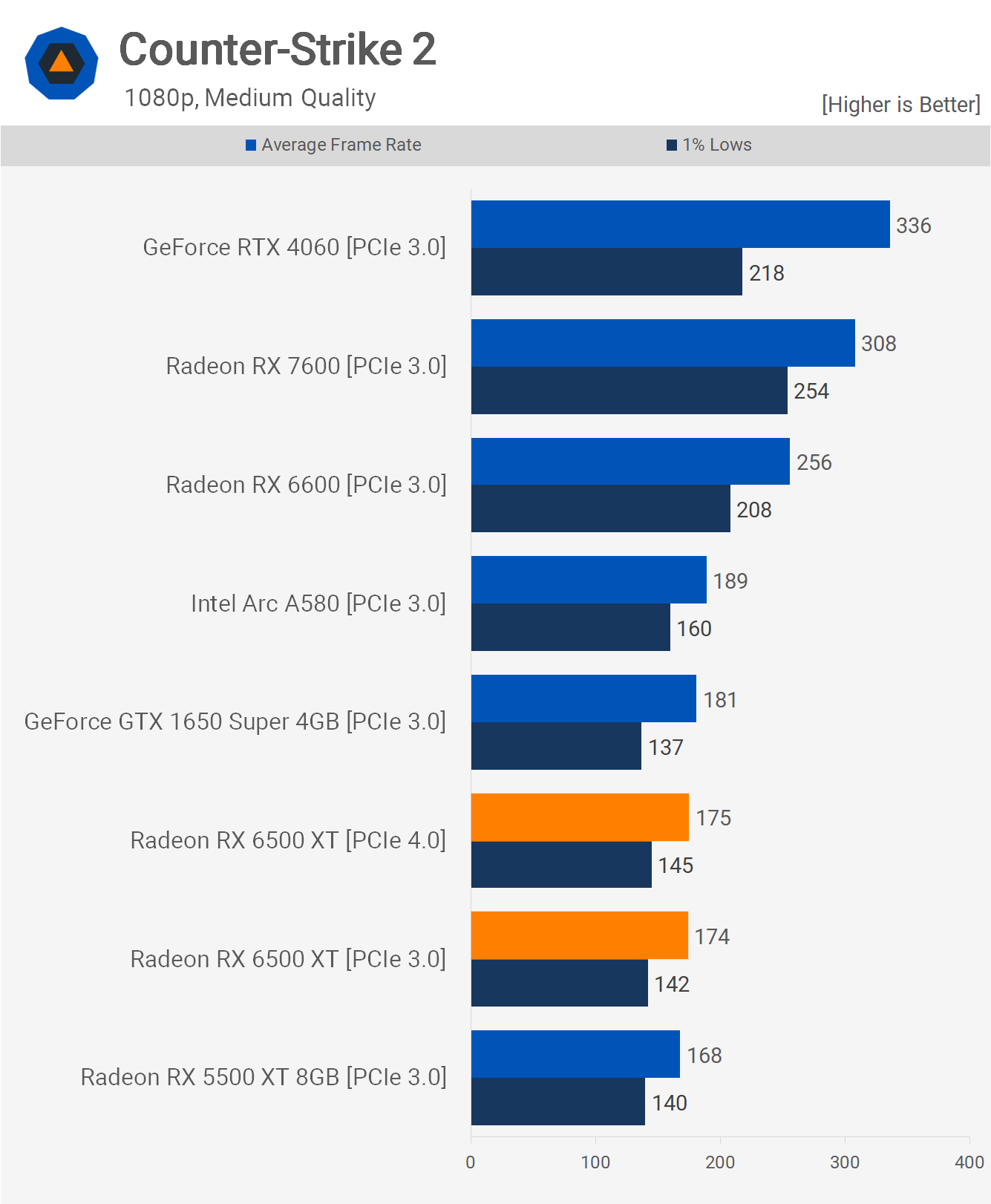

The 6500 XT could be considered adequate for esports titles, which are typically less graphically demanding. However, it's no better than older products such as the 5500 XT or 1650 Super, which often perform better in other titles. In our tests with Counter-Strike 2, we observed just over 170 fps using medium settings at 1080p, comparable to the 5500 XT and 1650 Super. The RX 6600 was only 47% faster in this case, as the limited PCIe bandwidth and 4GB memory buffer did not significantly impact the 6500 XT in this title.

Average Performance

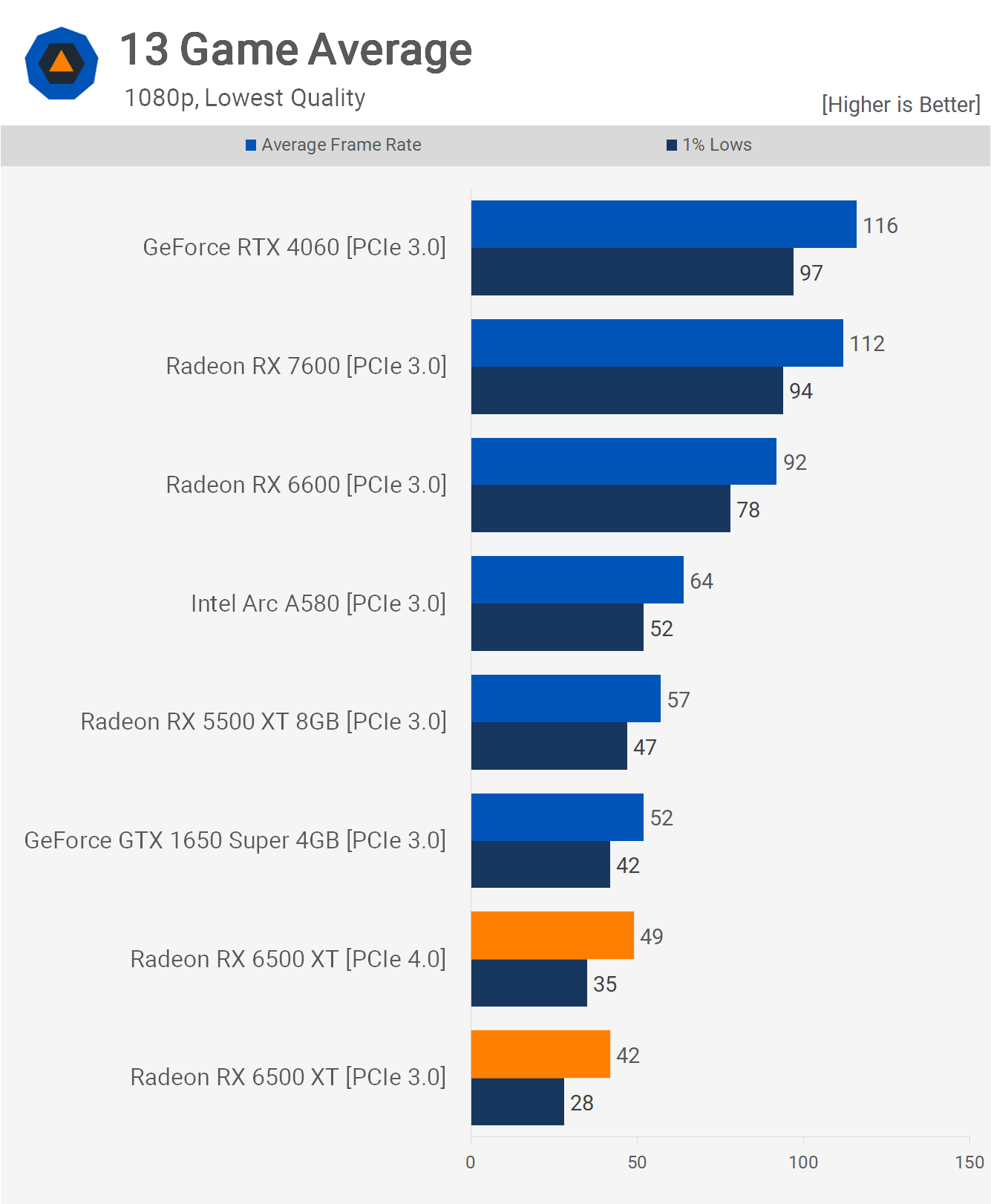

Here's the 13-game average, and as you can see across all the games tested, including Counter-Strike 2, the 6500 XT only averages 49 fps using PCIe 4.0 and 42 fps with PCIe 3.0, so both are well below 60 fps.

In comparison, the 5500 XT averaged 57 fps and the newer A580 64 fps. The RX 6600, meanwhile, offers 120% more performance on average when comparing the PCIe 3.0 data.

Cost per Frame

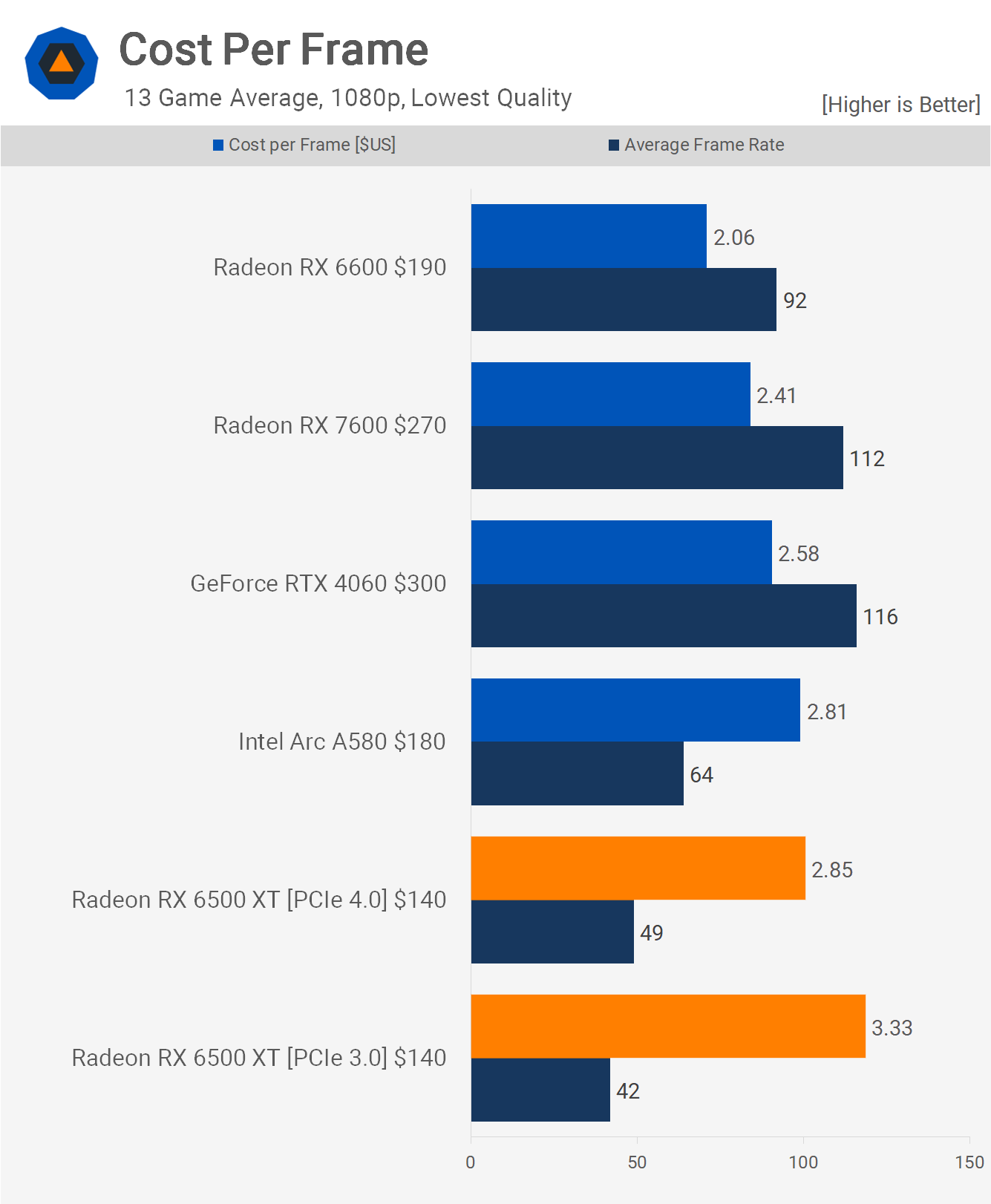

Now, comparing the cost per frame data using today's pricing, we see that the 6500 XT is still poor value, even at $140, because GPUs such as the RX 6600 are priced at $190. As a result, the 6500 XT costs at least 38% more per frame, and that doesn't factor in all the missing features.

Then, if we compare the PCIe 3.0 data, the 6500 XT ends up costing 62% more per frame, which is excessive. Even the RTX 4060 and RX 7600 offer better value, and we weren't particularly impressed with either of those products.
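For anyone who wants to rerun the numbers with their own local pricing, cost per frame is simply price divided by average frame rate. The sketch below uses the prices and 13-game averages quoted in this article. The RX 6600's average is not quoted directly, so it is inferred here from the "120% more performance" figure, which is an assumption of this sketch and makes the results approximate.

```python
# Prices and average frame rates quoted in this article.
price_6500xt, price_rx6600 = 140, 190  # USD, current pricing
fps_6500xt_pcie4 = 49                  # 13-game average, 1080p
fps_6500xt_pcie3 = 42

# Assumption: RX 6600 average inferred from "120% more performance"
# than the 6500 XT on PCIe 3.0, since the exact figure isn't quoted in the text.
fps_rx6600 = fps_6500xt_pcie3 * 2.2


def cost_per_frame(price: float, fps: float) -> float:
    """Return dollars paid per average frame per second."""
    return price / fps


cpf_rx6600 = cost_per_frame(price_rx6600, fps_rx6600)
cpf_pcie4 = cost_per_frame(price_6500xt, fps_6500xt_pcie4)
cpf_pcie3 = cost_per_frame(price_6500xt, fps_6500xt_pcie3)

extra_pcie4 = (cpf_pcie4 / cpf_rx6600 - 1) * 100
extra_pcie3 = (cpf_pcie3 / cpf_rx6600 - 1) * 100

print(f"RX 6600:            ${cpf_rx6600:.2f} per frame")
print(f"6500 XT (PCIe 4.0): ${cpf_pcie4:.2f} per frame, {extra_pcie4:.0f}% more")
print(f"6500 XT (PCIe 3.0): ${cpf_pcie3:.2f} per frame, {extra_pcie3:.0f}% more")
```

Because the RX 6600 average is inferred from a rounded percentage, the PCIe 4.0 result can land a point or so away from the figure read off the graph.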

Not for Modern Games

So, how is the Radeon RX 6500 XT performing in modern games? Not well. If limited to PCI Express 3.0, it only achieved 60 fps at 1080p using the lowest possible quality preset in 2 out of 12 triple-A games tested – or just 3 titles when using PCIe 4.0, which is clearly inadequate.

It was often much slower than the 8GB 5500 XT, which admittedly was a more expensive product when the 6500 XT was released in early 2022. The 4GB version of the 5500 XT was not, however, and it is still a better choice. Additionally, the cheapest 6500 XT listing back in January 2022 was $270, dropping to $250 the following month and to $210 by April. The Radeon RX 6600, meanwhile, dropped from $350 to $210 later that year.

Today, the Radeon 6500 XT costs $140, while the Radeon RX 6600 is available for as little as $190. That's a 35% premium for what is often 2x the performance, with twice as much VRAM, hardware encoding support, AV1 decoding, and more than two display outputs. Not only that, but when we look at cost per frame, the RX 6600 is almost 40% better value.

The 6500 XT was a poor release from AMD, and it was poorly timed. It should have launched at no more than $150 and never sold above that price. Today, it shouldn't be priced over $100; anything over that, and you're better off investing in the RX 6600, or perhaps even the Intel Arc A580, though the RX 6600 is the value king at the $200 price point or lower.

It's no surprise to us that the 6500 XT is already obsolete for many modern titles. We just feel for those who were lured into paying as much as $300 for this GPU as that's a harsh deal.