The third and final installment in our 'Needs to Fix' series is focused on Nvidia. Having previously discussed what we feel Intel and AMD can do with their upcoming products to become more consumer friendly, it's now time to look at the green team. As before, we're looking at this from the perspective of the consumer and not as industry analysis. Here we go...

Misleading product names

After the GTX 970 mess, we thought Nvidia would want to play it cool with the GeForce 10 series. But considering the 970 was one of their best-selling GPUs, they clearly didn't see the need. Nvidia started off strong in May 2016 with the GTX 1080, followed up with the 1070 in June and the 1060 in July. It was a strong lineup covering the $250, $400 and $600 price points.

Later in 2016 Nvidia threw us a curveball with the 3GB GTX 1060, a new model featuring half the VRAM, which in itself wasn't a particularly big deal. However, they also disabled an SM unit, taking the CUDA core count from 1280 down to 1152. This meant that despite sharing the same name, it actually had 10% fewer cores.

Calling the 3GB model a GTX 1060 was misleading, given that its name and headline specs suggested only the VRAM capacity had changed. Ideally Nvidia should have called this model the GTX 1050 Ti, before the actual 1050 Ti launched in October. Admittedly, I didn't pull Nvidia up on this hard enough at the time. I've since called them out on it a few times, but I regret not making more noise in our day-one coverage.

It seems Nvidia didn't care about the negative feedback they received from some reviewers and many customers, as they later went on to release a 5GB 1060. Although that model does have all its cores enabled, the reduced memory capacity in this case also means less memory bandwidth. More recently they also released a 3GB version of the GTX 1050, which has less bandwidth than the original 2GB and 4GB models. So we want to see Nvidia do better in this regard and stop with the misleading product names, and we'll be making a bigger effort to push back against this anti-consumer practice.

Bait-and-switch

If you thought for a second we had missed the whole GT 1030 fiasco when discussing misleading product names, we didn't. But this one is so bad we feel it deserves its own category. The 3GB version of the GTX 1060 certainly was misleading, but for around 20% less than the 6GB version, those playing at 1080p should only see a ~7% dip in performance. So in terms of value it's actually pretty good. I'm not justifying it, I'm simply noting that it's not a bad proposition.
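Why does a ~7% performance dip for ~20% less money still count as decent value? A quick performance-per-dollar comparison makes it clear. The sketch below is illustrative only and uses just the rounded figures quoted above. Both cards are normalised so that the 6GB GTX 1060 is 1.0 in price and performance, and `perf_per_dollar` is a hypothetical helper rather than any benchmark tool.

```python
# Rough performance-per-dollar comparison using the article's rounded figures.
# Both cards are normalised to the 6GB GTX 1060 (price = 1.0, performance = 1.0).

def perf_per_dollar(relative_perf: float, relative_price: float) -> float:
    """Return performance per unit of price, relative to the baseline card."""
    return relative_perf / relative_price

gtx1060_6gb = perf_per_dollar(1.00, 1.00)
# ~7% slower at 1080p, ~20% cheaper (approximate figures from the text)
gtx1060_3gb = perf_per_dollar(1.00 - 0.07, 1.00 - 0.20)

advantage = gtx1060_3gb / gtx1060_6gb - 1
print(f"3GB model delivers about {advantage:.0%} more performance per dollar")
```

On these rounded numbers the 3GB card comes out roughly 16% ahead on performance per dollar. That gap explains why it was a reasonable buy despite the misleading name.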

However, what Nvidia has recently pulled with the GT 1030 really is disgusting. Although the core configuration hasn't been touched, performance has been severely crippled by swapping out the GDDR5 memory for DDR4, the same type of memory modern desktop PCs use as system memory.

The end result is roughly a third of the memory bandwidth, dropping from an already anemic 48 GB/s to just 16.8 GB/s. This means that in memory-intensive workloads, in other words games, the new DDR4 version is often 1-2x slower, yet it isn't half the price either. It's $10 cheaper. Yes, $10.
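Peak memory bandwidth is simply the bus width in bytes multiplied by the effective data rate per pin. The sketch below assumes a 64-bit memory bus on both GT 1030 variants, with effective data rates of 6 Gbps for GDDR5 and 2.1 Gbps for DDR4. These inputs aren't stated above, but they reproduce the 48 GB/s and 16.8 GB/s figures. The helper name is hypothetical.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s = (bus width in bytes) x (effective data rate in Gbps per pin)."""
    return bus_width_bits / 8 * data_rate_gbps

# Assumed specs: 64-bit bus for both variants; data rates are assumptions, not from the text
gddr5 = peak_bandwidth_gbs(64, 6.0)   # original GDDR5 GT 1030
ddr4 = peak_bandwidth_gbs(64, 2.1)    # DDR4 GT 1030

print(f"GDDR5: {gddr5:.1f} GB/s, DDR4: {ddr4:.1f} GB/s, ratio: {gddr5 / ddr4:.2f}x")
```

The GDDR5 card ends up with about 2.86x the bandwidth of the DDR4 card, which is where the "roughly a third" figure comes from.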

This is incredibly deceitful. The risk to gamers on a tight budget is extreme, and while it's unfair to take advantage of anyone, robbing those struggling the most to get into gaming is truly distasteful in our opinion.

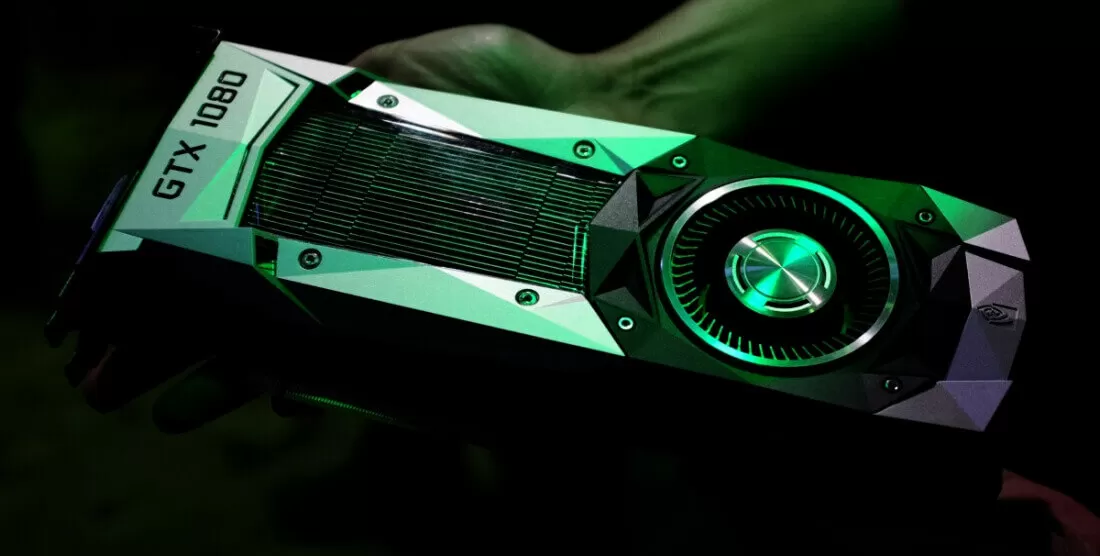

"Founders Edition" price premium

With the release of the GeForce 10 series Nvidia introduced the "Founders Edition," a sexy-looking reference card that no one should have bought, and we said as much in our day-one coverage. In terms of quality the Founders Edition models aren't bad: thermals leave a bit to be desired, but overall build quality is good and they look great, too.

Personally, I don't have an issue with the FE cards, but the consensus among readers was to include them in this list, and I can certainly understand where you're all coming from. Nvidia charges a premium for reference design graphics cards and often makes them the only choice for early adopters. That said, you do have the option to wait and get a board partner's card at a cheaper price, which is what we recommended in our day-one GTX 1080 review.

If you're after a Titan then you'll be forced to buy a reference card, but hey, we also understand market dynamics, and here Nvidia is simply reserving the big guns for themselves, which works nicely in terms of brand marketing and profit margins.

But back to Founders Edition boards: it's not a huge concern for us, and charging a premium is somewhat understandable. Not letting partners compete with custom designs at launch, and delaying those designs, is not so nice. So ideally we'd like to see Nvidia do what they did with the GTX 1070 Ti release, where partner cards were available alongside the Founders Edition from day one.

Dribbling products out: Titan X, GTX 1080 Ti, Titan Xp

Speaking of the GTX 1070 Ti, we'd like Nvidia to stop drip-feeding us new GPUs. Sure, release a new generation over the course of a few months if you must, but after that stop it with the unnecessary cut-down models that share the same names as the fully fledged parts. Stop it with the cards we don't need, and stop rolling your most loyal customers in the process.

We've already talked about the dodgy cut-down models, so no need to go over that again. As for releasing models we don't really need late in a product cycle, such as the GTX 1070 Ti, it's not terrible but it can confuse people.

The big issue with releasing so many products within the same series is that Nvidia always ends up tripping over themselves. If you bought a Titan X in late 2016 you were probably feeling pretty good about yourself: 40% more CUDA cores than the flagship GeForce part, so at the time it was a real beast. Of course, you were also paying twice the price, making absolutely no sense in terms of cost per frame, but still it was the undisputed king of the hill.

Seven months later, though, Nvidia were like "I hope you enjoyed those bragging rights, because now we're offering essentially the same product for $500 less." Then a month after that they were like "Are you upset about the GTX 1080 Ti yet? No? Still in denial, huh? Well, how about a Titan Xp, the 'P' is for taking the piss edition. We're adding 7% more CUDA cores and going back to charging $1,200."

Oh, and if you're a Star Wars fan it might have paid to wait a further six months for the Titan Xp Collector's Edition. It's not easy being an Nvidia fan with deep pockets.

Calm down with GPU prices

This is a tricky one to tackle because Nvidia can and will only charge as much money as you're willing to pay for a graphics card. We also have to contend with cryptocurrency mining, and we've seen how this can blow up demand for GPUs to the point where AMD's and Nvidia's MSRPs mean nothing.

Back in 2010 Nvidia were charging $500 for their flagship part, the GeForce GTX 480. They did the same with the GTX 580, but later released the $700 GTX 590 and it was at that point that Nvidia probably started to realize that a certain group of gamers are willing to pay more for premium graphics cards. By the time the GTX 600 series launched in 2012 they were well aware of this and we got the GTX 690 for $1,000. It made no sense at that price but they still sold.

With confirmation that Nvidia could sell $1,000 graphics cards, the Titan range was born in 2013, and this helped them justify a $700 asking price for the flagship GeForce part. You could say the Titan Z was somewhat of an experiment to see just how much some people would pay for bragging rights, though that was also a compute-heavy product, so perhaps the price was justified.

Armed with that information, the Pascal Titan series climbed to $1,200 and now gamers everywhere are in fear of how much the next generation models are going to cost (Titan V is $2,999). Much needed competition from AMD will certainly help here, but even so the days of $500 flagship gaming GPUs are well and truly over.

Nvidia GameWorks

First let me just say that I don't think the GameWorks program is as bad as some would have you believe, but there have certainly been some underhanded tactics that need to stop.

For those unaware, GameWorks is an assortment of proprietary technologies that Nvidia provides to developers, allowing them to include cutting-edge effects such as realistic hair, destruction and shadows without having to create them from scratch. However, Nvidia often seems to go out of their way to make sure these effects are implemented in a way that makes them perform poorly on AMD GPUs, and sometimes they do so at the expense of their own GeForce customers.

We've seen evidence of some technologies being abused with the intention of giving Nvidia an advantage, for example the use of tessellation in Crysis 2 and The Witcher 3. This has caused a backlash from gamers, even those using Nvidia hardware, as these dirty tricks often hurt GeForce owners too. We've seen examples where Nvidia will take an unnecessarily large performance hit rather than optimise an effect, doing so to ensure the performance hit for AMD is even greater. Nvidia denies these claims, but we've seen some pretty hard evidence that this is indeed going on.

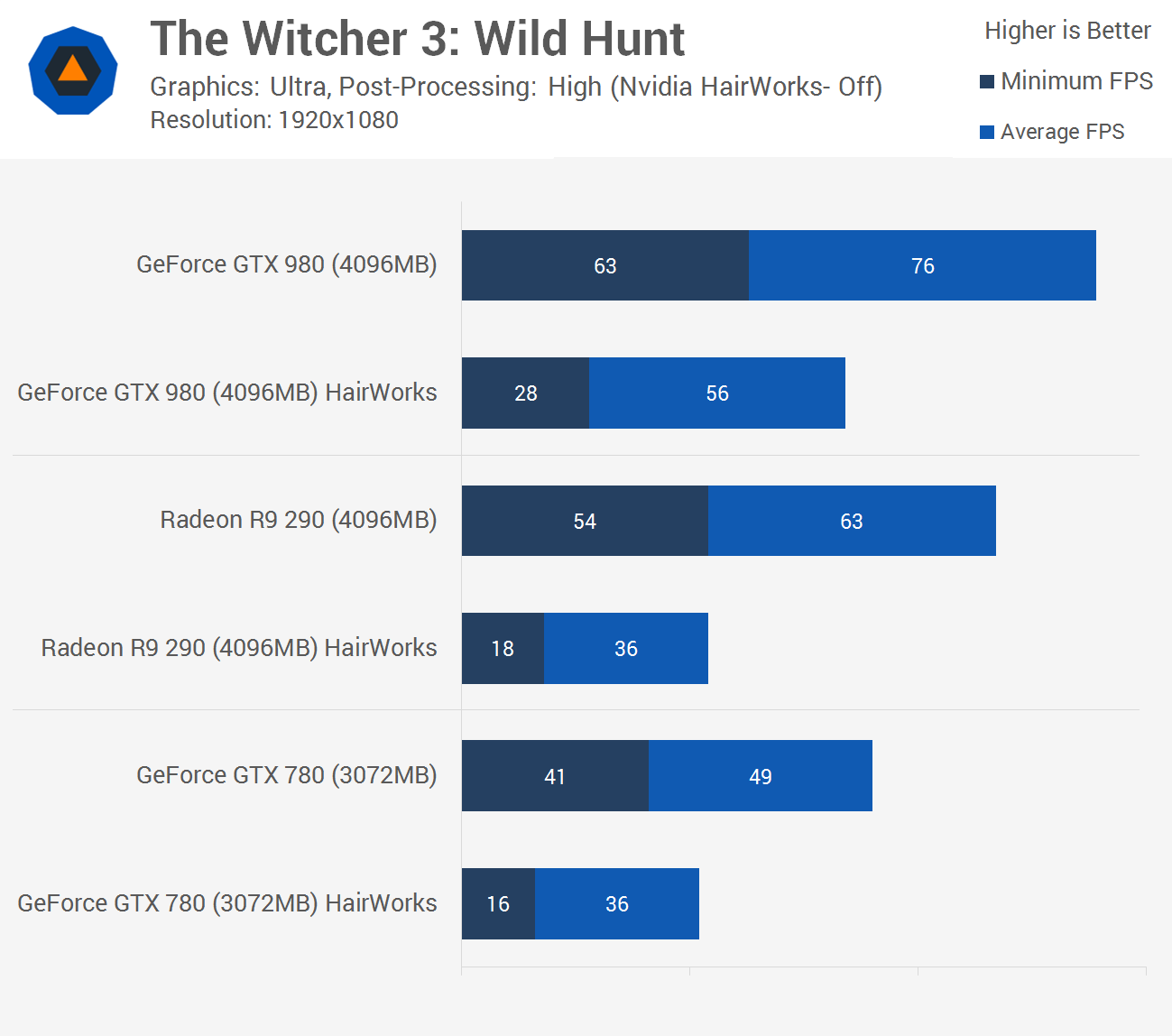

Upon release we noticed a massive performance hit on both AMD and Nvidia hardware when benchmarking The Witcher 3 with HairWorks enabled. I reported a 55% reduction in performance for the GTX 980, but a 67% drop for the Radeon R9 290. With HairWorks disabled the GTX 980 was 16% faster than the R9 290, a fairly significant win for Nvidia. But with HairWorks enabled the GeForce GPU was now 56% faster.
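The with-HairWorks gap follows from the other three numbers. Each card keeps (1 - drop) of its frame rate, so the GTX 980's lead gets scaled by the ratio of the two retained fractions. The quick check below uses only the rounded percentages quoted above, so it should land within a few points of the measured 56% rather than match it exactly. The function is a hypothetical helper written for illustration.

```python
def lead_after_effect(base_lead: float, drop_a: float, drop_b: float) -> float:
    """
    Card A leads card B by base_lead (0.16 = 16%) with the effect off.
    Enabling the effect removes drop_a and drop_b of each card's frame rate.
    Returns A's lead over B with the effect on.
    """
    return (1 + base_lead) * (1 - drop_a) / (1 - drop_b) - 1

# GTX 980 vs R9 290 in The Witcher 3, rounded figures from the text
lead = lead_after_effect(0.16, 0.55, 0.67)
print(f"GTX 980 lead with HairWorks: ~{lead:.0%}")
```

This gives a lead of about 58%. Because the input percentages are themselves rounded, that result is consistent with the reported 56%. It also shows how a bigger relative hit on the Radeon card amplifies an existing lead.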

There were a few issues with this, though. First, the GameWorks feature meant that GTX 980 owners went from a minimum of just over 60 fps at 1080p with HairWorks disabled to less than 30 fps with it enabled. This led us to recommend at the time that no one use HairWorks.

However, it was later discovered that Nvidia were abusing their tessellation performance advantage at the expense of their own customers. By default the tessellation level was set to x64, despite there being no real visual difference between x16 and x64, even when analysing side-by-side screenshots. In fact, x16 was barely better than x8, while x8 was only slightly better than x4, and only the drop down to x2 made the effect look quite poor.

Reducing the tessellation level from x64 to x16 more than doubled frame rates in scenes that made heavy use of HairWorks, while x8 boosted performance by a further 20%. In short, tessellation at x8 provided basically the same visuals, with very little performance impact compared to not using the technology at all.

You can't get away with blaming the developer here; it's well documented that Nvidia worked very closely with The Witcher 3 team, so it's either sabotage or incompetence. We've also seen much more recent examples of unjustifiably large performance hits in GameWorks titles. Thankfully we're all very aware of what seems to be going on, and as a result the Final Fantasy 15 issues were sorted out quite quickly after initial benchmarking was complete. The developer also seemed quite happy to take the blame on that one, so perhaps it was just an honest mistake.

GeForce Experience login

We didn't give AMD a hard time over driver support, and we're not going to give Nvidia a hard time either. Sometimes the display drivers aren't as good as they could be, but I feel for the most part both companies do a pretty good job. After all, ensuring that the current range as well as a number of previous generation GPUs all work in a massive range of games can't be an easy job, so I'm going to cut them a little slack.

What we're not fans of is GeForce Experience. The software can be very clunky at times and in many ways feels outdated. However, my biggest gripe is that Nvidia requires you to sign in to use the software, and in my opinion that's totally unnecessary.

This isn't new. Nvidia made this change years ago and we probably didn't push back hard enough at the time. I know some of you might not see this as a big deal, and for many of you it'll be rare that you have to manually log in. Even so, you'd think that forking out hundreds of dollars for a GeForce GPU would be enough to avoid having to hand over your details.

Maybe we're nit-picking on this one, and technically GeForce Experience is optional software, but we feel it would be ideal to get this perk with no strings attached.

Stop anti-consumer practices: GPP

If you're reading this article, you've probably heard of the GeForce Partner Program, or GPP. If not, here's a quick refresher: Nvidia tried to get all of their partners, like Asus, Gigabyte, MSI and so on, to sign on to the GeForce Partner Program, an agreement that would impose heavy restrictions on how they operated.

The bad parts of the document, which was leaked earlier this year by HardOCP, suggested that if a company didn't align their gaming brand exclusively with GeForce, they would no longer receive important development funds and other incentives.

This is another one of those anti-competitive and anti-consumer moves that Nvidia seems to enjoy making from time to time. Rather than simply producing compelling products and going about business fairly, Nvidia feels the need to shut out their competitors using underhanded tactics. GeForce graphics cards, particularly at the high end, are already the better buys and Nvidia holds far more market share than AMD, so it's just bizarre that Nvidia continually feels the need to bury competitors.

No need to post clearly garbage statements on your website about how these dodgy deals "benefit" gamers and are about "transparency". Just play fair, play nice, and ultimately do the right thing for consumers.

G-Sync monitors

What G-Sync brings to monitors is pretty good: adaptive sync support, mandatory low framerate compensation, monitor validation and so forth. You can buy a G-Sync monitor and know you're getting something quality. But the way Nvidia has implemented and locked down G-Sync is unfriendly to buyers.

G-Sync requires a dedicated hardware module. The module only works with Nvidia graphics cards. The module is also fairly costly, adding approximately $200 to the price of any given monitor. This means any Nvidia GPU owner wanting to buy a G-Sync monitor needs to spend an additional $200 compared to AMD GPU owners, who have access to the very similar FreeSync technology.

And there's no real reason why Nvidia GPUs can't support FreeSync, other than that Nvidia wants to lock their own customers out of competing tech. FreeSync is an open standard, in fact it's basically just VESA Adaptive Sync, so Nvidia is free to implement support whenever they like and give Nvidia GPU buyers access to cheaper adaptive sync monitors. But they don't, because they can force gamers into buying G-Sync monitors, while locking AMD owners out of G-Sync and making it harder to switch GPU ecosystems.

Closing remarks

While this list may come off as almost entirely negative, that's not to say Nvidia hasn't released some truly amazing products with their GeForce 10 series. We love the GTX 1080 Ti, it's an incredible flagship GPU. Scratch that, we really like the bulk of the Pascal lineup (and have repeated recommendations to prove it), which makes products such as the GT 1030 DDR4 all the more disappointing.

This is merely a wish list and we don't expect Nvidia to address any of these. Some may in fact affect their bottom line, which makes them even harder to implement, but of the things Nvidia could address to become more consumer friendly, it would be great to see a few tackled over the next year.

TechSpot Series: Needs to Fix

After attending Computex 2018, the very PC-centric trade show, we found ourselves discussing internally a few areas where Intel, AMD and Nvidia could improve to become more consumer friendly. At the end of that discussion we realized this would make for a good column, so we're doing one for each company.

- Part 1: Things Intel Needs to Fix

- Part 2: Things AMD Needs to Fix

- Part 3: Things Nvidia Needs to Fix