> Also, higher prices for consumers, when they are higher, are not due to the (huge) costs of moving to a new process node.
>
> Will the money saved by moving to the next process node be more than the cost to move?

That is usually the main consideration when you aren't building the largest, most complex chips.

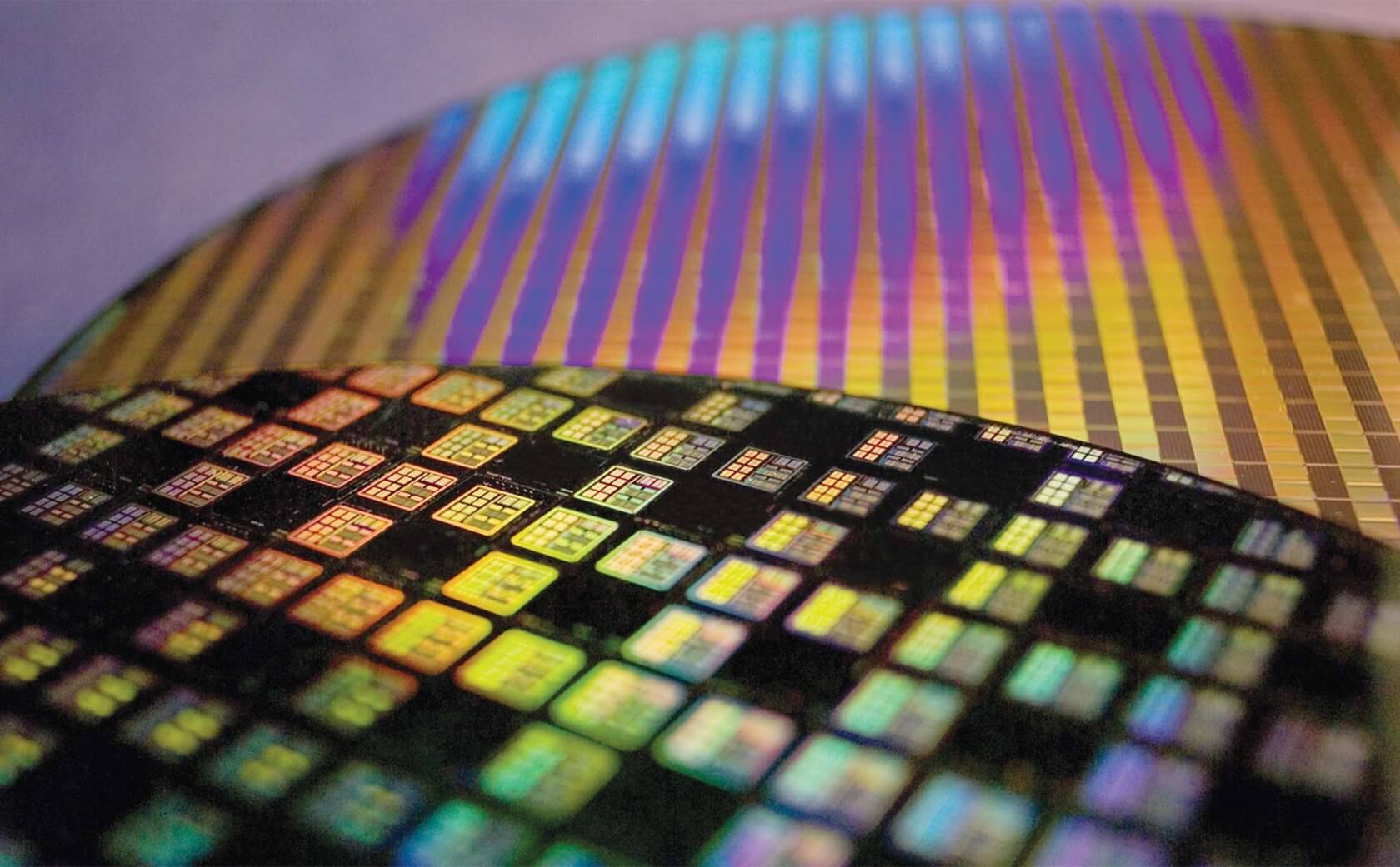

Manufacturing process matters a lot less if you are building sound chipsets, network controllers, or I/O processors. These are simpler designs where extreme transistor density for maximum performance is not the primary goal, and in most of those cases cost outweighs cutting-edge performance. Margins in these sectors are very tight: small chips are built in big volumes per wafer and sell for a couple of bucks each. Going to a brand-new, expensive, smaller node gains you nothing significant for a higher manufacturing cost, so you lose money in an extremely competitive market with dozens of other players. You have to wait until the cost per wafer drops enough for the math to work, as you said.
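The break-even question can be sketched as simple arithmetic: cost per good die is the wafer cost spread over the working dies on that wafer, and moving only pays if the per-die saving times the product's lifetime volume exceeds the one-time cost to move. The sketch below is a minimal illustration under assumptions; the `Node` class, the function names, and every number in it (wafer prices, die counts, yields, volume, migration cost) are hypothetical, chosen only to mimic a small, cheap chip.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    """A process node as seen by one specific chip design (all values hypothetical)."""
    wafer_cost: float    # USD per processed wafer
    dies_per_wafer: int  # gross dies per wafer for this design on this node
    yield_rate: float    # fraction of dies that work, 0 < yield_rate <= 1

    def cost_per_good_die(self) -> float:
        # Only working dies can be sold, so spread the wafer cost over them.
        return self.wafer_cost / (self.dies_per_wafer * self.yield_rate)


def savings_per_unit(old: Node, new: Node) -> float:
    # Positive when the new node makes each sellable chip cheaper.
    return old.cost_per_good_die() - new.cost_per_good_die()


def migration_pays_off(old: Node, new: Node, units: int, migration_cost: float) -> bool:
    # Total saving over the product's lifetime volume vs. the one-time cost to move.
    return savings_per_unit(old, new) * units > migration_cost


def break_even_units(old: Node, new: Node, migration_cost: float) -> float:
    # Volume needed before the move pays for itself; infinite if there is no per-unit saving.
    s = savings_per_unit(old, new)
    return migration_cost / s if s > 0 else math.inf


# Hypothetical small chip: the shrink fits more dies per wafer on the new node.
old_node = Node(wafer_cost=3_000, dies_per_wafer=2_000, yield_rate=0.95)
new_node_early = Node(wafer_cost=9_000, dies_per_wafer=3_600, yield_rate=0.80)   # expensive, immature
new_node_mature = Node(wafer_cost=4_000, dies_per_wafer=3_600, yield_rate=0.95)  # cheaper, better yields

volume = 50_000_000    # lifetime units shipped (hypothetical)
migration_cost = 15e6  # one-time design/mask/validation cost in USD (hypothetical)

print(migration_pays_off(old_node, new_node_early, volume, migration_cost))   # False
print(migration_pays_off(old_node, new_node_mature, volume, migration_cost))  # True
print(round(break_even_units(old_node, new_node_mature, migration_cost)))     # 36642857
```

In this made-up scenario the immature node costs more per sellable chip despite the density gain, so the move never pays off; once wafer prices and yields improve, the same design recovers its migration cost after roughly 37 million units.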

But when you are talking about higher-end CPU and GPU design (parts where performance, or performance per watt in mobile, is critical), the gains are too irresistible to ignore despite the inevitably much higher cost of taping out a new chip on that node. In general, you have to move to a newer node once it is reasonably mature, or you fall behind your competitors.

If you're a big player with only a handful of direct competitors, you try especially hard to move to the new node as soon as you can make it work. Apple, Qualcomm, Samsung, Intel, AMD, Nvidia, etc. are usually chasing it to deliver the best products, which they know they can sell at a premium price higher than the previous generation's, justified by significant gains. This drives the industry forward.

The main reason this high-performance segment of the industry wouldn't chase a newer node that hard is that development of the node (or of the product intended for it) isn't shaping up well. Maybe the gain for the application is small, the node is delayed, or yields are poor. Sometimes the next, better node is close anyway at that stage and the cycle is short. The TSMC 20nm node you mentioned was a classic case in point, with Nvidia calling it 'worthless' and AMD deciding after evaluation that it wasn't well suited to high-end chips. Another, rarer case is that you don't need to transition quickly because your architecture is already far better than the competition's, so you reap big margins for as long as you can (cough, Nvidia Pascal/Turing, Intel Skylake).

For the big players, where ultimate, market-leading performance is king, you do whatever it takes to reach the next level. You just pass the cost on to an eager consumer hungry for the next perceptible step.