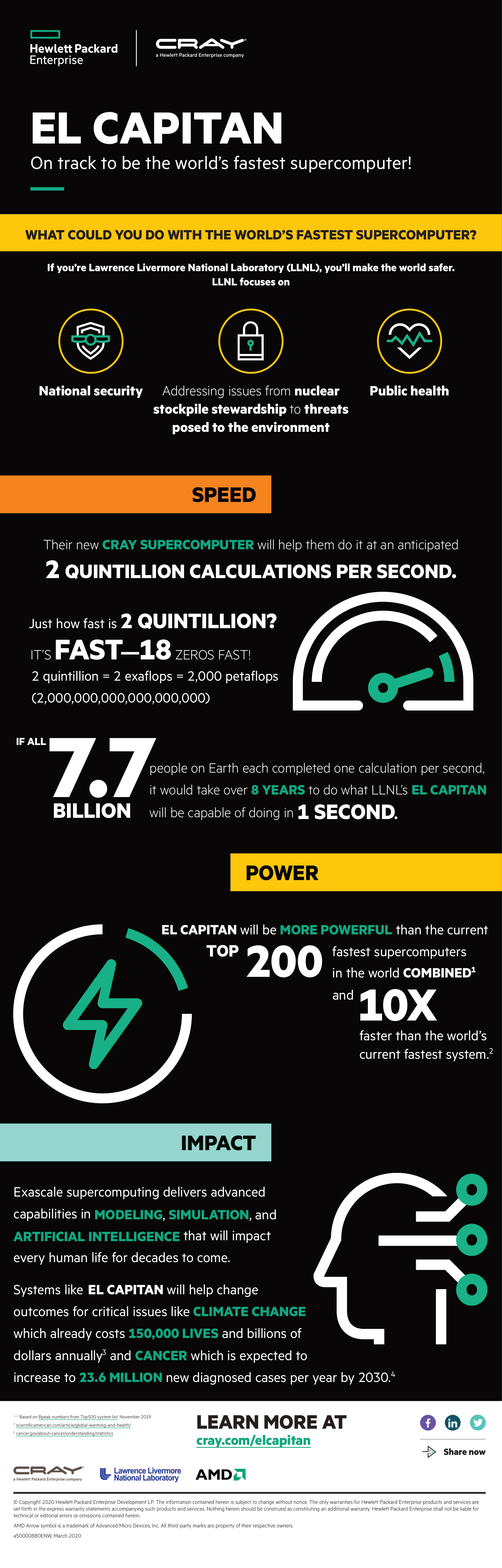

Something to look forward to: The 'El Capitan' supercomputer at Lawrence Livermore National Laboratory (LLNL) will be built with both AMD CPUs and GPUs. It is expected to deliver more than 2 exaFLOPS of performance and to come online in early 2023. El Capitan will be a huge leap forward in supercomputing performance, with more computing power than the current 200 fastest systems combined. That also makes it 10 times faster than the current fastest system.
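As a rough sanity check on those headline numbers, the short Python sketch below works backwards from the two figures quoted above (2 exaFLOPS and a 10x speedup) to the performance they imply for today's fastest system. The variable names are purely illustrative, and the inputs are the article's round numbers rather than measured benchmark results, so the output is only a ballpark.

```python
# Back-of-the-envelope arithmetic using only the round figures quoted in the article.
# These are not benchmark results; "more than 2 exaFLOPS" is treated as a lower bound.

EXA = 10**18   # 1 exaFLOPS  = 10^18 floating-point operations per second
PETA = 10**15  # 1 petaFLOPS = 10^15 floating-point operations per second

el_capitan_flops = 2 * EXA      # expected El Capitan performance (lower bound)
speedup_over_fastest = 10       # "10 times faster than the current fastest system"

# Performance the article's numbers imply for the current fastest system.
implied_fastest_flops = el_capitan_flops // speedup_over_fastest
implied_fastest_petaflops = implied_fastest_flops / PETA

print(f"Implied current fastest system: about {implied_fastest_petaflops:.0f} petaFLOPS")
```

In other words, the two claims are consistent with a current leader in the neighborhood of 200 petaFLOPS.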

The new system will be maintained by the US Department of Energy's (DOE) National Nuclear Security Administration (NNSA). Its main purpose will be to use simulations and artificial intelligence to model how America's existing nuclear weapons stockpile is aging.

In addition to national security workloads, El Capitan will target several other key areas. These include a partnership with the National Cancer Institute and additional DOE labs to accelerate research into cancer drugs and into how certain proteins mutate. El Capitan will also be used in research to help fight climate change.

This system is a big win for both AMD and Hewlett Packard Enterprise (HPE), which designed the system. Supercomputers used to be dominated by Intel CPUs and Nvidia GPUs, but AMD's improvements in both sectors are starting to eat away at that dominance.

El Capitan will use 4th generation EPYC CPUs, codenamed "Genoa," based on the Zen 4 architecture. On the GPU side, it will use Radeon Instinct cards with the 3rd generation Infinity architecture.

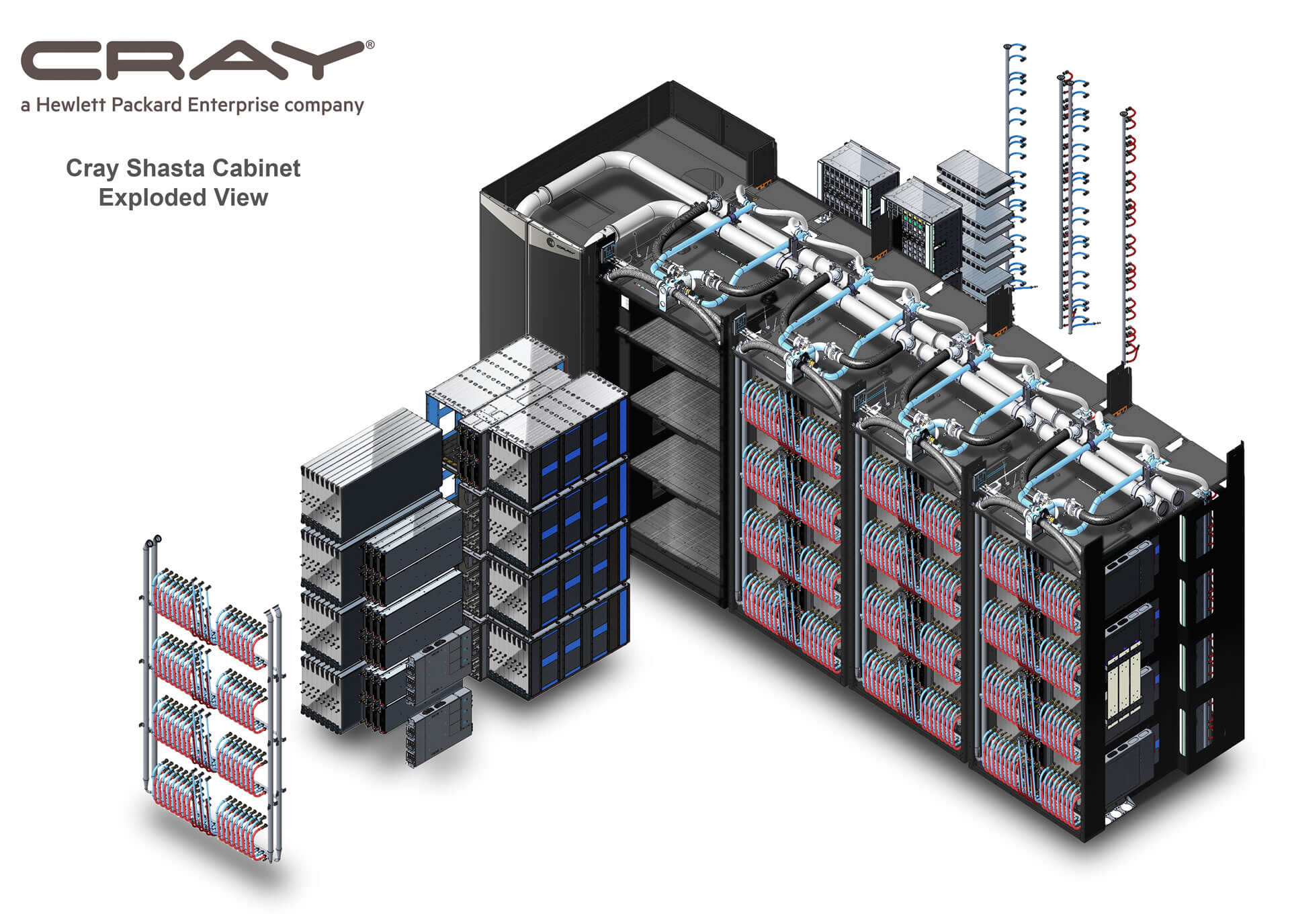

The compute hardware will be implemented using Cray's Shasta system and Slingshot interconnect.

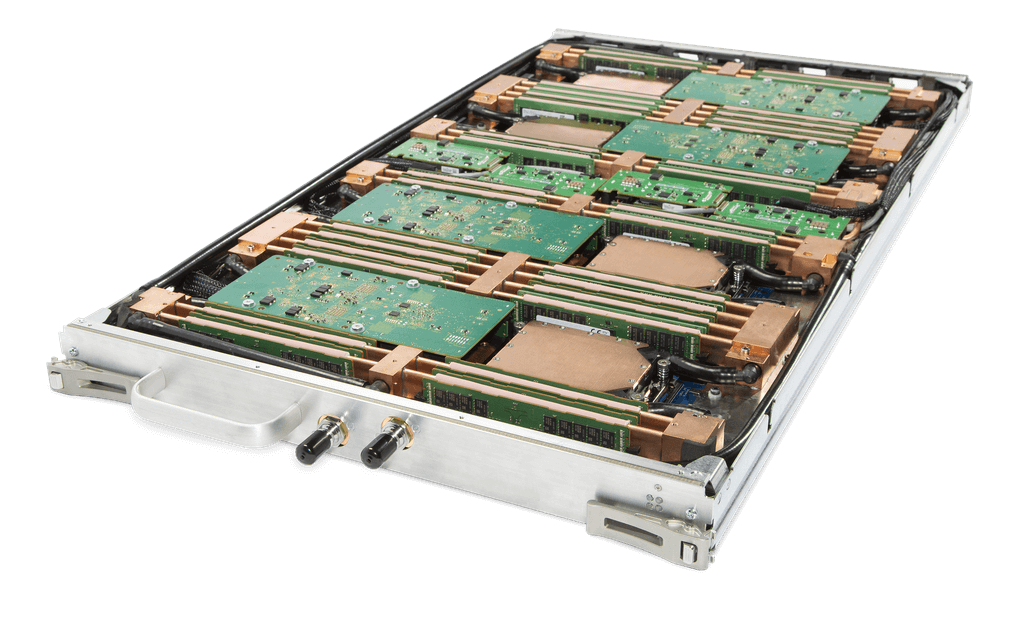

The design features a 4:1 GPU-to-CPU ratio, with local flash storage for improved access speed. To help manage the massive heat generated by such a system, each blade is individually water-cooled. In addition to El Capitan, HPE and DOE are also working on two other exascale systems, Aurora and Frontier.

https://www.techspot.com/news/84266-amd-cpus-gpus-power-future-world-fastest-supercomputer.html