I can't say that it's disappointing considering the context. The RT performance is actually better than I expected it to be (I don't care but many do). Sure, it's behind nVidia but that's to be expected since nVidia has an extra generation (2+ years) of RT development. As a result, hoping that they're at nVidia's level is just plain unrealistic.

Anyone who is disappointed that AMD isn't on par with nVidia in RT is expecting AMD to somehow advance in RT faster than nVidia. With everyone and their mother throwing money at nVidia, being disappointed that AMD hasn't caught nVidia is basically being disappointed that a miracle hasn't occurred. That's just plain stupid.

What the focus

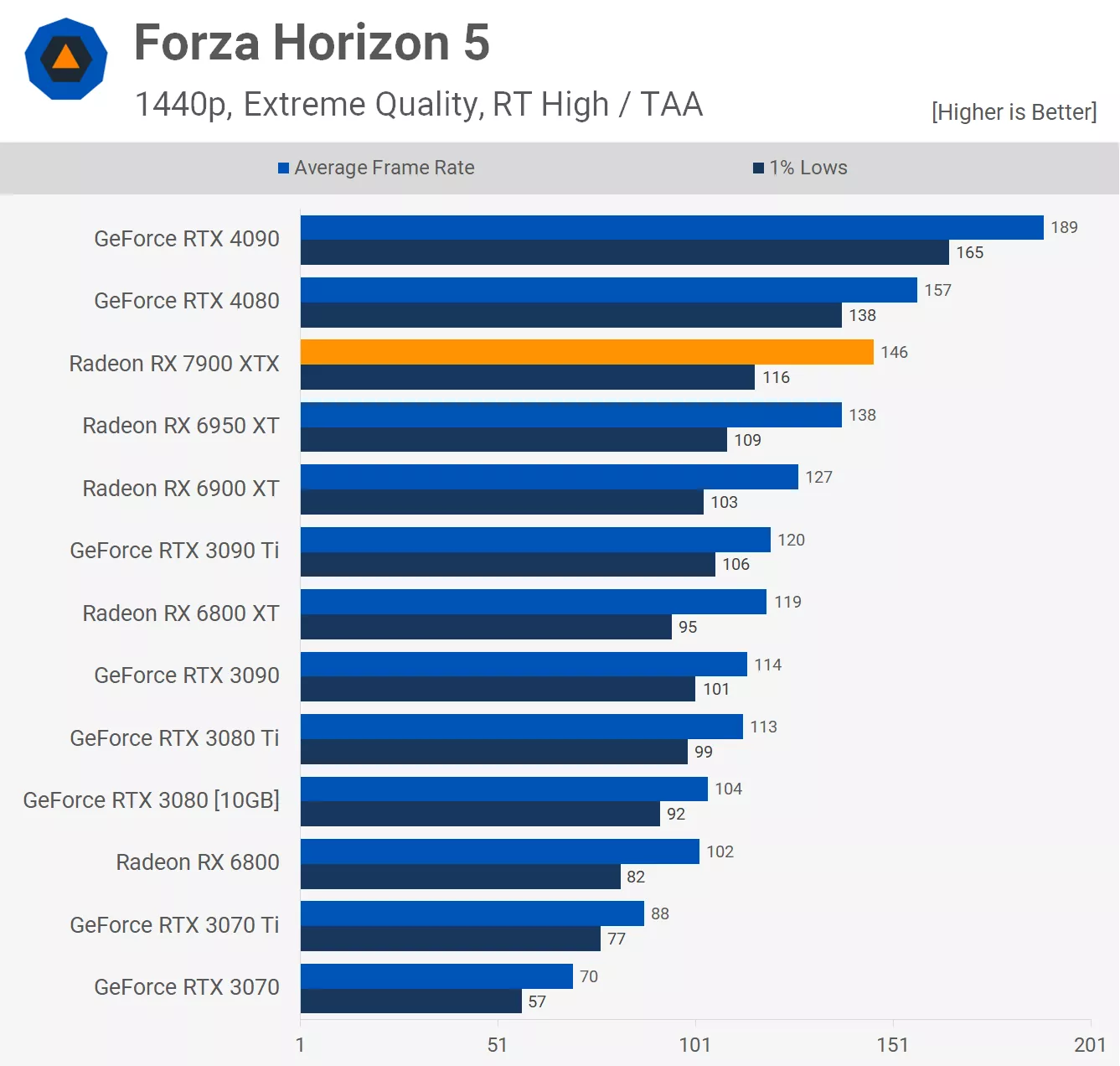

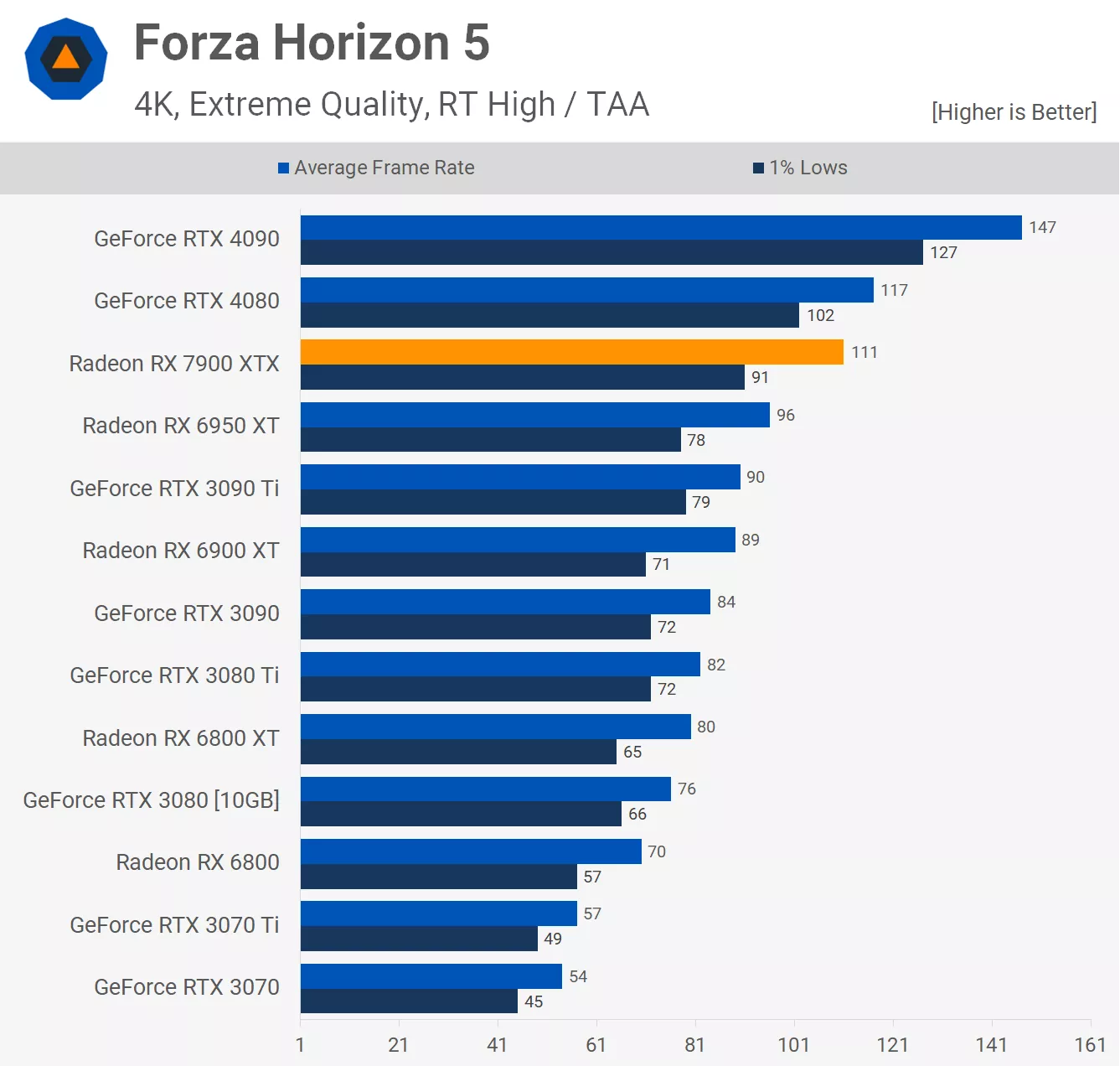

should be on is the fact that they've made a good step from the RX 6000-series to the point that RT is actually usable in several games without needing FSR enabled. That's a huge thing because it's the difference between "good enough" and "not good enough". Let's also not move the goal posts here because the RT performance was "good enough" on the RTX 3000-series to the point that it was a big selling feature. Now the RX 7900 XTX is roughly on par with the RTX 3090 Ti in RT performance. There were enough people that bought the RTX 3080 specifically for RT and the RX 7900 XT is better at RT than that card which means that it's usable. It would've been disappointing if there wasn't a significant improvement in RT performance but that's not the case.

Then of course, there's the elephant in the room... When the RX 7900 XTX is clearly hamstrung in Forza Horizon, Steve rightly points it out:

"We think AMD's dealing with a driver related issue for RDNA 3 in Forza Horizon 5 because performance here wasn't good. There were no stability issues or bugs seen when testing, performance was just much lower than you'd expect. At 1440p, for example, the 7900 XTX was a mere 6% faster than the 6950 XT. And that sucks."

Yes, it does suck, but it's also not a permanent thing. However, Steve makes sure that he bashes the performance numbers at higher resolutions despite being fully aware that there's a driver issue making a good showing impossible.

Then, where the RX 7900 XTX beats both the RTX 4080 and the RTX 4090, Steve dismisses it as an outlier, which could be called fair:

"These results are certainly outliers in our testing, but Modern Warfare 2 and Warzone 2 are very popular games, so this is great news for AMD."

The question is, why wasn't Steve equally dismissive of the Forza Horizon results when he knew that something wasn't right? Instead, he continued testing the game at higher resolutions, despite the fact that a bad result was already a foregone conclusion. If he had also dismissed this score as an outlier the way he did the others, I would have nothing to say here, but that did not happen:

"This margin improved a lot at 4K, but even so the 7900 XTX was just 16% faster than the 6950 XT, a far cry from the 50% minimum AMD suggested. This meant the new Radeon GPU was 5% slower than the RTX 4080, so a disappointing result all round."

Instead, he calls it disappointing that the RX 7900 XTX came within 5% of a card that costs 20% more while hamstrung with a driver issue (that is by no means permanent). Just imagine what the result will be when the bug is ironed out. Sure, it's disappointing for now, but it's clear that the RX 7900 XTX will be the faster card in this game.

NOTE: I have since noticed that the Forza Horizon test had Ray-Tracing TURNED ON:

"This margin improved a lot at 4K, but even so the 7900 XTX was just 16% faster than the 6950 XT, a far cry from the 50% minimum AMD suggested. This meant the new Radeon GPU was 5% slower than the RTX 4080, so a disappointing result all round."

So, according to Steve's "logic", if the RTX 4080 is a paltry 5% faster at 4K with RAY-TRACING SET TO HIGH than the RX 7900 XTX, a card that is US$200 cheaper, the Radeon should be considered "disappointing". Are you fracking kidding me? The RX 7900 XTX comes to within 5% of the RTX 4080 at 4K with ray-tracing set to high? That's fracking INCREDIBLE, not disappointing! However, people who don't know better won't notice this insanity and that's what you're counting on, eh?

ANY Radeon card that costs US$200 less than the RTX 4080 and is only 5% behind it in a game is ALREADY A WIN for the Radeon. The fact that RT was on and set to "HIGH" makes it a HUGE WIN for the Radeon. I don't know how this could be considered disappointing in the least.
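To put a rough number on that, here's a quick back-of-the-envelope sketch. It assumes launch MSRPs of US$999 for the RX 7900 XTX and US$1,199 for the RTX 4080 (my assumption, though it matches the US$200 gap and the "20% more" figure above) and takes the "5% slower" result from the review at face value. The function and constant names are made up for illustration.

```python
# Assumed launch MSRPs in US$ (not stated in the review quotes above).
PRICE_7900_XTX = 999
PRICE_RTX_4080 = 1199


def perf_per_dollar_ratio(rel_perf: float, price: float, ref_price: float) -> float:
    """Performance per dollar relative to a reference card.

    rel_perf is the card's frame rate as a fraction of the reference
    card's frame rate (0.95 means 5% slower than the reference).
    """
    return (rel_perf / price) / (1.0 / ref_price)


# 7900 XTX at 95% of the RTX 4080's frame rate (Forza Horizon 5, 4K).
ratio = perf_per_dollar_ratio(0.95, PRICE_7900_XTX, PRICE_RTX_4080)
print(f"7900 XTX perf per dollar vs RTX 4080: {ratio:.2f}x")
```

Under those assumptions the Radeon comes out at roughly 1.14x the performance per dollar of the RTX 4080 in this one test, even with the suspected driver issue in play.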

Then there's this little pot-shot that I still don't understand:

"The 4K data is much the same, as in the 7900 XTX and RTX 4080 are on par, though this time that meant the 7900 XTX was just 14% faster than the 3090 Ti and 30% faster than the 6950 XT."

Yeah, but it also means that a card that costs $200 MORE is also just 14% faster than the 3090 Ti and 30% faster than the RX 6950 XT. Steve's wording is clearly misleading here because he's framing this as a negative for the RX 7900 XTX when it's a much bigger negative for the RTX 4080 because it costs an extra $200.

Let's also remember that Techspot originally gave the RTX 4080 a score of 90/100. Truthfully, this is simply a case of the cards all being terrible values, and while my review of the RX 7900 XTX wouldn't be glowing either, it would at least be comparable to my review of the RTX 4080. That wasn't the case here at Techspot, however. Just look at the sub-headings from the two articles:

AMD Radeon RX 7900 XTX Review

RDNA 3 Flagship is Fast

I've never seen a more generic sub-heading in my life. I don't even know why you bothered. It's clear that you didn't consider it to be worth the effort.

Now the RTX 4080:

Nvidia GeForce RTX 4080 Review

Fast, Expensive & 4K Gaming Capable All the Way

Huh, look at that. You actually put effort into this one.

So, I guess then that the RX 7900 XTX is not "Expensive" or "4K Gaming Capable All the Way", eh?

Then of course, there's also the fact that you completely ignored the 8GB VRAM difference between the RX 7900 XTX and RTX 4080. I guess that when you're paying through the nose, longevity isn't important, eh?

This is just inexcusable.

When you add up all of these little (and some aren't so little) problems with this article and consider that they make the RTX 4080 article look like an nVidia love-in, it's pretty clear which card Techspot is trying to promote, and it's not the RX 7900 XTX. I have never wanted to say something like this, but I can't deny it any longer.