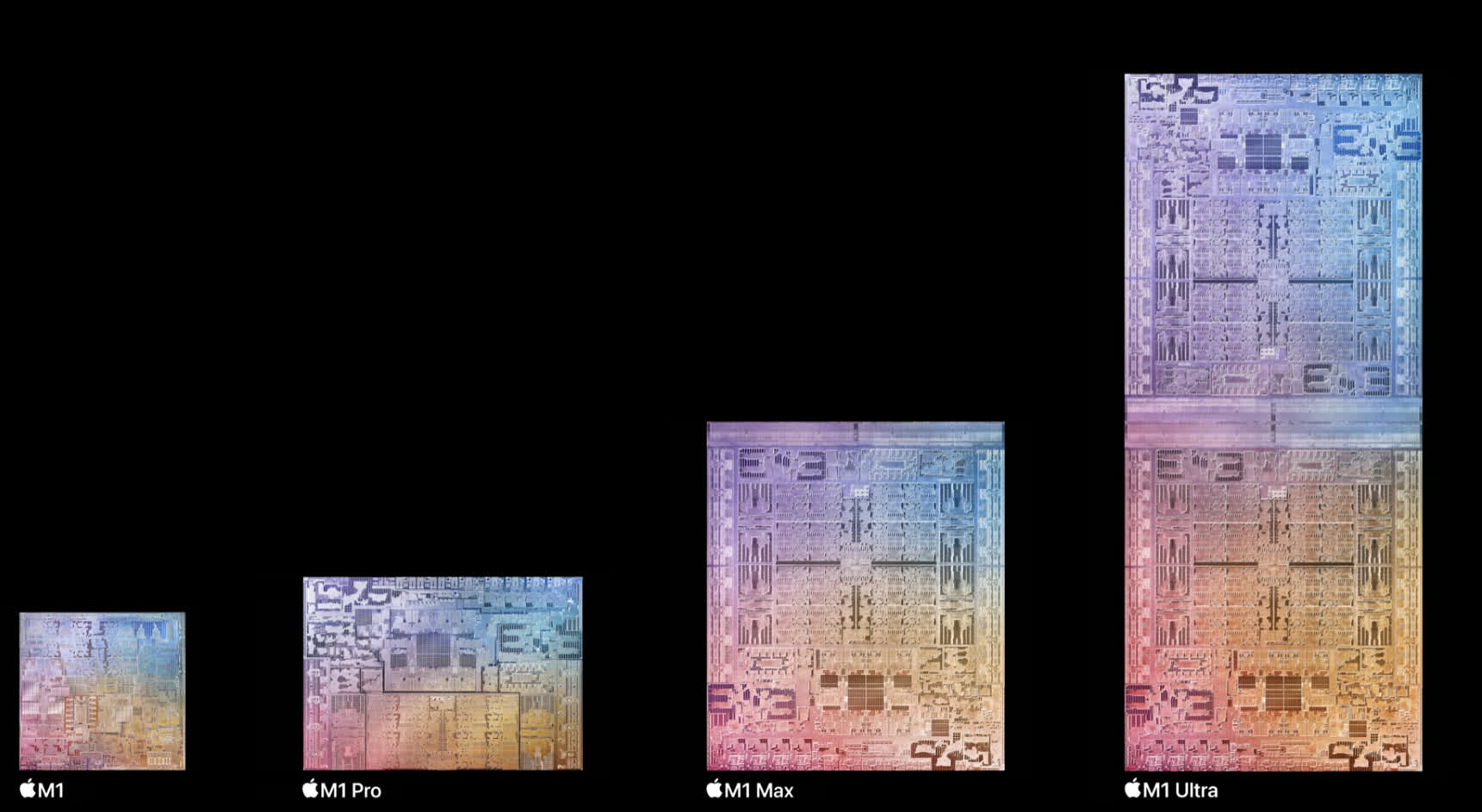

The big picture: Apple is pushing boundaries with its 114-billion-transistor behemoth, the M1 Ultra. It uses state-of-the-art interconnect technology to join two discrete chips into a single SoC. Fortunately, developers won't have to jump through hoops to tap the Ultra's full potential, as it behaves as a single unit at the system level.

Apple recently announced the M1 Ultra, its new flagship SoC, which will power the all-new Mac Studio, a compact yet high-performance desktop. Apple claims the M1 Ultra-powered Mac Studio delivers CPU performance up to 3.8 times faster than the 27-inch iMac with a 10-core processor.

"[The M1 Ultra is a] game-changer for Apple silicon that once again will shock the PC industry," said Johny Srouji, Apple's senior vice president of hardware technologies.

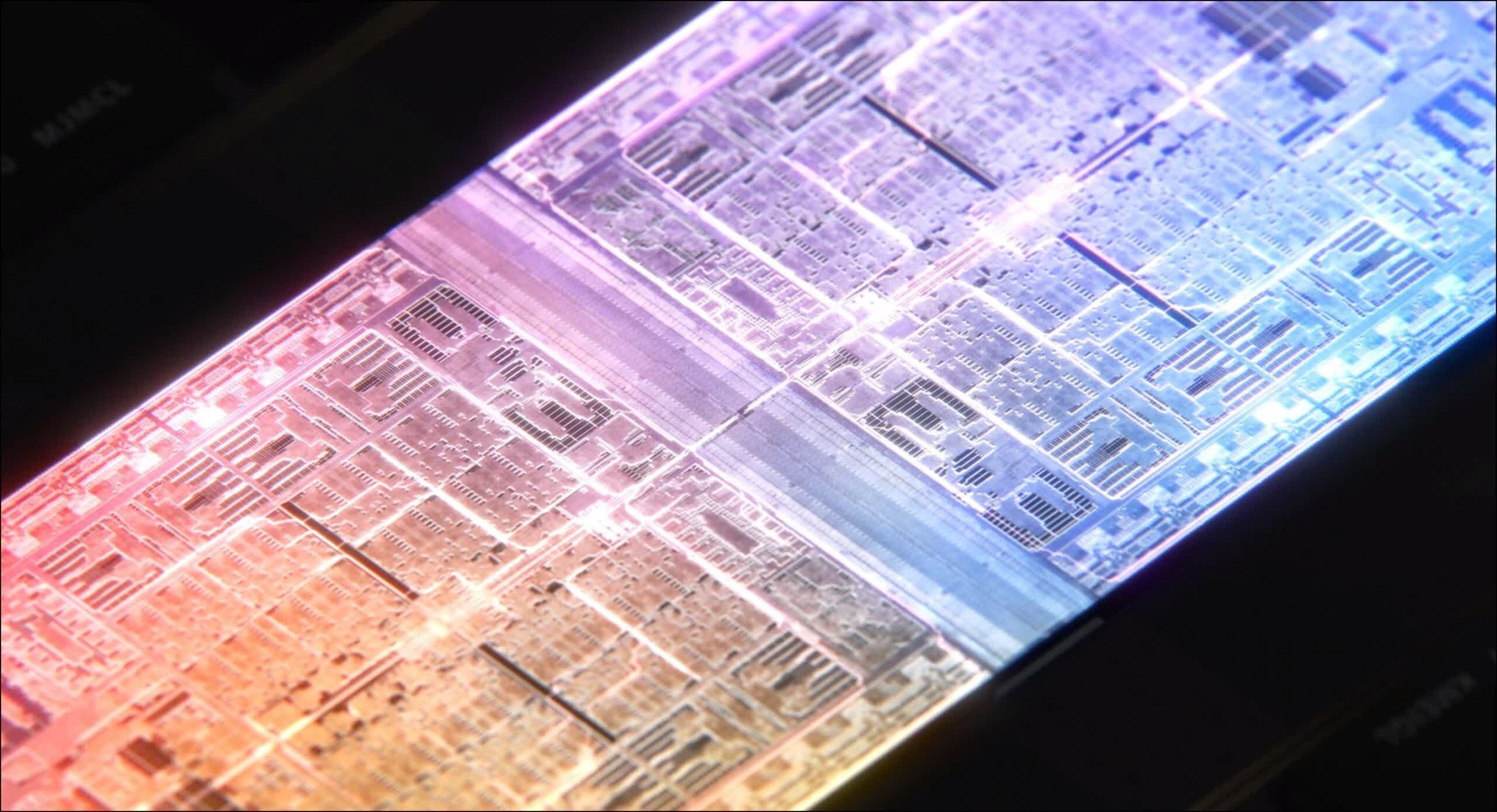

The M1 Ultra is undoubtedly a powerhouse. It combines two M1 Max chips using what Apple calls UltraFusion, an inter-processor interconnect that offers 2.5 terabytes per second of low-latency bandwidth between the two dies.

According to Apple, UltraFusion uses a silicon interposer with twice the connection density and four times the bandwidth of competing interposer technologies. Because the interposer sits beneath both dies, and each M1 Max has a die area of 432 mm², the UltraFusion interposer itself has to be larger than 864 mm². That puts it in the same league as the interposers used in AMD's and Nvidia's enterprise GPUs with HBM (High Bandwidth Memory).
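The interposer-size estimate above is simple arithmetic: a lower bound equal to the combined footprint of the two dies. The short Python sketch below makes that explicit. It assumes, as the estimate does, that both M1 Max dies sit entirely on the interposer; real spacing and edge margins would only push the figure higher. The function name is purely illustrative.

```python
# Lower bound on the UltraFusion interposer area, using the article's figures.
# Assumption: both M1 Max dies sit fully on top of the silicon interposer,
# so the interposer is at least as large as their combined footprint.
M1_MAX_DIE_AREA_MM2 = 432  # per-die area quoted in the article
DIES_PER_M1_ULTRA = 2      # M1 Ultra = two M1 Max dies


def min_interposer_area_mm2(die_area_mm2: float, dies: int) -> float:
    """Return the combined die footprint, a floor for the interposer area."""
    return die_area_mm2 * dies


if __name__ == "__main__":
    area = min_interposer_area_mm2(M1_MAX_DIE_AREA_MM2, DIES_PER_M1_ULTRA)
    # prints "Interposer area must be at least 864 mm^2"
    print(f"Interposer area must be at least {area:.0f} mm^2")
```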

Another advantage of UltraFusion is that developers won't need to rewrite their code, since at the system level the Mac sees the dual-chip SoC as a single processor.

Built on TSMC's 5-nanometer process, the M1 Ultra has 114 billion transistors, roughly seven times as many as the original M1. Thanks to its dual-chip design, it supports up to 128GB of unified memory with 800GB/s of memory bandwidth. It includes 16 performance cores with 48MB of L2 cache and four efficiency cores with 4MB of L2 cache, while the GPU has up to 64 cores. It also sports a 32-core Neural Engine that can execute up to 22 trillion operations per second to accelerate machine-learning tasks.
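Most of the M1 Ultra's headline numbers follow from doubling a single M1 Max. The Python sketch below illustrates that relationship. The M1 Max figures (57 billion transistors, 8 performance and 2 efficiency cores, 32 GPU cores, a 16-core Neural Engine, 64GB and 400GB/s of memory) and the original M1's 16 billion transistors come from Apple's published specifications, not from this article, and the `ChipSpec` and `fuse` names are hypothetical. Scaling every resource linearly is a simplification meant only to show where the Ultra's figures come from.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ChipSpec:
    """Hypothetical container for a few headline SoC specifications."""
    transistors_billion: int
    performance_cores: int
    efficiency_cores: int
    gpu_cores: int
    neural_engine_cores: int
    max_memory_gb: int
    memory_bandwidth_gb_s: int


# Assumption: top-configuration M1 Max figures from Apple's published specs.
M1_MAX = ChipSpec(
    transistors_billion=57,
    performance_cores=8,
    efficiency_cores=2,
    gpu_cores=32,
    neural_engine_cores=16,
    max_memory_gb=64,
    memory_bandwidth_gb_s=400,
)

# Assumption: transistor count of the original M1, from Apple's published specs.
M1_TRANSISTORS_BILLION = 16


def fuse(chip: ChipSpec, count: int = 2) -> ChipSpec:
    """Scale every listed resource linearly by the number of fused dies."""
    return ChipSpec(**{name: value * count for name, value in asdict(chip).items()})


if __name__ == "__main__":
    m1_ultra = fuse(M1_MAX)
    print(m1_ultra)
    ratio = m1_ultra.transistors_billion / M1_TRANSISTORS_BILLION
    print(f"{ratio:.1f}x the transistors of the original M1")  # 7.1x
```

Doubling the M1 Max reproduces the article's 114 billion transistors, 16 performance and four efficiency cores, 64 GPU cores, 32 Neural Engine cores, 128GB of memory and 800GB/s of bandwidth, and 114 / 16 ≈ 7.1 matches the "roughly seven times" comparison with the original M1.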

Digitimes reports that Apple's M1 Ultra SoCs use TSMC's CoWoS-S (chip-on-wafer-on-substrate with silicon interposer) 2.5D interposer-based packaging process. Nvidia, AMD, and Fujitsu have used similar technologies to build high-performance processors for datacenters and HPC (high-performance computing).

Taiwanese chipmaker TSMC has a newer alternative to CoWoS-S in its InFO_LSI (Integrated Fan-Out with Local Silicon Interconnect) technology for ultra-high-bandwidth chiplet integration. It uses localized silicon interconnects instead of large, expensive interposers, similar to Intel's EMIB (Embedded Multi-die Interconnect Bridge).

Apple is believed to have chosen CoWoS-S over InFO_LSI because the latter was not ready in time for the M1 Ultra. Apple may therefore have played it safe, opting for a proven but more expensive solution over a cheaper, less mature technology.

The Mac Studio will be available on March 18, with the M1 Ultra configuration starting at $3,999, which includes 64GB of unified memory and a 1TB SSD.

https://www.techspot.com/news/93755-exploring-apple-m1-ultrafusion-chip-interconnect.html