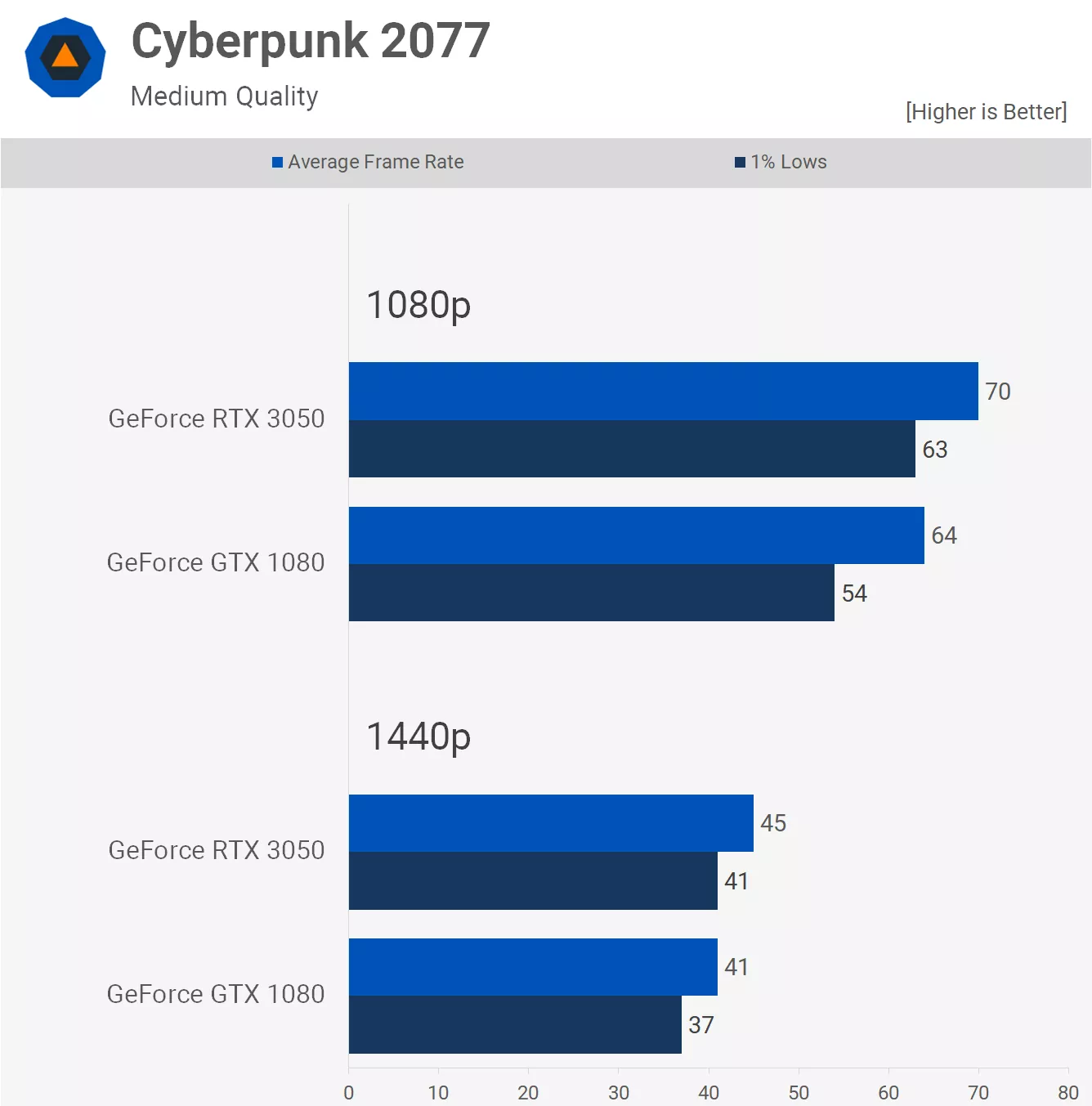

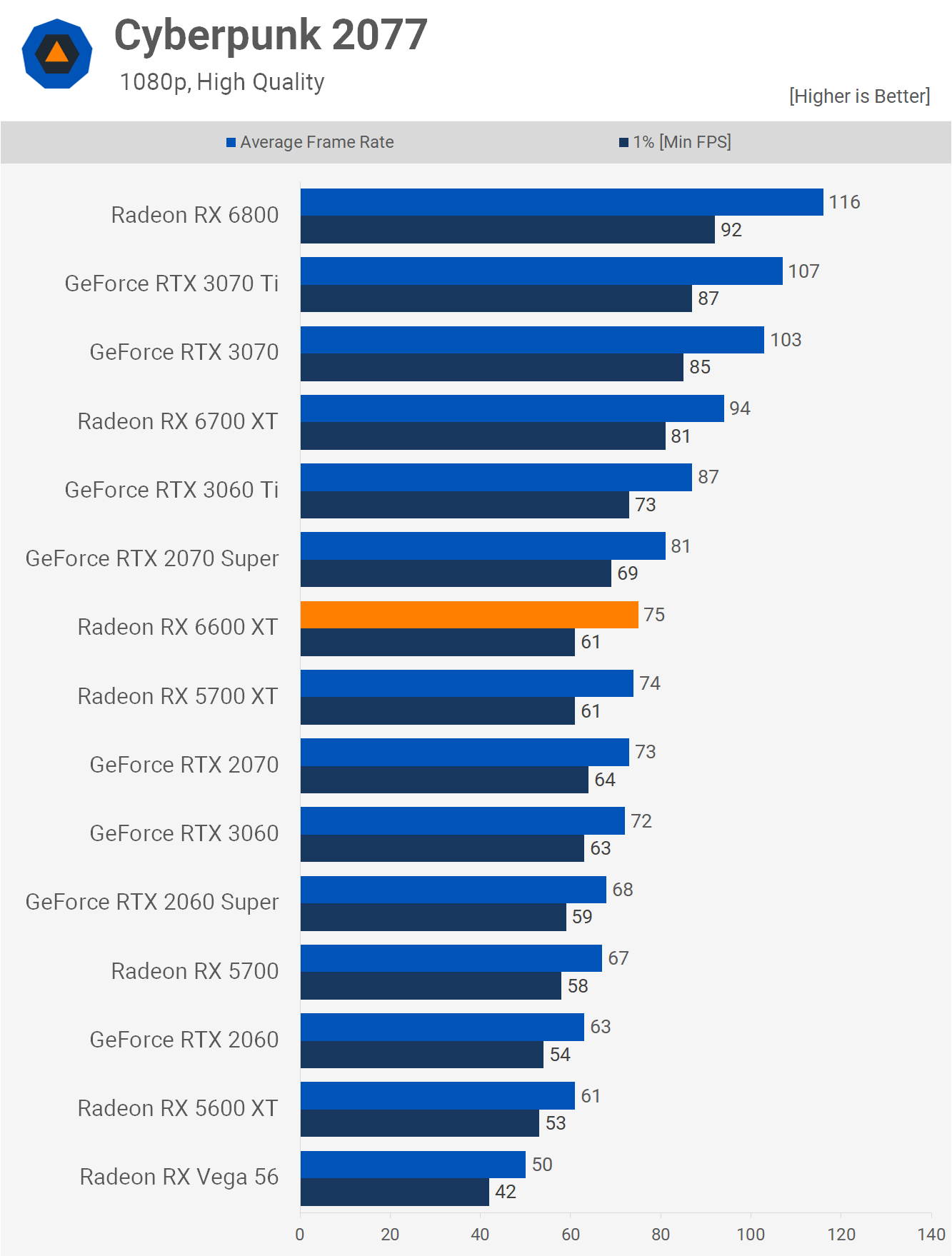

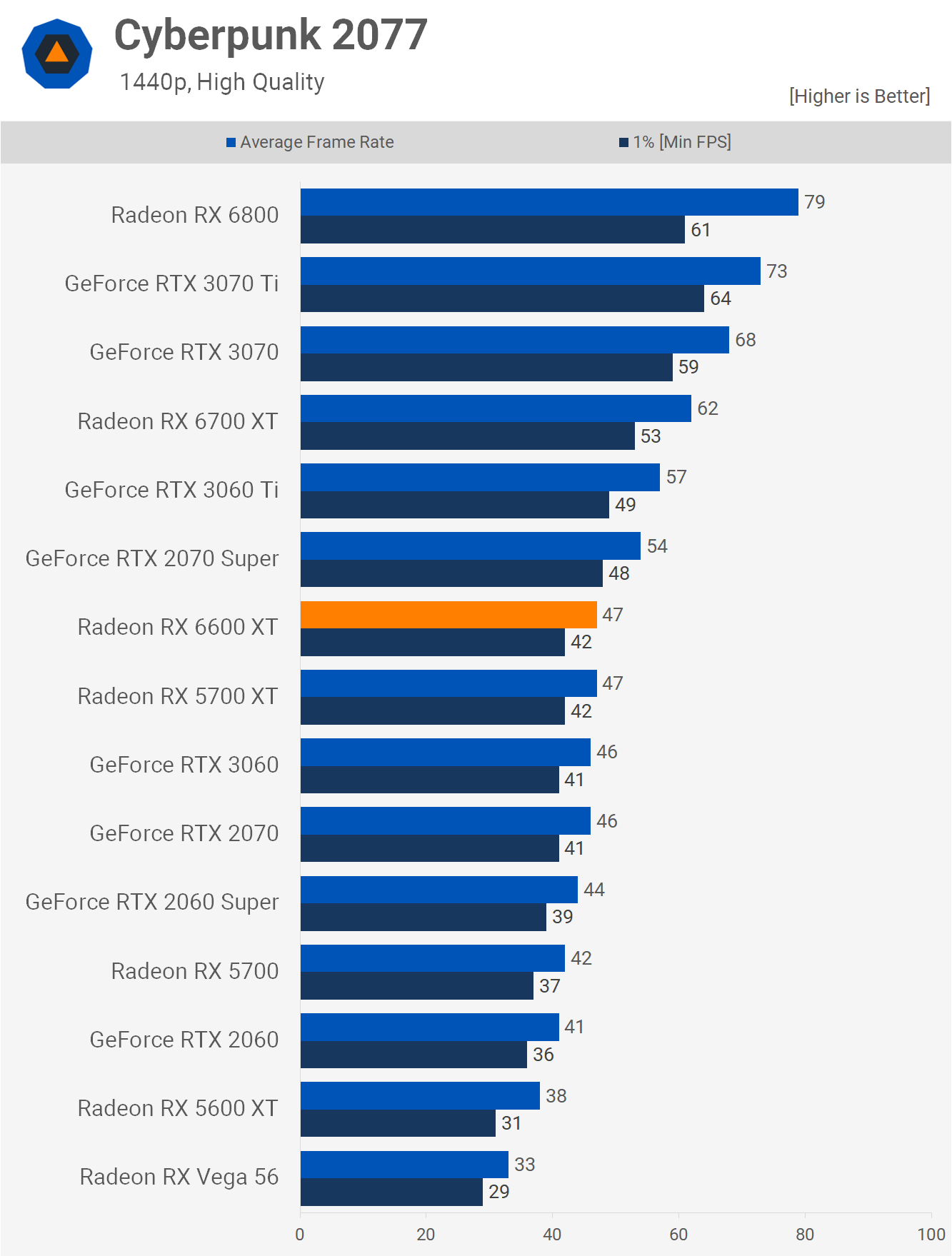

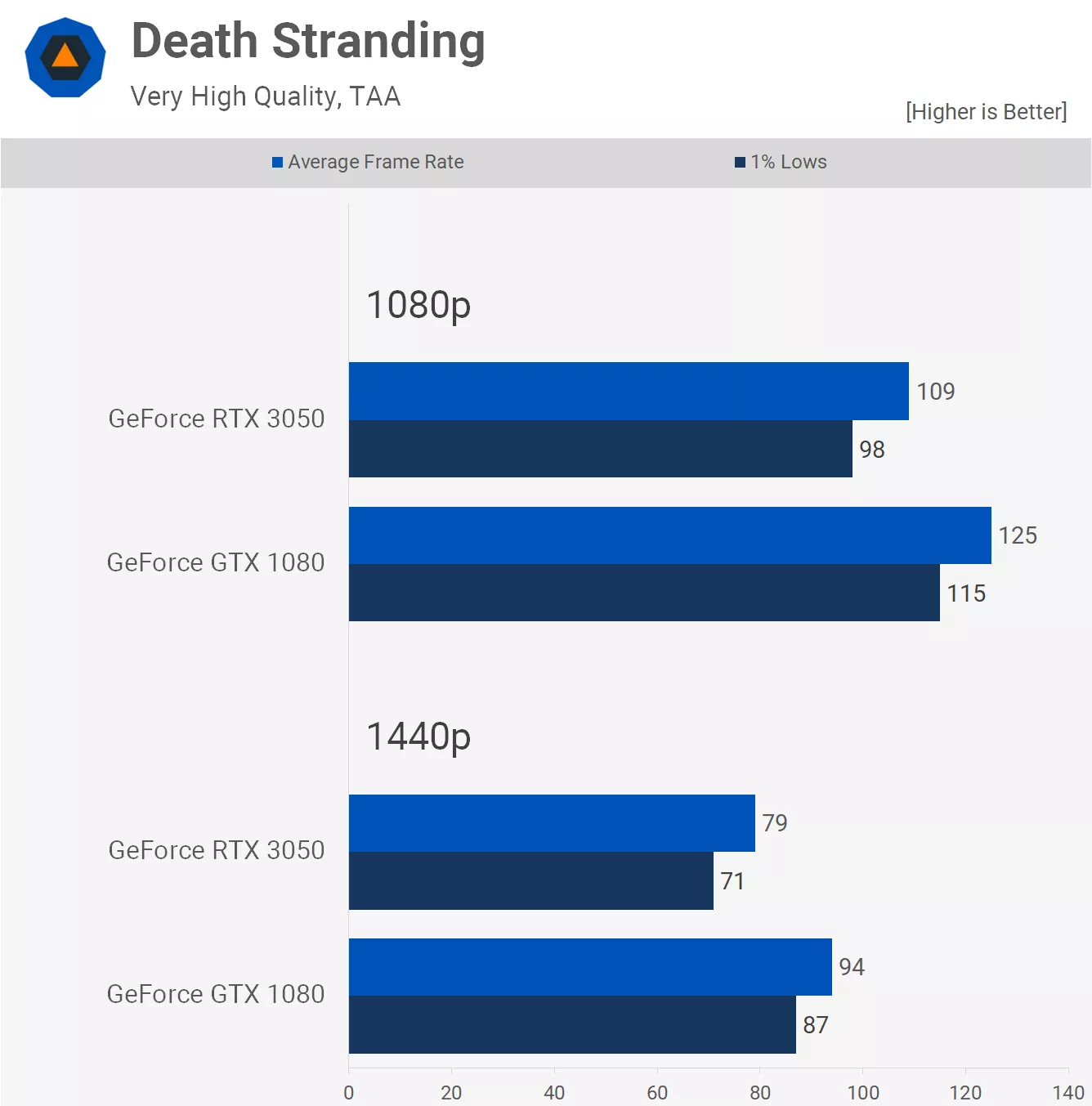

The GeForce GTX 1080 launched in 2016, and six years later the RTX 3050 arrived at half the price. Several GPU generations on, can 2022's $300 GeForce GPU beat 2016's $600 GPU?

https://www.techspot.com/review/2524-budget-geforce-rtx-vs-gtx-1080/