A hot potato: Online services increasingly rely on safeguards that identify and flag child abuse images, but these systems are not infallible, and they can have a devastating impact on the wrongly accused. Such is the case of one father whose Google account is still closed after the company mistakenly flagged medical images of his toddler son's groin as child porn.

According to a New York Times report, the father, Mark, took the photos in February last year on the advice of a nurse ahead of a video appointment with a doctor. Mark's wife used her husband's Android phone to take photos of the boy's swollen genital area and texted them to her iPhone so they could be uploaded to the health care provider's messaging system. The doctor prescribed antibiotics, but that wasn't the end of it.

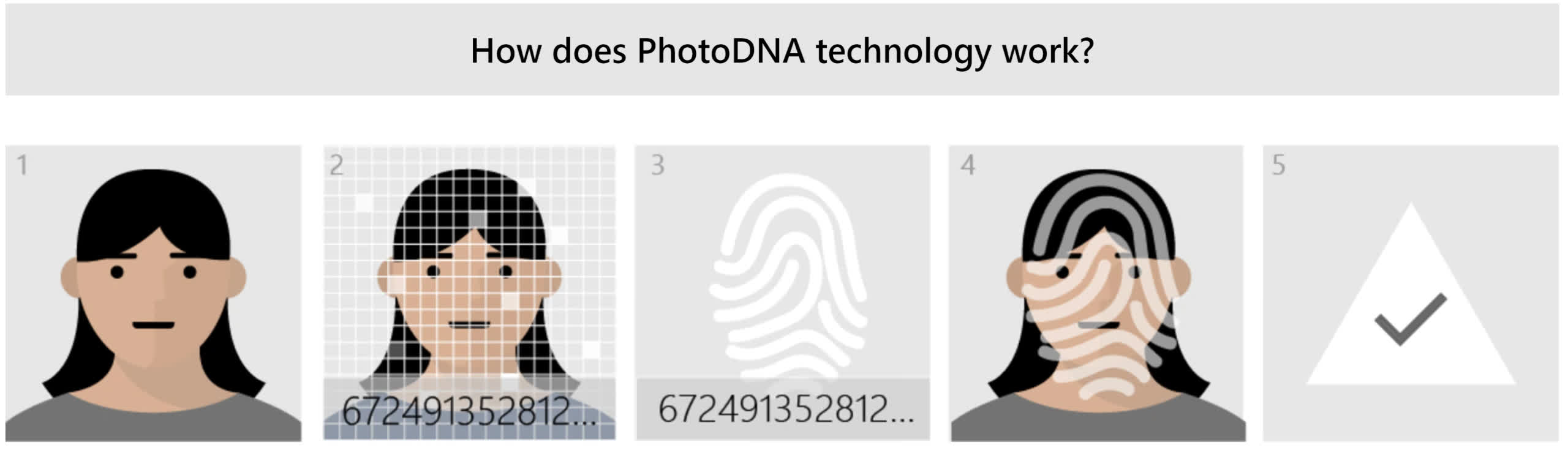

It seems that the images were automatically backed up to Google Photos, at which point the company's artificial intelligence tool and Microsoft's PhotoDNA flagged them as child sexual abuse material (CSAM). Two days later, Mark received a notification informing him that his Google accounts, including Gmail and his Google Fi phone service, had been locked due to "harmful content" that was "a severe violation of Google's policies and might be illegal."


As a former software engineer who had worked on similar AI tools for identifying problematic content, Mark assumed everything would be cleared up once a human content moderator reviewed the photos.

Instead, in December, Mark was investigated by the San Francisco Police Department over "child exploitation videos." He was cleared of any crime, yet Google still hasn't reinstated his accounts and says it is standing by its decision.

"We follow US law in defining what constitutes CSAM and use a combination of hash matching technology and artificial intelligence to identify it and remove it from our platforms," said Christa Muldoon, a Google spokesperson.

Claire Lilley, Google's head of child safety operations, said that reviewers had not detected a rash or redness in Mark's photos. Google staff who review CSAM are trained by pediatricians to look for issues such as rashes, but medical experts are not consulted in these cases.

Lilley added that a further review of Mark's account revealed a video from six months earlier showing a child lying in bed with an unclothed woman. Mark says he cannot remember the video, and he no longer has access to it.

"I can imagine it. We woke up one morning. It was a beautiful day with my wife and son, and I wanted to record the moment," Mark said. "If only we slept with pajamas on, this all could have been avoided."

We're winning. Apple has announced delays to its intended phone scanning tools while it conducts more research. But the company must go further, and drop its plans to put a backdoor into its encryption entirely. https://t.co/d0N1XDnRl3

— EFF (@EFF) September 3, 2021

The incident highlights the problems associated with automated child sexual abuse image detection systems. Apple's plan to scan for CSAM on its devices before photos were uploaded to the cloud was met with outcry from privacy advocates last year, and Apple eventually put the feature on indefinite hold. However, a similar, optional feature is available for child accounts on a Family Sharing plan.

Masthead: Kai Wenzel

https://www.techspot.com/news/95729-google-refuses-reinstate-account-man-after-flagged-medical.html