Forward-looking: After much anticipation and tons of leaks over the last few months, Intel is finally ready to unveil their first "Alder Lake" 12th-gen Core desktop processors, and they're giving us a performance preview based on benchmark tests run in-house. Come next week, you'll also have TechSpot's independent review of the CPUs as we start exploring all the related elements to Alder Lake, like DDR5 vs. DDR4 performance, which motherboards are worth buying, and so on. Lots of fun times ahead.

Intel had already given us a whole range of details about the Alder Lake architecture including the new hybrid design with P-cores and E-cores, but today that all comes together into the actual CPUs that will become available starting November 4.

In previous generations, Intel had launched their mobile processors first, but that's set to change with the 12th-generation lineup. The first CPUs to be released next week are enthusiast desktop K-series models, with everything else scheduled to launch early next year. Given Intel’s desktop parts are finally moving to a new process node with a brand new architecture, it seems they want to lead with their highest performing models before filling out the rest of the series.

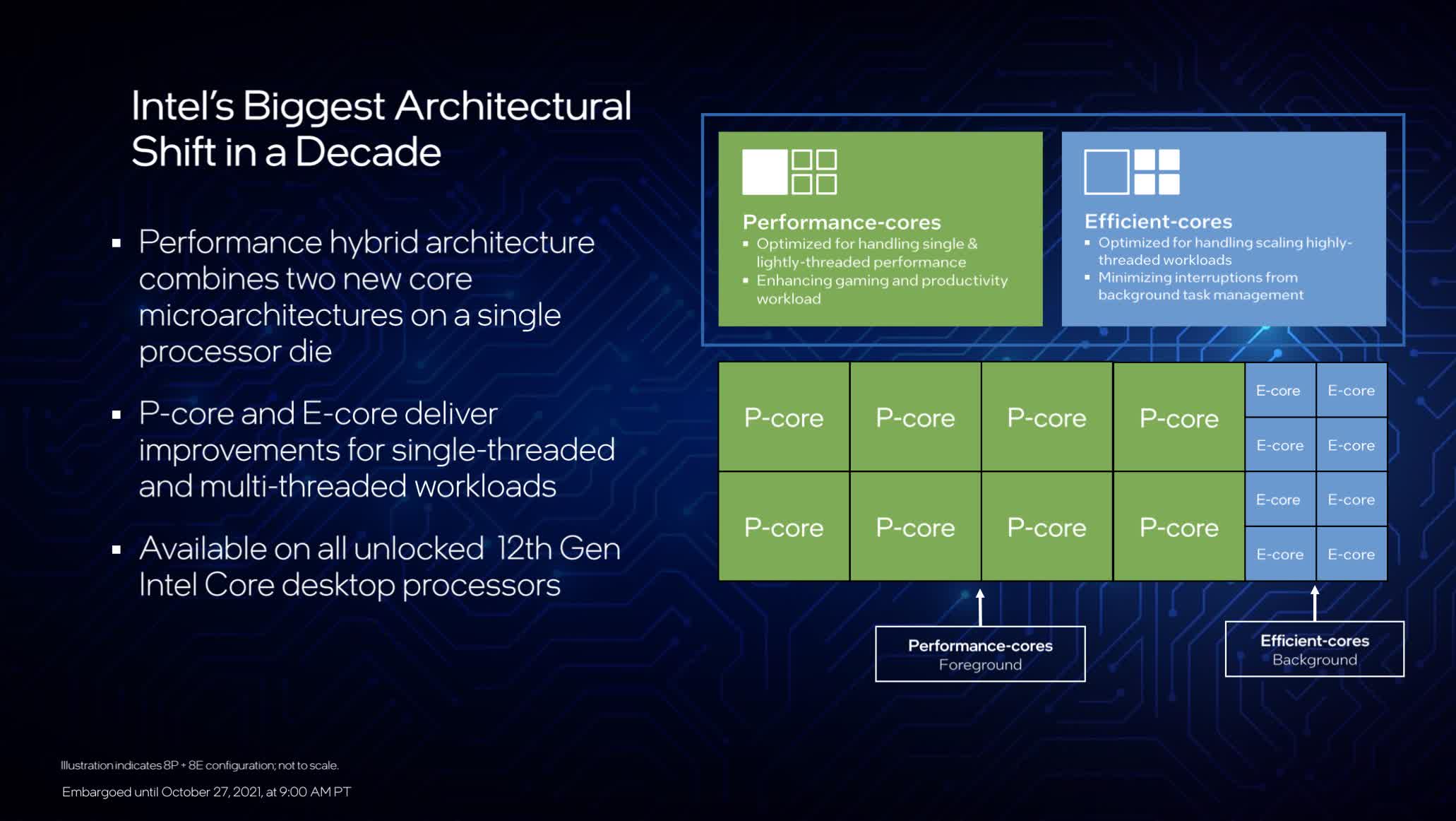

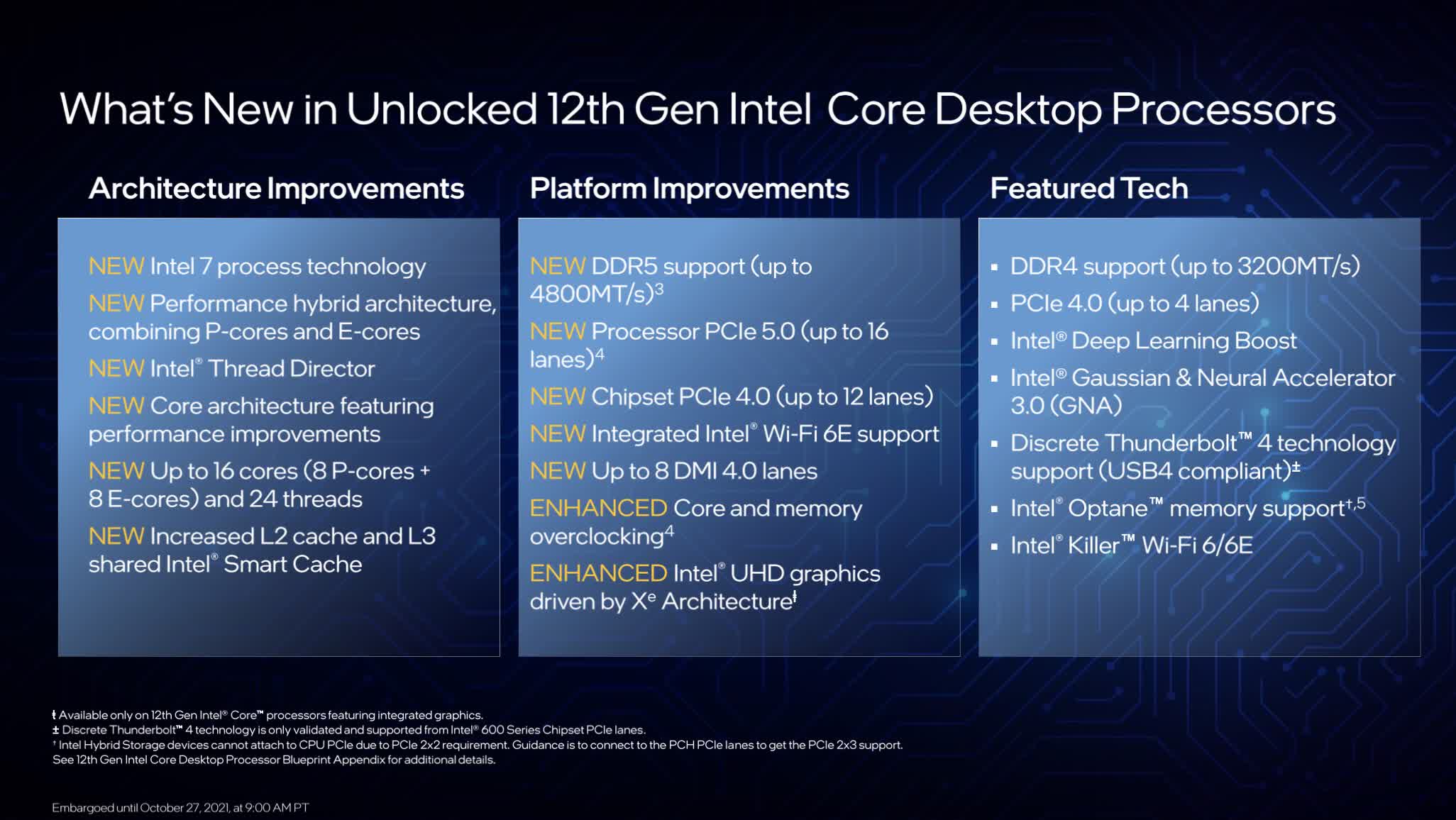

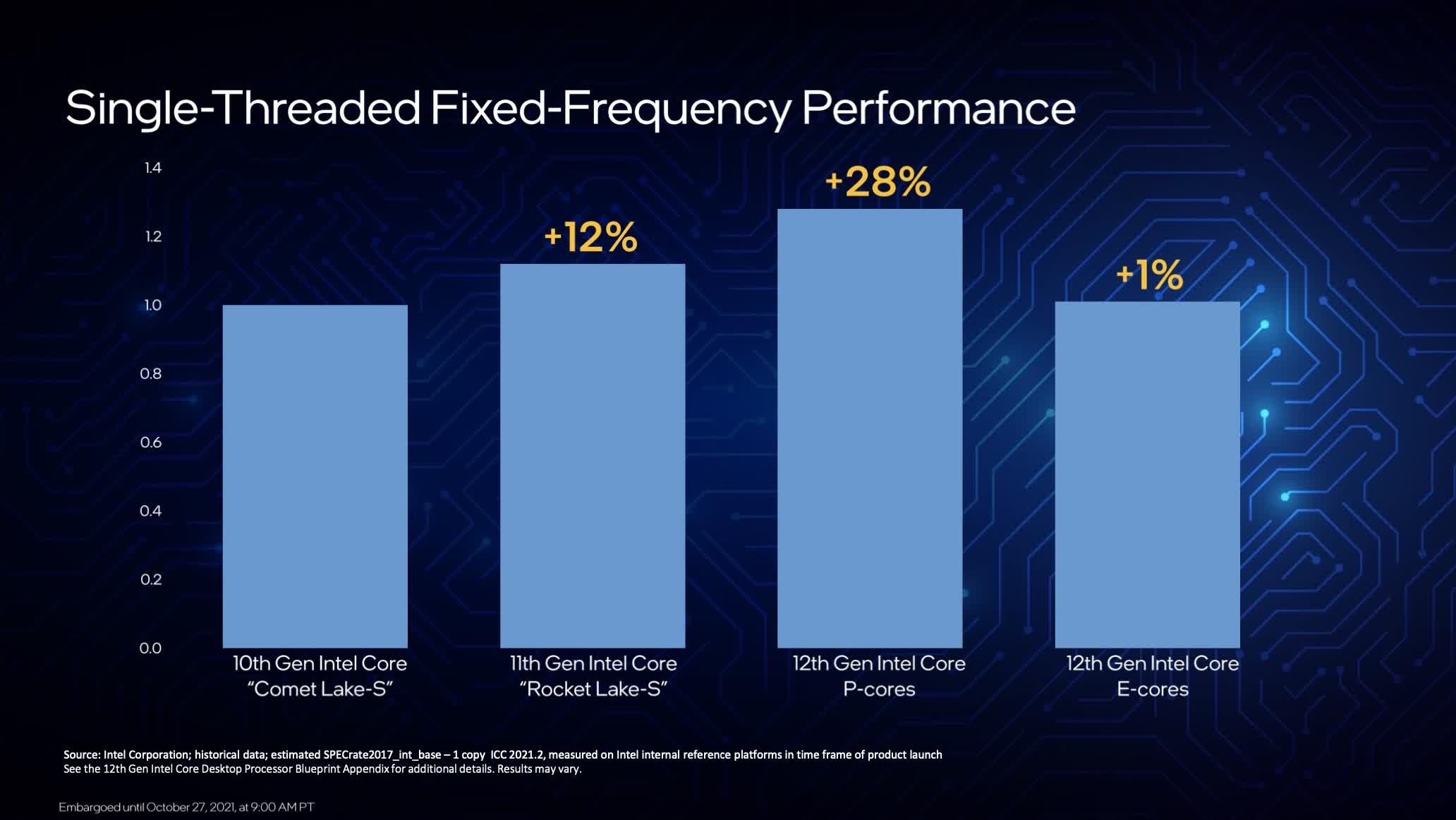

As a quick refresher on Alder Lake, Intel is moving to a new hybrid design that features both performance cores (P-cores) and efficient cores (E-cores). The P-cores are an overhaul of Intel’s existing high performance cores bringing a bigger, wider, deeper design with more cache and improved features. Intel are claiming a 19% IPC improvement for these P-cores versus the Cypress Cove cores seen in Rocket Lake.

Meanwhile the E-cores are a big overhaul of Intel’s old Atom cores, improving performance into the range of Skylake, while being much smaller in terms of die space, and more efficient in terms of power consumption. Intel says that these cores are mostly designed for background applications, but they are no slouch and should significantly help with multi-thread performance in some applications.

Joining all of this together is Intel’s new cache architecture. Each P-core has 1.25 MB of L2 cache, and each group of four E-cores has 2 MB of L2 cache. Then, accessible across both P and E cores, Intel are providing up to 30 MB of shared L3 cache. Rocket Lake topped out at 16 MB of L3 cache for an 8 core design, so the jump up to 16 cores with Alder Lake sees that cache almost double.
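To put numbers on that layout, here is a quick back-of-the-envelope sketch using only the cache figures quoted above. The function and constant names are ours, purely for illustration.

```python
# Cache figures quoted above for Alder Lake.
P_CORE_L2_MB = 1.25        # L2 per P-core
E_CLUSTER_L2_MB = 2.0      # L2 shared by each group of four E-cores
E_CORES_PER_CLUSTER = 4


def total_l2_mb(p_cores: int, e_cores: int) -> float:
    """Total L2 in MB for a given P-core / E-core configuration."""
    e_clusters = e_cores // E_CORES_PER_CLUSTER
    return p_cores * P_CORE_L2_MB + e_clusters * E_CLUSTER_L2_MB


# Fully enabled die: 8 P-cores and 8 E-cores.
l2 = total_l2_mb(p_cores=8, e_cores=8)

# Shared L3: up to 30 MB on Alder Lake vs. 16 MB on Rocket Lake.
l3_ratio = 30 / 16

print(f"L2 total: {l2} MB, L3 ratio: {l3_ratio:.3f}x")
```

For the full die that works out to 14 MB of L2 on top of the 30 MB of L3, with the L3 pool 1.875 times the size of Rocket Lake's.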

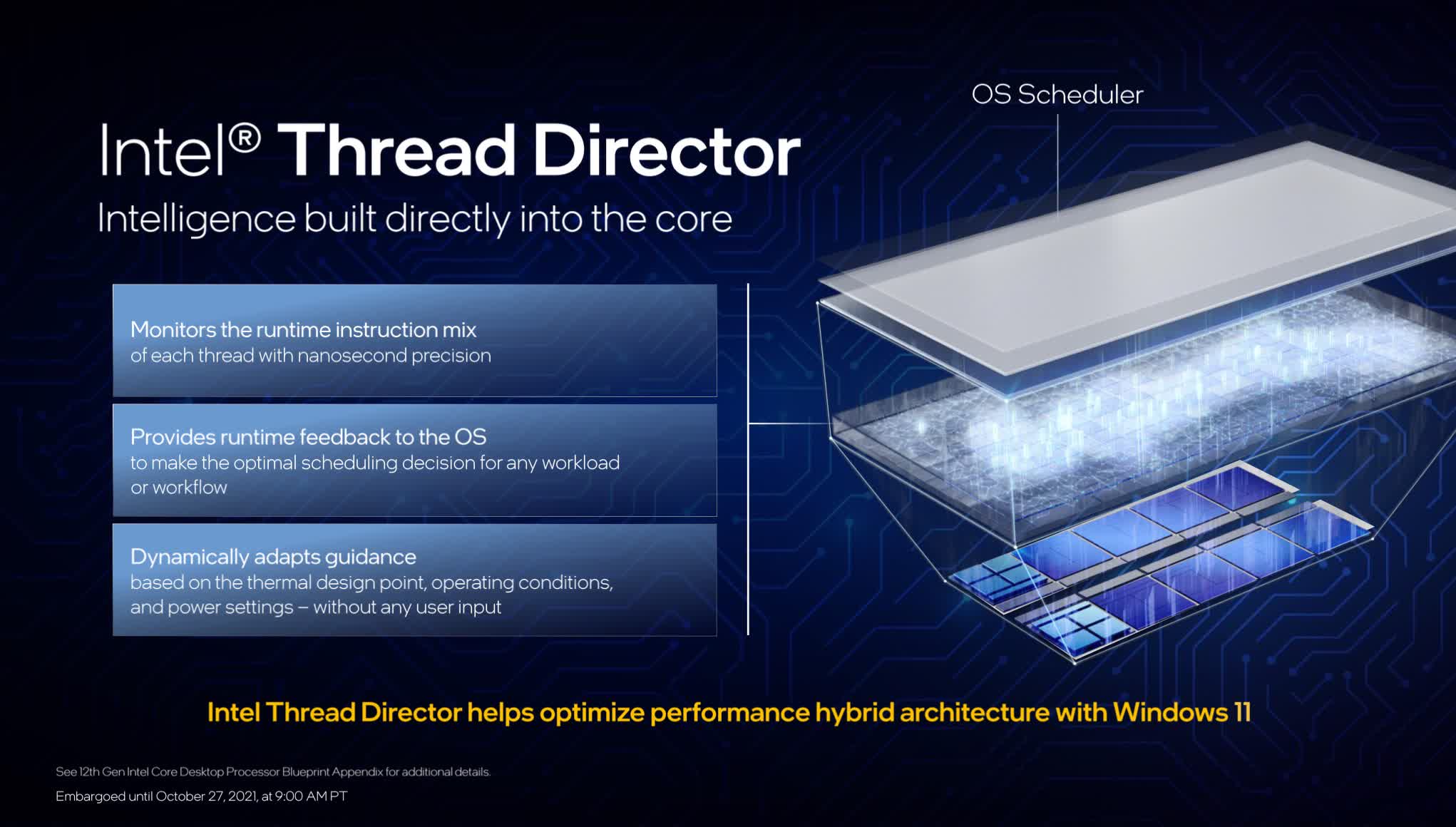

Also part of Alder Lake is the Thread Director, a hardware scheduling feature that assists Windows 11 in allocating tasks to the appropriate cores. When you have a hybrid design, it’s of utmost importance that applications are run on the right cores – foreground, high performance apps on the P-cores, and background tasks on the E-cores. Thread Director provides feedback to Windows 11 that assists with that process.

The CPUs

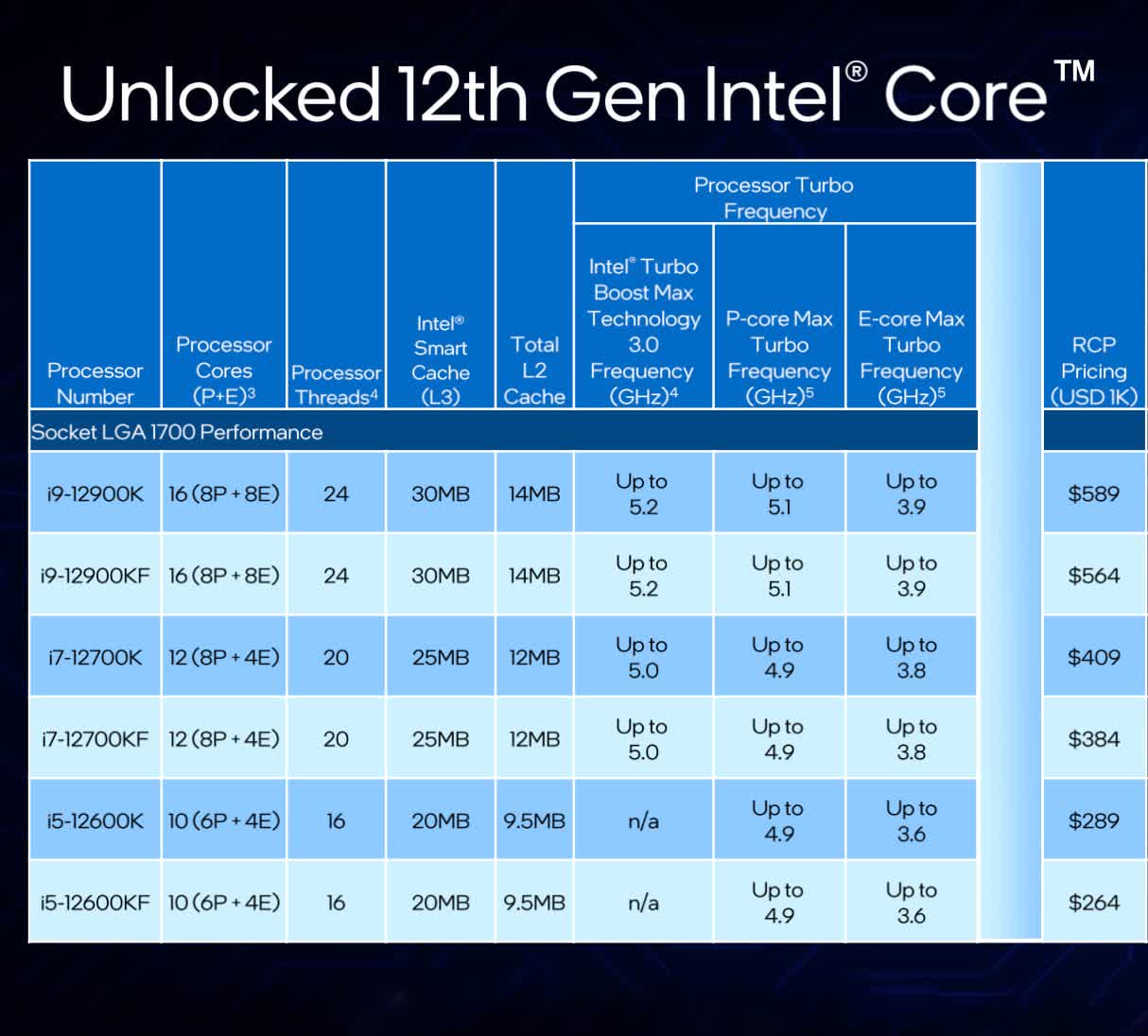

Intel are launching six 12th-gen Core models, three in the K series and three in the KF series. So essentially we’re getting a Core i9 design, a Core i7 design and a Core i5 design, each with and without integrated graphics.

The Core i9 model, the i9-12900K, is the fully unlocked Alder Lake die. It brings with it 8 P-cores and 8 E-cores for a total of 16 CPU cores and 24 threads. Why not 32 threads? Well, the E-cores do not have Hyper-Threading, so the P-core cluster is providing 8 cores and 16 threads, while the E-core cluster has 8 cores and 8 threads. There’s also 30 MB of L3 cache.

Because there are two types of cores in this CPU, clock speeds are more complex than before. The P-cores run at between 3.2 GHz base and 5.2 GHz boost, while the E-cores sit at 2.4 to 3.9 GHz. So while the top frequency the P-cores can hit is similar to previous generations, the E-cores are clocked a bit lower in addition to having lower IPC.

The Core i7-12700K brings 8 P-cores and 4 E-cores for a total of 12 cores and 20 threads, with 25 MB of L3 cache. Boost clock speeds are slightly lower than the Core i9 model, though base frequency is higher: 3.6 to 5.0 GHz for the P-cores, and 2.7 to 3.8 GHz for the E-cores.

Then we have the Core i5-12600K, which has 6 P-cores and 4 E-cores for a total of 10 cores and 16 threads, plus 20 MB of L3 cache. The P-cores are clocked between 3.7 and 4.9 GHz, while the E-cores sit at 2.8 to 3.6 GHz.
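The core and thread counts follow a simple rule: every P-core contributes two threads and every E-core contributes one. A minimal sketch of that arithmetic for the three K-series designs follows; the `HybridCpu` class is our own illustrative helper, not anything Intel provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridCpu:
    name: str
    p_cores: int
    e_cores: int

    @property
    def cores(self) -> int:
        return self.p_cores + self.e_cores

    @property
    def threads(self) -> int:
        # P-cores run two threads each; E-cores run one.
        return 2 * self.p_cores + self.e_cores


LINEUP = [
    HybridCpu("Core i9-12900K", p_cores=8, e_cores=8),
    HybridCpu("Core i7-12700K", p_cores=8, e_cores=4),
    HybridCpu("Core i5-12600K", p_cores=6, e_cores=4),
]

for cpu in LINEUP:
    print(f"{cpu.name}: {cpu.cores} cores, {cpu.threads} threads")
```

This reproduces the 16/24, 12/20 and 10/16 core and thread counts listed above.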

All models support overclocking, and the K-SKUs with integrated graphics have Xe-based UHD Graphics 770, though Intel hasn't gone into much detail on what this would bring. All models also include DDR5-4800 and DDR4-3200 memory support, though you’ll have to choose which technology to use, as you can’t use both at the same time.

Intel are not messing around with pricing. Even though AMD’s competing Ryzen 9 5950X CPU with 16 cores has an MSRP of $800, Intel is going straight for the throat by pricing the 16-core Core i9-12900K at $589. You’ll then be able to save around $25 opting for the Core i9 KF model. This is looking very competitive, although we haven’t seen how it performs yet.

The Core i7 and Core i5 models are even more aggressive. The 12700KF is going for a $384 tray price, roughly the same as the current price for AMD’s Ryzen 7 5800X. However, Intel is offering not just 8 performance cores, like the 5800X, but four efficient cores as well, which could deliver a decent boost to multi-threaded performance. Intel is clearly looking to regain enthusiast market share with this pricing.

Then we have the Core i5-12600KF at just $264, lower than the Ryzen 5 5600X’s $300 price tag, but with 10 cores instead of 6. Personally, I’m pretty excited to see this sort of price war brought back to the desktop market as AMD hasn’t exactly been offering the best value parts with their Ryzen 5000 lineup, even though performance has been impressive. If Intel can deliver great performance with these CPUs at a lower price than AMD, that’s a big win.
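As a rough illustration of the gap, the snippet below divides the prices quoted above by core count. Treat it as a crude yardstick only: it counts P-cores and E-cores as equal, which they are not, and it says nothing about actual performance.

```python
# (price in USD, total cores), taken from the figures quoted above.
PARTS = {
    "Core i9-12900K": (589, 16),
    "Ryzen 9 5950X": (800, 16),
    "Core i5-12600KF": (264, 10),
    "Ryzen 5 5600X": (300, 6),
}


def price_per_core(price_usd: float, cores: int) -> float:
    """Naive price per core; ignores the P-core / E-core difference."""
    return price_usd / cores


for name, (price, cores) in PARTS.items():
    print(f"{name}: ${price_per_core(price, cores):.2f} per core")
```

On this naive measure the 12600KF comes in at $26.40 per core against $50.00 for the 5600X.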

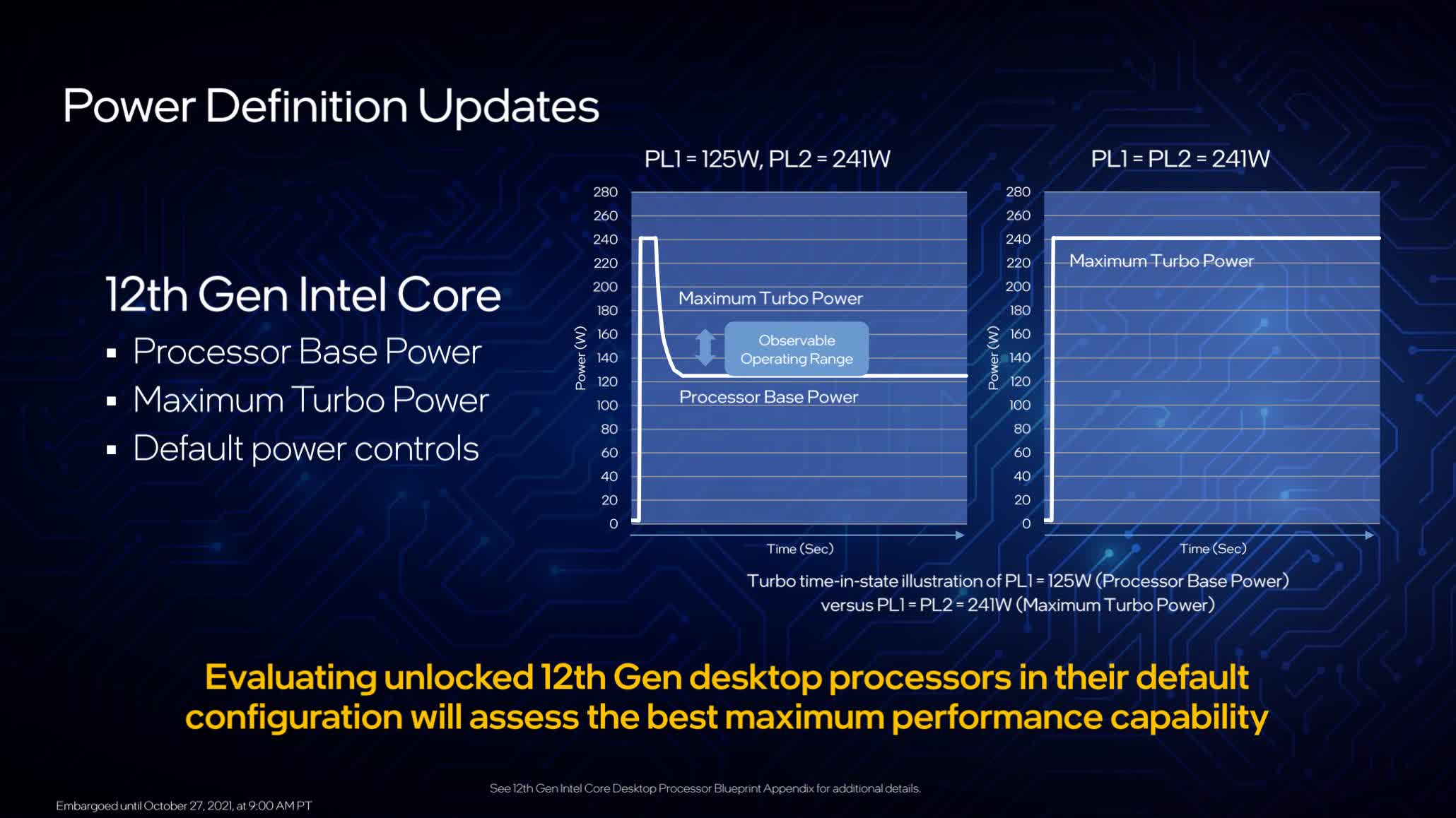

Another interesting thing to note about the lineup is that Intel has changed the way they report power, deprecating the "TDP" in favor of two metrics: processor base power, and maximum turbo power. Processor base power is essentially the same as the “PL1” power limit of previous generations, and maximum turbo power is the same as PL2 – it’s just that both of these values are being exposed and listed on Intel’s spec sheet to make it much easier for buyers to see what power level these chips will run at. No more "125W" CPUs that run in excess of 200W: Intel are showing you the full turbo value, which ranges from 150W for the Core i5s, to 241W for the Core i9s.

But there’s a bigger change here as well...

Intel is finally updating their "default" power configuration to fall in line with how motherboard makers have been running these CPUs for years now. For all K-SKU processors, the default power mode will be to run the CPU at its maximum turbo power indefinitely (the graph on the right) for the best performance. Previously the default configuration was technically what you see in the left graph, except almost all motherboards overrode this by default to run the CPU like the graph on the right. Both configurations were always in-spec, but it caused confusion as to which configuration was “correct” or “default” and which mode reviewers should test with.

Intel is clearing that up this generation, so there’s no confusion and no argument over which way these CPUs should be run. The Intel default spec is now to run the CPU at the maximum turbo power indefinitely, which is how motherboards were already running these CPUs out of the box. Running at base power is still in-spec as well, but it’s now being clarified as an optional configuration that you’ll have to enable.
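The difference between the two configurations can be sketched as a simple step model. This is a deliberate simplification: real hardware tracks a running average of power rather than applying a hard cutoff, and the 56-second turbo window used here is a hypothetical value chosen only for illustration. The 125 W and 241 W figures are the Core i9 base and maximum turbo power.

```python
def package_power_limit(
    t_seconds: float,
    base_w: float,
    turbo_w: float,
    tau_seconds: float,
    sustained_turbo: bool,
) -> float:
    """Power limit in watts at time t after a heavy load starts (step model)."""
    if sustained_turbo or t_seconds < tau_seconds:
        return turbo_w
    return base_w


BASE_W = 125.0    # processor base power (the old PL1)
TURBO_W = 241.0   # maximum turbo power (the old PL2)
TAU_S = 56.0      # hypothetical turbo window, not an Intel-published figure here

for t in (10, 60, 600):
    old = package_power_limit(t, BASE_W, TURBO_W, TAU_S, sustained_turbo=False)
    new = package_power_limit(t, BASE_W, TURBO_W, TAU_S, sustained_turbo=True)
    print(f"t={t:>3}s  previous default: {old:.0f} W  new K-SKU default: {new:.0f} W")
```

Under the previous default the limit drops back to base power once the window expires, while the new K-SKU default holds the turbo limit for as long as the load lasts.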

Platform Features

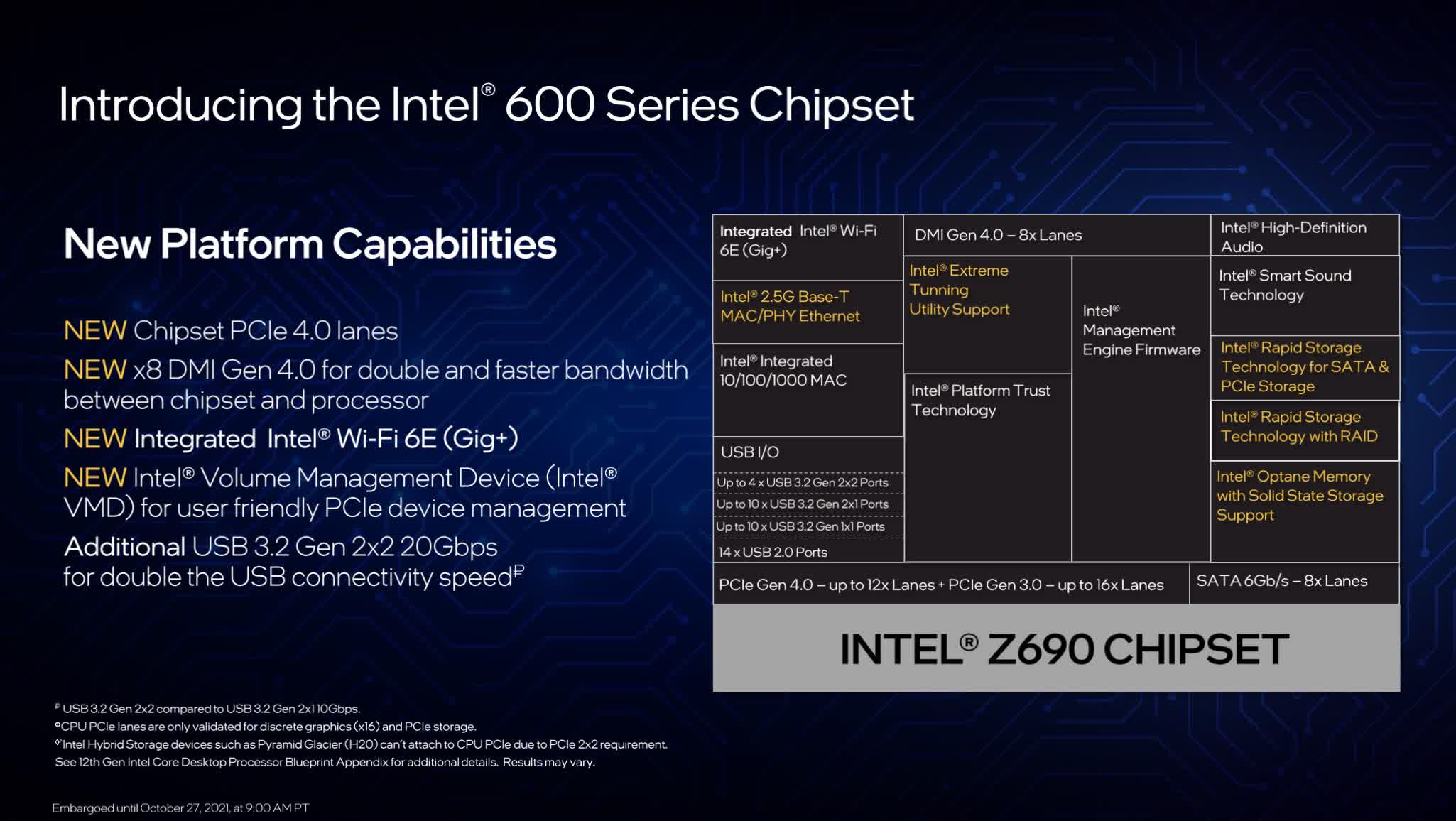

Each Alder Lake CPU announced today has 20 PCIe lanes direct from the CPU. This is split into 16 lanes of PCIe 5.0, and 4 lanes of PCIe 4.0. In addition to this, the new Z690 chipset is providing up to 12 PCIe 4.0 lanes and up to 16 PCIe 3.0 lanes, plus various USB port configurations. This is a substantial improvement to the PCIe connectivity compared to prior generations, with faster lanes direct from the CPU, and the new addition of PCIe 4.0 lanes from the chipset.
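For a sense of scale, the sketch below tallies the lanes mentioned above and estimates raw one-direction bandwidth from the standard per-lane signalling rates (8, 16 and 32 GT/s for PCIe 3.0, 4.0 and 5.0, all with 128b/130b encoding). Protocol overhead is ignored, so real-world throughput will be lower; the names are ours.

```python
# Per-lane signalling rates in GT/s for each PCIe generation.
GT_PER_S = {"3.0": 8, "4.0": 16, "5.0": 32}


def bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, ignoring protocol overhead."""
    # 128b/130b encoding: 128 payload bits per 130 bits on the wire; 8 bits per byte.
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


CPU_LANES = {"5.0": 16, "4.0": 4}           # direct from the CPU
CHIPSET_LANES_MAX = {"4.0": 12, "3.0": 16}  # "up to" figures for Z690

print("CPU lanes:", sum(CPU_LANES.values()))
print("Chipset lanes (max):", sum(CHIPSET_LANES_MAX.values()))
for gen, lanes in CPU_LANES.items():
    print(f"PCIe {gen} x{lanes}: ~{bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

That puts the x16 PCIe 5.0 link at roughly 63 GB/s in each direction, and the x4 PCIe 4.0 link at just under 8 GB/s.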

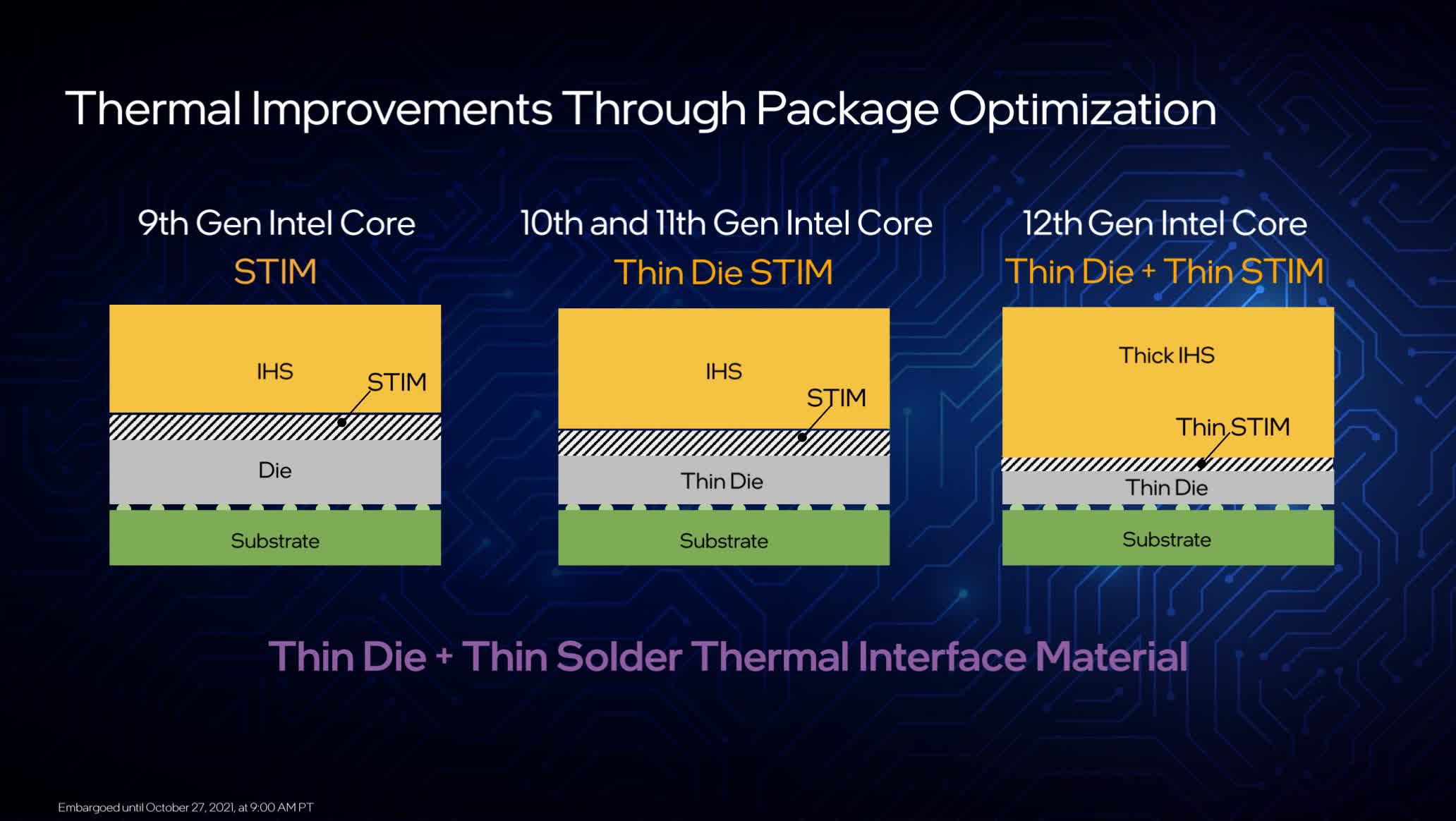

The package for the CPUs is different, too, requiring the LGA 1700 socket which is larger and more rectangular than previous Intel sockets. The CPUs include what Intel is calling a “thick IHS + thin STIM” design: the die is 25% thinner, the solder thermal interface material is 15% thinner, and the IHS is now thicker.

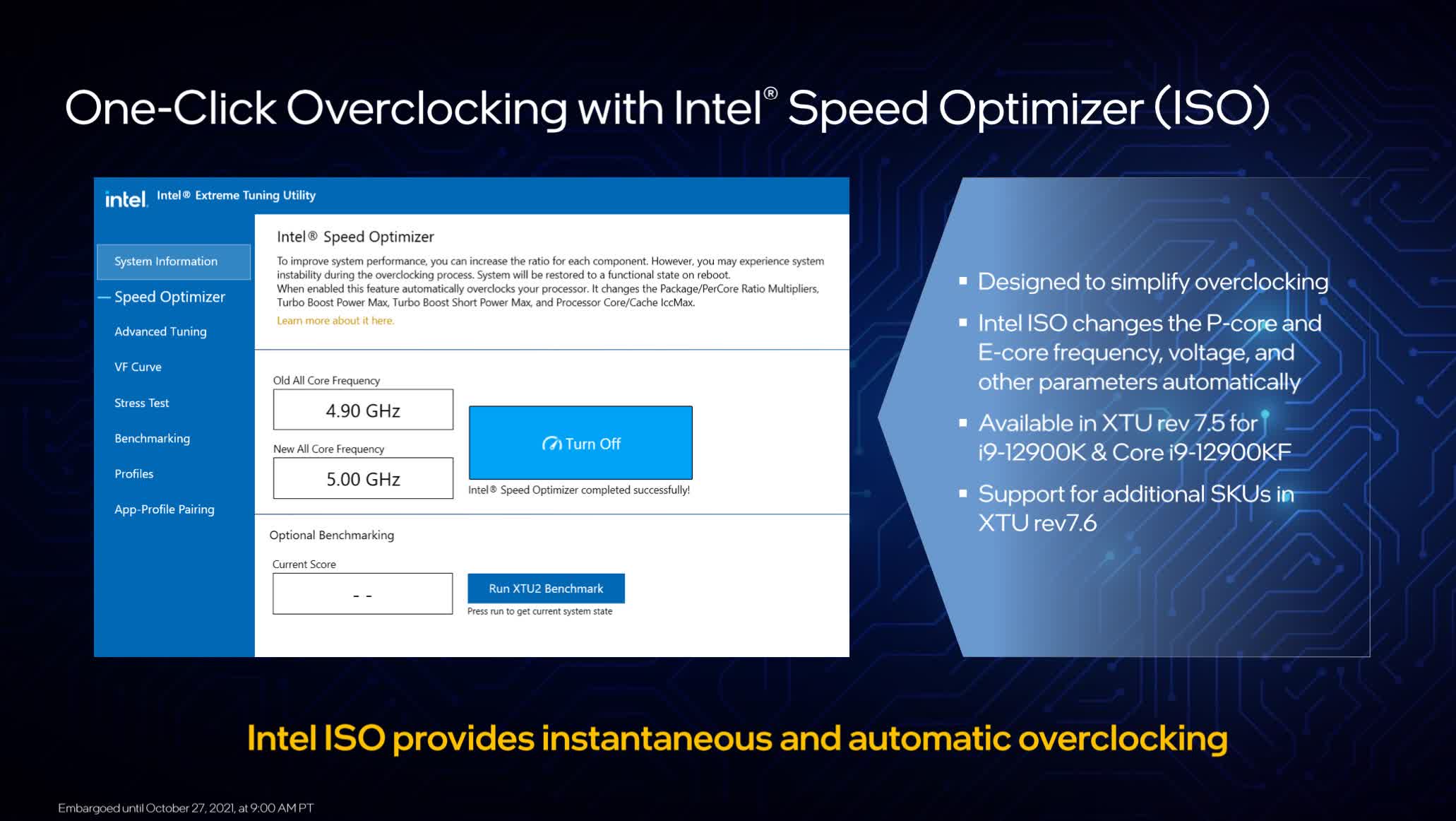

For overclockers, there are lots of features provided with Alder Lake. You’ll have full control over the core ratios for both the P-cores and E-cores, as well as control over BCLK and ring/cache frequency. Intel lets you drill down even further if you want with stuff like per-core enabling, full voltage controls, AVX offsets and so on. All of this stuff will be available in a new version of the Extreme Tuning Utility, including a one-click overclocking tool.

Then for memory, Intel is introducing XMP 3.0 with DDR5. We already know Alder Lake will be the first desktop platform to support DDR5 memory – and again you’ll have to choose between using DDR4 and DDR5, they can’t be used at the same time and you’ll likely need to buy either a DDR4 or DDR5 motherboard. But if you do choose DDR5, you’ll gain access to XMP 3.0, which introduces new features.

The big one is the increase in stored profiles from 2 to 5: three are vendor profiles, and two are rewritable, user-configurable profiles. There are also descriptive profile names, which will make it much easier to know what each profile does, along with a few other improvements.

So that’s most of the platform features: P-cores and E-cores, new cache layout, DDR5 support with XMP 3.0, PCIe 5.0 support and improved PCIe from the chipset, new overclocking features and of course the SKU list.

Performance Preview

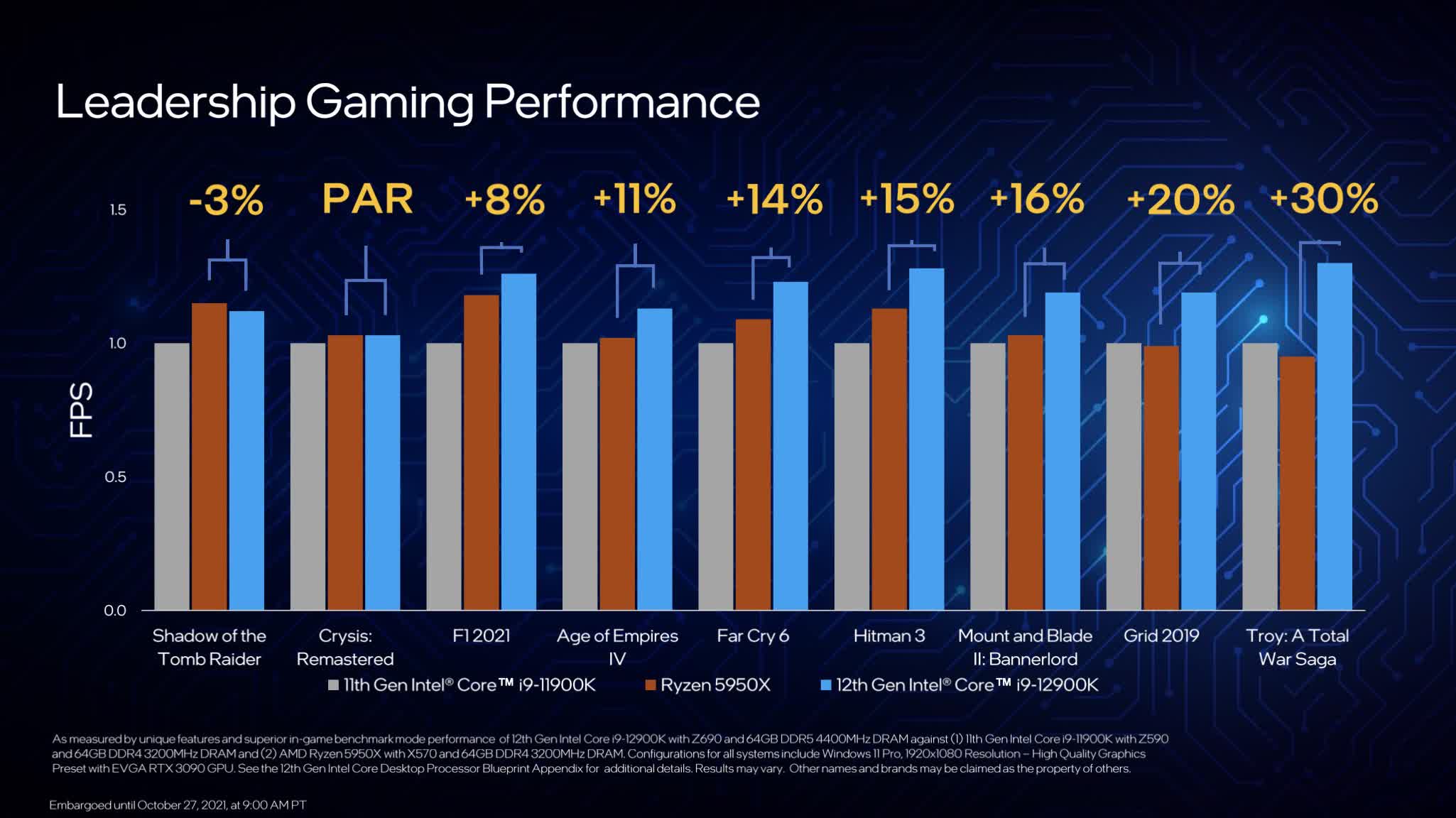

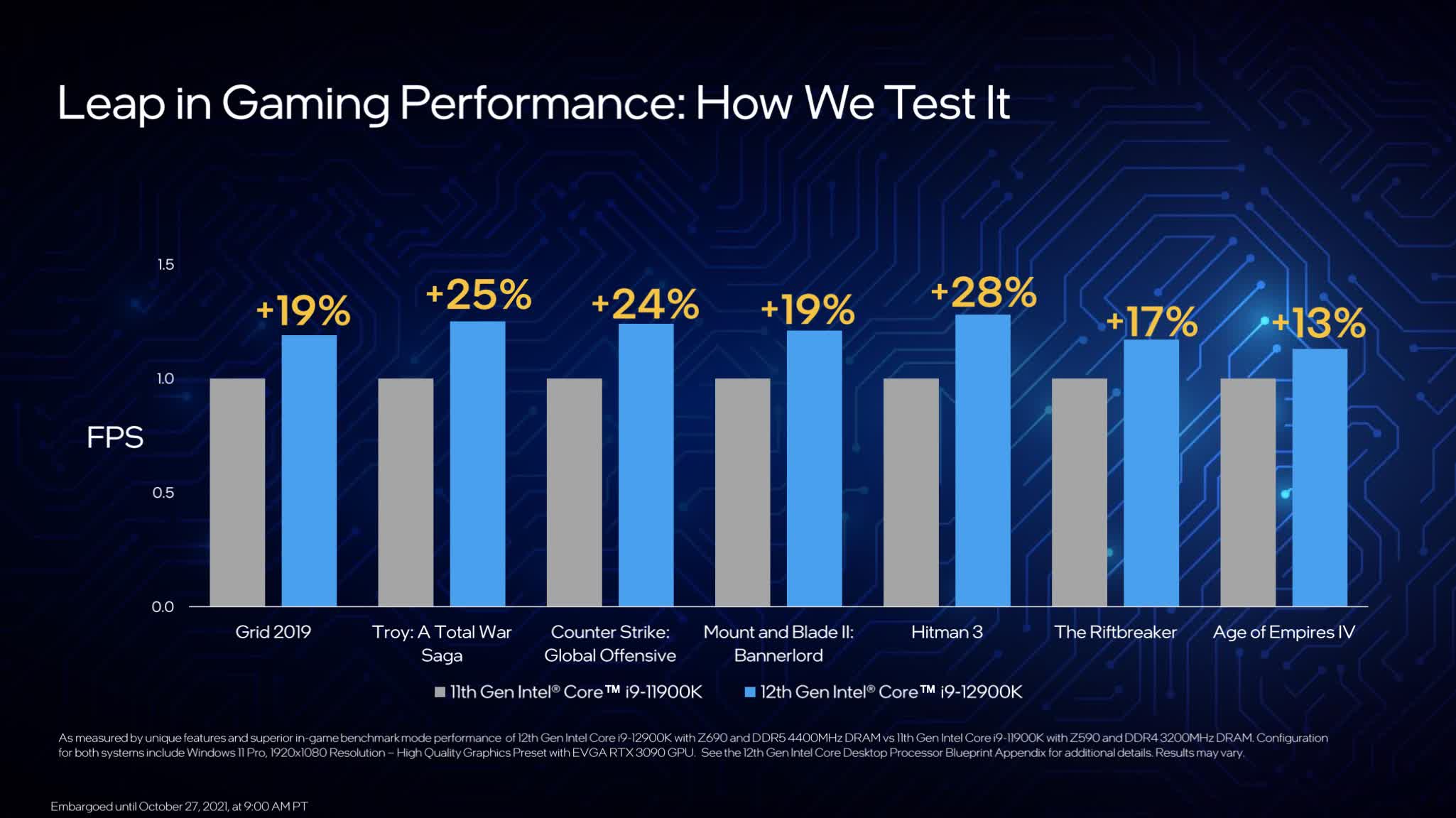

First up, we have gaming performance. Intel claims their new 12th-gen processors are the world’s best for gaming. Across 31 games tested, Intel is showing the Core i9-12900K to be 13% faster on average than the 11900K. These benchmarks were run at 1080p with High settings, using a GeForce RTX 3090. The 12th-gen platform is using DDR5-4400 memory, which Intel incorrectly lists as 4400 “MHz” (the figure is a transfer rate in MT/s, not a clock speed). Intel says DDR5 should be faster for gaming than DDR4 based on their testing, though mature DDR4 still performs well according to them.

Intel is showing the 12900K being anywhere from slightly slower than the Ryzen 9 5950X to 30 percent faster. However, Intel does admit that these benchmarks were captured on Windows 11 before the performance patch for AMD CPUs was available, so the results aren’t as meaningful as they would have been had they tested the 5950X in its best performing mode, such as using Windows 10 or waiting for the patch to be available. Intel also claims that the 12900K would still be the “world’s fastest gaming CPU” if they used DDR4 memory, though they didn’t show the data to back this up.

It should also be noted that Intel tested with Windows 11’s virtualization-based security feature enabled. We recently showed in our Windows 11 vs Windows 10 benchmark test that VBS does hurt performance on the Core i9-11900K in games, though the degree to which performance is impacted depends on the game. If the 12900K is superior at managing or accelerating VBS, then the gains shown versus 11th-gen may be greater than they would have been if both platforms were tested with VBS disabled. That’s something we’ll have to explore in our review.

Intel spent some time talking about the software challenges of working with a hybrid CPU architecture and some of the best practices for developing applications. It sounds like while Alder Lake should work fine for a large number of applications, there will be some initial teething issues. Of specific interest for gamers is that Denuvo DRM wasn’t initially compatible with Alder Lake. Intel worked with Denuvo to correct this, but the fix will need to be applied as a patch to affected games. Intel said 91 games were impacted, and 32 have yet to be fixed, though 16 of those should be fixed before Alder Lake launches.

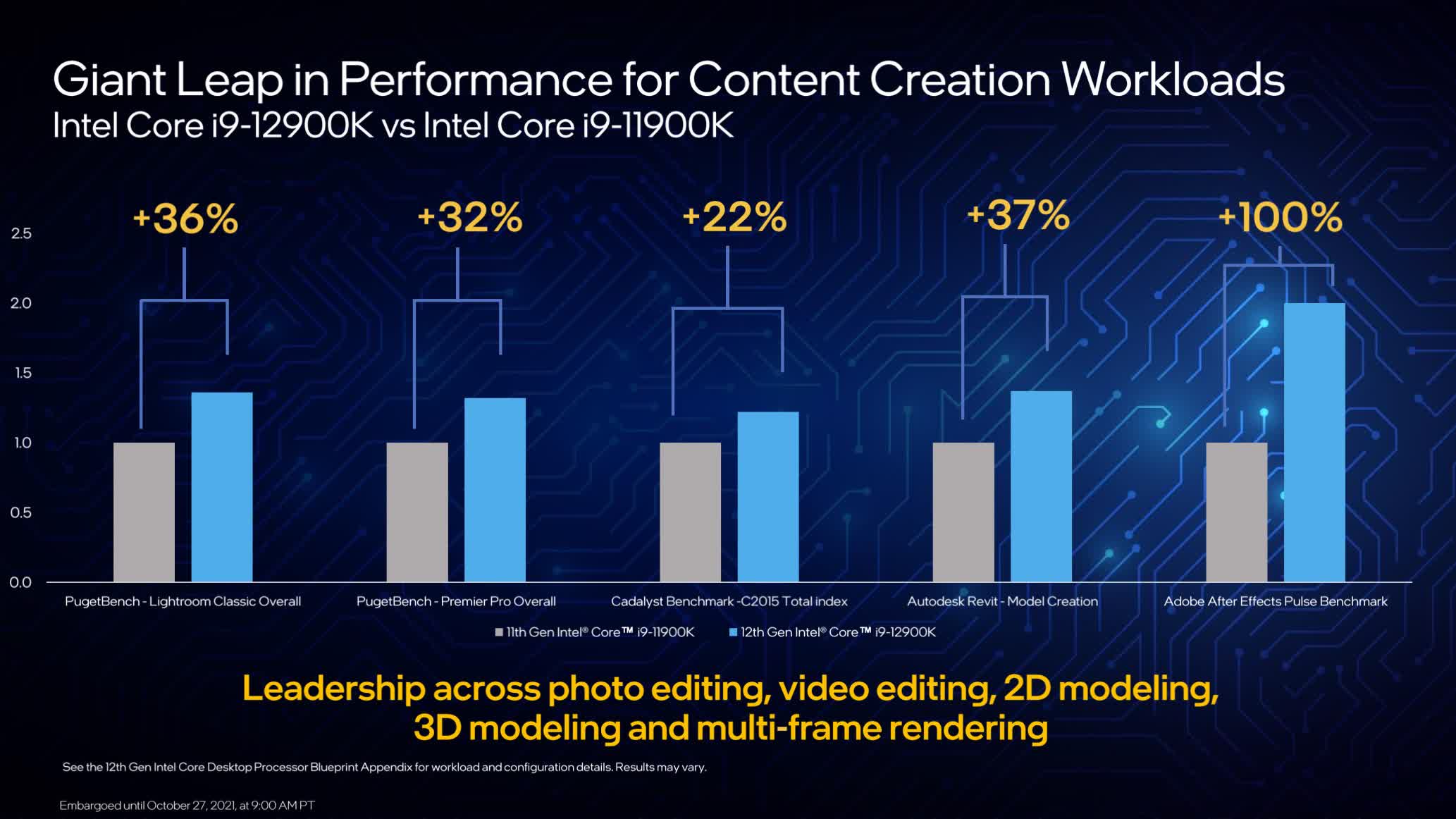

For productivity performance, Intel is talking about significant performance gains over previous parts. For example, Intel is showing gains in excess of 30% comparing the 12900K to the 11900K in Adobe applications, and similar gains in apps like Autodesk Revit. In more single-threaded productivity apps, Intel expect gains of at least 15%, such as in UL Procyon.

However, at no point during Intel’s presentation did they compare 12th-gen CPUs to AMD’s competitors in productivity workloads, nor did they make any claims about being the world’s fastest chip for productivity, like they did for gaming. This suggests that Intel are unlikely to beat AMD in productivity. It’ll also be interesting to see how the 12900K compares to the 10900K in some of these apps, given the 10900K was faster than the 11900K at times.

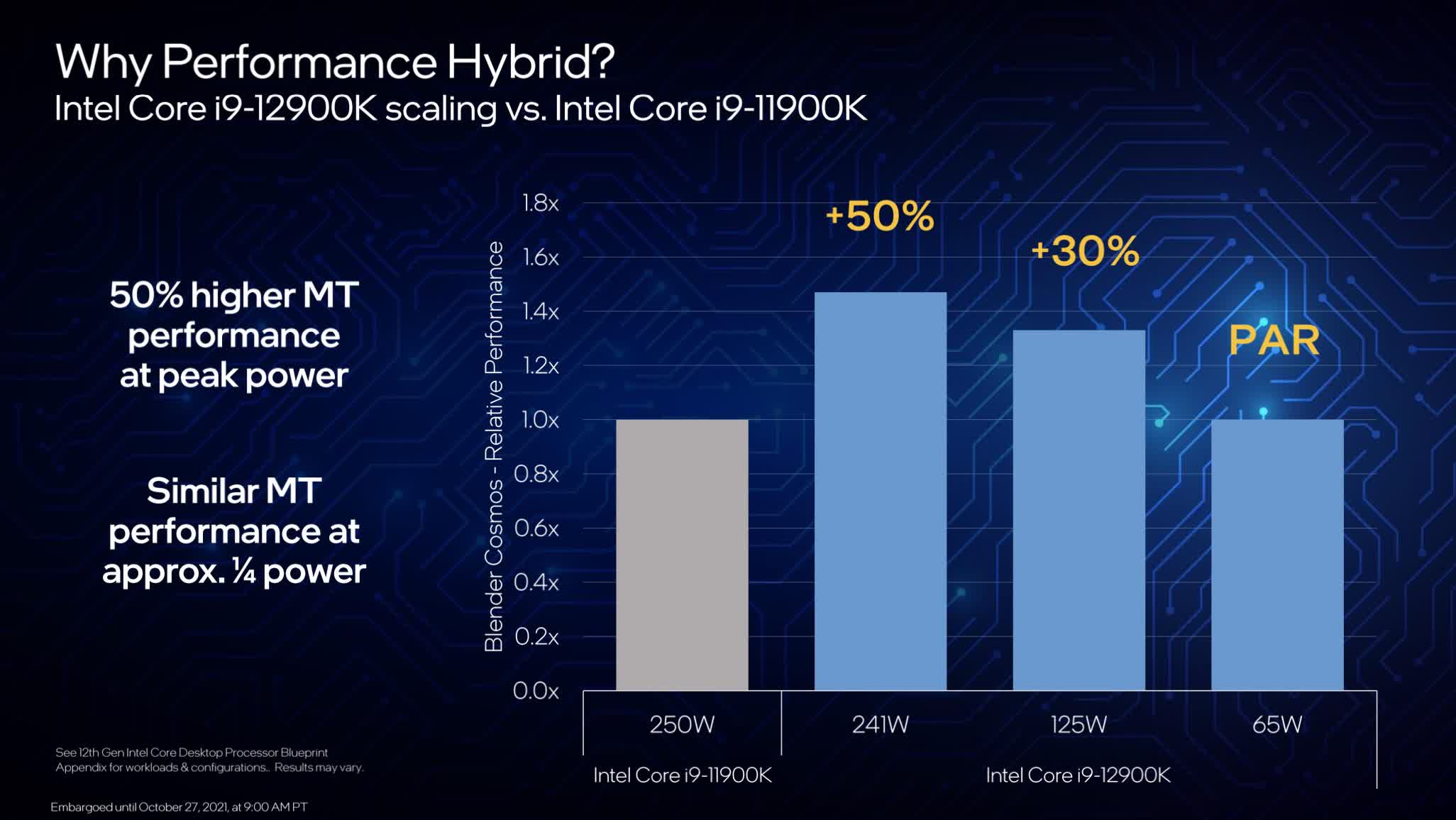

Intel also showed an IPC benchmark comparing their various core architectures at the same frequency. 12th-gen P-cores ended up 28% faster than 10th-gen, and 14% faster than 11th-gen, while the E-cores effectively matched 10th-gen in IPC despite being significantly more efficient. In fact, Intel believes the 12900K is 50% faster than the 11900K at the same peak power level, 30% faster when limited to 125W, and on par when using just 65W compared to the 11900K’s 250W. In other words, Intel claims this new architecture is significantly more efficient, especially at lower power levels.

Availability

Intel is opening up pre-orders for their 12th-gen Core parts right now, with availability scheduled for November 4th. What Intel has shown here is pretty decent and certainly exciting, especially from a value perspective, but it’ll still have to go through our full benchmark suite in the next week or so.

There are other questions that remain unanswered for now, such as how the 12900K compares to the Ryzen 9 5950X in productivity benchmarks. We don't have a clear idea about total platform cost either. While these 12th-gen CPUs seem cheaper than AMD’s counterparts, this isn’t factoring in the cost of Z690 motherboards and DDR5 memory, which we expect to be quite expensive. Most of these questions will be answered when we review the CPUs next week, so check back soon for all of that testing and beyond.

https://www.techspot.com/news/91949-intel-12th-gen-core-cpus-official-performance-preview.html