The big picture: Intel recently confirmed that its desktop Arc GPUs are going to break cover in Q2 2022, and also promised to deliver millions of them to gamers later this year. Early OpenCL benchmarks don't seem that promising, but there's still hope that Intel's discrete GPUs could win some gamers' hearts in what is otherwise a heated market, where prices and availability are the biggest factors in making a purchasing decision.

Intel’s Arc (Alchemist) GPUs are still shrouded in mystery, and the expectations around them have been tempered by the ongoing chip shortage and rumors of slow progress on driver development. The company says we’ll see the first models in laptops starting next month, but outside of a few odd leaks and the occasional small teaser from Raja Koduri, details about the upcoming GPUs have been scarce.

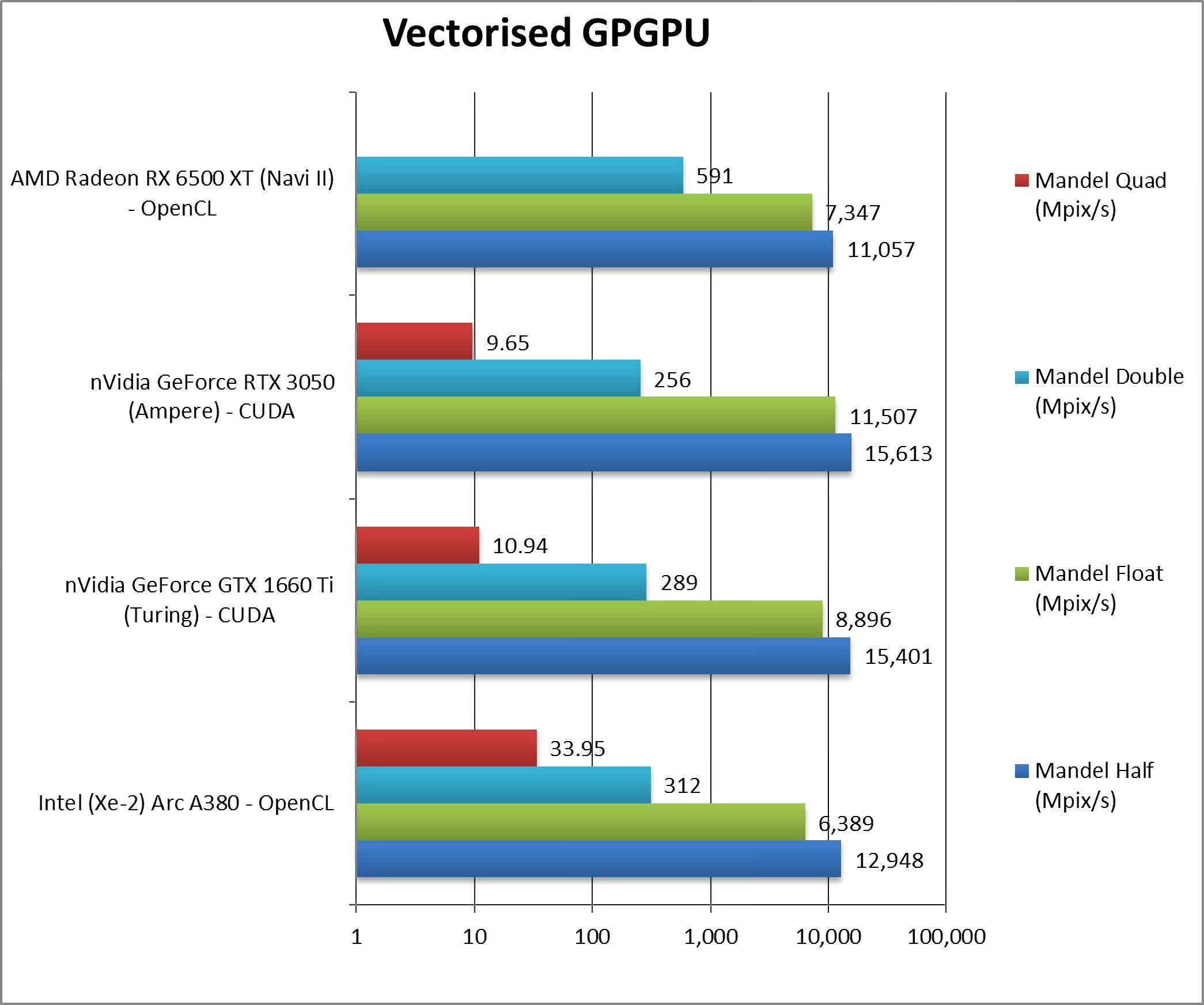

Thanks to a review done by SiSoftware on pre-release hardware, we now have a slightly better idea of what to expect, at least when it comes to the entry-level “A380” graphics card in the Alchemist lineup. Spec-wise, the A380 apparently has 128 compute units for a total of 1024 shaders, paired with six gigabytes of GDDR6 operating at 14 Gbps. Additionally, there are 16 Xe matrix units (XMX) that can be used for Intel’s XeSS upscaling solution.

It’s also worth noting that SiSoftware only used OpenCL benchmarks in its testing, so the results may not be indicative of gaming performance. Despite having a 96-bit memory bus, Intel’s A380 card was slightly slower than AMD’s Radeon RX 6500 XT, which has an even more modest 64-bit memory bus. It was also significantly slower than Nvidia’s entry-level RTX 3050 and GTX 1660 Ti graphics cards.
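For a rough sense of what the A380's memory configuration means on paper, theoretical peak bandwidth can be estimated as bus width times per-pin data rate, divided by eight bits per byte. The sketch below applies that to the reported 96-bit bus and 14 Gbps GDDR6; the function name is our own, and the result is a theoretical ceiling based on pre-release specs, not a measured figure.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: memory bus width in bits (e.g. 96 for the reported A380)
    data_rate_gbps: per-pin data rate in Gbps (e.g. 14 for the reported GDDR6)
    """
    return bus_width_bits * data_rate_gbps / 8  # 8 bits per byte


# Figures as reported by SiSoftware for the pre-release A380.
a380_bandwidth = peak_bandwidth_gb_s(96, 14.0)
print(f"A380 (reported specs): {a380_bandwidth:.0f} GB/s")

# 1024 shaders spread over 128 compute units, per the same listing.
shaders_per_unit = 1024 // 128
print(f"Shaders per compute unit: {shaders_per_unit}")
```

On those numbers the A380 would top out at 168 GB/s, which suggests the OpenCL results are unlikely to come down to bus width alone.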

These results don’t look good for Intel’s much-awaited discrete GPUs, save for the power draw, which is rated at 75 watts. That is significantly lower than the 107-watt TGP of the RX 6500 XT and the 120-watt TGP of the GTX 1660 Ti. It’s possible Intel will market the A380 for small form-factor PCs, where low heat and quiet operation might offset the modest performance.

That said, the “A500” series and “A700” series GPUs are expected to pack a bigger punch, and SiSoftware has yet to test these. The A700 series will have 512 compute units paired with 16 gigabytes of GDDR6 over a 256-bit bus, and leaked benchmarks so far suggest it could perform close to a GeForce RTX 3070 Ti. The mid-range A500 series is expected to be comparable to an RTX 3060 or even an RTX 3060 Ti.

Intel says its first Arc desktop graphics cards will land sometime in Q2 this year, so it won’t be long before we can see them in action. Behind the scenes, the company is also hiring away AMD veterans to further its ambition of becoming a proper third player in the discrete GPU space.

Masthead credit: Moore's Law is Dead

https://www.techspot.com/news/93514-intel-arc-a380-gpu-disappoints-early-benchmarks-but.html