Ok, it's possible but why doesn't this happen between 3600 and 3700X?

In the Zen and Zen+ architectures, each core has 4 blocks of 0.5 MB L3 cache, giving a total of 2 MB per core, and 8 MB per CCX. Any core, in any CCX, can access any L3 block. In Zen 2, the blocks are 1 MB each, so each CCX has 16 MB, giving a lot more room for data evicted from L2.

And this cache is no longer global to any core, in any CCX - so when data is hoofed out of a core's L2, it only goes into the blocks associated with that core/CCX. Transfers between CCXs are also handled by the I/O chip, rather than letting one CCX poke about with the other's cache.
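For anyone who wants to check the L3 sums quoted above, here's a quick sketch. The function name and parameters are mine, purely for illustration; the figures (4 cores per CCX, 4 blocks per core, 0.5 MB or 1 MB per block) are the ones from this post.

```python
def l3_per_ccx_mb(cores_per_ccx: int, blocks_per_core: int, block_mb: float) -> float:
    """L3 per CCX in MB, using the per-core block layout described in the post."""
    return cores_per_ccx * blocks_per_core * block_mb


# Zen / Zen+: 4 cores x 4 blocks x 0.5 MB
zen_l3 = l3_per_ccx_mb(cores_per_ccx=4, blocks_per_core=4, block_mb=0.5)

# Zen 2: same layout, but the blocks are 1 MB each
zen2_l3 = l3_per_ccx_mb(cores_per_ccx=4, blocks_per_core=4, block_mb=1.0)

print(f"Zen/Zen+ L3 per CCX: {zen_l3} MB")
print(f"Zen 2 L3 per CCX:    {zen2_l3} MB")
```

That gives 8 MB and 16 MB per CCX respectively, matching the totals above.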

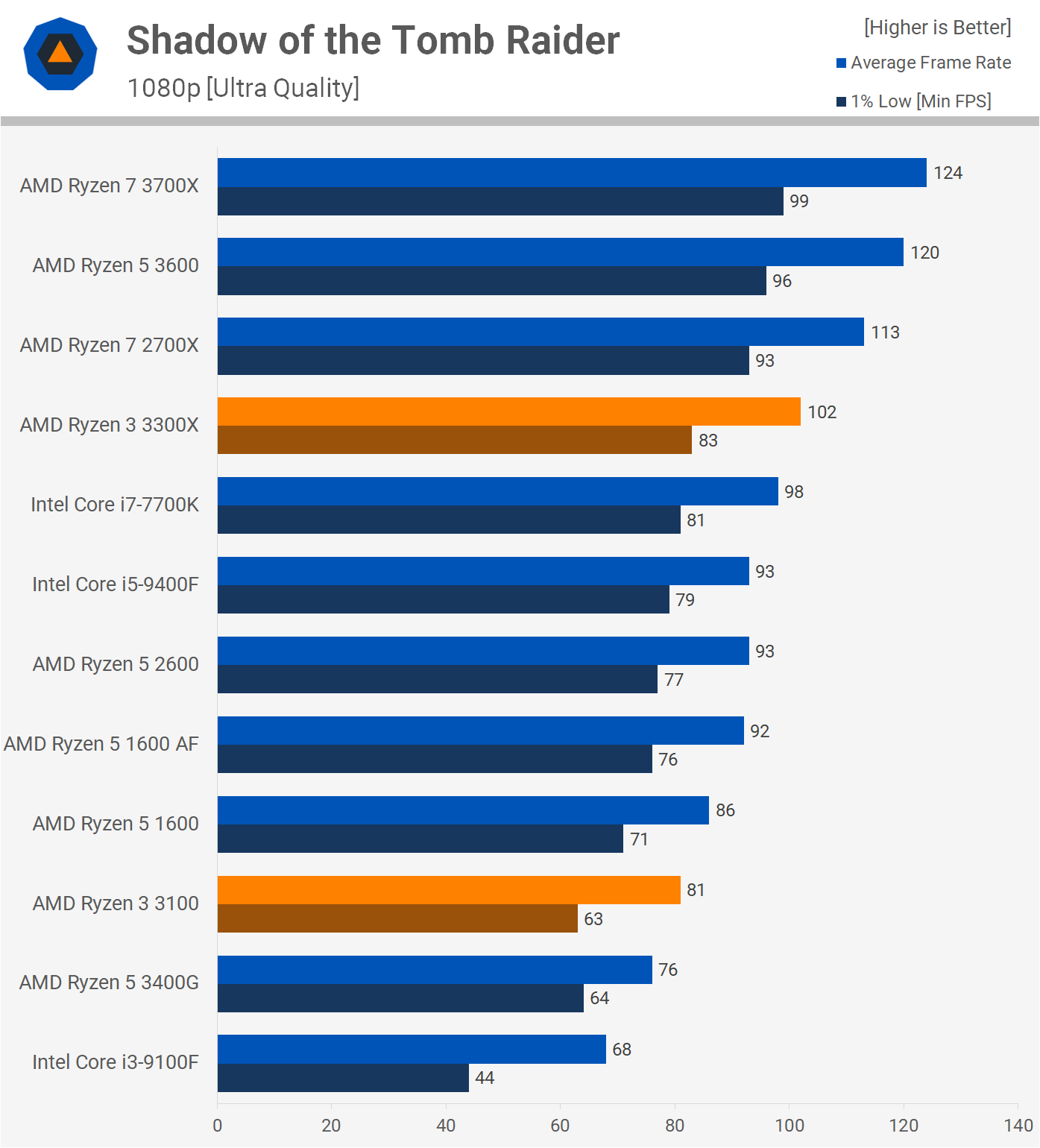

But this is just guessing. I wonder if one can possibly pick out the impact of the L3 cache in our 3300X/3100 review:

So the performance drops (1% Low/Avg) going from 1080p to 1440p for some of the Ryzen chips are:

Zen 2

3700X = 16.2% / 20.2%

3600 = 15.6% / 18.3%

3300X = 12.0% / 7.8%

3100 = 6.3% / 2.5%

Zen/Zen+

2700X = 17.2% / 24.8%

2600 = 2.6% / 1.1%

1600 AF = 2.6% / 2.2%

1600 = 5.6% / 3.5%

The issue possibly involves CCX-to-CCX accesses, as even though the 3300X takes a hit, it's nowhere near as bad as the 3700X and 3600. But the 3100, 2600 (and its carbon copy, the 1600 AF) and original 1600 all have much smaller performance drops going from 1080p to 1440p.

The CCX/L3 cache structures are:

3700X = 2 CCXs, 4 cores each, 16 MB L3 cache per CCX

3600 = 2 CCXs, 3 cores each, 16 MB L3 per CCX

3300X = 1 CCX, 4 cores, 16 MB L3 per CCX

3100 = 2 CCXs, 2 cores each, 8 MB L3 cache per CCX

2700X = 2 CCXs, 4 cores each, 8 MB L3 cache per CCX

2600 = 2 CCXs, 3 cores each, 8 MB L3 cache per CCX

1600 AF = 2 CCXs, 3 cores each, 8 MB L3 cache per CCX

1600 = 2 CCXs, 3 cores each, 8 MB L3 cache per CCX
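To make it easier to eyeball the two lists side by side, here's a rough Python sketch that puts the drops and the CCX/L3 layouts into one table and sorts by the average drop. All numbers are copied from the lists above; the tuple layout and the `rank_by_avg_drop` helper are just my own illustration, and the 2-CCX, 2-cores-each part from the 3300X/3100 review is entered as the 3100.

```python
# (chip, CCXs, cores per CCX, L3 MB per CCX, 1% low drop %, average drop %)
CHIPS = [
    ("3700X",   2, 4, 16, 16.2, 20.2),
    ("3600",    2, 3, 16, 15.6, 18.3),
    ("3300X",   1, 4, 16, 12.0,  7.8),
    ("3100",    2, 2,  8,  6.3,  2.5),
    ("2700X",   2, 4,  8, 17.2, 24.8),
    ("2600",    2, 3,  8,  2.6,  1.1),
    ("1600 AF", 2, 3,  8,  2.6,  2.2),
    ("1600",    2, 3,  8,  5.6,  3.5),
]


def rank_by_avg_drop(chips):
    """Return the rows sorted from largest to smallest average drop."""
    return sorted(chips, key=lambda row: row[5], reverse=True)


ranked = rank_by_avg_drop(CHIPS)
for name, ccxs, cores, l3_mb, low_drop, avg_drop in ranked:
    print(
        f"{name:8} {ccxs} CCX x {cores} cores, {ccxs * l3_mb:2d} MB L3 total: "
        f"{low_drop:5.1f}% / {avg_drop:5.1f}%"
    )
```

Sorted like this, the 2700X, 3700X and 3600 sit at the top, the 3300X lands in the middle, and the remaining 2-CCX chips with 8 MB per CCX are all bunched at the bottom.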

It's perhaps just the oddities of the respective architectures. In the original Zen, it would seem that the L3 cache doesn't like having 8 cores all trying to access each other's cache; in Zen 2, the I/O chip should remove this issue, which would explain why the 3600 and 3700X drops are similar. The 3300X only has one CCX, so it doesn't have CCX-to-CCX concerns. But what about the 3100? It's possible that its low performance drops are actually a sign that the chip's layout is just a lot less efficient than the 3300X's and it's already struggling.

It's all very peculiar!