TL;DR: The first benchmark of the Intel Xe DG1 graphics card has been added to the Basemark GPU database. It's still too soon to draw conclusions, but based on this result alone, the OEM-oriented Intel graphics card seems slightly inferior to the Polaris-based Radeon RX 550 that we tested back in 2017.

The Basemark entry spotted by Apisak suggests that the card is the Asus DG1-4G we covered previously. To put the result into perspective, Apisak compared it to a Radeon RX 550, a sub-$100 entry-level GPU from four years ago.

Indeed, back in 2017 we published an Esports GPU battle between two GPUs that were supposedly selling under $100: AMD's Radeon RX 550 and Nvidia's GeForce GT 1030.

Based on Intel's 10nm SuperFin node, the Asus DG1-4G features a cut-down Xe Max GPU clocked at 1,500 MHz with 80 execution units (EUs). It carries 4 GB of LPDDR4X-4266 memory on a 128-bit bus, and the whole card consumes about 30 W.

As for the Radeon RX 550, the 14nm GPU comes with 10 compute units (CUs) clocked at 1,183 MHz and 4 GB of GDDR5 at an effective 7,000 MHz on a 128-bit bus. Rated at a 50 W TBP, this Radeon GPU offers a memory bandwidth of up to 112 GB/s.
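The bandwidth figure follows from the memory's effective data rate and the bus width. Here is a minimal sketch of that arithmetic, assuming the usual peak-bandwidth formula (transfers per second times bytes per transfer) and treating LPDDR4X-4266 as 4,266 MT/s; the function name is ours, and the DG1 number is a theoretical peak derived from the specs above, not a figure reported by Intel or Asus.

```python
def peak_bandwidth_gb_s(data_rate_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    data_rate_mt_s: effective data rate in megatransfers per second.
    bus_width_bits: width of the memory bus in bits.
    """
    bytes_per_transfer = bus_width_bits / 8
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s.
    return data_rate_mt_s * bytes_per_transfer / 1000


# Radeon RX 550: GDDR5 at an effective 7,000 MT/s on a 128-bit bus.
rx550_bw = peak_bandwidth_gb_s(7000, 128)

# Asus DG1-4G: LPDDR4X-4266 on a 128-bit bus (assumed 4,266 MT/s).
dg1_bw = peak_bandwidth_gb_s(4266, 128)

print(f"RX 550: {rx550_bw:.1f} GB/s")
print(f"DG1:    {dg1_bw:.1f} GB/s")
```

On paper, then, the RX 550 has noticeably more memory bandwidth to work with than the DG1, even though both cards use a 128-bit bus.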

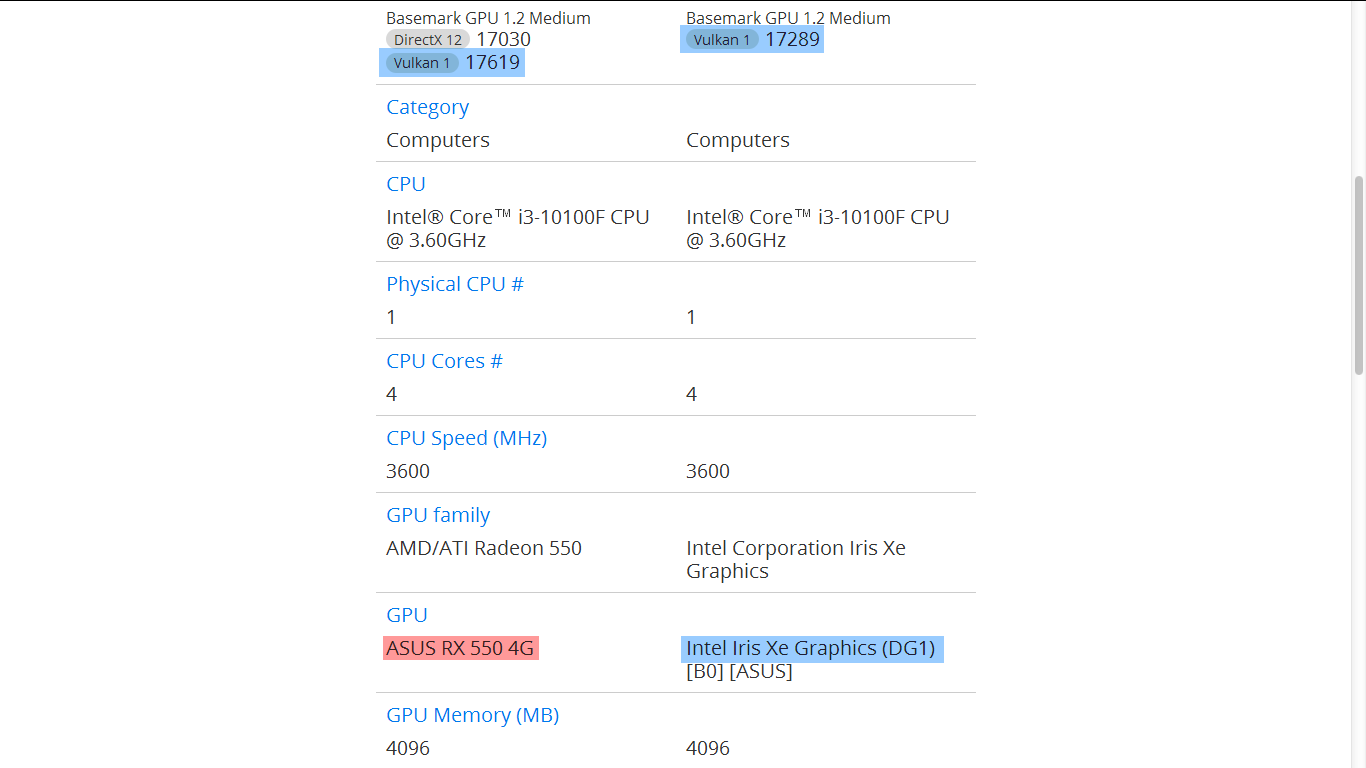

Both systems use an Intel Core i3-10100F. For a fair comparison, only the Vulkan API scores are considered. The DG1 graphics card scored 17,289 points against the Radeon RX 550's 17,619, a small difference of about 2%.
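For reference, the gap can be worked out directly from the two scores. This is a small sketch that assumes the difference is measured relative to the Radeon RX 550's score; the article does not say which score serves as the baseline, and the helper name is ours.

```python
def percent_gap(score: float, reference: float) -> float:
    """How far `score` falls below `reference`, as a percentage of `reference`."""
    return (reference - score) / reference * 100


dg1_score = 17289    # Asus DG1-4G, Vulkan
rx550_score = 17619  # Radeon RX 550, Vulkan

gap = percent_gap(dg1_score, rx550_score)
print(f"DG1 trails the RX 550 by {gap:.1f}%")  # about 1.9%
```

Using the DG1's score as the baseline instead gives a nearly identical figure, so either way the result rounds to 2%.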

Such a marginal difference in a single benchmark isn't enough to determine which card is faster, but it gives us an idea of what the DG1 will be capable of. As Intel launches more GPUs, we'll certainly see higher-performance cards than this one. We still don't know when that will happen, but it should be sometime this year.

https://www.techspot.com/news/89162-intel-iris-xe-dg1-gpu-first-benchmark-suggests.html