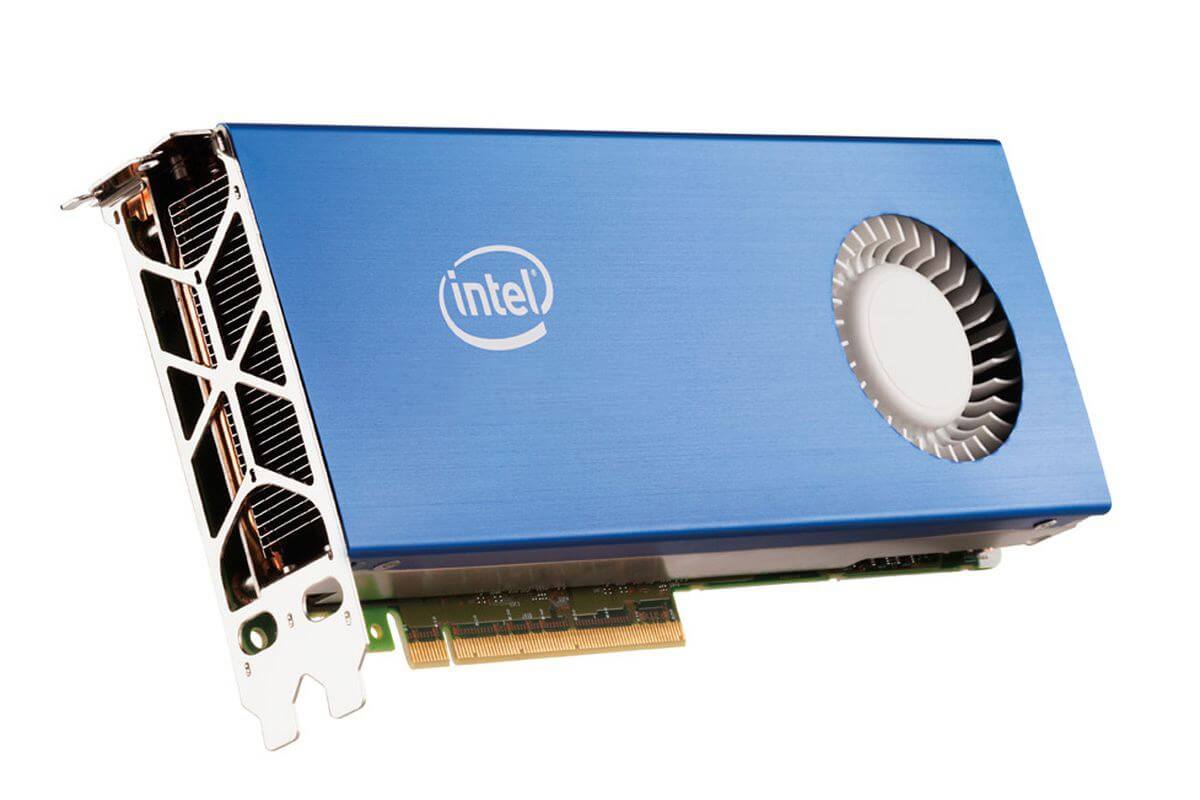

We know that Intel is working on a new discrete GPU, but what we don’t know is when it will arrive. According to Anthony Garreffa at TweakTown, however, the company’s big unveiling could take place at CES 2019.

Garreffa’s industry sources say Intel has reached the end of one of the “big steps” related to the manufacturing of the GPU and is now preparing for launch. If it is unveiled at next year’s Consumer Electronics Show, then the card could release a few months later. This launch date would be a lot sooner than expected, so it’s best to take the report with a pinch of salt.

Back in November, Raja Koduri, the head of the Radeon Technologies Group at AMD, left the company to become Intel's GPU chief architect, where he heads its newly formed Core and Visual Computing Group. A couple of weeks ago, AMD's former director of global product marketing, Chris Hook, also joined Intel as its "discrete graphics guy," a role in which he will lead the company's GPU marketing push.

Intel has said it will focus on "high-end discrete graphics solutions for a broad range of computing segments" for the PC market, which sounds like it could include gaming.

Details of Intel's first prototype discrete GPU were revealed last February, though that chip was a proof of concept rather than a future product.

Intel's GPU is reportedly codenamed Arctic Sound. According to Ashraf Eassa of The Motley Fool, it was originally targeted at video streaming apps in data centers, but a gaming variant will arrive at some point.

> Bonus: Apparently @Rajaontheedge is redefining Arctic Sound (first Intel dGPU), was originally targeted for video streaming apps in data center, but now being split into two: the video streaming stuff and gaming. Apparently wants to "enter the market with a bang."
>
> — Ashraf Eassa (@TMFChipFool) 6 April 2018

We still have no idea how powerful Intel's GPUs might be, but the prospect of the company going up against Nvidia and AMD in the gaming sector is an interesting one. While a reveal next January is possible, expect to learn more about the cards before then.

https://www.techspot.com/news/74532-intel-rumored-unveil-discreet-gpu-ces-2019.html