Dubbed the "memory of the future," a new type of integrated circuit combining resistors with memory capabilities was recently shown off by HP and touted as a possible alternative to transistors in both computation and storage devices. Today, these functions are handled by separate chips, so data must be transferred between them, which slows computation and wastes energy. Could this new technology mean the unification of memory and CPU?

In simplest terms, transistors are the on/off switches that allow CPUs to compute and memory to store data. CPUs are often made with tens or even hundreds of millions of transistors. As the performance and capacity of devices increase, so does the number of transistors. Memristors have the potential to fundamentally change circuitry by performing the same duties as the transistor, but more efficiently and in far less space.

Memristors also offer some unique properties, such as three-dimensional stacking, faster switching, retention of on/off states without power and a proven durability exceeding hundreds of thousands of reads/writes.

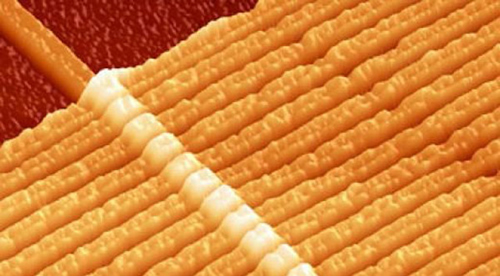

Memristors were little more than theory in 1971 and did not become reality until 2008, when an HP Labs research team led by Professor Stan Williams developed them. The idea had been floating around for some time, but the processes and materials needed to physically engineer memristors had remained elusive. A detailed article on the subject can be found here, explaining some of the principles behind how memristors work. If the technology proves viable, it will no doubt become a hugely important breakthrough for our increasingly digital lives -- perhaps in as little as three years.

https://www.techspot.com/news/38536-memristors-could-make-cpus-and-ram-obsolete.html