Something to look forward to: The GPU market has been a bit stagnant lately. Nvidia hasn't released a new series of video cards for some time, and AMD isn't performing much better. However, AMD's new graphics chief, David Wang, is shaking things up. At Computex, Wang said AMD would shift its focus to an annual GPU release cycle to make the business "more fun" for consumers.

Although Nvidia has a commanding lead in the video card market, it's no secret that enthusiasts and hardcore PC gamers have a few issues with the way the company does business.

While the recent controversy surrounding the GeForce Partner Program is one example of this, Nvidia's unpredictable GPU release schedules are also the source of some frustration among gamers.

The last GPU generation the company released was its GTX 10-series, which launched in 2016. Gamers who have been eagerly awaiting Nvidia's next GPU line-up recently got some unfortunate news when CEO Jensen Huang said it would be a "long time" before the company's GTX 11- or 20-series cards hit the market.

For the time being, it seems the company is more interested in developing hardware that's geared towards AI and machine learning.

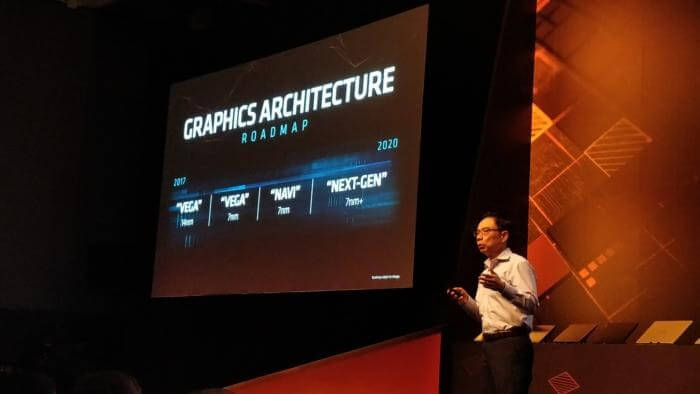

As unfortunate as that may be, PC gaming enthusiasts may have found their savior in AMD's new graphics chief, David Wang. At his first public appearance during Computex this week, Wang claimed AMD is fully committed to releasing a new GPU product every single year.

Wang admits that his company has lost a bit of momentum in the GPU market lately, adding that he wants to "make this business more fun" by returning to a more consumer-oriented GPU release cadence.

According to PCWorld, this annual release cycle could include entirely new GPU architectures, process changes, or "incremental architecture changes." Even if the changes aren't always significant, hardware enthusiasts will likely be pleased to see AMD make an effort to release more products, more regularly.

It's not clear when AMD plans to release its next GPU, but hopefully we'll see it sooner rather than later.

Image credit: PCWorld

https://www.techspot.com/news/74985-new-amd-graphics-chief-david-wang-company-release.html