Bottom line: The Titan RTX brings new possibilities for AI researchers, content creators, and data scientists. Turing architecture will help speed up development and reduce compute times for those solving difficult problems.

After teasing the Titan RTX on social media through well-known influencers, Nvidia has officially pulled the sheets off its new most powerful desktop GPU. Built on the Turing architecture and nicknamed the T-Rex, the card delivers, according to Nvidia, more than 130 teraflops of compute performance for deep learning applications and 11 GigaRays per second of ray-tracing performance.

To churn out all those calculations, 576 Turing Tensor Cores can be found on the die. An additional 72 Turing RT Cores are responsible for handling all ray-tracing needs. A cool 24GB of GDDR6 memory provides bandwidth of up to 672GB/s. Real-time 8K video editing is possible thanks to all of the available memory and bandwidth.
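As a rough sanity check on the 672GB/s figure, peak memory bandwidth can be estimated from bus width and per-pin data rate. The sketch below assumes a 384-bit memory bus and 14Gbps GDDR6, neither of which is stated above; the function name is purely illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) times per-pin rate (Gbit/s), divided by 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


# Assumption (not from the article): 384-bit bus, 14 Gbps GDDR6.
titan_rtx_bandwidth = memory_bandwidth_gbs(384, 14.0)
print(titan_rtx_bandwidth)  # 672.0
```

Under those assumptions the result lines up with the quoted bandwidth.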

It turns out that the Titan RTX is very similar to the Quadro RTX 6000 in terms of available features. The Titan RTX and Quadro series run FP16 tensor operations with FP32 accumulation at full rate, unlike the GeForce series, which is limited to half the performance in that mode. The GeForce RTX 2080 Ti is also a powerful card, but this key difference will set the Titan RTX apart.
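To see why FP32 accumulation matters for FP16 math, here is a minimal sketch that simulates both accumulator widths in plain Python. It is not how Tensor Cores are implemented; the helper names are hypothetical, and the rounding is emulated with the standard `struct` module.

```python
import struct


def round_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def round_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def dot(a, b, round_acc):
    """Dot product of FP16-rounded inputs; the running sum is rounded by round_acc."""
    acc = 0.0
    for x, y in zip(a, b):
        product = round_fp16(x) * round_fp16(y)  # inputs are FP16, product kept in double
        acc = round_acc(acc + product)           # accumulator precision is the variable here
    return acc


ones = [1.0] * 4096
fp16_result = dot(ones, ones, round_fp16)  # FP16 accumulator
fp32_result = dot(ones, ones, round_fp32)  # FP32 accumulator
print(fp16_result, fp32_result)  # 2048.0 4096.0
```

The FP16 accumulator stalls at 2048 because the next representable half-precision value is 2050, so adding 1 rounds back down. The FP32 accumulator reaches the correct total, which is why training workloads tend to favor this mode.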

For those who need more performance than a single GPU can provide, a pair of Titan RTX GPUs can be linked at 100GB/s via NVLink. Gamers need not worry about buying two of these cards, though; AI researchers are the intended audience for multi-GPU setups. Doubling the amount of memory from previous-generation offerings will allow significantly larger data sets to be handled efficiently.

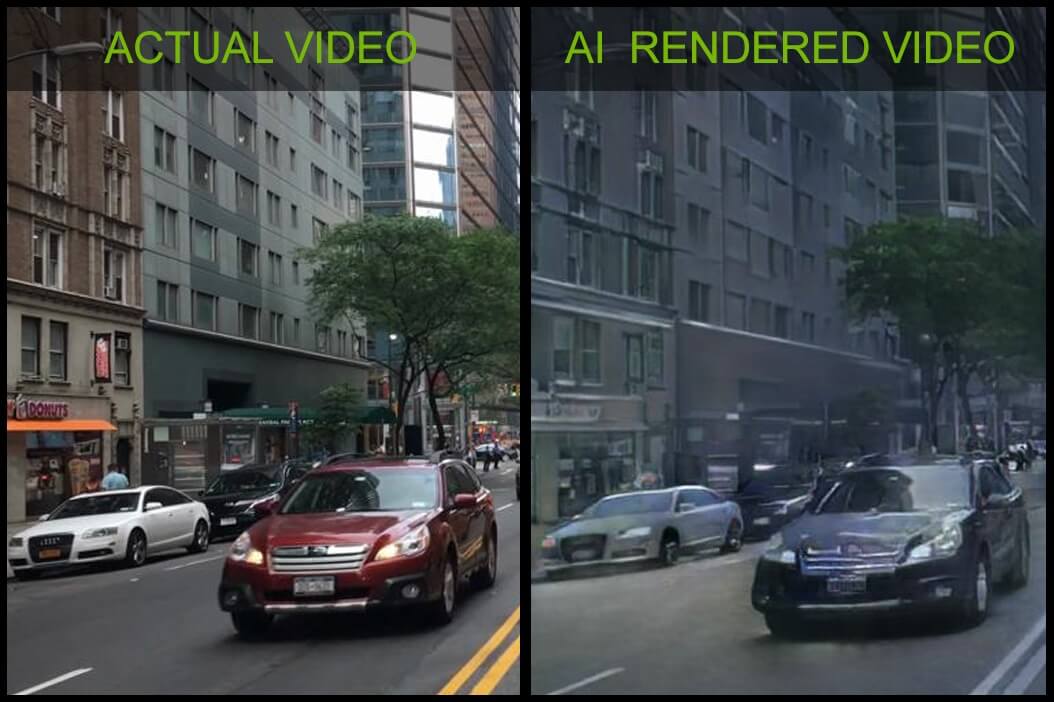

Nvidia estimates that there are at least 5 million content creators using PCs who could greatly benefit from real-time 8K video editing. For those who are not yet ready to make the jump beyond 4K, ray-tracing can still produce some impressive animations and special effects that would otherwise take too long to render with CPU computation.

Here is the part where your wallet will begin to cry. The Titan RTX will be available in the US and Europe later this month for the bargain price of $2,499. Considering that researchers and professional content creators are the main targets, though, the price is not likely to be a problem.

https://www.techspot.com/news/77677-nvidia-debuts-titan-rtx-world-most-powerful-desktop.html